As generative AI develops, it is leaving its mark on the field of entertainment. Be it shows, movies, games, or other formats, AI is changing how these modes of entertainment are made.

The Runway AI Film Festival is a prominent example of this AI-powered era of media. It can be seen as a step towards recognizing the power of artificial intelligence in the world of filmmaking. AI is becoming an established part of the media industry, and stakeholders can use this tool to bring innovation into their art.

In this blog, we will explore the rising impact of AI films, particularly in light of the recent Runway AI Film Festival of 2024 and its role in promoting AI films. We will also go through the winners of this year’s festival and how AI helped make them exceptional.

Before we delve into the world of Runway AI Film Festival, let’s understand the basics of AI films.

What are AI Films? What is their Impact?

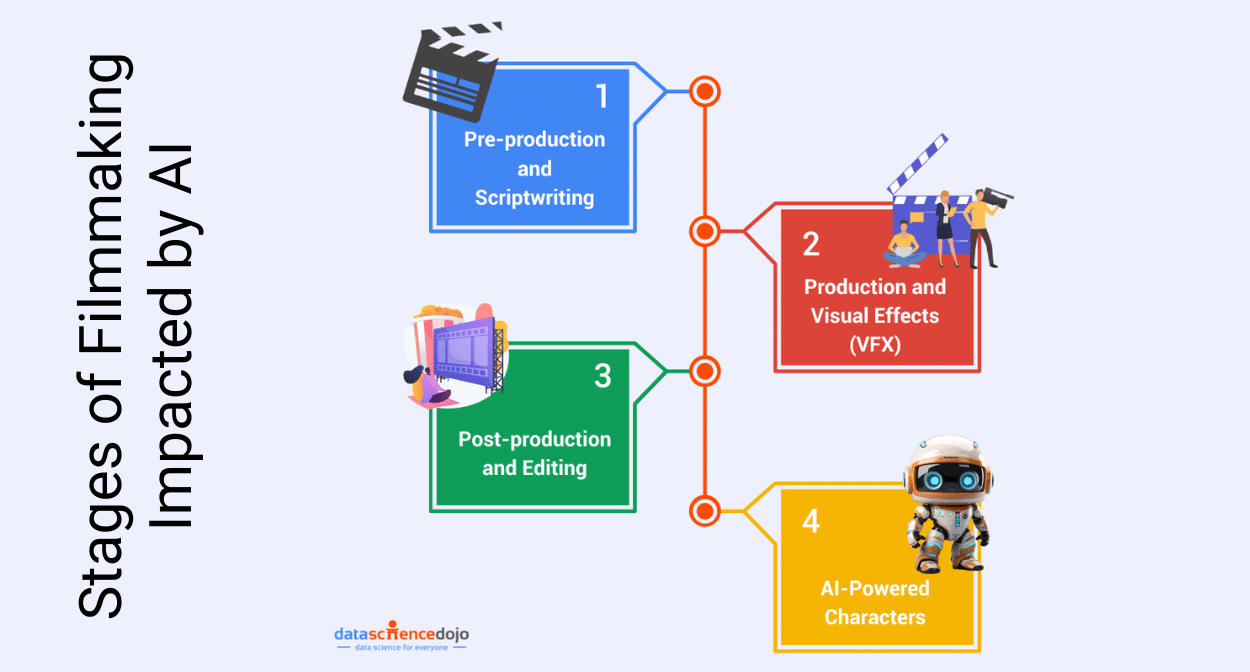

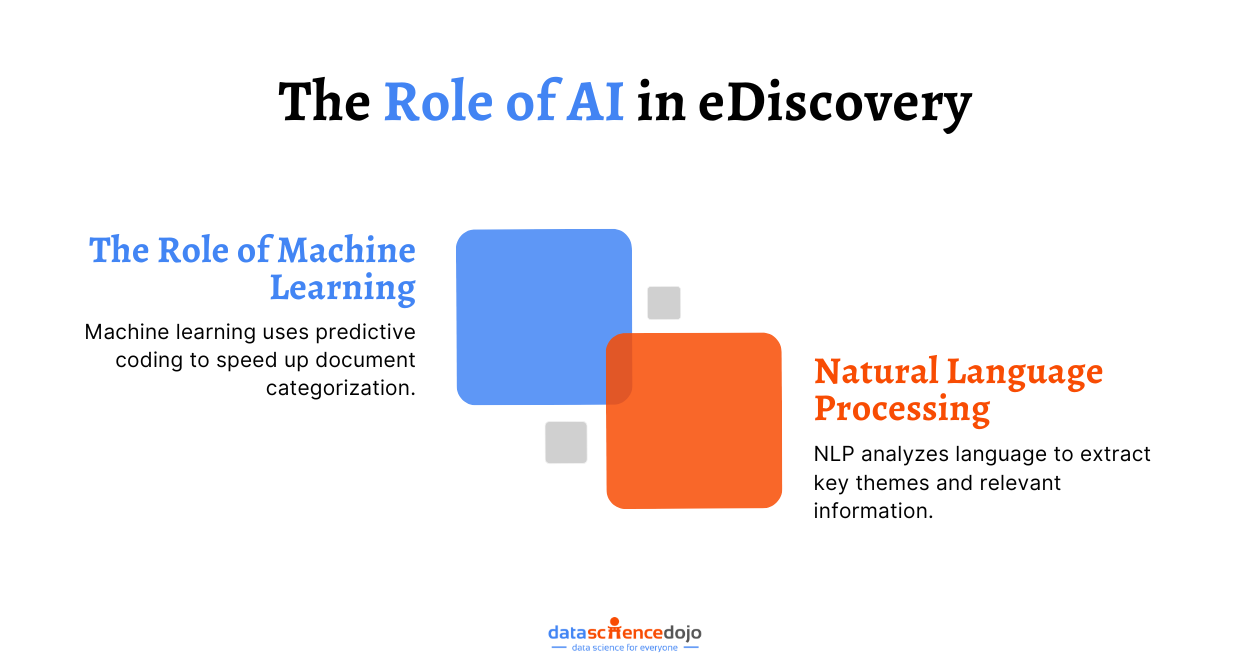

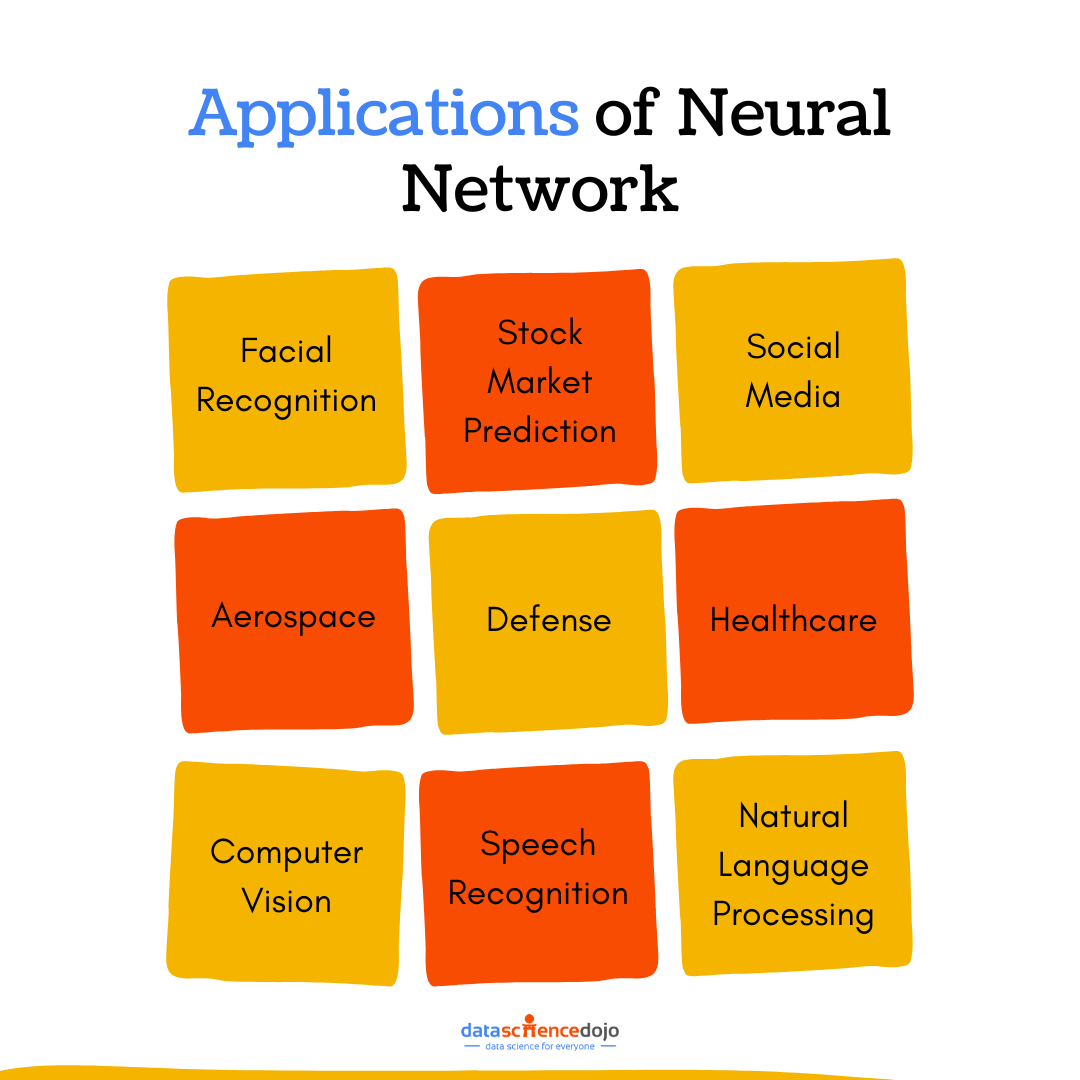

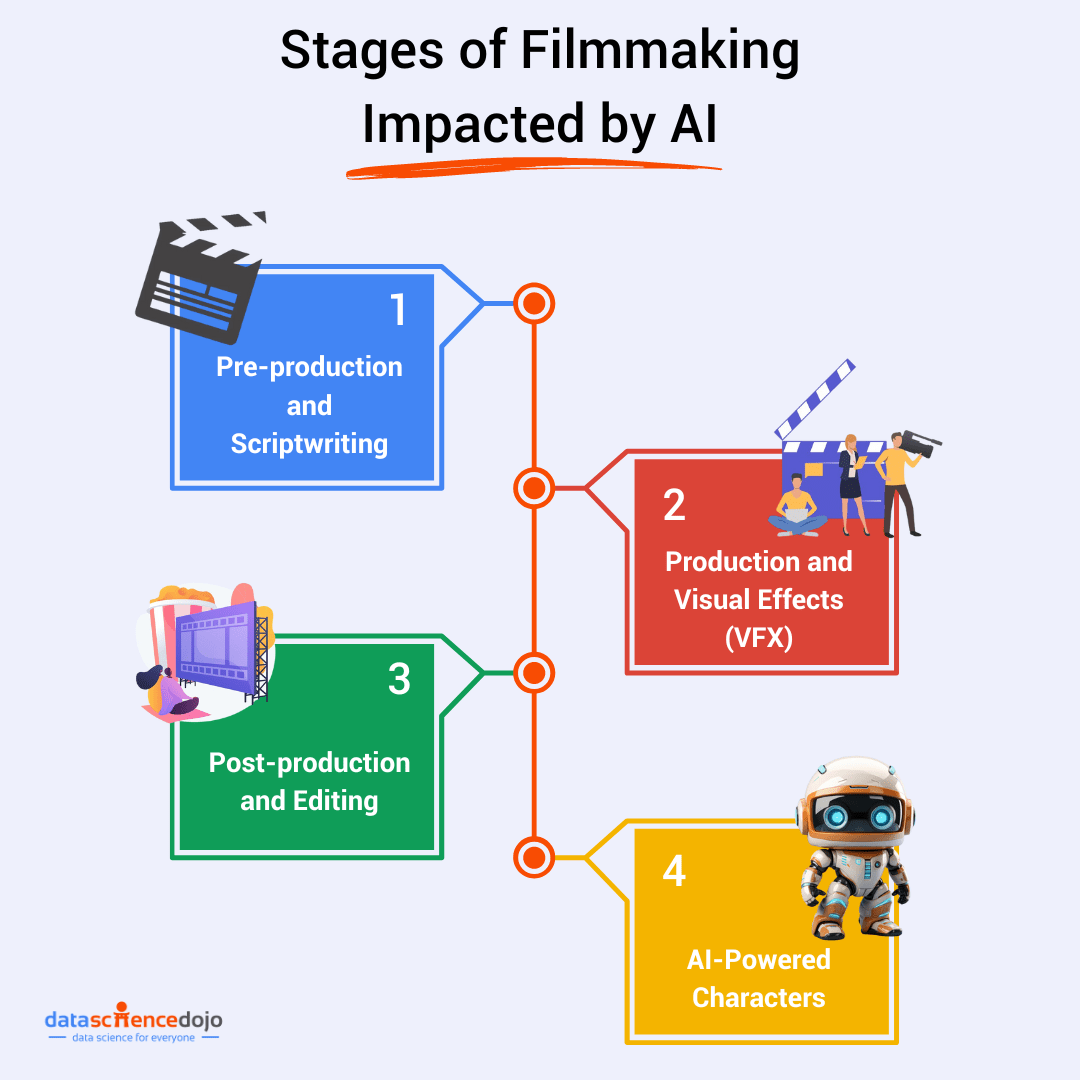

AI films refer to movies that use the power of artificial intelligence in their creation process. The role of AI in films is growing with the latest advancements, assisting filmmakers in several stages of production. Its impact can be broken down into the following sections of the filmmaking process.

Pre-production and Scriptwriting

At this stage, AI is becoming a valuable asset for screenwriters. AI-powered tools can analyze scripts, uncover story elements, and suggest improvements that resonate better with audiences. The result is storylines that are more relevant and more likely to perform well.

Moreover, AI can even be used to generate complete drafts from initial ideas, helping screenwriters brainstorm more effectively. It can also produce basic ideas that writers then refine further. In this way, AI and human writers can work together to create strong narratives and well-developed characters.

Production and Visual Effects (VFX)

Film production has changed considerably with the introduction of AI tools. The most prominent impact is seen in the realm of visual effects (VFX), where AI is used to create realistic environments and characters. It enables filmmakers to breathe life into their imaginary worlds.

As a result, filmmakers can create outstanding creatures and extraordinary worlds. AI is also transforming animation by automating processes to save time and resources. Even de-aging actors is now possible with AI, allowing filmmakers to showcase a character’s younger self.

Post-production and Editing

AI’s impact extends beyond pre-production and production into the post-production phase. It plays a useful role in editing by tackling repetitive tasks like finding key scenes or suggesting cuts for better pacing, which gives editors more time for creative decisions.

AI is even used to generate music based on film elements, giving composers creative ideas to work with. Hence, they can partner up with AI-powered tools to create unique soundtracks that form a desired emotional connection with the audience.

AI-Powered Characters

With the rising impact of AI, some filmmakers even use it to generate virtual characters through CGI. Others, who have not yet taken such a step, use AI to enhance live-action performances. In both cases, AI shapes the characters themselves, helping them convey complex emotions more effectively.

Thus, it would not be wrong to say that AI is revolutionizing filmmaking, making it both faster and more creative. It automates tasks and streamlines workflows, leaving more room for creative thinking and strategy development. Plus, the use of AI tools is revamping filmmaking techniques and producing outstanding visuals and storylines.

With the advent of AI in the media industry, filmmaking is bound to keep growing and changing. AI opens up avenues for creativity and innovation across the field.

Why Should We Watch AI Films?

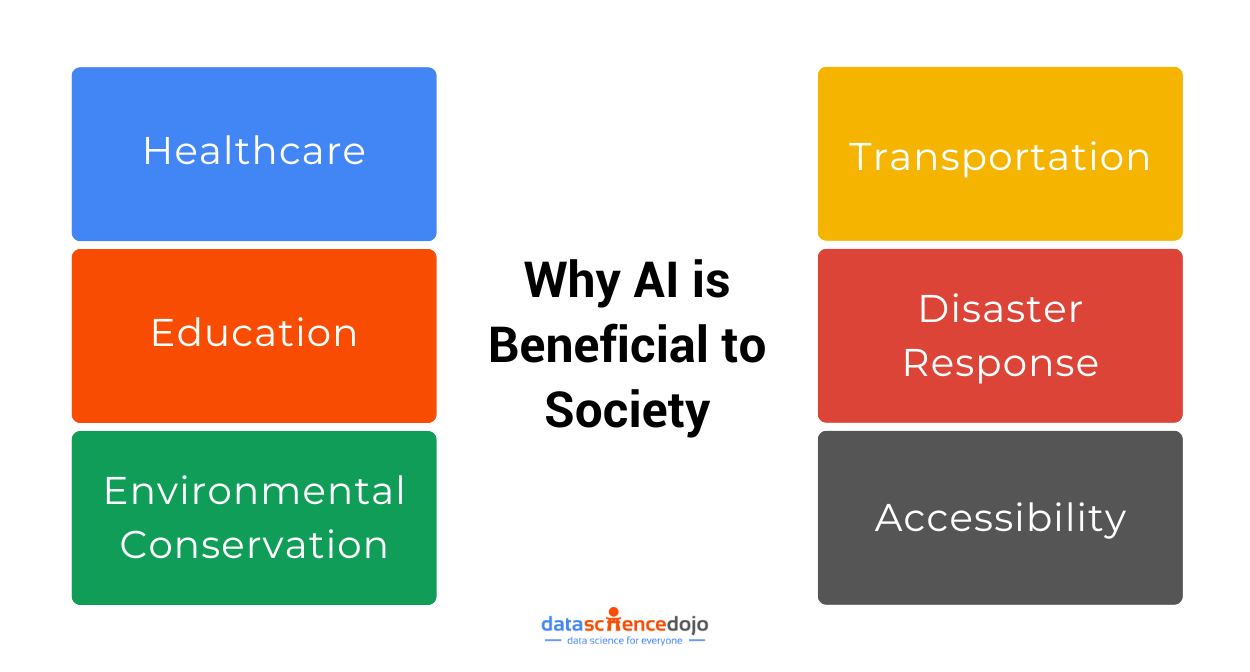

In this continuously changing world, the power of AI is undeniable. While we welcome these tools in other aspects of our lives, we can also enjoy their impact in the world of entertainment. These movies push the boundaries of visual effects, crafting hyper-realistic environments and creatures that wouldn’t be possible otherwise and giving life to human imagination.

It can be said that AI opens a portal into the human mind that can be depicted in creative ways through AI films. This gives you a chance to navigate alien landscapes and encounter unbelievable characters simply through a screen.

However, AI movies are not just about the awe-inspiring visuals and cinematic effects. Many AI films delve into thought-provoking themes about artificial intelligence, prompting you to question the nature of consciousness and humanity’s place in a technology-driven world.

Such films initiate conversations about the future and the impact of AI on our lives. Thus, AI films come as a complete package. From breathtaking visuals and impressive storylines to philosophical ponderings, they bring it all to the table for your enjoyment. Take a dive into AI films; you might just be a movie away from your new favorite genre.

To kickstart your exploration of AI films, let’s look through the recent film festival about AI-powered movies.

What is the Runway AI Film Festival?

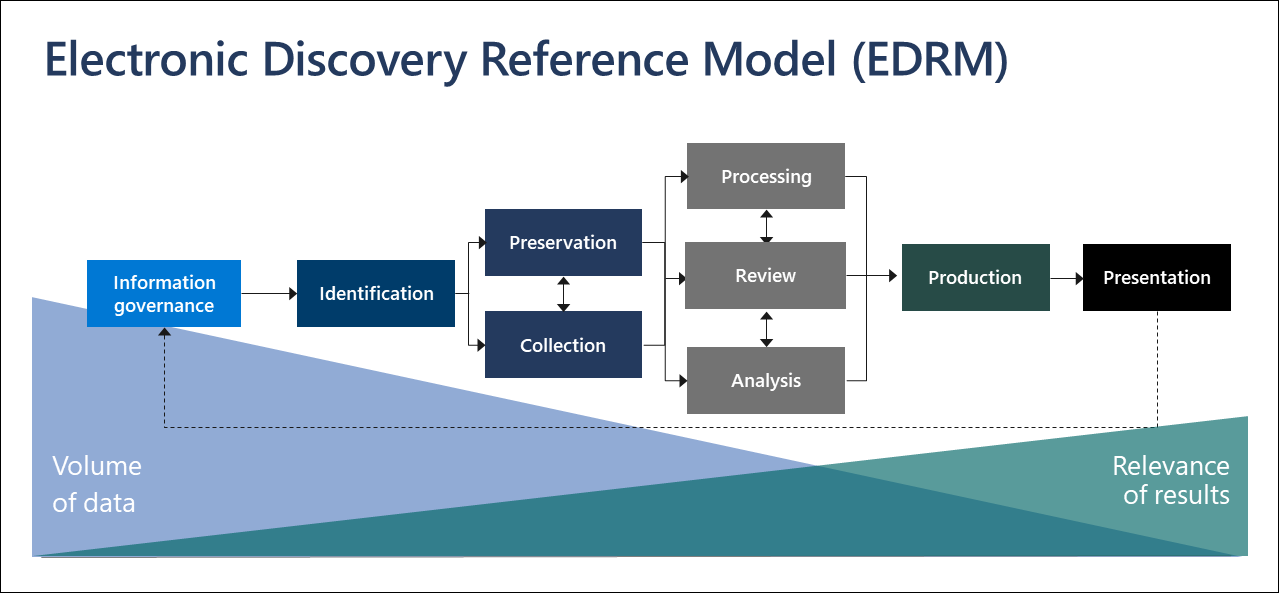

It is an initiative by Runway, a company that develops AI tools and brings AI research to life in its products. Founded in 2018, the company has pursued creativity through its AI and ML research, both in-house and through global collaboration.

In an attempt to recognize and celebrate the power of AI tools, they have introduced a global event known as the Runway AI Film Festival. It aims to showcase the potential of AI in filmmaking. Since the democratization of AI tools for creative personnel is Runway’s goal, the festival is a step towards achieving it.

The first edition of the AI film festival was held in 2023. It became the starting point for celebrating the collaboration of AI and artists to create art in the form of films. The festival became a platform to recognize and promote the power of AI films in the modern-day entertainment industry.

Details of the AI Film Festival (AIFF)

The festival format allows participants to submit their short films during a specified submission period. Some key requirements that you must fulfill include:

- Your film must be 1 to 10 minutes long

- An AI-powered tool must be used in the creation process of your film, including but not limited to generative AI

- You must submit your film via a Runway AI company link

While this provides a glimpse of the basic criteria for submissions to the Runway AI Film Festival, Runway has provided detailed submission guidelines as well. You must adhere to these guidelines when submitting your film to the festival.

These submissions are then judged by a panel of jurors who score each submission. The scoring criteria for every film are defined as follows:

- The quality of your film composition

- The quality and cohesion of your artistic message and film narrative

- The originality of your idea and subsequently the film

- Your creativity in incorporating AI techniques

Each juror scores a submission from 1 to 10 on each of the four criteria, so each submission gets a total score out of 40. Based on this scoring, the top 10 finalists are announced. They receive cash prizes and Runway credits, and they also get to screen their films at the gala screenings in New York and Los Angeles.
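
The scoring arithmetic described here can be sketched in a few lines of Python. This is only an illustration: the criterion keys and function names are made up for the example, and averaging across jurors is an assumption, since the festival description does not say how individual jurors' totals are combined.

```python
# Hypothetical keys paraphrasing the four judging criteria listed above.
CRITERIA = ("composition", "narrative", "originality", "ai_creativity")


def juror_total(scores: dict[str, int]) -> int:
    """Sum one juror's 1-10 scores over the four criteria (maximum 40)."""
    if set(scores) != set(CRITERIA):
        raise ValueError("expected exactly one score per criterion")
    for name, value in scores.items():
        if not 1 <= value <= 10:
            raise ValueError(f"{name} must be between 1 and 10, got {value}")
    return sum(scores.values())


def panel_score(all_juror_scores: list[dict[str, int]]) -> float:
    """Average the per-juror totals (assumed aggregation, not confirmed)."""
    totals = [juror_total(scores) for scores in all_juror_scores]
    return sum(totals) / len(totals)


# Example with two hypothetical jurors scoring the same film.
juror_a = {"composition": 8, "narrative": 7, "originality": 9, "ai_creativity": 8}
juror_b = {"composition": 9, "narrative": 9, "originality": 9, "ai_creativity": 9}
print(juror_total(juror_a))             # total out of 40 for one juror
print(panel_score([juror_a, juror_b]))  # average total across the panel
```

Whatever aggregation the festival actually uses, the per-juror ceiling stays the same: four criteria at a maximum of 10 points each gives 40.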

Runway AI Film Festival 2024

The Film Festival of 2024 is only the second edition of this series, and it has already gained popularity with the entertainment industry and its fans. While following the same format, this series of festivals is becoming a testament to the impact of AI in filmmaking and its creative potential.

So far, we have covered the details of AI films and the Runway AI Film Festival, so let's now look at the winners of the 2024 edition.

Winners of the 2024 Festival

1. Get Me Out / 囚われて by Daniel Antebi

Runtime: 6 minutes 34 seconds

Revolving around Aka and his past, it navigates through his experiences while he tries to get out of a bizarre house in the suburbs of America. Here, escape is an illusion, and the house itself becomes a twisted mirror, forcing Aka to confront the chilling reflections of his past.

Intrigued enough? You can watch it right here.

2. Pounamu by Samuel Schrag

Runtime: 4 minutes 48 seconds

It is the story of a kiwi bird chasing his dream through the wilderness. As he pursues that dream deeper into the heart of the wild, the wild might hold him back, but his spirit keeps him soaring.

3. e^(i*π) + 1 = 0 by Junie Lau

Runtime: 5 minutes 7 seconds

A retired mathematician creates digital comics, igniting an infinite universe where his virtual children seek to decode the ‘truth.’ Armed with logic and reason, they journey across time and space, seeking to solve the profound equations that hold the key to existence itself.

4. Where Do Grandmas Go When They Get Lost? by Léo Cannone

Runtime: 2 minutes 27 seconds

Told through a child’s perspective, the film explores the universal question of loss and grief after the passing of a beloved grandmother. The narrative is a delicate blend of whimsical imagery and emotional depth.

5. L’éveil à la création / The dawn of creation by Carlo De Togni & Elena Sparacino

Runtime: 7 minutes 32 seconds

Gauguin’s journey to Tahiti becomes a mystical odyssey. On this voyage of self-discovery, he has a profound encounter with an enigmatic, ancient deity. This introspective meeting forever alters his artistic perspective.

6. Animitas by Emeric Leprince

Runtime: 4 minutes

A tragic car accident leaves a young Argentine man trapped in limbo.

7. A Tree Once Grew Here by John Semerad & Dara Semerad

Runtime: 7 minutes

Through a mesmerizing blend of animation, imagery, and captivating visuals, it delivers a powerful message that transcends language. It’s a wake-up call, urging us to rebalance our relationship with nature before it’s too late.

8. Dear Mom by Johans Saldana Guadalupe & Katie Luo

Runtime: 3 minutes 4 seconds

It is a poignant cinematic letter written by a daughter to her mother as she explores the idea of meeting her mother at their shared age of 20. It’s a testament to unconditional love and gratitude.

9. LAPSE by YZA Voku

Runtime: 1 minute 47 seconds

Time keeps turning, yet you never quite find your station on the dial. You drift between experiences, a stranger in each, the melody of your life forever searching for a place to belong.

10. Separation by Rufus Dye-Montefiore, Luke Dye-Montefiore & Alice Boyd

Runtime: 4 minutes 52 seconds

It is a thought-provoking film that utilizes a mind-bending trip through geologic time. As the narrative unfolds, the film ponders a profound truth: both living beings and the world itself must continually adapt to survive in a constantly evolving environment.

How will AI Film Festivals Impact the Future of AI Films?

Events like the Runway AI Film Festival are shaping the future of AI cinema. These festivals highlight innovation in film, generating buzz and attracting new audiences and creators, which in turn grows the community of AI filmmakers.

Festivals like AIFF offer a platform that fosters collaboration and knowledge sharing, boosting advancements in AI filmmaking techniques. Moreover, they will help define the genre of AI films with a bolder use of AI in storytelling and visuals. AI film festivals are likely to play a crucial role in advancing the use of AI in filmmaking.