Data Science Bootcamp

- An Introduction to Data Science and Data Engineering

- Mastering Data Exploration and Visualization

- Storytelling with Data and Predictive Modeling Techniques

- Advanced Decision Tree Learning and Model Evaluation

- Boosting, Ensemble Methods, and Hyperparameter Tuning

- Deploying Models as a Service and A/B Testing for Online Experimentation

- Unsupervised Learning, Text Analytics, and Recommender Systems

No technical skills required

We have carefully designed our data science bootcamp to give you the best practical exposure to data science, programming, and machine learning. With our comprehensive curriculum, interactive learning environment, and challenging real-world exercises, you’ll learn by doing.

Our curriculum combines lectures and hands-on exercises with office hours and mentoring. Our data science training takes a business-first approach to help you stand out in the job market.

Curriculum

A layperson’s overview to get you started with practical data science and data engineering.

Data Exploration, Visualization and Data Engineering

Storytelling with Data

Predictive Modeling for Real World Problems

Decision Tree

Evaluation of Classification Models

Understand and evaluate classification models using metrics like precision, recall, and F1 score.

Tuning of Model Hyperparameters

Learn to tune hyperparameters to build models that generalize well, and work through practical exercises that use cross-validation to guard against overfitting.

Ensemble Methods, Bagging, and Random Forest

Boosting

Online Experimentation and A/B Testing

Hypothesis Testing Fundamentals

Designing and running experiments depends on a solid understanding of hypothesis testing fundamentals.

Text Analytics Fundamentals

Unsupervised Learning and K-Means Clustering

Linear Models for Regression

Build a linear regression model for continuous numerical data. Optimize performance by adjusting regularization penalties and parameter updates.

Regularization and Tuning of Linear Models

Ranking and Recommendation Systems

Big Data Engineering and Distributed Systems

Real-Time Analytics and IoT

More than just a course

Our data science bootcamp is not just a training program; it’s a gateway to mastering data science, with a variety of resources to support your learning journey.

Access hundreds of coding exercises, sandboxes, free tutorials, and other bonus learning materials.

Just bring a laptop

Watch tutorials or practice coding in browser-based Jupyter notebooks and inline code runners. We call our learning platform a complete learning ecosystem.

Personal coding sandboxes

Every attendee gets dedicated compute and storage resources, preconfigured with the relevant libraries and packages.

100s of code samples

In addition to the in-class exercises, attendees receive pre-tested Python and R code samples.

No subscriptions needed

All software licenses, subscriptions, tools, and computing resources are included.

Cohort discussion forum

Get help from course staff, share ideas, and hold discussions with your peers.

Free courses

Dozens of other short courses and coding exercises are included in the registration.

Exclusive alumni community

Join the exclusive community of 11,000+ alumni globally. Discuss, collaborate, and expand your network.

Trusted by Leading Companies

Recommended by Practitioners

At the end of the bootcamp, I think all of us are at the same place, so that’s the beauty of this program. You could come from any background because we are covering some diverse topics here, and making sure it’s a level playing field and, again, going back to the motto of, hey, this is for everyone.

Kapil Pandey, Analytics Manager at Samsung

It was a great experience for increasing my expertise in data science. The abstract concepts were explained well and always focused on real applications and business cases. The pace was adjusted as needed to let everyone follow the topics. The week was intense, as there are many topics to cover, but the schedule was well managed to optimize people’s attention.

Harris Thamby, Manager at Microsoft

What I enjoyed most about the Data Science Dojo bootcamp was the enthusiasm for data science from the instructors.

Eldon Prince, Senior Principal Data Scientist at DELL

Highly valuable course condensed into a single week. Enough background is given to allow one to continue their learning and training on their own. Good energy from the instructors. It is clear that they have real industry experience working on problems.

Ben Gawiser, Software Engineer at Amazon

I’m really impressed by the quality of the bootcamp. I came with high expectations, and Data Science Dojo exceeded them. I highly recommend the bootcamp to anyone interested in data science!

Marcello Azambuja, Engineering Manager at Uber

With the knowledge I’ve gained from this bootcamp, I can further add value to my clients. Data Science Dojo is the only training that provides a lot of useful content, and now I can confidently make a predictive model in a few minutes.

Iyinola Abosede-Brown, Senior Technology Consultant at KPMG

Future-proof your career

Coming Soon!

Learn data science from leading experts in industry.

Start Learning

Use code DSB1000 for a USD 1,000 discount.

- Learn from industry experts through live sessions

- Get 1-year access to dedicated learner sandboxes

- Access exclusive data science coding labs

- Access all session recordings

- Get a verified certificate

Related Courses

In-person & Online | 40 hours | 5 days

Large Language Models Bootcamp

A comprehensive introduction to building generative AI and large language model applications, designed for anyone who wants to build large language model applications.

Online | 30 hours | 8 weeks

Agentic AI Bootcamp

Learn to build agents, not just apps. Automate reasoning, planning, context retrieval, and execution. Learn to build and evaluate agentic models and tune model hyperparameters. Includes hundreds of practical exercises and a capstone project.

Online | 15 hours | 5 days

Python for Data Science

A practical course in Python for data science and data engineering. Learn data exploration, visualization, feature engineering, data transformation, machine learning model building, and data pipelines.

Online | 8 hours | 1 day

Large Language Models for Everyone

This course is designed for anyone interested in getting started with large language models and generative AI without all the math and programming.

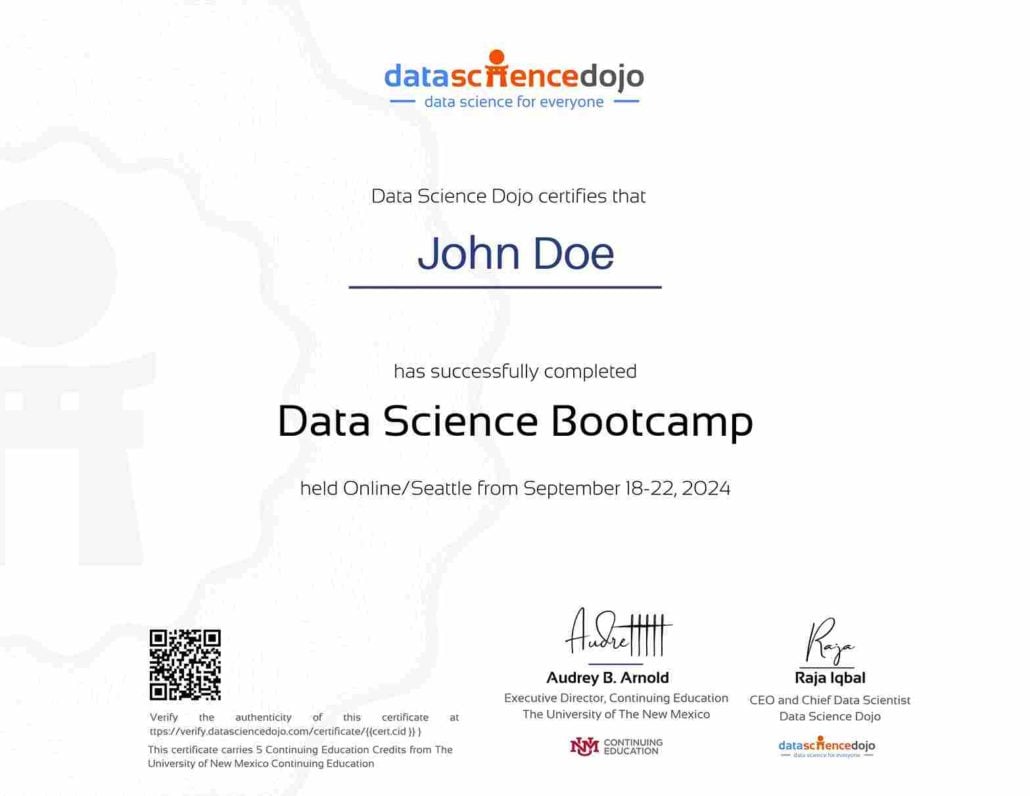

Earn a Verified Certificate

Earn a verified certificate from The University of New Mexico Continuing Education:

- 7 Continuing Education Credits (CEUs)

- Acceptable to employers for reimbursement

- Valid for professional licensing renewal

- Verifiable by The University of New Mexico Registrar’s office

- Add to LinkedIn and share with your network

Attend the Data Science Bootcamp for free

We Accept Tuition Benefits

All of our programs are backed by a certificate from The University of New Mexico, Continuing Education. This means that you may be eligible to attend the bootcamp for FREE.

Not sure? Fill out the form so we can help.

Frequently Asked Questions

Is the training conducted in R or Python?

Our training is technology-neutral and vendor-agnostic. Both R and Python code samples will be shared with attendees.

Are the live sessions recorded for viewing later?

Each live session is recorded and made available for review to both online and in-person participants a few days after the bootcamp concludes.

How many Continuing Education Credits (CEUs) will I receive?

You will receive 7 CEUs after completing the Data Science Bootcamp. You can request a transcript from the University of New Mexico for a fee of ten US dollars.

What is the difference between in-person and online?

There is no difference between the online and in-person bootcamp in terms of curriculum and instructors. The only distinction is that breakfast, lunch, and beverages are provided at the in-person bootcamp.

Do you have any in-person part-time options?

No, we do not offer any in-person part-time options. The program requires a full-time commitment of 40 hours over 5 days.

What is the refund policy?

If, for any reason, you decide to cancel, we will gladly refund your registration fee in full if you notify us by the Monday before the training starts. We would also be happy to transfer your registration to another bootcamp or workshop. Refunds cannot be processed if you have transferred to a different bootcamp after registration.

Will I earn a certificate from this data science bootcamp?

Yes! Once you have completed our Data Science Bootcamp, you will be issued a certificate in association with The University of New Mexico Continuing Education that you can print or add to your LinkedIn profile for others to see.

Are there any discounts available?

Yes, discounts are available. The Dojo and Guru packages are currently offered at a 25% early-bird discount. Please note that these discounts are limited and available on a first-come, first-served basis.

How many hours per week are we expected to be in class?

You are expected to be in class for 8 hours each day, for a total of 40 hours over the 5-day bootcamp.

What backgrounds do people have that take this data science bootcamp?

People from a wide range of backgrounds, including software engineers, product and program managers, physicists, financial analysts, and even medical doctors and veterinarians, have attended and successfully completed our data science bootcamp. This bootcamp is for anyone who is curious about data science and willing to explore, segment, analyze, and understand their data to make better data-driven decisions. We recommend that you talk to one of our advisors before joining. If you would like to set up a time with one of our instructors, please let us know.

What kind of jobs can a data science bootcamp get me?

The career track you pursue in data analytics depends on your capabilities and the skills you gain during the bootcamp. Depending on your skill set, here are some roles you can look forward to:

- Data Scientist

- Data Engineer

- Machine Learning Engineer

- Data Analyst

- Business Analyst / Product Analyst

Can I ask questions during the live instructor-led sessions or while working on homework?

Yes, our live instructor-led sessions are highly interactive. Students are encouraged to ask questions, and our instructors make sure to provide thorough responses without rushing. Additionally, discussions relevant to the topic being taught are actively encouraged. We also understand that questions may arise during homework. To support you, we have a dedicated Discord community where you can receive help from our instructors and connect with fellow students.

What is the transfer policy?

You may transfer once without penalty. Any subsequent transfer will incur a $200 processing fee.