Large language models (LLMs) like GPT-3 and BERT have revolutionized the field of natural language processing. However, evaluating these models is as crucial as developing them. This blog delves into the methods used to assess LLMs and to check that they perform effectively and ethically.

Evaluation Metrics and Methods

Evaluating large language models (LLMs) is an intricate process that checks whether models perform effectively, reliably, and ethically across a wide range of applications. Here’s a look at the key aspects:

Perplexity: Perplexity measures how well a model predicts a text sample. A lower perplexity indicates better performance, as the model is less ‘perplexed’ by the data.

Accuracy, safety, and fairness: Beyond mere performance, assessing an LLM involves evaluating its accuracy in understanding and generating language, safety in avoiding harmful outputs, and fairness in treating all groups equitably.

Embedding-based methods: Methods like BERTScore use embeddings (vector representations of text) to evaluate the semantic similarity between the model’s output and reference texts.

Human evaluation panels: Panels of human evaluators can judge the model’s output for aspects like coherence, relevance, and fluency, offering insights that automated metrics might miss.

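Benchmarks like MMLU and HellaSwag: MMLU poses multiple-choice questions across dozens of academic and professional subjects, while HellaSwag tests commonsense reasoning through sentence completion. Together, such benchmarks gauge a model’s generalizability and robustness.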

Holistic evaluation: Frameworks like the Holistic Evaluation of Language Models (HELM) assess models across multiple metrics, including accuracy and calibration, to provide a comprehensive view of their capabilities.

Bias detection and interpretability methods: These methods evaluate how biased a model’s outputs are and how interpretable its decision-making process is, addressing ethical considerations.
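To make the embedding-based idea from the list above more concrete, here is a minimal sketch of the greedy token matching that BERTScore-style metrics rely on. The three-dimensional token vectors are invented for illustration, and the function name `greedy_match_scores` is hypothetical; a real run would use contextual embeddings from a model such as BERT.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm

def greedy_match_scores(candidate_vecs, reference_vecs):
    """Return (precision, recall, f1) from greedy best-match cosine similarity."""
    # Precision: each candidate token is matched to its most similar reference token.
    precision = sum(
        max(cosine(c, r) for r in reference_vecs) for c in candidate_vecs
    ) / len(candidate_vecs)
    # Recall: each reference token is matched to its most similar candidate token.
    recall = sum(
        max(cosine(r, c) for c in candidate_vecs) for r in reference_vecs
    ) / len(reference_vecs)
    if precision + recall == 0:
        return precision, recall, 0.0
    return precision, recall, 2 * precision * recall / (precision + recall)

# Toy token embeddings (assumed values, not produced by any real model).
reference_tokens = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
candidate_tokens = [[1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]

precision, recall, f1 = greedy_match_scores(candidate_tokens, reference_tokens)
print(precision, recall, f1)
```

Because one candidate token matches a reference token exactly and the other matches nothing, all three scores come out at one half in this toy case.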

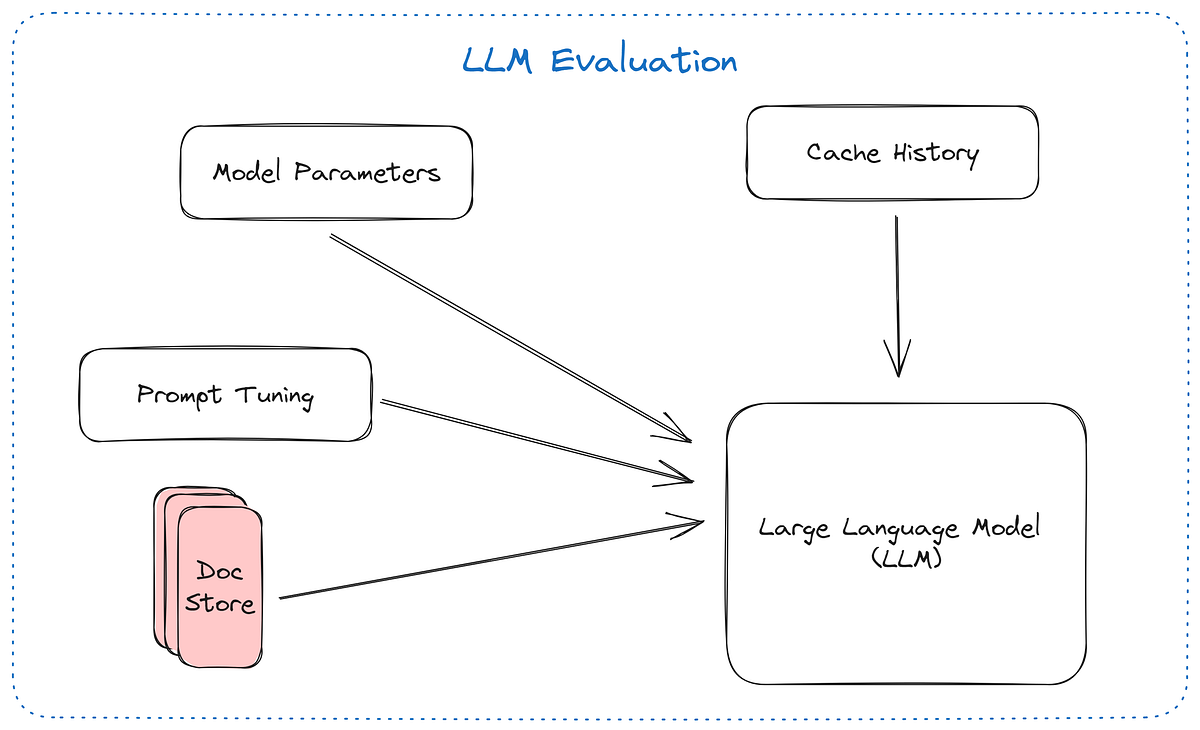

How Does Large Language Model Evaluation Work?

To enhance your understanding of how large language model (LLM) evaluation works, let’s delve deeper into each of the key methods involved in the evaluation process:

Performance Assessment

Performance assessment is a fundamental aspect of evaluating LLMs, focusing on how well these models predict or generate text. One of the primary metrics used is perplexity, which measures the model’s ability to predict a sequence of words.

A lower perplexity score indicates that the model is better at predicting the next word in a sequence, reflecting its proficiency in understanding language patterns. This metric is crucial for tasks like language modeling and text generation, where the model’s ability to produce coherent and contextually appropriate text is paramount.
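As a rough illustration, perplexity can be computed as the exponential of the average negative log-probability the model assigned to each token that actually occurred. The sketch below assumes you already have those per-token probabilities; the numbers are made up, and the function name `perplexity` is just a label for this example.

```python
import math

def perplexity(token_probs):
    """Perplexity from the probabilities a model gave to each observed token."""
    # Average negative log-likelihood per token (natural log).
    avg_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    # Exponentiating gives an "effective number of choices" per token.
    return math.exp(avg_nll)

# Hypothetical per-token probabilities, for illustration only.
sharper_model = [0.5, 0.25, 0.5, 0.25]
flatter_model = [0.25, 0.25, 0.25, 0.25]

print(perplexity(sharper_model))  # about 2.83
print(perplexity(flatter_model))  # about 4.0
```

A model that spreads its probability evenly over four options at every step has a perplexity of about 4, which is why the score is often read as the number of words the model is effectively choosing between.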

Knowledge and Capability Evaluation

This evaluation assesses the model’s ability to provide accurate and relevant information. It involves tasks such as question-answering, text completion, and summarization to test the model’s understanding and language generation capabilities.

For instance, in a question-answering task, the model is evaluated on its ability to comprehend the question and provide a precise and relevant answer. This evaluation helps determine the model’s effectiveness in various applications, from customer support to educational tools.
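One common way to score question-answering outputs is with exact match and token-level F1 against a reference answer. The sketch below is a simplified version of that idea: the normalization (lowercasing and stripping punctuation) is an assumption of this example, and the helper names are hypothetical.

```python
import string
from collections import Counter

def normalize(text):
    """Lowercase, drop punctuation, and split into tokens."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    return text.split()

def exact_match(prediction, reference):
    """True when the normalized prediction equals the normalized reference."""
    return normalize(prediction) == normalize(reference)

def token_f1(prediction, reference):
    """Harmonic mean of token precision and recall between the two answers."""
    pred, ref = normalize(prediction), normalize(reference)
    # Counter intersection counts each shared token at most as often as it
    # appears in both answers.
    overlap = sum((Counter(pred) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)

print(exact_match("Paris.", "paris"))         # True
print(token_f1("the city of Paris", "Paris"))  # about 0.4
```

Exact match rewards only answers that are identical after normalization, while F1 gives partial credit to a verbose answer that still contains the right tokens.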

Alignment and Safety Evaluation

Ensuring that LLMs produce safe, unbiased, and ethically aligned outputs is critical. This evaluation involves testing the model for harmful outputs, biases, or misinformation. Developers use techniques like adversarial testing and bias detection to identify and mitigate potential issues.

By addressing these concerns, developers can ensure that the model’s outputs are equitable and do not perpetuate harmful stereotypes or misinformation, aligning with ethical standards and societal values.

Use of Evaluation Metrics like BLEU and ROUGE

Metrics such as BLEU (Bilingual Evaluation Understudy) and ROUGE (Recall-Oriented Understudy for Gisting Evaluation) are widely used to compare generated text against human-written references. BLEU, which was designed for machine translation, measures the n-gram overlap between the model’s output and a set of reference translations, focusing on precision.

ROUGE, on the other hand, emphasizes recall, evaluating how much of the reference content is captured in the model’s output. These metrics are essential for tasks like translation and summarization, where the quality and fidelity of the generated text are crucial.
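The precision versus recall distinction can be sketched with a few lines of n-gram counting. This is a simplified illustration, not full BLEU or ROUGE: real BLEU combines clipped precisions for several n-gram sizes and applies a brevity penalty, and the function names here are hypothetical.

```python
from collections import Counter

def ngram_counts(tokens, n):
    """Count the n-grams in a list of tokens."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def overlap_counts(candidate, reference, n):
    """Return (clipped overlap, candidate n-gram total, reference n-gram total)."""
    cand = ngram_counts(candidate.split(), n)
    ref = ngram_counts(reference.split(), n)
    # Counter intersection clips each n-gram to its count in the reference.
    overlap = sum((cand & ref).values())
    return overlap, sum(cand.values()), sum(ref.values())

def clipped_precision(candidate, reference, n=1):
    """BLEU-style: share of candidate n-grams that appear in the reference."""
    overlap, cand_total, _ = overlap_counts(candidate, reference, n)
    return overlap / cand_total if cand_total else 0.0

def ngram_recall(candidate, reference, n=1):
    """ROUGE-N-style: share of reference n-grams recovered by the candidate."""
    overlap, _, ref_total = overlap_counts(candidate, reference, n)
    return overlap / ref_total if ref_total else 0.0

reference = "the cat sat on the mat"
candidate = "the the the cat"

print(clipped_precision(candidate, reference))  # 0.75
print(ngram_recall(candidate, reference))       # 0.5
```

Clipping stops the repeated "the" from being counted three times, so only three of the four candidate words earn credit, and those three words cover half of the six-word reference.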

Holistic Evaluation Methods

Frameworks like the Holistic Evaluation of Language Models (HELM) provide a comprehensive assessment of LLMs by evaluating them based on multiple metrics, including accuracy, calibration, and robustness.

This approach ensures that the model is not only accurate but also reliable and adaptable to different contexts. By considering a wide range of factors, holistic evaluation methods offer a more complete picture of the model’s capabilities and limitations.
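Calibration, one of the metrics named above, asks whether a model’s stated confidence matches how often it is actually right. A common way to quantify it is expected calibration error (ECE). The sketch below is a generic version with equal-width bins and invented data; it is not taken from the HELM codebase.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """Weighted average gap between confidence and accuracy across bins."""
    bins = [[] for _ in range(n_bins)]
    for conf, is_correct in zip(confidences, correct):
        # Put each prediction in an equal-width confidence bin; 1.0 goes in the last bin.
        index = min(int(conf * n_bins), n_bins - 1)
        bins[index].append((conf, is_correct))

    total = len(confidences)
    ece = 0.0
    for bucket in bins:
        if not bucket:
            continue
        avg_confidence = sum(conf for conf, _ in bucket) / len(bucket)
        accuracy = sum(1 for _, ok in bucket if ok) / len(bucket)
        # Weight each bin's gap by the share of predictions it holds.
        ece += (len(bucket) / total) * abs(accuracy - avg_confidence)
    return ece

# Hypothetical predictions: the model claims 90% confidence but is right 3 times out of 4.
confidences = [0.9, 0.9, 0.9, 0.9]
correct = [True, True, True, False]

print(expected_calibration_error(confidences, correct))  # about 0.15
```

A score near zero means confidence and accuracy agree; here the model is overconfident by about 15 percentage points.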

Human Evaluation Panels

In addition to automated metrics, human evaluation panels play a vital role in assessing aspects of the model’s output that machines might miss, such as coherence, relevance, and fluency. Human evaluators provide qualitative insights into the model’s performance, offering valuable feedback that can guide further refinement and improvement.

This human-centric approach ensures that the model’s outputs meet user expectations and enhance the overall user experience.
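Panel scores still need to be aggregated before they can guide refinement. As a small sketch, assuming each evaluator rates an output from 1 to 5 on each criterion, the mean shows the overall judgment and the spread shows how much the evaluators disagree. The ratings and the `summarize_ratings` helper below are hypothetical.

```python
from statistics import mean, stdev

def summarize_ratings(ratings):
    """Mean and sample standard deviation of panel scores for each criterion."""
    summary = {}
    for criterion, scores in ratings.items():
        summary[criterion] = {
            "mean": mean(scores),
            # A larger spread signals disagreement among evaluators.
            "spread": stdev(scores) if len(scores) > 1 else 0.0,
        }
    return summary

# Invented scores from three evaluators for a single model output.
panel_ratings = {
    "coherence": [4, 5, 4],
    "relevance": [3, 4, 5],
    "fluency": [5, 5, 5],
}

summary = summarize_ratings(panel_ratings)
for criterion, stats in summary.items():
    print(criterion, stats)
```

In this toy panel, fluency gets unanimous top marks, while relevance has the same kind of average as coherence but a wider spread, which would be a cue to look at that output more closely.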

By employing these comprehensive evaluation methods, developers and researchers can refine LLMs to ensure they are not only efficient in language understanding and generation but also safe, unbiased, and aligned with ethical standards. This holistic approach to evaluation helps build trust and confidence in the capabilities of LLMs, ensuring they can be deployed responsibly and effectively in a wide range of applications.

Considerations for Choosing an LLM Evaluation Method

Deciding which evaluation method to use for large language models (LLMs) depends on the specific aspects of the model you wish to assess. Here are key considerations:

- Model performance: If the goal is to assess how well the model predicts or generates text, use metrics like perplexity, which quantifies the model’s predictive capabilities. Lower perplexity values indicate better performance.

- Adaptability to unfamiliar topics: Out-of-distribution testing can be used when you want to evaluate the model’s ability to handle new datasets or topics it hasn’t been trained on.

- Language fluency and coherence: If evaluating the fluency and coherence of the model’s generated text is essential, consider methods that measure these features directly, such as human evaluation panels or automated coherence metrics.

- Bias and fairness analysis: Diversity and bias analysis are critical for evaluating the ethical aspects of LLMs. Techniques like the Word Embedding Association Test (WEAT) can quantify biases in the word embeddings a model has learned.

- Manual human evaluation: This method is suitable for measuring the quality and performance of LLMs in terms of the naturalness and relevance of the generated text. It involves having human evaluators assess the outputs manually.

- Zero-shot evaluation: This approach is used to measure the performance of LLMs on tasks they haven’t been explicitly trained for, which is useful for assessing the model’s generalization capabilities.

Each method addresses a different aspect of LLM evaluation, so the choice should align with your specific evaluation goals and the characteristics of the model you are assessing.
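To give a feel for the bias analysis mentioned in the list above, here is a minimal sketch of the WEAT effect size. It measures whether one set of target words sits closer to attribute set A than to attribute set B, compared with a second set of target words. The two-dimensional vectors are invented, the function names are hypothetical, and the use of the sample standard deviation is an assumption of this example.

```python
import math
from statistics import mean, stdev

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm

def association(word, attrs_a, attrs_b):
    """How much closer a word is to attribute set A than to attribute set B."""
    return mean([cosine(word, a) for a in attrs_a]) - mean(
        [cosine(word, b) for b in attrs_b]
    )

def weat_effect_size(targets_x, targets_y, attrs_a, attrs_b):
    """Difference in mean association between target sets, in standard deviations."""
    s_x = [association(x, attrs_a, attrs_b) for x in targets_x]
    s_y = [association(y, attrs_a, attrs_b) for y in targets_y]
    return (mean(s_x) - mean(s_y)) / stdev(s_x + s_y)

# Toy embeddings (assumed values): X leans toward A, Y leans toward B.
target_x = [[1.0, 0.0], [0.9, 0.1]]
target_y = [[0.0, 1.0], [0.1, 0.9]]
attr_a = [[1.0, 0.0]]
attr_b = [[0.0, 1.0]]

effect = weat_effect_size(target_x, target_y, attr_a, attr_b)
print(effect)
```

A value near zero suggests no measurable difference in association, while a large positive or negative value suggests that the embeddings tie one target set more strongly to one attribute set.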

Evaluating LLMs is a multifaceted process requiring a combination of automated metrics and human judgment. It ensures that these models not only perform efficiently but also adhere to ethical standards, paving the way for their responsible and effective use in various applications.