A study by the Equal Rights Commission found that AI is being used to discriminate against people in housing, employment, and lending. Why would that happen? Just like people, algorithms can be biased.

Imagine this: you know how some games let you customize your character’s appearance? Think of an AI system as the designer of those characters. If the game designers only use pictures of their friends as references, every character will end up looking like their friends. AI works the same way: if it’s trained mostly on one type of data, its results can become skewed toward that group.

For example, picture a job application AI that learned from old resumes. If most of those were from men, it might think men are better for the job, even if women are just as good. That’s AI bias, and it’s a bit like having a favorite even when you shouldn’t.

Artificial intelligence (AI) is rapidly becoming a part of our everyday lives. AI algorithms are used to make decisions about everything from who gets a loan to what ads we see online. However, AI algorithms can be biased, which can have a negative impact on people’s lives.

What is AI Bias?

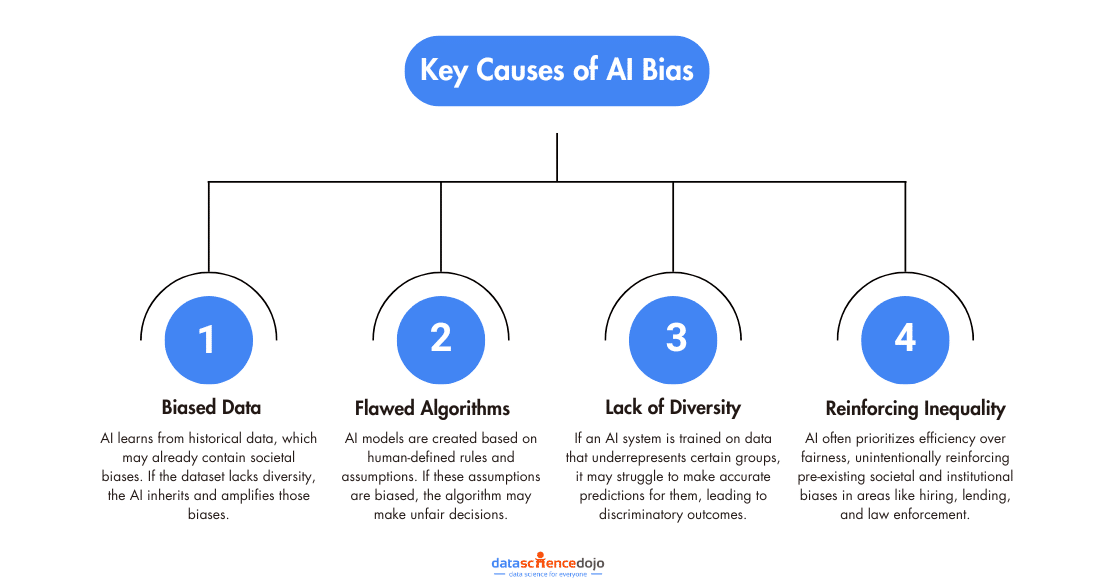

AI bias is a phenomenon that occurs when an AI algorithm produces results that are systematically prejudiced due to erroneous assumptions in the machine learning process. This can happen for a variety of reasons, including:

- Data bias: The training data used to train the AI algorithm may be biased, reflecting the biases of the people who collected or created it. For example, a facial recognition algorithm that is trained on a dataset of mostly white faces may be more likely to misidentify people of color.


- Algorithmic bias: The way that the AI algorithm is designed or implemented may introduce bias. For example, an algorithm that is designed to predict whether a person is likely to commit a crime may be biased against people of color if its designers use past arrests or convictions as the outcome to predict. Because some communities are policed more heavily than others, those records disproportionately include people of color, and the model learns that pattern as “risk.”

- Human bias: The people who design, develop, and deploy AI algorithms may introduce bias into the system, either consciously or unconsciously. For example, a team of engineers who are all white men may create an AI algorithm that is biased against women or people of color.
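Data bias is often the first thing to check. As a minimal sketch, assuming each training record carries a demographic label and that reference population shares are available (the labels and shares below are made up for illustration), you can compare each group’s share of the training set with its share of the population the system is meant to serve:

```python
from collections import Counter


def representation_gaps(group_labels, reference_shares):
    """Return each group's share of the data minus its reference share.

    A negative value means the group is under-represented in the training data.
    """
    counts = Counter(group_labels)
    total = len(group_labels)
    return {
        group: counts.get(group, 0) / total - share
        for group, share in reference_shares.items()
    }


# Hypothetical face dataset: 80 images labeled "lighter", 20 labeled "darker".
labels = ["lighter"] * 80 + ["darker"] * 20
# Illustrative reference shares for the population the system will serve.
population = {"lighter": 0.5, "darker": 0.5}

gaps = representation_gaps(labels, population)
for group, gap in gaps.items():
    print(f"{group}: {gap:+.2f}")
```

With these invented numbers, the “darker” group makes up 20% of the data against a 50% reference share, a gap of -0.30. A check like this catches only one kind of data bias: a perfectly balanced dataset can still carry biased labels.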

Understanding Fairness in AI

Fairness in AI is not a monolithic concept but a multifaceted and evolving principle that varies across different contexts and perspectives. At its core, fairness entails treating all individuals equally and without discrimination. In the context of AI, this means that AI systems should not exhibit bias or discrimination towards any specific group of people, be it based on race, gender, age, or any other protected characteristic.

However, achieving fairness in AI is far from straightforward. AI systems are trained on historical data, which may inherently contain biases. These biases can then propagate into the AI models, leading to discriminatory outcomes. Recognizing this challenge, the AI community has been striving to develop techniques for measuring and mitigating bias in AI systems.

These techniques range from pre-processing data to post-processing model outputs, with the overarching goal of ensuring that AI systems make fair and equitable decisions.
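As one concrete pre-processing example, the sketch below implements reweighing: each training example gets the weight P(group) · P(label) / P(group, label), so that in the weighted data, group membership and outcome are statistically independent. The hiring records are hypothetical, and the resulting weights would be passed to any learner that accepts per-sample weights (many scikit-learn estimators accept a `sample_weight` argument to `fit`).

```python
from collections import Counter


def reweigh(groups, labels):
    """Reweighing: w(g, y) = P(g) * P(y) / P(g, y) for each example.

    Computed from raw counts as n_g * n_y / (n * n_gy), which is the same value.
    In the weighted data, group membership and label are statistically independent.
    """
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        group_counts[g] * label_counts[y] / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


def positive_rate(groups, labels, weights, group):
    """Weighted share of positive labels (e.g. 'hired') within one group."""
    hit = sum(w for g, y, w in zip(groups, labels, weights) if g == group and y == 1)
    total = sum(w for g, w in zip(groups, weights) if g == group)
    return hit / total


# Hypothetical historical hiring data: 1 = hired, 0 = rejected.
groups = ["men"] * 6 + ["women"] * 4
labels = [1, 1, 1, 1, 0, 0] + [1, 0, 0, 0]

unweighted = [1.0] * len(labels)
weights = reweigh(groups, labels)
for group in ("men", "women"):
    before = positive_rate(groups, labels, unweighted, group)
    after = positive_rate(groups, labels, weights, group)
    print(f"{group}: hire rate {before:.2f} before, {after:.2f} after reweighing")
```

In this toy data the historical hire rates are 0.67 for men and 0.25 for women; after reweighing, both groups carry a weighted hire rate of 0.50. Reweighing leaves the records themselves untouched, which is why it is often tried before more invasive fixes.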


Companies that Experienced Biases in AI

Here are some examples and stats for bias in AI from the past and present:

- Amazon’s recruitment algorithm: In 2018, it was reported that Amazon had scrapped a recruitment algorithm that was biased against women. The algorithm was trained on historical data of past hires, which disproportionately included men. As a result, the algorithm was more likely to recommend male candidates for open positions.

- Google’s image search: In 2015, Google’s image search results were found to be biased. When users searched for terms like “CEO” or “scientist,” the results were more likely to show images of men than women. Google has since taken steps to address this bias, but it remains an ongoing problem.

- Microsoft’s Tay chatbot: In 2016, Microsoft launched a chatbot called Tay on Twitter. Tay was designed to learn from its interactions with users and become more human-like over time. However, within hours of its launch, users flooded Tay with racist and sexist language. Tay began to repeat this language, and Microsoft was forced to take it offline.

- Facial recognition algorithms: Facial recognition algorithms are often biased against people of color. A study by MIT found that one facial recognition algorithm was more likely to misidentify black people than white people. This is because the algorithm was trained on a dataset that was disproportionately white.


These are just a few examples of AI bias. As AI becomes more pervasive in our lives, it is important to be aware of the potential for bias and to take steps to mitigate it.

Here is one additional statistic on AI bias:

A study by the AI Now Institute found that 70% of AI experts believe that AI is biased against certain groups of people.

The good news is that awareness of AI bias is growing, and a number of efforts are underway to address it. Fairness-aware algorithms can help avoid bias, and there are also techniques for monitoring and mitigating bias in deployed AI systems. By working together, we can help ensure that AI is used for good and not for harm.

The Pitfalls of Algorithmic Biases

Bias in AI algorithms can manifest in multiple ways, leading to unfair and discriminatory outcomes. These biases often stem from imbalanced training data, flawed assumptions, or societal prejudices embedded in algorithms.

Facial Recognition Bias

One of the most glaring examples of AI bias is seen in facial recognition technology.

- Studies show that some algorithms perform significantly better on lighter-skinned individuals than on those with darker skin tones.

- This disparity can lead to misidentification, especially when used by law enforcement, increasing wrongful arrests and reinforcing racial biases.

AI Bias in Other Sectors

Facial recognition is just one area where AI bias appears. It also affects:

- Lending decisions – Biased AI may unfairly deny loans to specific racial or socioeconomic groups.

- Job applications – AI-driven hiring tools could favor certain demographics, leading to discriminatory hiring practices.

- Medical diagnoses – Some AI models are trained on non-diverse datasets, leading to misdiagnoses or overlooking symptoms in underrepresented groups.
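For lending and hiring, one common first check is to compare selection rates, such as the share of applicants approved or advanced, across groups. The sketch below computes the disparate impact ratio (the lowest group’s selection rate divided by the highest) and flags values under 0.8, a threshold taken from the “four-fifths rule” of thumb in US employment selection guidelines. The decisions are invented for illustration.

```python
def selection_rates(decisions):
    """decisions: iterable of (group, approved) pairs, where approved is a bool."""
    totals, approved = {}, {}
    for group, ok in decisions:
        totals[group] = totals.get(group, 0) + 1
        approved[group] = approved.get(group, 0) + int(ok)
    return {g: approved[g] / totals[g] for g in totals}


def disparate_impact_ratio(decisions):
    """Lowest group selection rate divided by the highest (1.0 = parity)."""
    rates = selection_rates(decisions)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group was selected at all, so the rates are equal
    return min(rates.values()) / highest


# Hypothetical loan decisions for two applicant groups.
decisions = (
    [("group_a", True)] * 60 + [("group_a", False)] * 40
    + [("group_b", True)] * 30 + [("group_b", False)] * 70
)

ratio = disparate_impact_ratio(decisions)
print(f"disparate impact ratio: {ratio:.2f}")
if ratio < 0.8:  # four-fifths rule of thumb
    print("below the four-fifths threshold: review this model")
```

Here group_b is approved at half the rate of group_a, so the ratio is 0.50. A low ratio is a signal to investigate, not proof of discrimination, and a ratio near 1.0 does not by itself rule out bias in who gets approved.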


The role of data in bias

To comprehend the root causes of bias in AI, one must look no further than the data used to train these systems. AI models learn from historical data, and if this data is biased, the AI model will inherit those biases. This underscores the importance of clean, representative, and diverse training data. It also necessitates a critical examination of historical biases present in our society.

Consider, for instance, a machine learning model tasked with predicting future criminal behavior based on historical arrest records. If these records reflect biased policing practices, such as the over-policing of certain communities, the AI model will inevitably produce biased predictions, disproportionately impacting those communities.
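The mechanism can be made concrete with a deliberately simple simulation. All numbers are invented: two communities offend at exactly the same rate, but one is policed three times as heavily, so an offense there is three times as likely to end up in the arrest records. A naive “model” that learns arrest rates from those records then rates the heavily policed community as three times riskier.

```python
# Hypothetical numbers: both communities have the SAME underlying offense rate,
# but community A is patrolled far more heavily than community B.
TRUE_OFFENSE_RATE = 0.05
population = {"A": 10_000, "B": 10_000}
# Probability that an offense results in an arrest record (policing intensity).
detection_rate = {"A": 0.6, "B": 0.2}

# Arrest records reflect offending *and* how intensively each area is policed.
arrests = {
    c: population[c] * TRUE_OFFENSE_RATE * detection_rate[c] for c in population
}

# A naive "risk model" that learns each community's arrest rate from the records.
learned_risk = {c: arrests[c] / population[c] for c in population}

for c, risk in learned_risk.items():
    print(f"community {c}: true offense rate {TRUE_OFFENSE_RATE:.2f}, "
          f"learned risk {risk:.2f}")
```

Nothing about the people in community A differs; the gap comes entirely from how the data was collected. Real models are far more complex, but they face the same problem whenever the labels they learn from encode biased practices.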

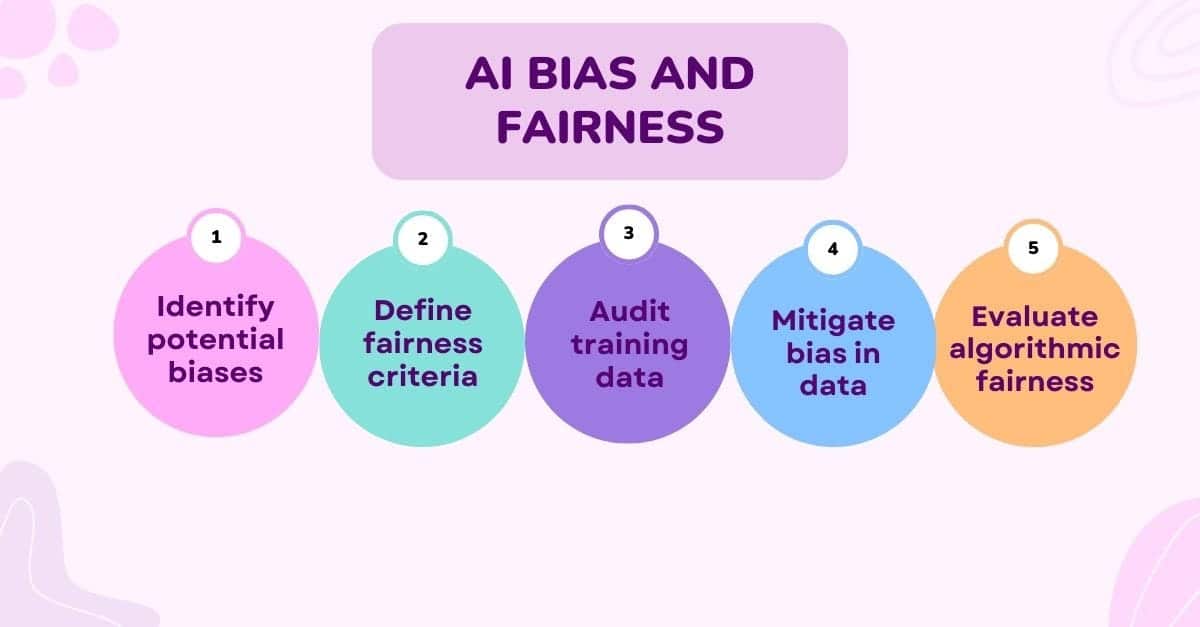

Mitigating bias in AI

Mitigating bias in AI is a pressing concern for developers, regulators, and society as a whole. Several strategies have emerged to address this challenge:

- Diverse data collection: Ensuring that training data is representative of the population the algorithm will be used to serve, and includes diverse groups, is essential. The goal is data that is as unbiased as possible; collecting it from a variety of sources can help reduce biases rooted in historical data.

- Bias audits: Regularly auditing AI systems for bias is crucial. This involves evaluating model predictions for fairness across different demographic groups and taking corrective action as needed.

- Transparency and explainability: Making AI systems more transparent and understandable helps people identify and rectify biases. Providing documentation on the algorithm’s design and, where possible, making its code available for public review lets stakeholders scrutinize decisions made by AI models and holds developers accountable.

- Ethical guidelines: Adopting ethical guidelines and principles for AI development can serve as a compass for developers navigating the ethical minefield. These guidelines often prioritize fairness, accountability, and transparency.

- Diverse development teams: Ensuring that AI development teams are diverse and inclusive can bring more comprehensive perspectives and better-informed decisions about bias mitigation.

- Using fair algorithms: Fairness-aware algorithms are designed to take the potential for bias into account and mitigate it, for example by adding fairness constraints during training or by adjusting decision thresholds for different groups after training.

- Monitoring for bias: Once an AI algorithm is deployed, it is important to monitor it for signs of bias. This can be done by collecting data on the algorithm’s outputs and analyzing it for patterns of bias.
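Bias audits and post-deployment monitoring both start from the same step: breaking a model’s errors down by group. The sketch below assumes you have logged, for each decision, a group attribute, the actual outcome, and the model’s prediction (the records are hypothetical). It reports each group’s true positive rate (TPR) and false positive rate (FPR) and the largest gap between groups; the fairness criterion known as equalized odds asks for both gaps to be close to zero.

```python
from collections import defaultdict


def group_error_rates(records):
    """records: iterable of (group, y_true, y_pred) with 0/1 labels.

    Returns {group: {"tpr": ..., "fpr": ...}}. A rate is None when the group
    has no actual positives (for TPR) or no actual negatives (for FPR).
    """
    counts = defaultdict(lambda: {"tp": 0, "fn": 0, "fp": 0, "tn": 0})
    for group, y_true, y_pred in records:
        if y_true:
            counts[group]["tp" if y_pred else "fn"] += 1
        else:
            counts[group]["fp" if y_pred else "tn"] += 1
    rates = {}
    for group, c in counts.items():
        positives, negatives = c["tp"] + c["fn"], c["fp"] + c["tn"]
        rates[group] = {
            "tpr": c["tp"] / positives if positives else None,
            "fpr": c["fp"] / negatives if negatives else None,
        }
    return rates


def max_gap(rates, metric):
    """Largest difference in a metric between any two groups."""
    values = [r[metric] for r in rates.values() if r[metric] is not None]
    return max(values) - min(values) if values else 0.0


# Hypothetical audit log: (group, actual outcome, model prediction).
records = (
    [("group_a", 1, 1)] * 45 + [("group_a", 1, 0)] * 5
    + [("group_a", 0, 1)] * 5 + [("group_a", 0, 0)] * 45
    + [("group_b", 1, 1)] * 30 + [("group_b", 1, 0)] * 20
    + [("group_b", 0, 1)] * 15 + [("group_b", 0, 0)] * 35
)

rates = group_error_rates(records)
for group, r in rates.items():
    print(f"{group}: TPR {r['tpr']:.2f}, FPR {r['fpr']:.2f}")
print(f"TPR gap {max_gap(rates, 'tpr'):.2f}, FPR gap {max_gap(rates, 'fpr'):.2f}")
```

In this invented log, group_b’s actual positives are recognized far less often (TPR 0.60 vs 0.90) and its actual negatives are misclassified as positive more often (FPR 0.30 vs 0.10). For monitoring, the same function can be rerun on each new window of production logs; what counts as an acceptable gap is a policy decision the code cannot make.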

Regulatory responses

In recognition of the gravity of bias in AI, governments and regulatory bodies have begun to take action. In the United States, for example, the Federal Trade Commission (FTC) has expressed concerns about bias in AI and has called for transparency and accountability in AI development.


Additionally, the European Union has introduced the Artificial Intelligence Act, which aims to establish clear regulations for AI, including provisions related to bias and fairness.

These regulatory responses are indicative of the growing awareness of the need to address bias in AI at a systemic level. They underscore the importance of holding AI developers and organizations accountable for the ethical implications of their technologies.

The Road Ahead

Navigating the complex terrain of fairness and bias in AI is an ongoing journey. It requires continuous vigilance, collaboration, and a commitment to ethical AI development. As AI becomes increasingly integrated into our daily lives, from autonomous vehicles to healthcare diagnostics, the stakes have never been higher.

To achieve true fairness in AI, we must confront the biases embedded in our data, technology, and society. We must also embrace diversity and inclusivity as fundamental principles in AI development. Only through these concerted efforts can we hope to create AI systems that are not only powerful but also just and equitable.

Ultimately, the pursuit of fairness in AI and the eradication of bias are pivotal for the future of technology and humanity. It is a mission that transcends algorithms and data, touching the very essence of our values and aspirations as a society. As we move forward, let us remain steadfast in our commitment to building AI systems that uplift all of humanity, leaving no room for bias or discrimination.

Conclusion

AI bias is a serious problem that can have a negative impact on people’s lives. It is important to be aware of AI bias and to take steps to mitigate it. By using representative data, fair algorithms, ongoing monitoring, and transparency, we can help ensure that AI is used in a fair and equitable way.