What if AI could think more like humans—efficiently, flexibly, and systematically? Microsoft’s Algorithm of Thoughts (AoT) is redefining how Large Language Models (LLMs) solve problems, striking a balance between structured reasoning and dynamic adaptability.

Unlike rigid step-by-step methods (Chain-of-Thought) or costly multi-path exploration (Tree-of-Thought), AoT lets the model regulate its own reasoning, breaking down complex tasks without repeated external intervention. This cuts computational overhead compared with multi-path search while preserving much of its reasoning depth.

From code generation to decision-making, AoT is revolutionizing AI’s ability to tackle challenges—paving the way for the next generation of intelligent systems.

Under the Spotlight: “Algorithm of Thoughts”

Microsoft, the tech behemoth, has introduced an innovative AI training technique known as the “Algorithm of Thoughts” (AoT). This cutting-edge method is engineered to improve the performance of large language models such as ChatGPT, bringing their reasoning closer to the way humans think.

This unveiling marks a significant step for Microsoft, a company that has made substantial investments in artificial intelligence (AI), with a particular emphasis on OpenAI, the creator of renowned models like DALL-E, ChatGPT, and the GPT family of language models.

Microsoft Unveils Groundbreaking AoT Technique: A Paradigm Shift in Language Models

In a significant stride towards AI evolution, Microsoft has introduced the “Algorithm of Thoughts” (AoT) technique, touting it as a potential game-changer in the field. According to a recently published research paper, AoT promises to revolutionize the capabilities of language models by guiding them through a more streamlined problem-solving path.

How Algorithm of Thoughts (AoT) Works

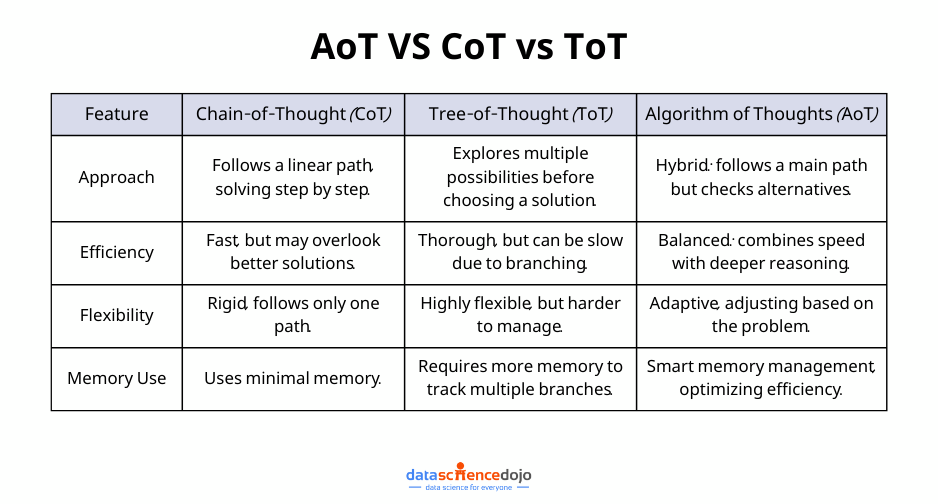

To understand how Algorithm of Thoughts (AoT) enhances AI reasoning, let’s compare it with two other widely used approaches: Chain-of-Thought (CoT) and Tree-of-Thought (ToT). Each of these techniques has its strengths and weaknesses, but AoT brings the best of both worlds together.

Breaking It Down with a Simple Analogy

Imagine you’re solving a complex puzzle:

- Chain-of-Thought (CoT): You follow a single path from start to finish, taking one logical step at a time. This approach is straightforward and efficient but doesn’t always find the best solution.

- Tree-of-Thought (ToT): Instead of sticking to one path, you branch out into multiple possible solutions, evaluating each before choosing the best one. This leads to better answers but requires more time and resources.

- Algorithm of Thoughts (AoT): AoT is a hybrid approach that follows a structured reasoning path like CoT but also checks alternative solutions like ToT. This balance makes it both efficient and flexible—allowing AI to think more like a human.

Step-by-Step Flow of AoT

To better understand how AoT works, let’s walk through its step-by-step reasoning process:

1. Understanding the Problem

Just like a human problem-solver, the AI first breaks down the challenge into smaller parts. This ensures clarity before jumping into solutions.

2. Generating an Initial Plan

Next, it follows a structured reasoning path similar to CoT, where it outlines the logical steps needed to solve the problem.

3. Exploring Alternatives

Unlike traditional linear reasoning, AoT also briefly considers alternative approaches, just like ToT. However, instead of getting lost in too many branches, it efficiently selects only the most relevant ones.

4. Evaluating the Best Path

Using intelligent self-regulation, the AI then compares the different approaches and chooses the most promising path for an optimal solution.

5. Finalizing the Answer

The AI refines its reasoning and arrives at a final, well-thought-out solution that balances efficiency and depth—giving it an edge over traditional methods.
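The five steps above can be sketched as an ordinary depth-first search with ranking and backtracking. This is only an illustration in plain Python, not Microsoft's implementation: in AoT the model is meant to carry out this kind of exploration in its own generated text. The puzzle (combine four numbers to reach 24), the names `candidate_moves` and `search`, the `keep` parameter, and the closeness heuristic are all assumptions made for this sketch.

```python
from fractions import Fraction
from itertools import combinations

# Illustrative only: a hand-written search standing in for the reasoning
# an AoT-prompted model would write out as text.
OPS = {
    "+": lambda a, b: a + b,
    "-": lambda a, b: a - b,
    "*": lambda a, b: a * b,
    "/": lambda a, b: a / b if b != 0 else None,  # skip division by zero
}


def candidate_moves(values):
    """Steps 1 and 3: split the task into sub-steps and list the alternatives.

    Each move combines two numbers into one, leaving a smaller problem.
    """
    moves = []
    for i, j in combinations(range(len(values)), 2):
        rest = [v for k, v in enumerate(values) if k not in (i, j)]
        for a, b in ((values[i], values[j]), (values[j], values[i])):
            for symbol, op in OPS.items():
                result = op(a, b)
                if result is not None:
                    step = f"{a} {symbol} {b} = {result}"
                    moves.append((step, rest + [result]))
    return moves


def search(values, target, keep=None, trace=()):
    """Steps 2, 4 and 5: follow a path, rank options, backtrack on dead ends."""
    if len(values) == 1:
        return list(trace) if values[0] == target else None
    moves = candidate_moves(values)
    # Step 4: crude heuristic (an assumption): prefer results near the target.
    moves.sort(key=lambda move: abs(move[1][-1] - target))
    for step, remaining in moves[:keep]:  # keep=None explores every option
        found = search(remaining, target, keep, trace + (step,))
        if found is not None:
            return found  # Step 5: the finished chain of steps
    return None  # dead end: the caller backtracks to its next option


numbers = [Fraction(n) for n in (4, 9, 10, 13)]
print(search(numbers, Fraction(24)))
```

Passing a small `keep` (for example `keep=5`) makes the search selective, at the risk of missing a solution that the heuristic ranks poorly.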

Empowering Language Models with In-Context Learning

At the heart of this pioneering approach lies the concept of “in-context learning,” in which the model picks up a way of working from examples placed in its prompt rather than from additional training. This mechanism equips the language model to explore various problem-solving avenues in a structured and systematic manner.
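To make the idea concrete, here is a minimal sketch of how a prompt with an algorithmic worked example might be assembled. It does not call any model and is not taken from Microsoft's paper: the puzzle, the wording of the trace, and the names `WORKED_EXAMPLE` and `build_prompt` are assumptions chosen for illustration.

```python
# Hypothetical worked example: one puzzle plus a search-style trace that
# tries an option, abandons it, and then finds a path that works.
WORKED_EXAMPLE = (
    "Use 4, 9, 10, 13 with + - * / to make 24.",
    "1. 4 + 9 = 13 (left: 13, 10, 13)\n"
    "   - 13 + 10 = 23 (left: 23, 13): cannot reach 24, backtrack\n"
    "2. 10 - 4 = 6 (left: 6, 9, 13)\n"
    "   - 13 - 9 = 4 (left: 6, 4): 6 * 4 = 24, solved\n"
    "Answer: (10 - 4) * (13 - 9) = 24",
)


def build_prompt(worked_examples, new_problem):
    """Join an instruction, the worked examples, and the new problem."""
    parts = [
        "Solve each puzzle by exploring options step by step. "
        "Abandon a branch when it cannot work and try the next one."
    ]
    for problem, trace in worked_examples:
        parts.append(f"Problem: {problem}\nReasoning:\n{trace}")
    # The prompt ends where the model is expected to continue the pattern.
    parts.append(f"Problem: {new_problem}\nReasoning:")
    return "\n\n".join(parts)


prompt = build_prompt([WORKED_EXAMPLE], "Use 1, 3, 4, 6 with + - * / to make 24.")
print(prompt)
```

The resulting string would be sent to a language model in a single request; the model's weights are not updated at any point.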

Accelerated Problem-Solving with Reduced Resource Dependency

The outcome of this paradigm shift in AI? Significantly faster and more resource-efficient problem-solving. Microsoft’s AoT technique holds the promise of reshaping the landscape of AI, propelling language models like ChatGPT into new realms of efficiency and cognitive prowess.

Synergy of Human & Algorithmic Intelligence: Microsoft’s AoT Method

The Algorithm of Thoughts (AoT) emerges as a promising solution to address the limitations encountered in current in-context learning techniques such as the Chain-of-Thought (CoT) approach. Notably, CoT at times presents inaccuracies in intermediate steps, a shortcoming AoT aims to rectify by leveraging algorithmic examples for enhanced reliability.

Drawing Inspiration from Both Realms

AoT is inspired by a fusion of human and machine attributes, seeking to enhance the performance of generative AI models. While human cognition excels in intuitive thinking, algorithms are renowned for their methodical, exhaustive exploration of possibilities. Microsoft’s research paper articulates AoT’s mission as seeking to “fuse these dual facets to augment reasoning capabilities within Large Language Models (LLMs).”

Enhancing Cognitive Capacity

One of the most significant advantages of Algorithm of Thoughts (AoT) is its ability to transcend human working memory limitations—a crucial factor in complex problem-solving.

Unlike Chain-of-Thought (CoT), which follows a rigid linear reasoning approach, or Tree-of-Thought (ToT), which explores multiple paths but can be computationally expensive, AoT strikes a balance between structured logic and flexibility. It efficiently handles diverse sub-problems, allowing AI to consider multiple solution paths dynamically without getting stuck in inefficient loops.

Key advantages include:

- Minimal prompting, maximum efficiency – AoT performs well even with concise instructions.

- Optimized decision-making – It competes with traditional tree-search tools while using fewer computational resources.

- Balanced computational cost vs. reasoning depth – Unlike brute-force approaches, AoT selectively explores promising paths, making it suitable for real-world applications like programming, data analysis, and AI-powered assistants.

By intelligently adjusting its reasoning process, AoT ensures AI models remain efficient, adaptable, and capable of handling complex challenges beyond human memory limitations.
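A rough way to see the cost trade-off is to count how many candidate steps get followed. The sketch below assumes an idealized search tree with the same number of options at every step, which real problems rarely have; `paths_followed` and the numbers are hypothetical.

```python
def paths_followed(options_per_step, depth):
    """Count nodes visited when each level follows `options_per_step` options."""
    return sum(options_per_step ** level for level in range(1, depth + 1))


brute_force = paths_followed(options_per_step=5, depth=4)  # follow all 5 options
selective = paths_followed(options_per_step=2, depth=4)    # follow only the top 2
print(brute_force, selective)  # 780 30
```

Under these assumptions, following only the two most promising options per step visits a small fraction of the tree, which is the intuition behind selective exploration.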

Real-World Applications of Algorithm of Thoughts (AoT)

Algorithm of Thoughts (AoT) isn’t just an abstract AI concept; it has real, practical uses across multiple domains. Let’s explore some key areas where it can make a difference.

1. Programming Challenges & Code Debugging

Think about coding competitions or complex debugging sessions. Traditional AI models often get stuck when handling multi-step programming problems.

How AoT Helps: Instead of following a rigid step-by-step approach, AoT evaluates different problem-solving paths dynamically. If one approach isn’t working, it pivots and tries another.

Example: Suppose an AI is solving a dynamic programming problem in Python. If its initial solution path leads to inefficiencies, AoT enables it to reconsider and restructure the approach—leading to optimized code.
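The kind of restructuring described in this example can be shown with a textbook case. The sketch below is not AoT itself; it only contrasts an inefficient first attempt with the reworked version a model might pivot to. The Fibonacci task and the function names are assumptions chosen for illustration.

```python
from functools import lru_cache


def fib_naive(n, calls):
    """First attempt: plain recursion that recomputes the same subproblems."""
    calls[0] += 1  # count every invocation
    if n < 2:
        return n
    return fib_naive(n - 1, calls) + fib_naive(n - 2, calls)


@lru_cache(maxsize=None)
def fib_memo(n):
    """Restructured attempt: each subproblem is solved once and cached."""
    if n < 2:
        return n
    return fib_memo(n - 1) + fib_memo(n - 2)


calls = [0]
print(fib_naive(20, calls), calls[0])              # 6765 21891
print(fib_memo(20), fib_memo.cache_info().misses)  # 6765 21
```

Both versions return the same answer, but the memoized one computes each of the 21 subproblems only once instead of making 21,891 calls.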

2. Data Analysis & Decision Making

When analyzing large datasets, AI needs to filter, interpret, and make sense of complex patterns. A simple step-by-step method might miss valuable insights.

How AoT Helps: It can explore multiple angles of analysis before committing to the best conclusion, making it ideal for business intelligence or predictive analytics.

Example: Imagine an AI analyzing customer purchase patterns. Instead of relying on one predictive model, AoT allows it to test various hypotheses—such as seasonality effects, demographic preferences, and market trends—before finalizing a sales forecast.
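As a minimal sketch of testing several hypotheses before committing to one, the code below scores three simple forecasting rules on held-out data and keeps the best. The sales figures are synthetic, and the function names, the four-period season, and the choice of mean absolute error are all assumptions made for illustration.

```python
def mean_abs_error(actual, predicted):
    """Average absolute gap between actual and predicted values."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def last_value(train, horizon):
    """Hypothesis 1: sales stay at the most recent level."""
    return [train[-1]] * horizon


def overall_mean(train, horizon):
    """Hypothesis 2: sales hover around the long-run average."""
    return [sum(train) / len(train)] * horizon


def seasonal_naive(train, horizon, period=4):
    """Hypothesis 3: sales repeat the pattern of the previous season."""
    return [train[-period + (i % period)] for i in range(horizon)]


def pick_forecaster(series, holdout=4):
    """Score every hypothesis on the held-out tail and return the best one."""
    train, test = series[:-holdout], series[-holdout:]
    candidates = {
        "last_value": last_value,
        "overall_mean": overall_mean,
        "seasonal_naive": seasonal_naive,
    }
    scores = {
        name: mean_abs_error(test, forecast(train, holdout))
        for name, forecast in candidates.items()
    }
    return min(scores, key=scores.get), scores


# Synthetic quarterly sales with a repeating four-period pattern.
sales = [10, 12, 14, 20, 11, 13, 15, 21, 12, 14, 16, 22]
best, scores = pick_forecaster(sales)
print(best, scores)
```

On this synthetic series the seasonal rule has the lowest error, so a forecast built on the seasonality hypothesis would be the one finalized.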

3. AI-Powered Assistants & Chatbots

Current AI assistants sometimes struggle with complex, multi-turn conversations. They either forget previous context or stick too rigidly to one train of thought.

How AoT Helps: By balancing structured reasoning with adaptive exploration, AoT allows chatbots to handle ambiguous queries better.

Example: If a user asks a finance AI assistant about investment strategies, AoT enables it to weigh multiple options—stock investments, real estate, bonds—before providing a well-rounded answer tailored to the user’s risk appetite.

A Paradigm Shift in AI Reasoning

AoT marks a notable shift away from traditional supervised learning by integrating the search process into the model’s own reasoning. With ongoing advancements in prompt engineering, researchers anticipate that this approach can empower models to efficiently tackle complex real-world problems while also contributing to a reduction in their carbon footprint.

Microsoft’s Strategic Position

Given Microsoft’s substantial investments in the realm of AI, the integration of AoT into advanced systems such as GPT-4 seems well within reach. While the endeavor of teaching language models to emulate human thought processes remains challenging, the potential for transformation in AI capabilities is undeniably significant.

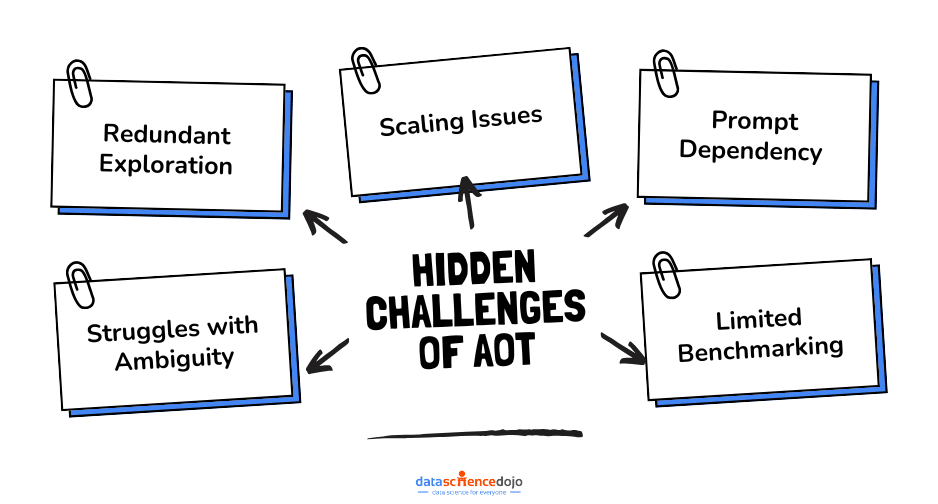

Limitations of AoT

While AoT offers clear advantages, it’s not a magic bullet. Here are some challenges to consider:

1. Computational Overhead

Since AoT doesn’t follow just one direct path (like Chain-of-Thought), it requires more processing power to explore multiple possibilities. This can slow down real-time applications, especially in environments with limited computing resources.

Example: In mobile applications or embedded systems, where processing power is constrained, AoT’s exploratory nature could make responses slower than traditional methods.

2. Complexity in Implementation

Building an effective AoT model requires careful tuning. Simply adding more “thought paths” can lead to excessive branching, making the AI inefficient rather than smarter.

Example: If an AI writing assistant uses AoT to generate content, too much branching might cause it to get lost in irrelevant alternatives rather than producing a clear, concise output.

3. Potential for Overfitting

By evaluating multiple solutions, AoT runs the risk of over-optimizing for certain problems while ignoring simpler, more generalizable approaches.

Example: In AI-driven medical diagnosis, if AoT explores too many rare conditions instead of prioritizing common diagnoses first, it might introduce unnecessary complexity into the decision-making process.

Wrapping up

In summary, AoT presents a wide range of potential applications. Its capacity to transform the approach of Large Language Models (LLMs) to reasoning spans diverse domains, ranging from conventional problem-solving to tackling complex programming challenges. By incorporating algorithmic pathways, LLMs can now consider multiple solution avenues, utilize model backtracking methods, and evaluate the feasibility of various subproblems. In doing so, AoT introduces a novel paradigm in in-context learning, effectively bridging the gap between LLMs and algorithmic thought processes.