Concerns about AI replacing jobs have become more prominent as we enter the fourth industrial revolution. Historically, every technological revolution has disrupted the job market—eliminating certain roles while creating new ones in unpredictable areas.

This pattern has been observed for centuries, from the introduction of the horse collar in Europe, through the Industrial Revolution, and up to the current digital age. With each technological advance, fears arise about job losses, but history suggests that technology is, in the long run, a net creator of jobs.

The agricultural revolution, for example, led to a decline in farming jobs but gave rise to an increase in manufacturing roles. Similarly, the rise of the automobile industry in the early 20th century led to the creation of multiple supplementary industries, such as filling stations and automobile repair, despite eliminating jobs in the horse-carriage industry.

The Fourth Industrial Revolution: Generative AI’s Impact on Jobs and Communities

The introduction of personal computers and the internet followed a similar pattern, with an estimated net gain of 15.8 million jobs in the U.S. over the last few decades. Now, with the arrival of generative AI and robotics, we are entering the fourth industrial revolution. The following figures show the scale of the shift:

- Generative AI could add the equivalent of $2.6 trillion to $4.4 trillion in value annually across the 63 use cases analyzed.

- Current generative AI technologies have the potential to automate work activities that absorb 60 to 70 percent of employees’ time today, which is a significant increase from the previous estimate that technology has the potential to automate half of the time employees spend working.

The impact of generative AI will be felt in almost every industry worldwide, with the largest effects expected in banking, high tech, and life sciences. Many people are likely to lose their jobs as a result, and some companies are already laying off workers.

More concerning still, this impact will not fall evenly across communities.

The Concern: AI Replacing Jobs in Communities of Color

Generative AI is estimated to generate around $7 trillion in wealth annually worldwide, with nearly $2 trillion of that projected to benefit the United States.

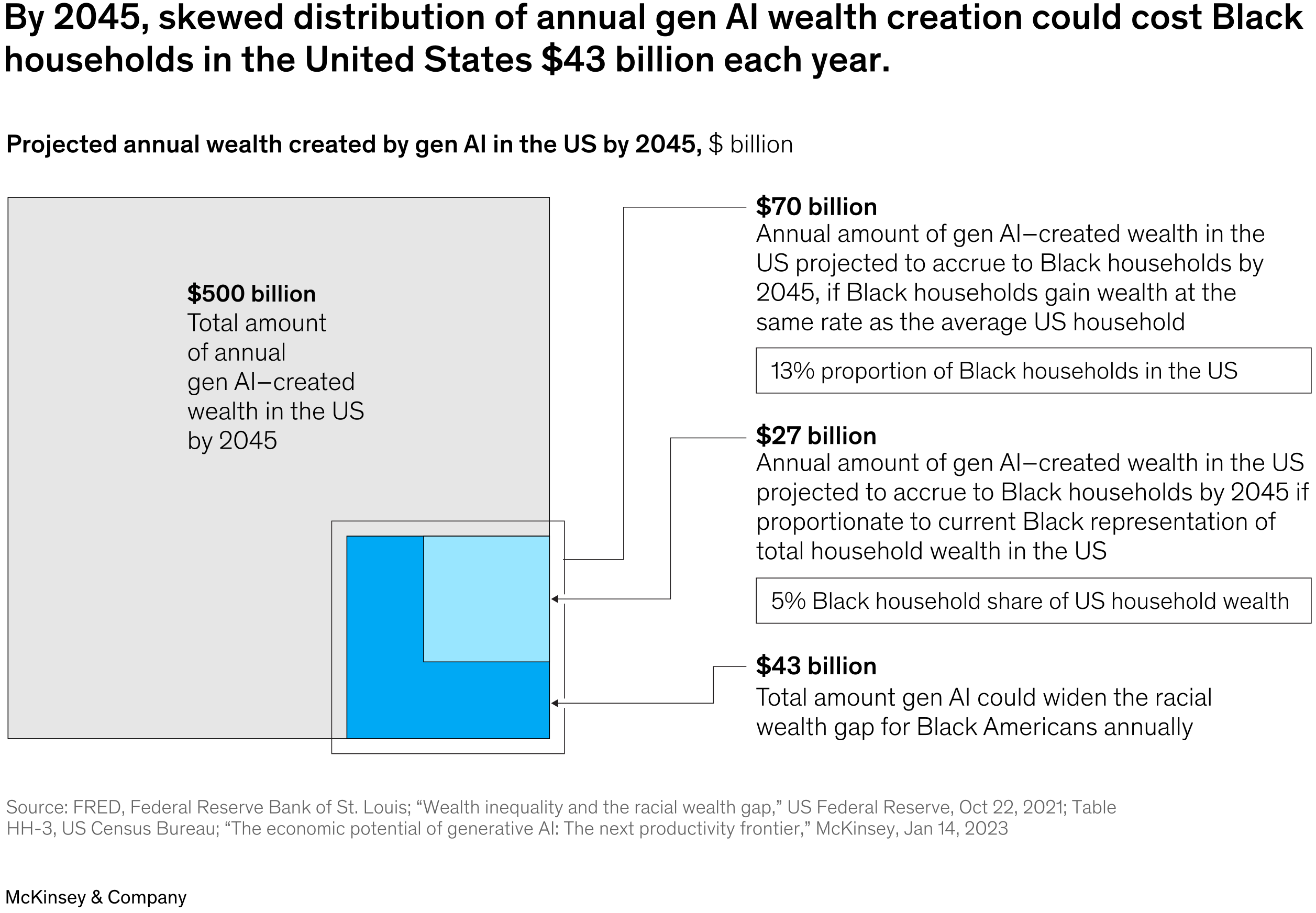

US household wealth captures about 30 percent of US GDP, suggesting the United States could gain nearly $500 billion in household wealth from gen AI value creation. This would translate to an average of $3,400 in new wealth for each of the projected 143.4 million US households in 2045.
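
The per-household figure above is a simple division, and a minimal sketch can make the arithmetic explicit. The constant and function names below are illustrative, and the inputs are the rounded figures quoted in the text, so the result is only approximate. Because the text says "nearly $500 billion", using the full $500 billion gives a value slightly above the quoted $3,400.

```python
# Rounded figures quoted in the article; the names are illustrative only.
NEW_HOUSEHOLD_WEALTH_USD = 500e9     # "nearly $500 billion" in new household wealth
PROJECTED_HOUSEHOLDS_2045 = 143.4e6  # projected number of US households in 2045


def wealth_per_household(total_wealth: float, households: float) -> float:
    """Return the average new wealth per household."""
    if households <= 0:
        raise ValueError("households must be positive")
    return total_wealth / households


average = wealth_per_household(NEW_HOUSEHOLD_WEALTH_USD, PROJECTED_HOUSEHOLDS_2045)
print(f"Average new wealth per household: ${average:,.0f}")
```

This is an average, so it says nothing about how the new wealth is actually distributed across households.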

However, black Americans capture only about 38 cents of every dollar of new household wealth despite representing 13 percent of the US population. If this trend continues, by 2045, the racially disparate distribution of new wealth created by generative AI could increase the wealth gap between black and white households by $43 billion annually.

Higher Employment of the Black Community in High-Mobility Jobs

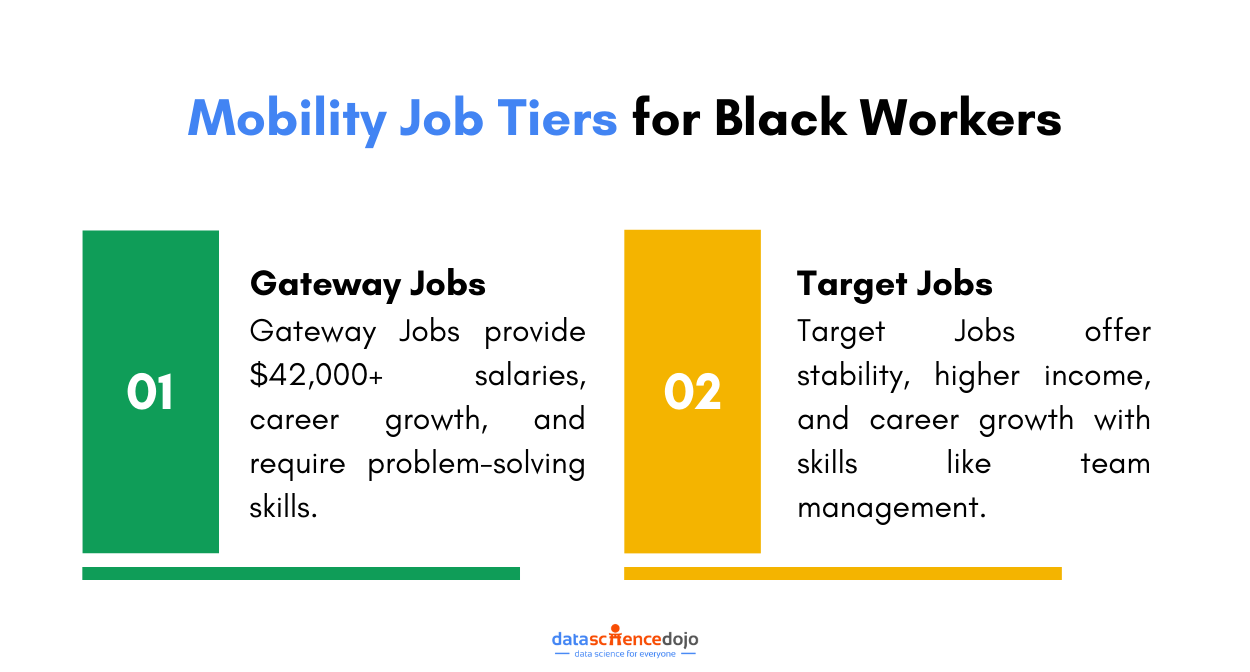

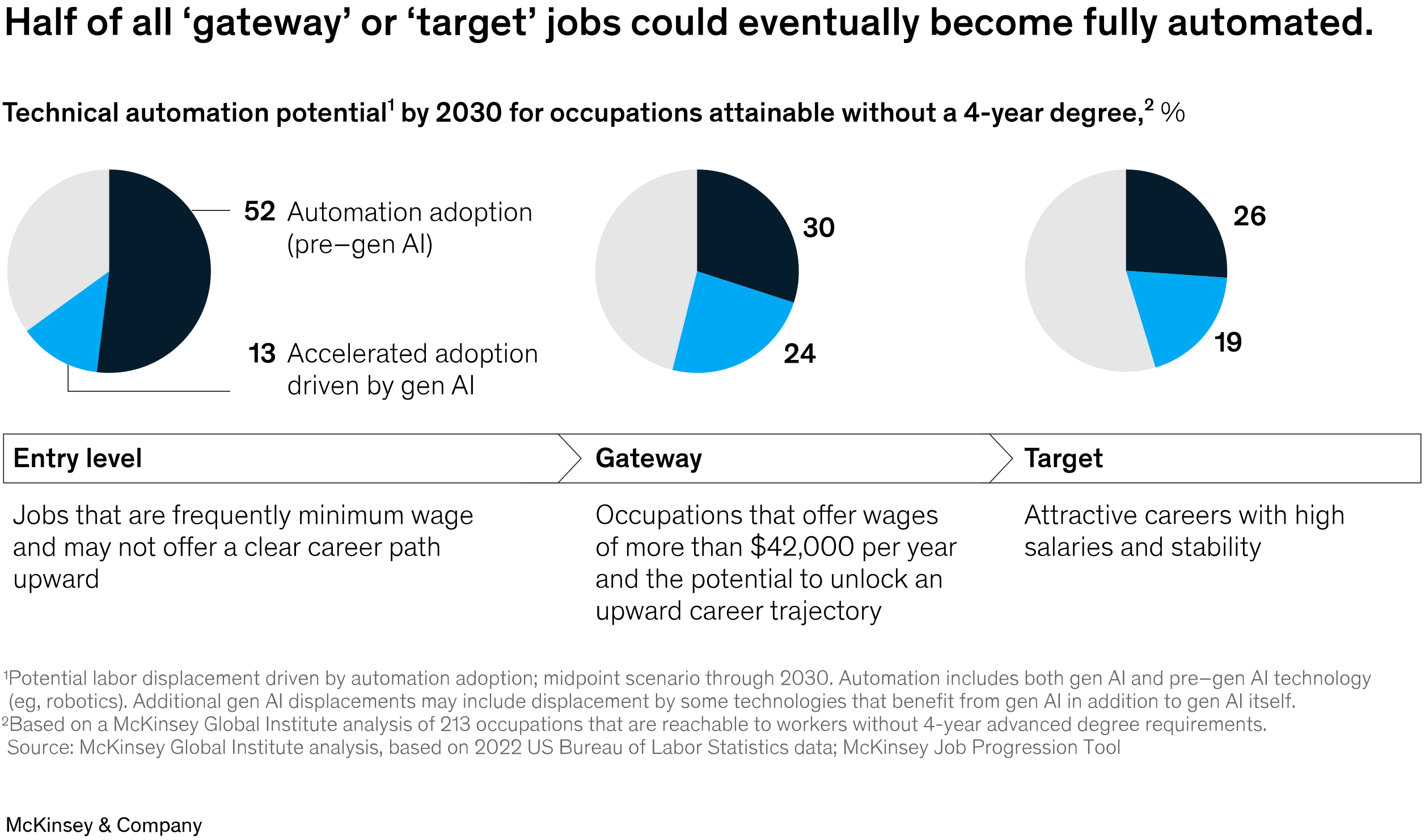

Mobility jobs are those that provide livable wages and the potential for upward career development over time without requiring a four-year college degree. They fall into two tiers: gateway jobs and target jobs.

Gateway Jobs

Gateway jobs are positions that do not require a four-year college degree and are obtained on the basis of experience. They pay more than $42,000 per year and can open a path to upward career mobility.

An example of a gateway job could be a role in customer support, where an individual has significant experience in client interaction and problem-solving.

Target Jobs

Target jobs represent the next level up for people without degrees. These are attractive occupations in terms of risk and income, offering generally higher annual salaries and stable positions.

An example of a target job might be a production supervision role, where a worker oversees manufacturing processes and manages a team on the production floor.

The Effect of Generative AI on Mobility Job Tiers

Generative AI may significantly affect these occupations, as many of the tasks associated with them—including customer support, production supervision, and office support—are precisely what generative AI can do well.

For black workers, this is particularly relevant. Seventy-four percent of black workers do not have college degrees, yet in the past five years, one in every eight has moved to a gateway or target job.

However, at some point between 2030 and 2060, generative AI may be able to perform about half of the gateway or target jobs that many workers without degrees have pursued. This could close a pathway to upward mobility that many black workers have relied on, so that AI disproportionately replaces jobs in communities of color.

Furthermore, coding boot camps and training, which have risen in popularity and have unlocked access to high-paying jobs for many workers without college degrees, are also at risk of disruption as gen AI-enabled programming has the potential to automate many entry-level coding positions.

These shifts could potentially widen the racial wealth gap and increase inequality if not managed thoughtfully and proactively. Therefore, it is crucial for initiatives to be put in place to support black workers through this transition, such as reskilling programs and the development of “future-proof skills”.

These skills include socioemotional abilities, physical presence skills, and the ability to engage in nuanced problem-solving in specific contexts. Focusing efforts on developing non-automatable skills will better position black workers for the rapid changes that generative AI will bring.

Harnessing Generative AI to Bridge the Racial Wealth Gap in the U.S.

Despite these foreseeable downsides, generative AI also has the potential to help close the racial wealth gap in the United States if its capabilities are applied across the sectors that influence economic mobility for black communities.

In healthcare, generative AI can improve access to care and outcomes for black Americans, addressing issues such as preterm births and enabling providers to identify risk factors earlier.

In financial inclusion, gen AI can enhance access to banking services, helping black consumers connect with traditional banking and save on fees associated with nonbank financial services.

Key Areas Where Generative AI Can Drive Change

AI can be applied to the eight pillars of black economic mobility, including credit and ecosystem development for small businesses, health, workforce and jobs, pre–K–12 education, the digital divide, affordable housing, and public infrastructure.

Thoughtful application of gen AI can generate personalized financial plans and marketing, support the creation of long-term financial plans, and enhance compliance monitoring to ensure equitable access to financial products.

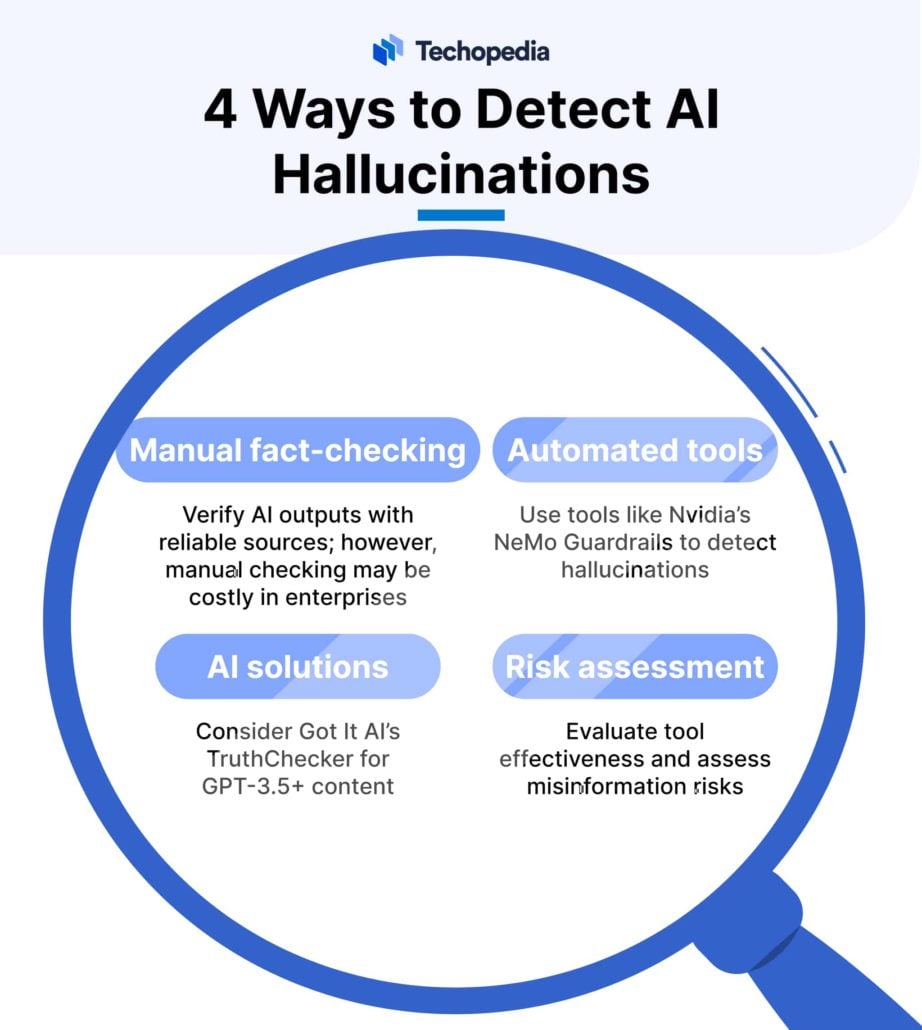

However, to truly close the racial wealth gap, generative AI must be deployed with an equity lens. This involves reskilling workers, ensuring that AI is used in contexts where it can make fair decisions, and establishing guardrails to protect black and marginalized communities from potential negative impacts of the technology.

Democratized access to generative AI and the cultivation of diverse tech talent are also critical to ensuring that the benefits of gen AI are equitably distributed.

Embracing the Future: Ensuring Equity in the Generative AI Era

In conclusion, the advent of generative AI presents a complex and multifaceted challenge, particularly for the black community. While it offers immense potential for economic growth and innovation, it also poses a significant risk of exacerbating existing inequalities and widening the racial wealth gap.

To harness the benefits of this technological revolution while mitigating its risks, it is crucial to implement inclusive strategies. These should focus on reskilling programs, equitable access to technology, and the development of non-automatable skills.

By doing so, we can ensure that generative AI becomes a tool for promoting economic mobility and reducing disparities, rather than an instrument that deepens them. The future of work in the era of generative AI demands not only technological advancement but also a commitment to social justice and equality.