As the world becomes more interconnected and data-driven, the demand for real-time applications has never been higher. Artificial intelligence (AI) and natural language processing (NLP) technologies are evolving rapidly to manage live data streams.

They power everything from chatbots and predictive analytics to dynamic content creation and personalized recommendations. LangChain is a framework that simplifies the development of these real-time AI applications.

In this blog, we’ll explore the concept of streaming in LangChain, how to set it up, and why it’s essential for building responsive AI systems that react instantly to user input and real-time data.

What is Streaming in LangChain?

In the context of LangChain, streaming refers to processing data continuously as it is received and returning output incrementally as it is generated, rather than processing data in large batches at scheduled intervals. This approach is essential for applications that require immediate, context-aware responses or real-time insights.

Streaming enables developers to build applications that react dynamically to ever-changing inputs. For example, a LangChain application can respond to live data such as real-time user queries, sensor readings, financial market movements, or a continuous feed of social media posts.

Unlike batch processing systems, which require collecting data over a period of time before generating output, streaming allows applications to process data instantly as it arrives, ensuring up-to-the-minute responses and analyses.

By leveraging LangChain’s streaming functionality, developers can build systems for:

- Real-time Chatbots: AI-powered chatbots that can continuously process user input and deliver immediate, contextually relevant responses without delay.

- Live Data Analysis: Applications that can analyze and act on continuously flowing data, such as financial market updates, weather reports, or social media feeds, in real time.

- Interactive Experiences: Dynamic, real-time interactions in gaming, virtual assistants, or customer service applications, where the system provides instant feedback and adapts to user queries as they happen.

In short, LangChain’s streaming functionality lets developers build applications that process input as it arrives and return timely, context-aware responses, which makes for smarter and more responsive systems.

Why Does Streaming Matter in LangChain?

Traditional batch processing workflows often introduce delays in response time. In many modern AI applications, where user interaction is central, this delay makes the application feel slow. Streaming in LangChain provides immediate feedback by returning output while data is still being processed, which makes applications more interactive.

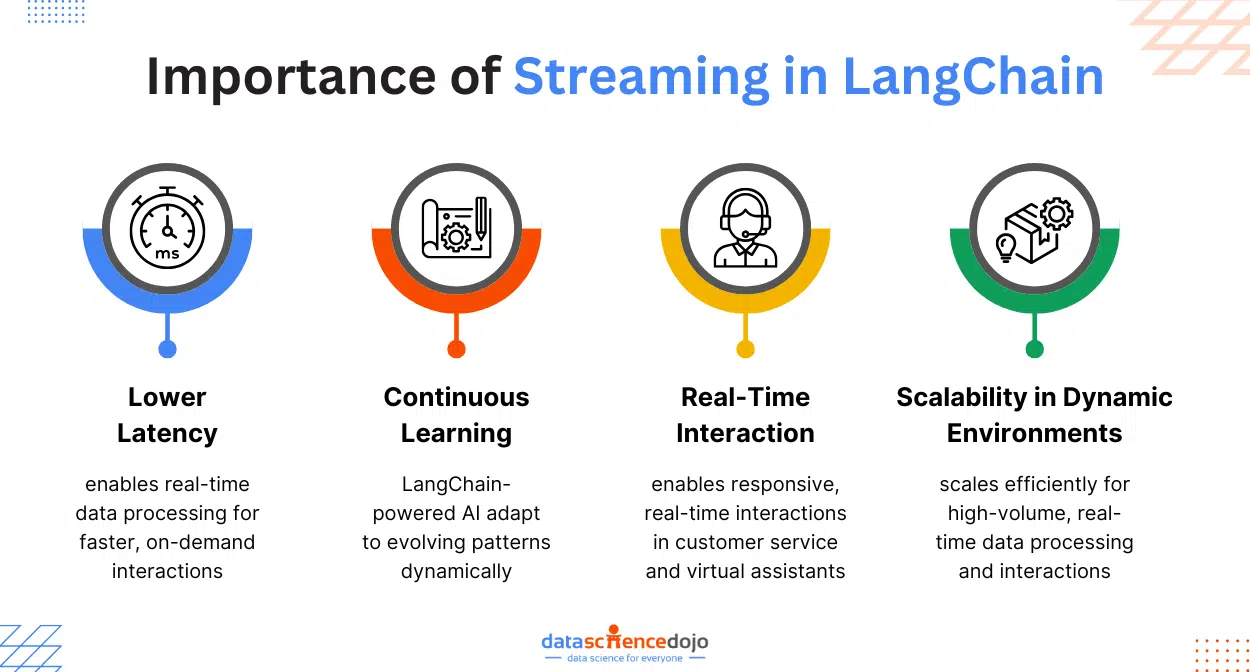

Here’s why streaming is particularly important in LangChain:

Lower Latency

Streaming reduces the time users wait before they see the first piece of output. In real-time applications, such as a customer service chatbot or live data monitoring system, reducing this latency is crucial for providing quick, on-demand responses. With LangChain, you can process data as it arrives and show output chunk by chunk as it is generated, rather than waiting for the complete response.

Continuous Learning

Streaming does not retrain the underlying model, but real-time data streams let an application feed fresh information into each request as it becomes available. This means that LangChain-powered systems can better respond to emerging trends, shifts in user behavior, or changing market conditions.

This is especially useful for applications like recommendation engines or predictive analytics systems, where the output must reflect new patterns over time.

Real-Time Interaction

Whether it’s engaging with customers, analyzing live events, or responding to user queries, streaming enables more natural, responsive interactions. This capability is particularly valuable in customer service applications, virtual assistants, or interactive digital experiences where users expect instant, contextually aware responses.

Scalability in Dynamic Environments

LangChain’s streaming functionality is well-suited for applications that need to scale and handle large volumes of data in real time. Whether you’re processing high-frequency data streams or managing a growing number of concurrent user interactions, streaming helps keep the application responsive under load, provided the underlying infrastructure is sized for it.

Let’s dig deeper into setting up the streaming process.

How to Set Up Streaming in LangChain?

Setting up streaming in LangChain is straightforward. LangChain provides two main APIs for streaming outputs as they are produced, which makes it easy to add real-time behavior to your workflows.

These APIs are supported by any component that implements the Runnable Interface, including Large Language Models (LLMs) and LangGraph workflows.

- sync stream and async astream: Stream outputs from individual Runnables (like a chatbot model) as they are generated or stream entire workflows created with LangGraph.

- async astream_events: This API provides access to custom events and intermediate outputs from LLM applications built with LCEL (LangChain Expression Language).
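To make the difference between the sync and async APIs concrete, here is a minimal sketch of how stream and astream are typically consumed. It makes one loud assumption: FakeRunnable is a hypothetical stand-in written for this post, not a LangChain class. It only mimics the stream/astream method names so the example runs without an API key; a real chat model would be used the same way.

```python
import asyncio
from types import SimpleNamespace


class FakeRunnable:
    """Hypothetical stand-in that mimics the stream/astream method names of a
    LangChain Runnable. It is NOT part of LangChain."""

    def __init__(self, pieces):
        self.pieces = pieces

    def stream(self, prompt):
        # Sync API: a regular generator that yields chunks one at a time.
        for piece in self.pieces:
            yield SimpleNamespace(content=piece)

    async def astream(self, prompt):
        # Async API: an async generator, consumed with `async for`.
        for piece in self.pieces:
            await asyncio.sleep(0)  # hand control back to the event loop
            yield SimpleNamespace(content=piece)


def collect_sync(runnable, prompt):
    """Consume the sync stream and join the chunks into one string."""
    return "".join(chunk.content for chunk in runnable.stream(prompt))


async def collect_async(runnable, prompt):
    """Consume the async stream and join the chunks into one string."""
    parts = []
    async for chunk in runnable.astream(prompt):
        parts.append(chunk.content)
    return "".join(parts)


if __name__ == "__main__":
    demo = FakeRunnable(["Stream", "ing ", "works"])
    print(collect_sync(demo, "hi"))
    print(asyncio.run(collect_async(demo, "hi")))
```

The sync version suits scripts and notebooks, while the async version fits web servers that handle many concurrent users on one event loop.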

Here’s a basic example that implements streaming on the LLM response:

Prerequisites:

- Install Python: Make sure you have Python 3.8 or later installed.

- Install LangChain: Ensure that LangChain is installed in your Python environment. You can install it by running: pip install langchain_community

- Install OpenAI: This is optional and required only if you want to use the OpenAI API.

Setting up LLM for streaming:

- Begin by importing the required libraries

- Set up your OpenAI API key (if you wish to use an OpenAI API)

- Make sure the model you want to use supports streaming. Import your model with the “streaming” attribute set to “True”.

- Create a function that streams the response chunk by chunk using LangChain’s stream() method.

- Finally, call the function on a query/prompt to stream the response.
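The steps above can be sketched roughly as follows. Treat this as an illustration under stated assumptions rather than the notebook’s exact code: it assumes the langchain_openai integration package is installed, that OPENAI_API_KEY is set in the environment, and the model name is only a placeholder. The real model is built lazily, so the streaming function itself can be tried with any object that has a stream() method.

```python
import os


def build_llm():
    """Create a chat model with streaming enabled."""
    # Assumption: the langchain_openai package is installed. It is imported
    # lazily so the rest of this sketch runs without it.
    from langchain_openai import ChatOpenAI

    # Assumption: the model name is a placeholder; use one you have access to.
    return ChatOpenAI(model="gpt-4o-mini", streaming=True)


def stream_response(llm, prompt):
    """Print the response chunk by chunk and return the full text."""
    parts = []
    for chunk in llm.stream(prompt):
        # Chat models yield message chunks with a .content attribute;
        # plain LLMs yield strings, so fall back to the chunk itself.
        text = getattr(chunk, "content", chunk)
        print(text, end="", flush=True)
        parts.append(text)
    print()
    return "".join(parts)


# Only call the real API when a key is configured.
if __name__ == "__main__" and os.environ.get("OPENAI_API_KEY"):
    stream_response(build_llm(), "Explain streaming in one sentence.")
```

Returning the joined text alongside printing makes it easy to log or store the full response once the stream finishes.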

Sample notebook:

You can explore the full example in this Colab notebook.

Challenges and Considerations in Streaming LangChain

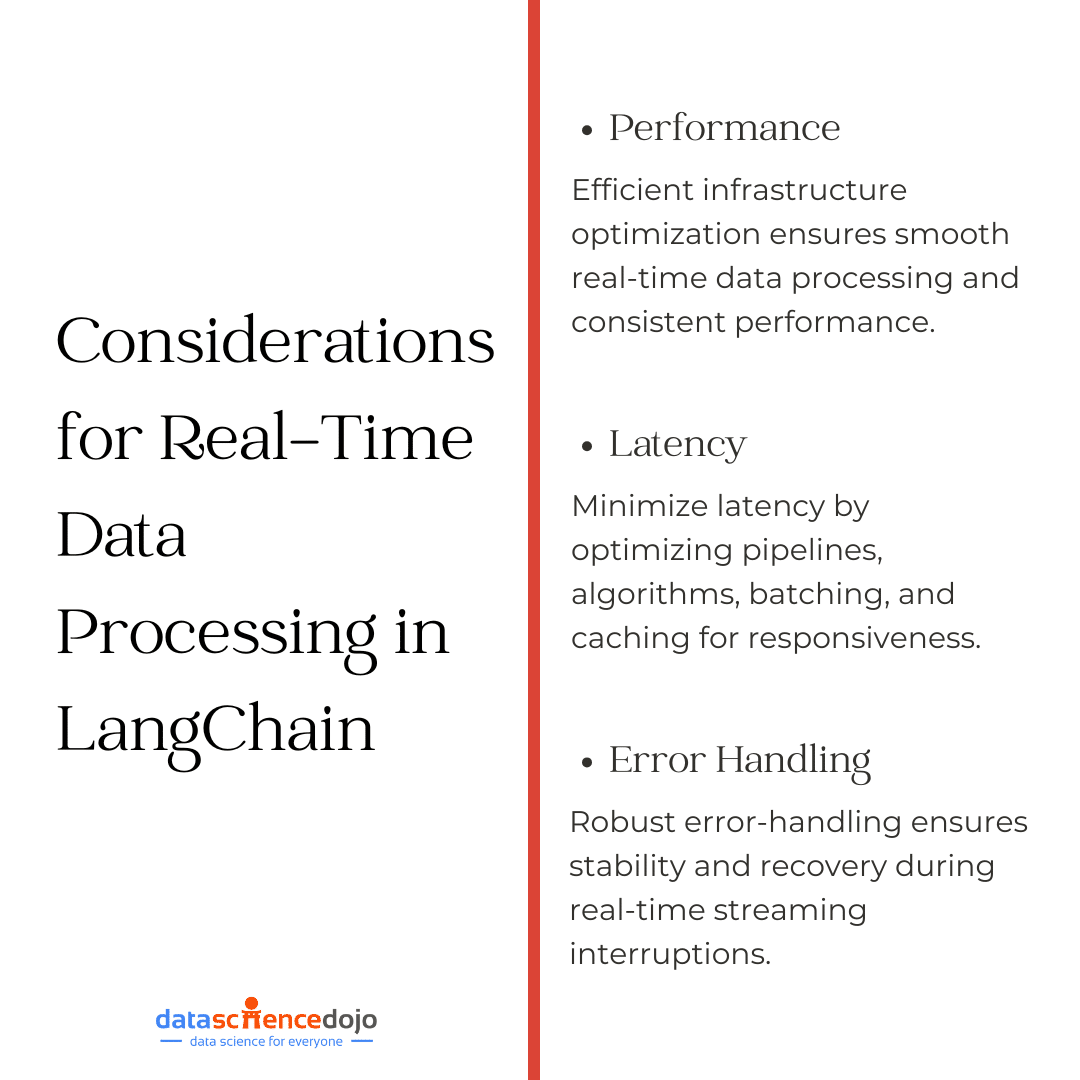

While LangChain’s streaming capabilities are powerful, there are a few challenges to keep in mind when implementing real-time data processing:

Performance

Streaming real-time data can place significant demands on system resources. To ensure smooth operation, it’s critical to optimize your infrastructure, especially when handling high data throughput. Efficient resource management will help you avoid overloading your servers and ensure consistent performance.

Latency

While streaming promises real-time processing, it can introduce latency, particularly with large or complex data streams. To reduce delays, you may need to fine-tune your data pipeline, optimize processing algorithms, and leverage techniques like batching and caching for better responsiveness.
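As a rough illustration of the batching and caching ideas mentioned above, the sketch below groups very small chunks before passing them on (which cuts per-chunk overhead such as UI updates or network writes) and replays a stored response when the same prompt is seen again. The helper names batch_chunks and cached_stream are hypothetical and written for this post; they are not LangChain APIs, and a production cache would need size limits and expiry.

```python
def batch_chunks(chunks, min_chars=20):
    """Group small chunks until at least min_chars characters are buffered."""
    buffer = []
    size = 0
    for chunk in chunks:
        buffer.append(chunk)
        size += len(chunk)
        if size >= min_chars:
            yield "".join(buffer)
            buffer, size = [], 0
    if buffer:
        # Flush whatever is left when the stream ends.
        yield "".join(buffer)


_cache = {}  # prompt -> list of chunks from a completed stream


def cached_stream(prompt, produce):
    """Replay a stored response if available, otherwise stream and store it.

    `produce` is any callable that takes the prompt and returns an iterable
    of text chunks (for example, a wrapper around a model's stream method).
    """
    if prompt in _cache:
        yield from _cache[prompt]
        return
    seen = []
    for chunk in produce(prompt):
        seen.append(chunk)
        yield chunk
    # Only reached when the stream was consumed to the end.
    _cache[prompt] = seen


if __name__ == "__main__":
    tokens = ["Str", "eam", "ing ", "in ", "small ", "pieces"]
    for piece in batch_chunks(tokens, min_chars=8):
        print(repr(piece))
```

Larger batches mean fewer updates but a slightly longer wait for each one, so the threshold is a trade-off to tune for your interface.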

Error Handling

Real-time streams can occasionally be interrupted or deliver incomplete data, which can affect the stability of your application. Implementing robust error-handling mechanisms is vital to ensure that your AI agents can recover gracefully from disruptions, providing a smooth experience even in the face of network or data issues.
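One possible way to recover from an interrupted stream is to restart it with a short backoff, as in the sketch below. The function stream_with_retry is a hypothetical helper, not a LangChain API, and the default exception types are an assumption: real model providers raise their own error classes, which you would pass in through retry_on. Because a restarted generation starts from scratch, the partial output of a failed attempt is discarded.

```python
import time


def stream_with_retry(start_stream, max_attempts=3, base_delay=0.5,
                      retry_on=(ConnectionError, TimeoutError)):
    """Consume a stream fully, restarting it if it fails partway through.

    `start_stream` is a zero-argument callable that returns a fresh iterable
    of chunks, e.g. `lambda: llm.stream(prompt)`.
    """
    last_error = None
    for attempt in range(1, max_attempts + 1):
        parts = []  # partial output from a failed attempt is thrown away
        try:
            for chunk in start_stream():
                # Accept both message chunks (.content) and plain strings.
                parts.append(getattr(chunk, "content", chunk))
            return "".join(parts)
        except retry_on as exc:
            last_error = exc
            if attempt < max_attempts:
                # Exponential backoff: base_delay, 2x, 4x, ...
                time.sleep(base_delay * 2 ** (attempt - 1))
    raise RuntimeError(
        f"stream failed after {max_attempts} attempts"
    ) from last_error
```

If you show chunks to the user as they arrive, you would also need to clear or mark the partial text before a retry, which this sketch leaves out.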

Summing It Up

Streaming with LangChain opens exciting new possibilities for building dynamic, real-time AI applications. Whether you are developing intelligent chatbots, analyzing live data, or creating interactive user experiences, LangChain’s streaming capabilities empower you to build more responsive and adaptive LLM systems.

The ability to process and react to data in real time gives you a significant edge in creating smarter applications that can adapt as they interact with users or other data sources.

As LangChain continues to evolve, we can expect even more robust tools to handle streaming data efficiently. Future updates may include advanced integrations with various streaming services, enhanced memory management, and better scalability for large-scale, high-performance applications.

If you’re ready to explore the world of real-time data processing and leverage LangChain’s streaming power, now is the time to dive in and start creating next-gen AI solutions.