Large language models (LLMs), such as OpenAI’s GPT-4, are swiftly metamorphosing from mere text generators into autonomous, goal-oriented entities displaying intricate reasoning abilities. This crucial shift carries the potential to revolutionize the manner in which humans connect with AI, ushering us into a new frontier.

This blog breaks down how these agents work and illustrates their impact within LangChain, a framework for building LLM applications.

Working of the Agents

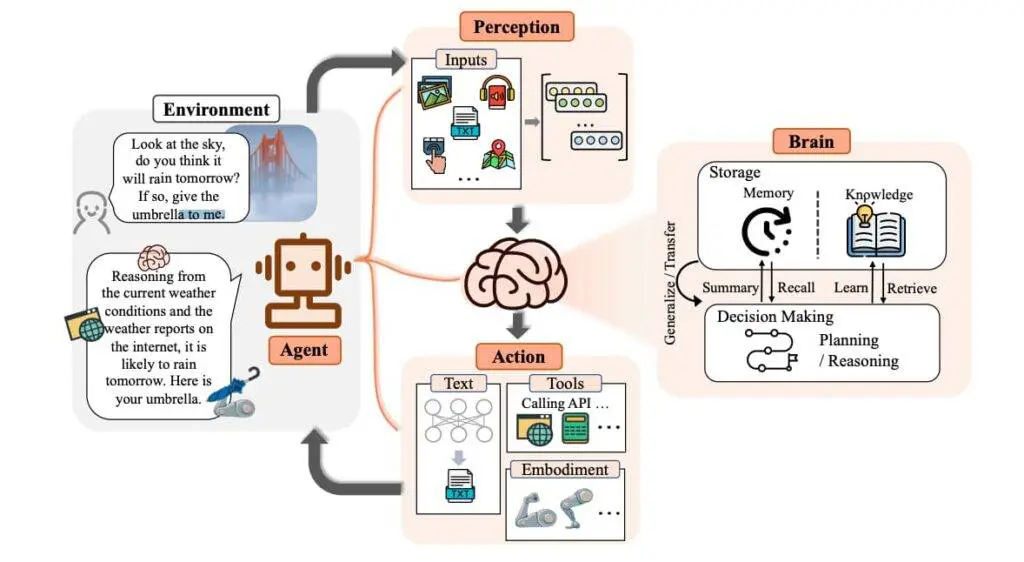

Our exploration into the realm of LLM agents begins with understanding the key elements of their structure, namely the LLM core, the Prompt Recipe, the Interface and Interaction, and Memory. The LLM core forms the fundamental scaffold of an LLM agent. It is a neural network trained on a large dataset, serving as the primary source of the agent’s abilities in text comprehension and generation.

The functionality of these agents heavily relies on prompt engineering. Prompt recipes are carefully crafted sets of instructions that shape the agent’s behaviors, knowledge, goals, and persona and embed them in prompts.
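
As a minimal sketch of what a prompt recipe can look like in code, the snippet below assembles a persona, goals, and instructions into a single prompt string. The `PromptRecipe` class and its field names are hypothetical and chosen only for illustration; they are not part of LangChain.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PromptRecipe:
    """Hypothetical container for the parts of a prompt recipe."""
    persona: str
    goals: List[str]
    instructions: List[str] = field(default_factory=list)

    def render(self, user_input: str) -> str:
        """Embed persona, goals, and instructions in a single prompt."""
        lines = [f"You are {self.persona}.", "Goals:"]
        lines += [f"- {goal}" for goal in self.goals]
        if self.instructions:
            lines.append("Instructions:")
            lines += [f"- {rule}" for rule in self.instructions]
        lines.append(f"User: {user_input}")
        return "\n".join(lines)


recipe = PromptRecipe(
    persona="a concise research assistant",
    goals=["answer factual questions"],
    instructions=["cite the tool you used"],
)
prompt = recipe.render("What is the capital of France?")
print(prompt)
```

Keeping the recipe as structured data rather than one long string makes it easier to change the persona or add an instruction without rewriting the whole prompt.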

The agent’s interaction with the outer world is dictated by its user interface, which can range from command-line and graphical to conversational interfaces. For fully autonomous systems, prompts are programmatically received from other systems or entities.

Another crucial aspect of their structure is the inclusion of memory, which can be categorized into short-term and long-term. While the former helps the agent be aware of recent actions and conversation histories, the latter works in conjunction with an external database to recall information from the past.
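
The split between the two kinds of memory can be sketched as follows. This is an illustration under simplifying assumptions: the `AgentMemory` class is hypothetical, a bounded buffer stands in for short-term memory, and a plain dictionary stands in for the external database behind long-term memory.

```python
from collections import deque
from typing import Deque, Dict, List


class AgentMemory:
    """Hypothetical two-tier memory: a bounded buffer plus a keyed store."""

    def __init__(self, short_term_size: int = 3) -> None:
        # Short-term: only the most recent turns are kept.
        self.short_term: Deque[str] = deque(maxlen=short_term_size)
        # Long-term: a dict standing in for an external database.
        self.long_term: Dict[str, str] = {}

    def remember_turn(self, turn: str) -> None:
        self.short_term.append(turn)

    def store_fact(self, key: str, fact: str) -> None:
        self.long_term[key] = fact

    def recall(self, query: str) -> List[str]:
        """Return stored facts whose key appears in the query."""
        q = query.lower()
        return [fact for key, fact in self.long_term.items() if key.lower() in q]


memory = AgentMemory(short_term_size=2)
for turn in ["user: hi", "agent: hello", "user: what is my name?"]:
    memory.remember_turn(turn)
memory.store_fact("name", "The user's name is Ada.")

print(list(memory.short_term))            # the oldest turn has been dropped
print(memory.recall("What is my name?"))  # looked up from the long-term store
```

A production system would typically replace the keyword match with a similarity search over stored embeddings, but the division of responsibilities stays the same.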

Ingredients Involved in Agent Creation

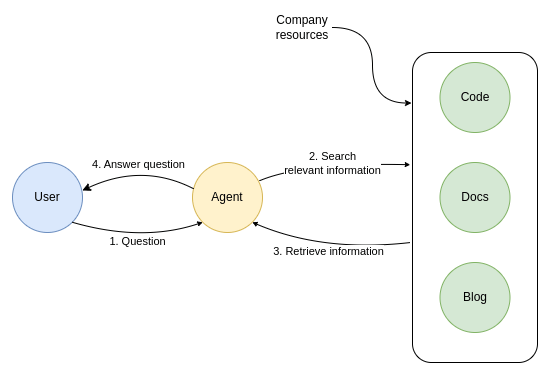

Creating robust and capable LLM agents demands integrating the core LLM with additional components for knowledge, memory, interfaces, and tools.

The LLM forms the foundation, while three key elements are required to allow these agents to understand instructions, demonstrate essential skills, and collaborate with humans: the underlying LLM architecture itself, effective prompt engineering, and the agent’s interface.

Tools

Tools are functions that an agent can invoke. There are two important design considerations around tools:

- Giving the agent access to the right tools

- Describing the tools in a way that is most helpful to the agent
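
To make the two considerations concrete, here is a small library-free sketch in which each tool pairs a callable with a name and a description, and the descriptions are rendered into text that could be placed in the agent’s prompt. The `Tool` dataclass and `describe_tools` helper are hypothetical names for illustration, not LangChain’s own classes.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Tool:
    """Hypothetical tool record: a name, a description, and a callable."""
    name: str
    description: str
    func: Callable[[str], str]


def describe_tools(tools: List[Tool]) -> str:
    """Render the tool list as text that can be placed in the agent's prompt."""
    return "\n".join(f"{tool.name}: {tool.description}" for tool in tools)


tools = [
    Tool("word_count", "Counts the words in the input text.",
         lambda text: str(len(text.split()))),
    Tool("upper", "Converts the input text to upper case.",
         lambda text: text.upper()),
]

tool_prompt = describe_tools(tools)
print(tool_prompt)
print(tools[0].func("agents call tools"))
```

The description is the only thing the model sees about a tool, which is why its wording matters as much as the function behind it.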

Without thinking through both, you won’t be able to build a working agent. If you don’t give the agent access to a correct set of tools, it will never be able to accomplish the objectives you give it. If you don’t describe the tools well, the agent won’t know how to use them properly. Some of the vital tools a working agent needs are:

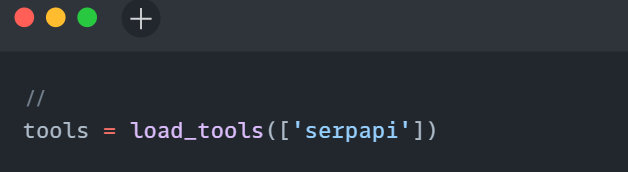

1. SerpAPI: LangChain provides a wrapper around the SerpAPI search APIs. Using it involves two parts: installation and setup, and then the SerpAPI wrapper itself. Here are the details for installation and setup:

- Install requirements with `pip install google-search-results`

- Get a SerpAPI API key and set it as an environment variable (`SERPAPI_API_KEY`)

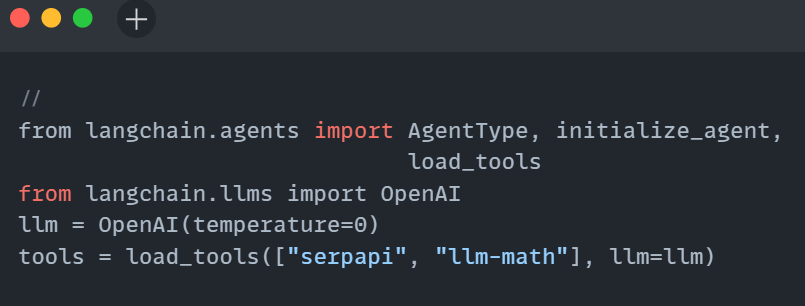

You can also load this wrapper as a tool for use with an agent by passing the name `serpapi` to LangChain’s `load_tools` function.

2. Math-tool: The llm-math tool wraps an LLM to do math operations. It can be loaded into the agent tools like:

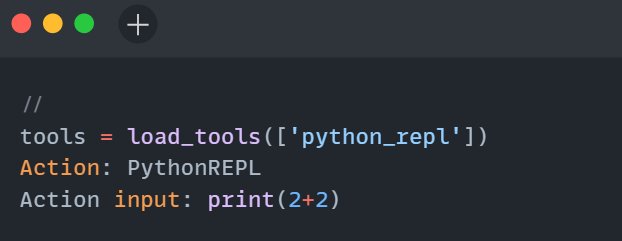
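
The idea behind a math tool can be sketched without any library: the model turns a question into an arithmetic expression, and ordinary code evaluates it. The sketch below is an illustration of that pattern, not LangChain’s implementation; `fake_llm_to_expression` is a hypothetical stand-in for the LLM call, and the evaluator is a small `ast`-based one written for this example.

```python
import ast
import operator

# Arithmetic operators the evaluator is willing to apply.
_BINARY_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Pow: operator.pow,
}


def evaluate(expression: str) -> float:
    """Evaluate a plain arithmetic expression without calling eval()."""
    def _eval(node: ast.AST) -> float:
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _BINARY_OPS:
            return _BINARY_OPS[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -_eval(node.operand)
        raise ValueError("unsupported expression")

    return _eval(ast.parse(expression, mode="eval").body)


def fake_llm_to_expression(question: str) -> str:
    """Stand-in for the LLM step; a real tool would ask the model."""
    canned = {"What is 12 times 7 plus 3?": "12 * 7 + 3"}
    return canned[question]


answer = evaluate(fake_llm_to_expression("What is 12 times 7 plus 3?"))
print(answer)
```

Evaluating the expression in code rather than asking the model for the number avoids arithmetic slips, and rejecting anything that is not plain arithmetic keeps the tool from running arbitrary code.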

3. Python-REPL tool: Allows agents to execute Python code. The agent passes code as the tool’s input; the REPL executes it and returns the output as the response.
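
What a Python REPL tool does can be sketched in a few lines of standard-library Python. This is an illustration only: `run_python` is a hypothetical helper, and calling `exec` on model-generated code is unsafe outside a sandbox.

```python
import contextlib
import io


def run_python(code: str) -> str:
    """Execute code in a fresh namespace and return whatever it printed."""
    buffer = io.StringIO()
    namespace: dict = {}
    try:
        with contextlib.redirect_stdout(buffer):
            exec(code, namespace)  # unsafe for untrusted input; sandbox in practice
    except Exception as exc:
        # Return the error as text so the agent can read it and retry.
        return f"{type(exc).__name__}: {exc}"
    return buffer.getvalue()


print(run_python("print(sum(range(5)))"))
print(run_python("1 / 0"))
```

Returning errors as text rather than raising them lets the agent see what went wrong and attempt a corrected snippet on its next step.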

The Impact of Agents

A noteworthy advantage of LLM agents is their potential to exhibit self-initiated behaviors ranging from purely reactive to highly proactive. This can be harnessed to create versatile AI partners capable of comprehending natural language prompts and collaborating with human oversight.

LLM-powered systems leverage LLMs’ innate linguistic abilities to understand instructions, context, and goals. They operate autonomously or semi-autonomously based on human prompts, and they harness tools such as calculators, APIs, and search engines to complete assigned tasks, making logical connections to work towards conclusions and solutions. Here are a few of the services in which LangChain agents are widely used:

Facilitating Language Services

Agents play a critical role in delivering language services such as translation, interpretation, and linguistic analysis. The agent’s actions are steered by encoding personas, instructions, and permissions within carefully constructed prompts.

Users then steer the agent further by offering interactive cues in response to its output. Thoughtfully designed prompts facilitate smooth collaboration between humans and AI, and the agents’ language capabilities support accurate and efficient communication across diverse languages.

Quality Assurance and Validation

Ensuring the accuracy and quality of language-related services is a core responsibility. These systems verify translations, validate linguistic data, and maintain high standards to meet user expectations. They can also manage relatively self-contained workflows with human oversight.

Agents use internal validation to verify the accuracy and coherence of their generated content. They are also tested rigorously against various datasets and scenarios. These tests validate the agent’s ability to comprehend queries, generate accurate responses, and handle diverse inputs.
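
Testing an agent against a dataset can be as simple as running it over query and expected-answer pairs and reporting the pass rate. The sketch below assumes exact-match scoring after normalising case and whitespace; `evaluate_agent` and `toy_agent` are hypothetical names, and the toy agent is a stand-in for a real one.

```python
from typing import Callable, List, Tuple


def evaluate_agent(agent: Callable[[str], str],
                   cases: List[Tuple[str, str]]) -> float:
    """Return the fraction of test cases the agent answers as expected."""
    passed = 0
    for query, expected in cases:
        # Normalise case and whitespace before comparing.
        if agent(query).strip().lower() == expected.strip().lower():
            passed += 1
    return passed / len(cases)


def toy_agent(query: str) -> str:
    """Stand-in for a real agent: translates a few English words to French."""
    lexicon = {"hello": "bonjour", "cat": "chat"}
    return lexicon.get(query.lower(), "unknown")


cases = [("hello", "Bonjour"), ("cat", "chat"), ("dog", "chien")]
accuracy = evaluate_agent(toy_agent, cases)
print(f"accuracy: {accuracy:.2f}")
```

Exact match is a strict metric; for free-form answers a similarity measure or human review is usually a better fit.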

Types of Agents

These systems leverage an LLM to determine the appropriate actions and their sequence. An action may involve using a tool and analyzing its output or generating a response for the user. Below are the available options in LangChain.

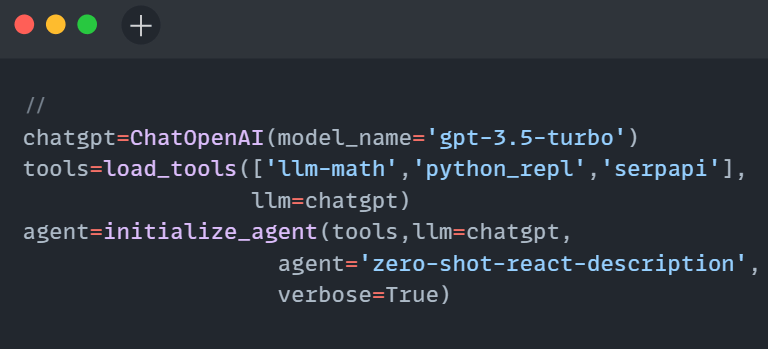

Zero-Shot ReAct: This agent uses the ReAct framework to determine which tool to use based solely on the tool’s description. Any number of tools can be provided. This agent requires that a description is provided for each tool. Below is how we can set up this Agent:

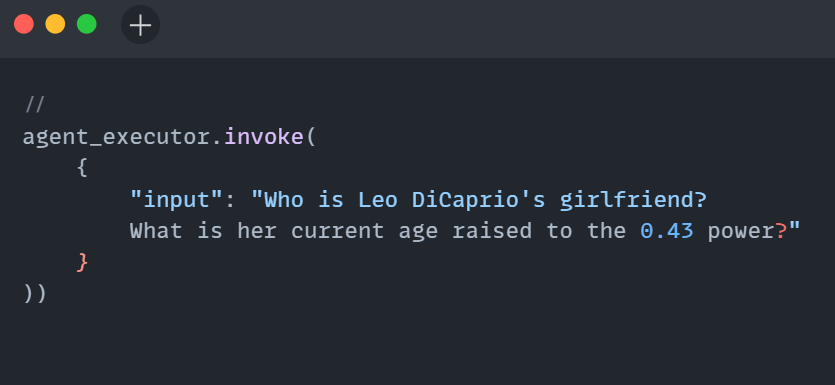

Let’s invoke this agent to check that it works in the chain:

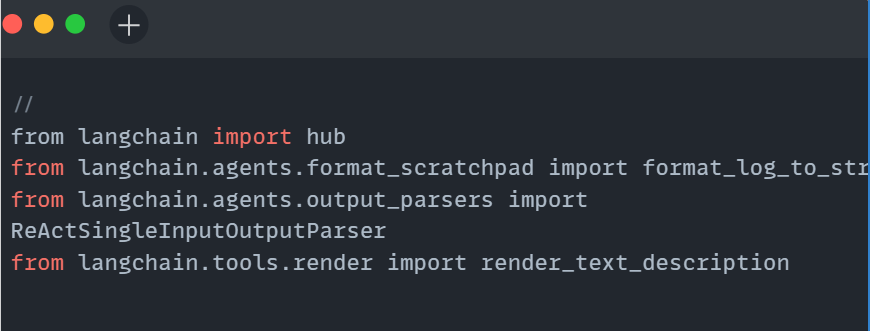
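
To show what happens inside a ReAct-style agent, here is a library-free sketch of the loop: the model emits a thought and an action, the named tool runs, and the observation is fed back until a final answer appears. It is not LangChain’s implementation. `react_loop` and `scripted_llm` are hypothetical names, and the scripted function stands in for a real LLM so the example runs offline.

```python
import re
from typing import Callable, Dict


def react_loop(llm: Callable[[str], str],
               tools: Dict[str, Callable[[str], str]],
               question: str,
               max_steps: int = 5) -> str:
    """Alternate between model output and tool calls until a final answer."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        reply = llm(transcript)
        transcript += reply + "\n"
        final = re.search(r"Final Answer:\s*(.+)", reply)
        if final:
            return final.group(1).strip()
        action = re.search(r"Action:\s*(\w+)\nAction Input:\s*(.+)", reply)
        if action is None:
            return "Could not parse the model output."
        name, tool_input = action.group(1), action.group(2).strip()
        if name in tools:
            observation = tools[name](tool_input)
        else:
            observation = f"Unknown tool: {name}"
        # Feed the tool result back so the next model call can use it.
        transcript += f"Observation: {observation}\n"
    return "Stopped: step limit reached."


def scripted_llm(transcript: str) -> str:
    """Stand-in for a real LLM: replies depend on what is in the transcript."""
    if "Observation:" not in transcript:
        return ("Thought: I should count the words.\n"
                "Action: word_count\n"
                "Action Input: large language model agents")
    observation = transcript.rsplit("Observation:", 1)[1].strip()
    return f"Thought: I have the count.\nFinal Answer: {observation}"


tools = {"word_count": lambda text: str(len(text.split()))}
result = react_loop(scripted_llm, tools,
                    "How many words are in 'large language model agents'?")
print(result)
```

The step limit matters in practice: without it, a model that never produces a final answer would keep calling tools indefinitely.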

Structured-Input ReAct: The structured tool chat agent is capable of using multi-input tools. Older agents are configured to specify an action input as a single string, but this agent can use a tool’s argument schema to create a structured action input. This is useful for more complex tool usage, like precisely navigating around a browser. Here is how one can set up the structured-input ReAct agent:

The additional imports required are:

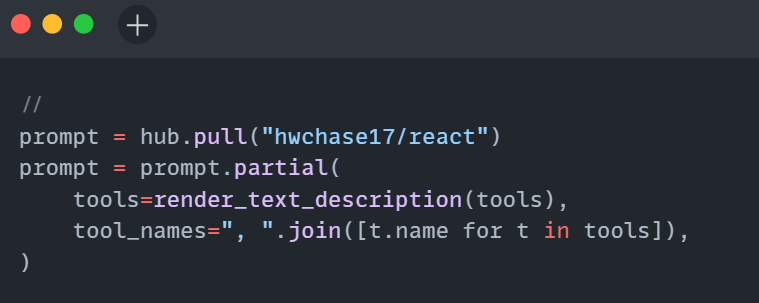

Setting up parameters:

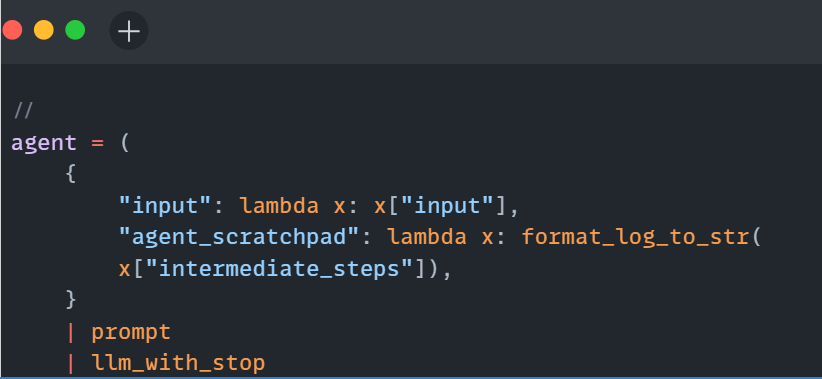

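Creating the agent: the tools and the LLM are passed to LangChain’s `initialize_agent` together with the `AgentType.STRUCTURED_CHAT_ZERO_SHOT_REACT_DESCRIPTION` agent type.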
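
As a library-free illustration of structured action input, the sketch below validates a JSON action input against a tool’s argument schema before calling the tool. `ClickArgs`, `parse_action_input`, and `click` are hypothetical names for this example, and the browser click is simulated rather than real.

```python
import json
from dataclasses import dataclass, fields


@dataclass
class ClickArgs:
    """Hypothetical argument schema for a browser-click tool."""
    selector: str
    button: str
    click_count: int


def parse_action_input(raw: str, schema: type):
    """Turn the model's JSON action input into a validated schema instance."""
    data = json.loads(raw)
    expected = {f.name: f.type for f in fields(schema)}
    if set(data) != set(expected):
        raise ValueError(f"expected fields {sorted(expected)}, got {sorted(data)}")
    for name, expected_type in expected.items():
        if not isinstance(data[name], expected_type):
            raise TypeError(f"{name} must be {expected_type.__name__}")
    return schema(**data)


def click(args: ClickArgs) -> str:
    """Stand-in tool body: report what a real browser tool would do."""
    return f"clicked {args.selector} with {args.button} button x{args.click_count}"


raw_input = '{"selector": "#submit", "button": "left", "click_count": 2}'
result = click(parse_action_input(raw_input, ClickArgs))
print(result)
```

With a single-string action input, the three arguments would have to be packed into one string and parsed by hand; a schema lets the agent supply them as named, typed fields.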

Improving Performance of an Agent

Enhancing the capabilities of LLM agents necessitates a multi-faceted approach. Firstly, it is essential to keep refining the art and science of prompt engineering, which is a key component in directing these systems securely and efficiently. As prompt engineering improves, so do the competencies of LLM agents, allowing them to venture into new spheres of AI assistance.

Secondly, integrating additional components can expand agents’ reasoning and expertise. These components include knowledge banks for updating domain-specific vocabularies, lookup tools for data gathering, and memory enhancement for retaining interactions.

Thus, increasing the autonomous capabilities of agents requires more than just improved prompts; they also need access to knowledge bases, memory, and reasoning tools.

Lastly, it is vital to maintain a clear iterative prompt cycle, which is key to facilitating natural conversations between users and LLM agents. Repeated cycling allows the LLM agent to converge on solutions, reveal deeper insights, and maintain topic focus within an ongoing conversation.

Conclusion

The advent of large language model agents marks a turning point in the AI domain. With increasing advances in the field, these agents are strengthening their footing as autonomous, proactive entities capable of reasoning and executing tasks effectively.

The applications of large language model agents are vast, from conversational chatbots to workflow automation. The main challenges include ensuring the consistency and relevance of the information the agent processes and handling personal or sensitive data with caution. Looking ahead, these systems promise more automated and efficient interaction between humans and AI.