InstructGPT is a version of the GPT (Generative Pre-trained Transformer) language models developed by OpenAI that has been fine-tuned to follow instructions. Here’s a detailed look at InstructGPT:

What is InstructGPT?

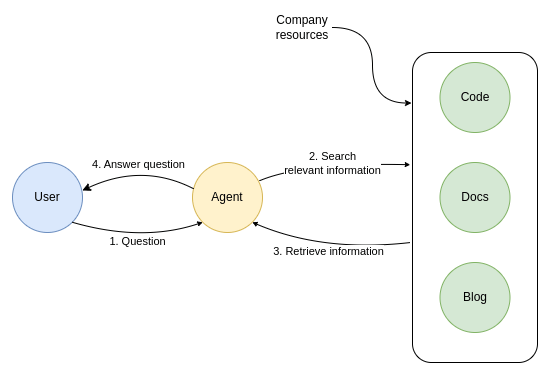

The main objective of InstructGPT is to better align AI-powered language models with human intentions by training them using Reinforcement Learning from Human Feedback (RLHF). This method improves the model’s ability to understand and follow instructions more accurately.

Target Users

InstructGPT is built for a broad range of users: developers creating AI applications, businesses using AI to improve customer service, and educational settings where clear, concise, and contextually correct language is crucial.

Key Features

- Alignment with Human Intent: The model is fine-tuned to understand and execute instructions as intended by the user.

- Enhanced Accuracy and Relevance: Because it is trained on human feedback about which responses are better, InstructGPT tends to give responses that are more accurate and contextually relevant.

- Instruction-based Task Performance: It is designed to perform structured tasks based on specific instructions.

Examples of Use

- Creating more effective chatbots that can understand and respond to user queries accurately.

- Generating educational content that can help explain complex topics in a simple manner.

- Assisting in programming by providing code explanations or generating code snippets based on a given prompt.

- Enhancing customer service by providing precise answers to customer inquiries, reducing the need for human intervention.

InstructGPT represents a significant move towards creating AI that can interact with humans more naturally and effectively, leading to a wide array of practical applications across different industries.

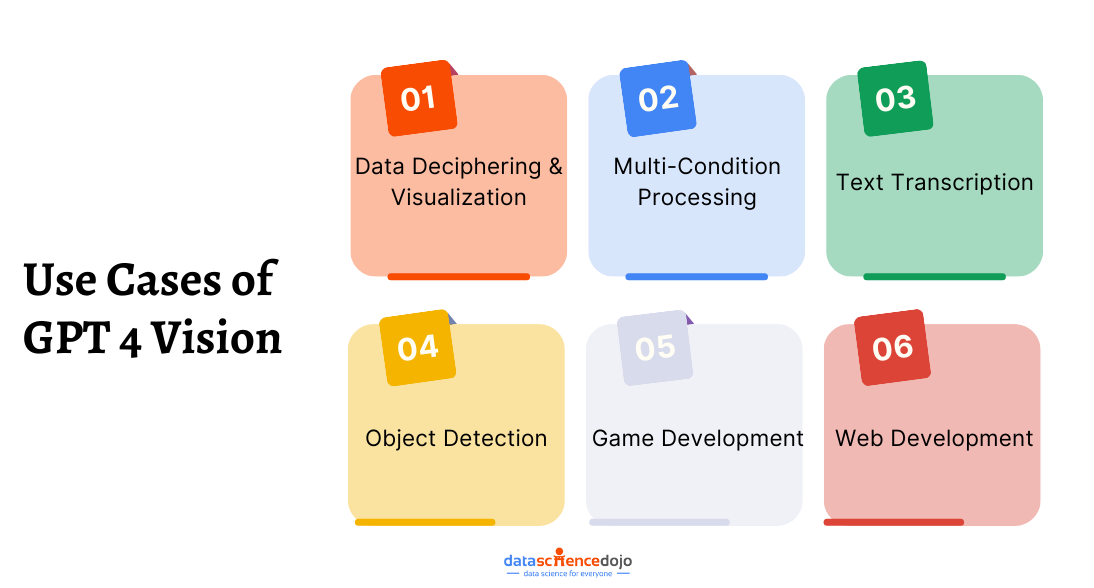

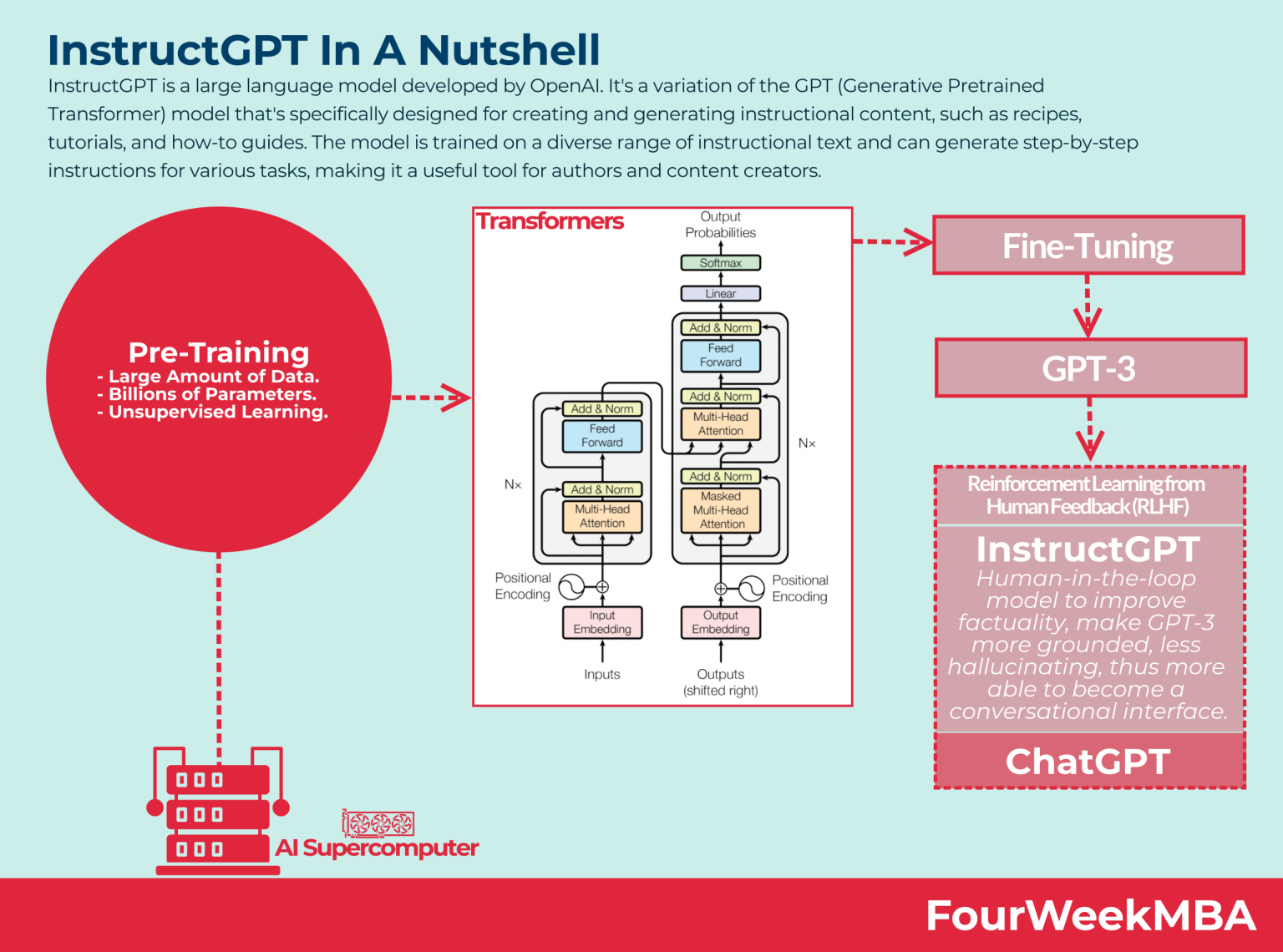

InstructGPT Architecture

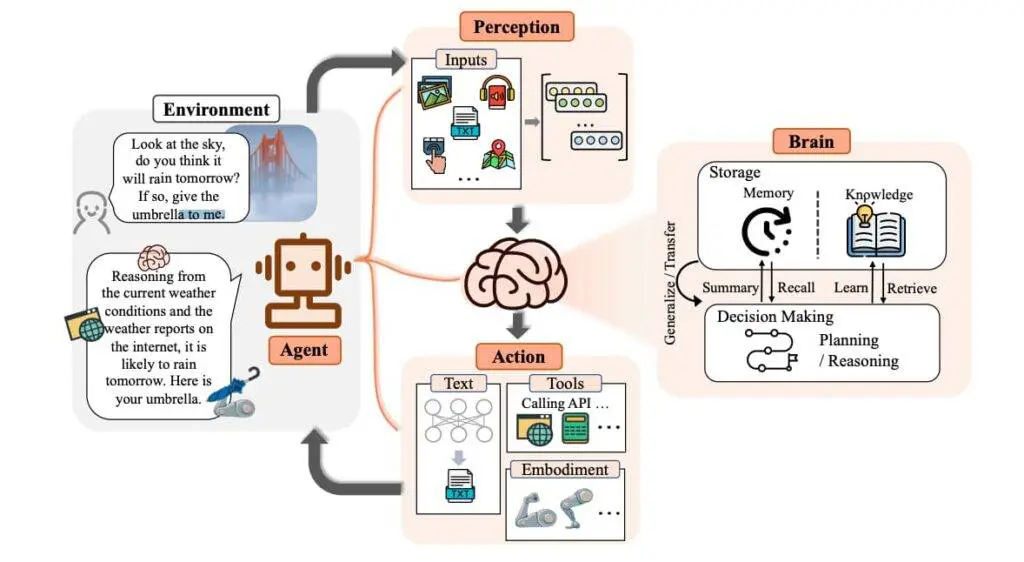

Let’s break down the architecture of InstructGPT in a way that’s easy to digest. Imagine that you’re building a really complex LEGO model. Instead of LEGO bricks, InstructGPT uses something called a transformer architecture: a stack of similar neural network layers that together help the computer understand and generate human-like text.

At the heart of this architecture are things called attention mechanisms. Think of these as little helpers inside the computer’s brain that look at each word in a sentence and decide how much weight to give every other word when working out what it means. This is important because, in language, the meaning of a word often depends on the other words around it.
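To make the "little helpers" idea concrete, here is a minimal sketch of attention for a single word, written in plain Python. It assumes made-up two-number vectors for each word and uses those vectors directly as queries, keys, and values; a real transformer learns separate projections for each and works with far larger vectors. The function names `softmax` and `attend` are illustrative, not taken from any library.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    """Scaled dot-product attention for one query vector."""
    scale = math.sqrt(len(query))
    # How strongly does the query word match each other word?
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    # Blend the value vectors according to those weights.
    dim = len(values[0])
    mixed = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, mixed

# Toy vectors for the words in "bank of the river" (assumed numbers, for illustration only).
words = ["bank", "of", "the", "river"]
vectors = [[1.0, 0.2], [0.1, 0.1], [0.0, 0.1], [0.9, 0.4]]

# Ask: which words should "bank" pay attention to?
weights, mixed = attend(vectors[0], vectors, vectors)
for word, weight in zip(words, weights):
    print(f"{word}: {weight:.2f}")
```

With these toy numbers, "bank" gives more weight to "river" than to "of" or "the", which is the kind of context-dependence the paragraph above describes.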

Now, InstructGPT takes this transformer setup and tunes it with something called Reinforcement Learning from Human Feedback (RLHF). This is like giving the computer model a coach who gives it tips on how to get better at its job. For InstructGPT, the job is to follow instructions really well.

So, the “coach” (which is actually people giving feedback) helps InstructGPT understand which answers are good and which aren’t, kind of like how a teacher helps a student understand right from wrong answers. This training helps InstructGPT give responses that are more useful and on point.
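One common way to turn "this answer is better than that one" feedback into a training signal is a pairwise preference loss on a reward model. The article does not spell out the exact formula, so treat the following as a minimal sketch under that assumption: a hypothetical reward model gives each answer a single score, and the loss is small when the answer people preferred scores higher.

```python
import math

def preference_loss(reward_chosen, reward_rejected):
    """Pairwise loss: small when the preferred answer has the higher score."""
    margin = reward_chosen - reward_rejected
    # Equivalent to -log(sigmoid(margin)), written as log(1 + exp(-margin)).
    return math.log1p(math.exp(-margin))

# Hypothetical reward scores for two answers to the same instruction.
agrees = preference_loss(reward_chosen=2.0, reward_rejected=-1.0)
disagrees = preference_loss(reward_chosen=-1.0, reward_rejected=2.0)

print(f"reward model agrees with the human ranking:    {agrees:.3f}")
print(f"reward model disagrees with the human ranking: {disagrees:.3f}")
```

Training pushes this loss down, so the reward model learns to score answers the way the human "coach" would. That learned score can then be used to guide the language model itself.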

And that’s the gist of it. InstructGPT is like a smart LEGO model built with special bricks (transformers and attention mechanisms) and coached by humans to be really good at following instructions and helping us out.

Differences Between InstructGPT, GPT-3.5, and GPT-4

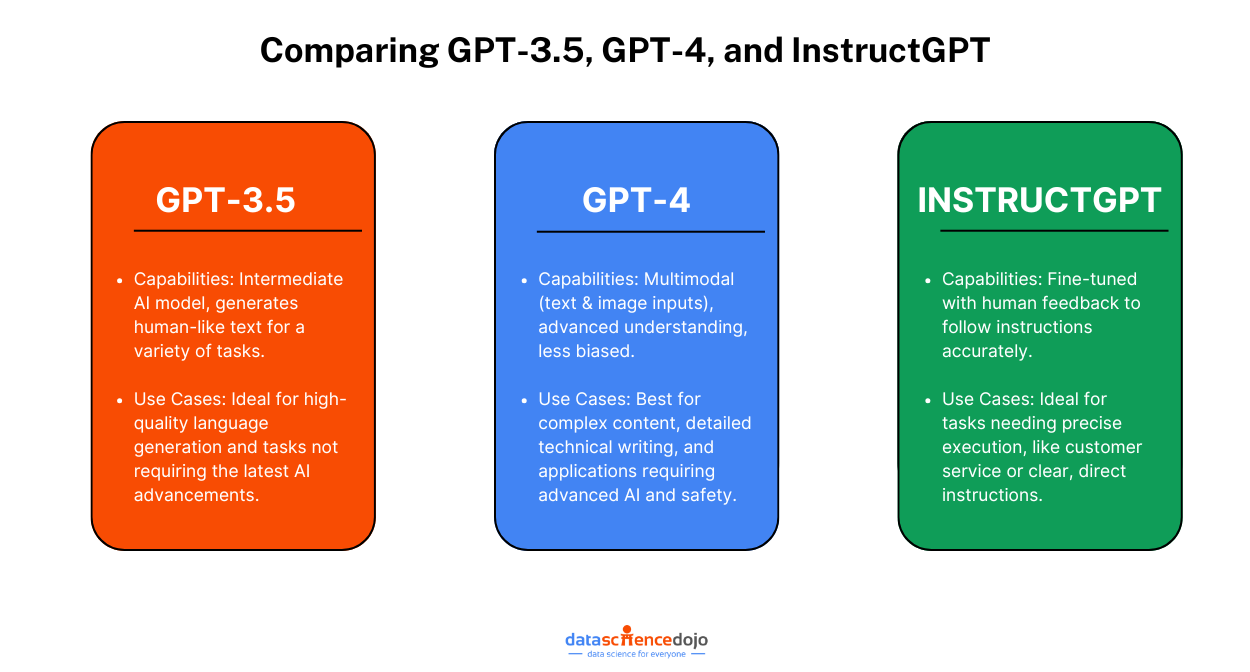

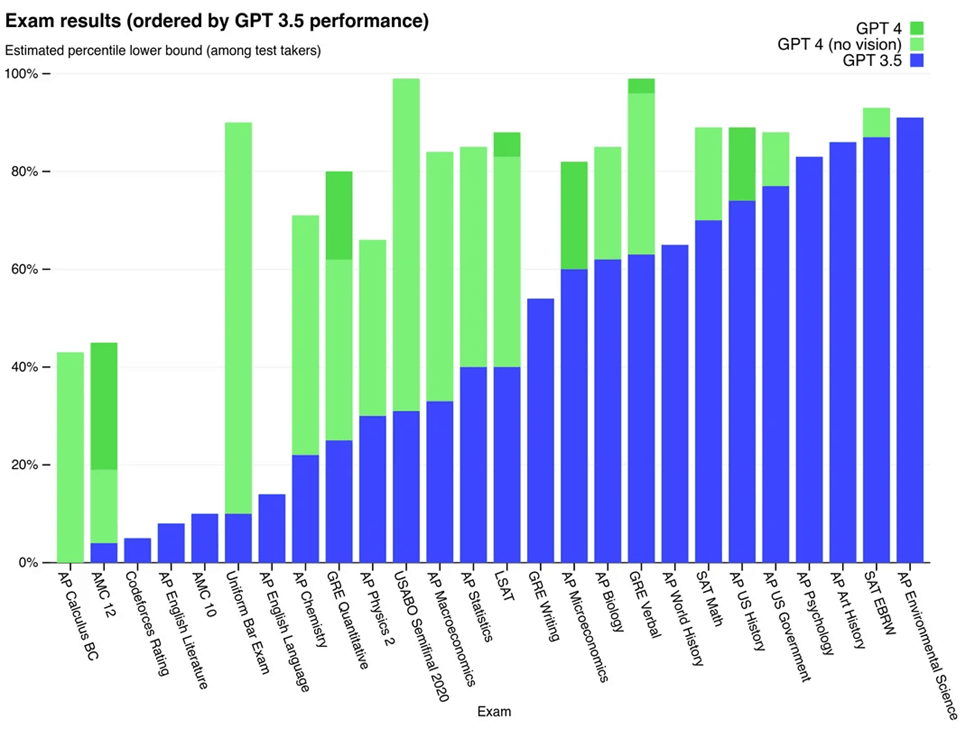

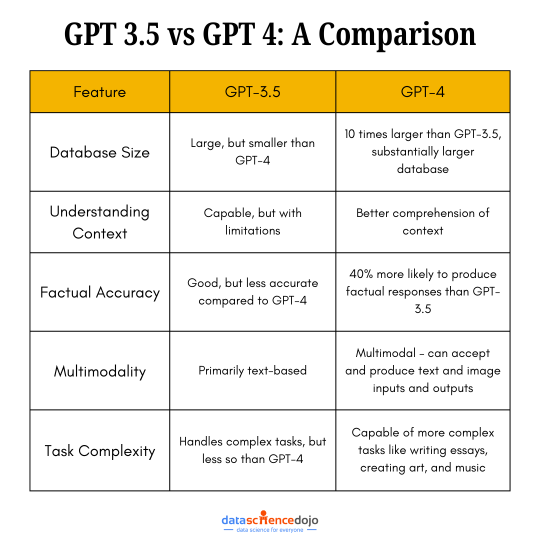

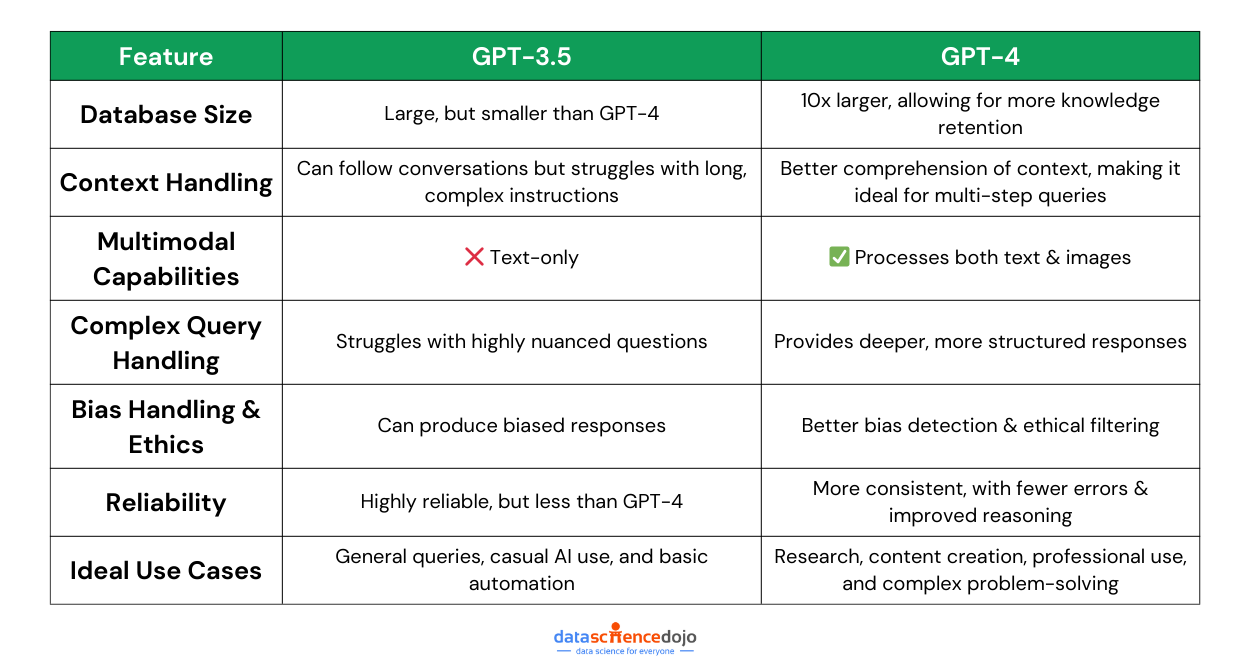

Comparing GPT-3.5, GPT-4, and InstructGPT involves looking at their capabilities and optimal use cases.

| Feature | InstructGPT | GPT-3.5 | GPT-4 |

|---|---|---|---|

| Purpose | Designed for natural language processing in specific domains | General-purpose language model, optimized for chat | Large multimodal model, more creative and collaborative |

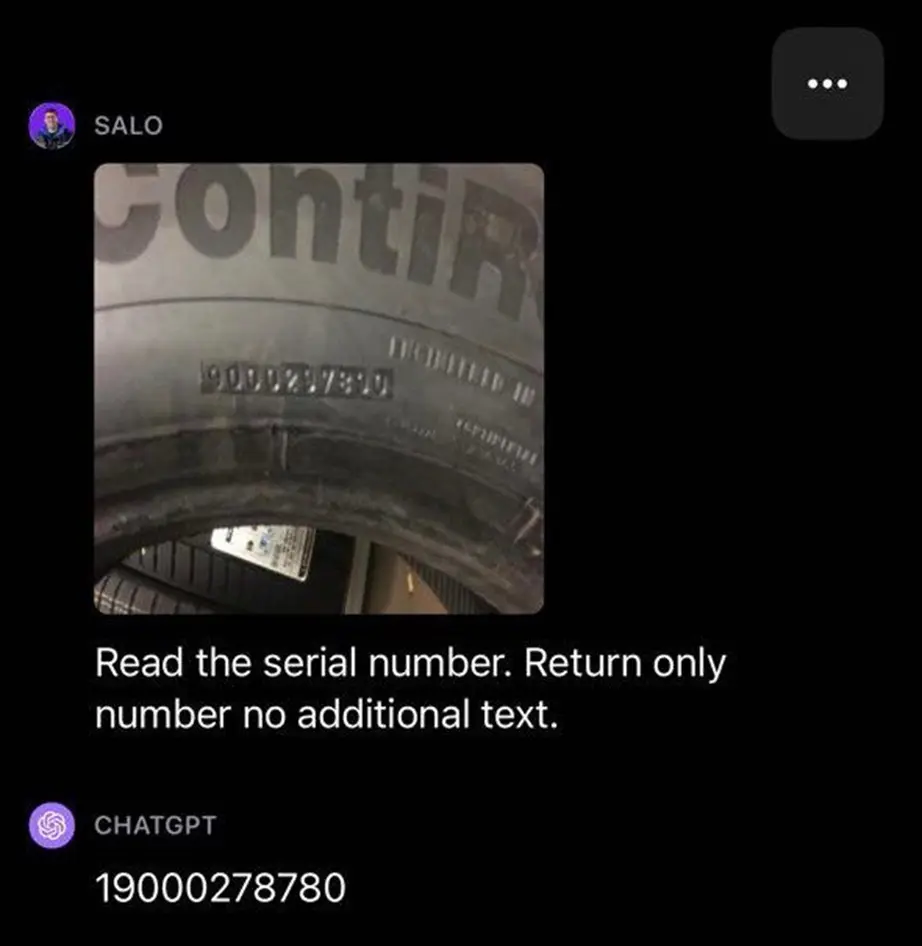

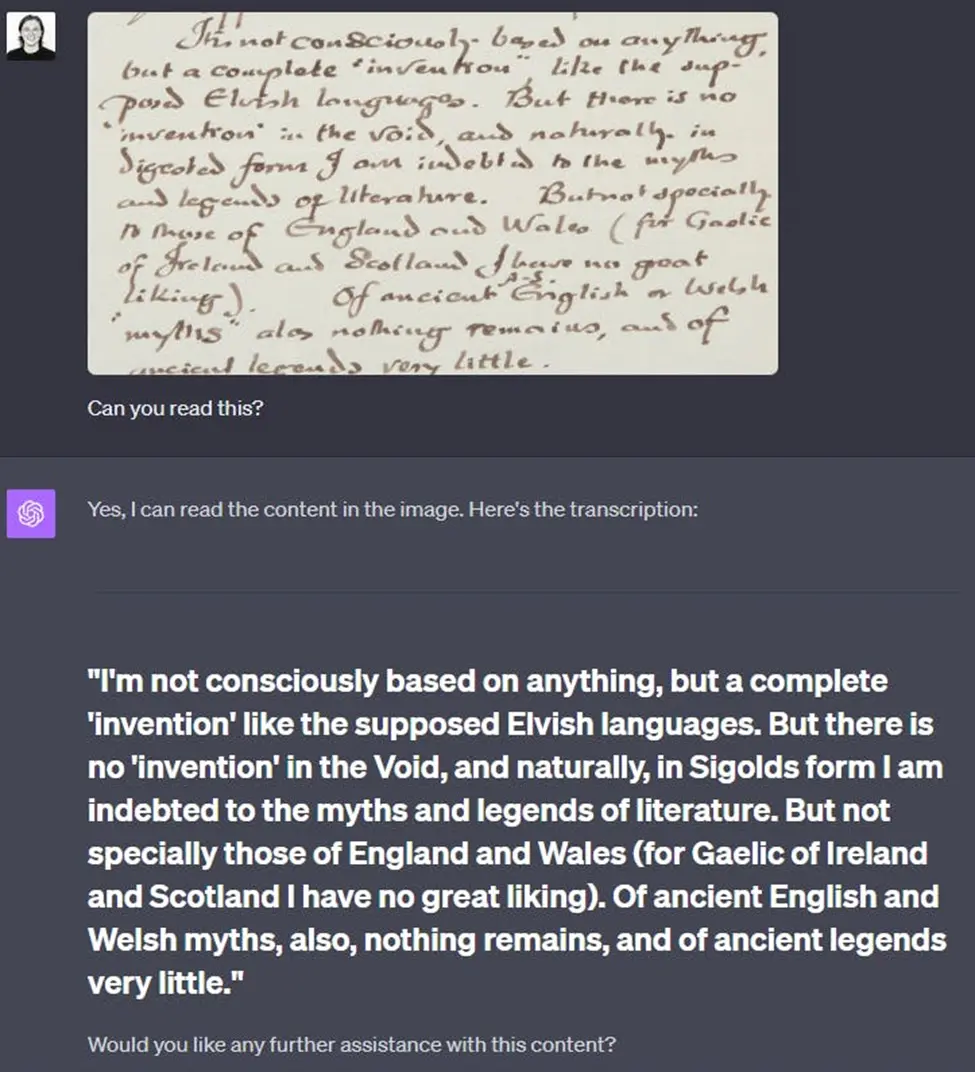

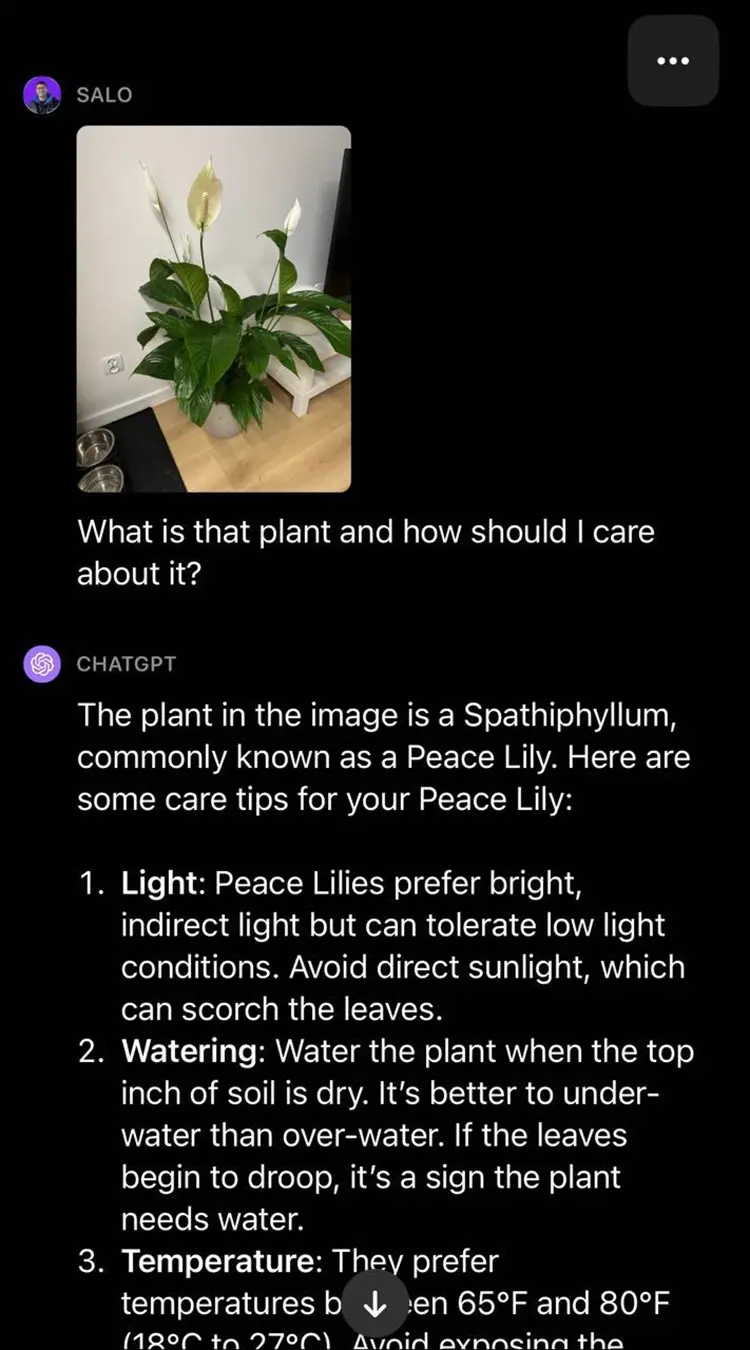

| Input | Text inputs | Text inputs | Text and image inputs |

| Output | Text outputs | Text outputs | Text outputs |

| Training Data | Combination of text and structured data | Massive corpus of text data | Massive corpus of text, structured data, and image data |

| Optimization | Fine-tuned for following instructions and chatting | Fine-tuned for chat using the Chat Completions API | Improved model alignment, truthfulness, less offensive output |

| Capabilities | Natural language processing tasks | Understand and generate natural language or code | Solve difficult problems with greater accuracy |

| Fine-Tuning | Yes, on specific instructions and chatting | Yes, available for developers | Fine-tuning capabilities improved for developers |

| Cost | – | Initially more expensive than base model, now with reduced prices for improved scalability |

GPT-3.5

- Capabilities: GPT-3.5 is an intermediate version between GPT-3 and GPT-4. It’s a large language model known for generating human-like text based on the input it receives. It can write essays, create content, and even code to some extent.

- Use Cases: It’s best used in situations that require high-quality language generation or understanding but may not require the latest advancements in AI language models. It’s still powerful for a wide range of NLP tasks.

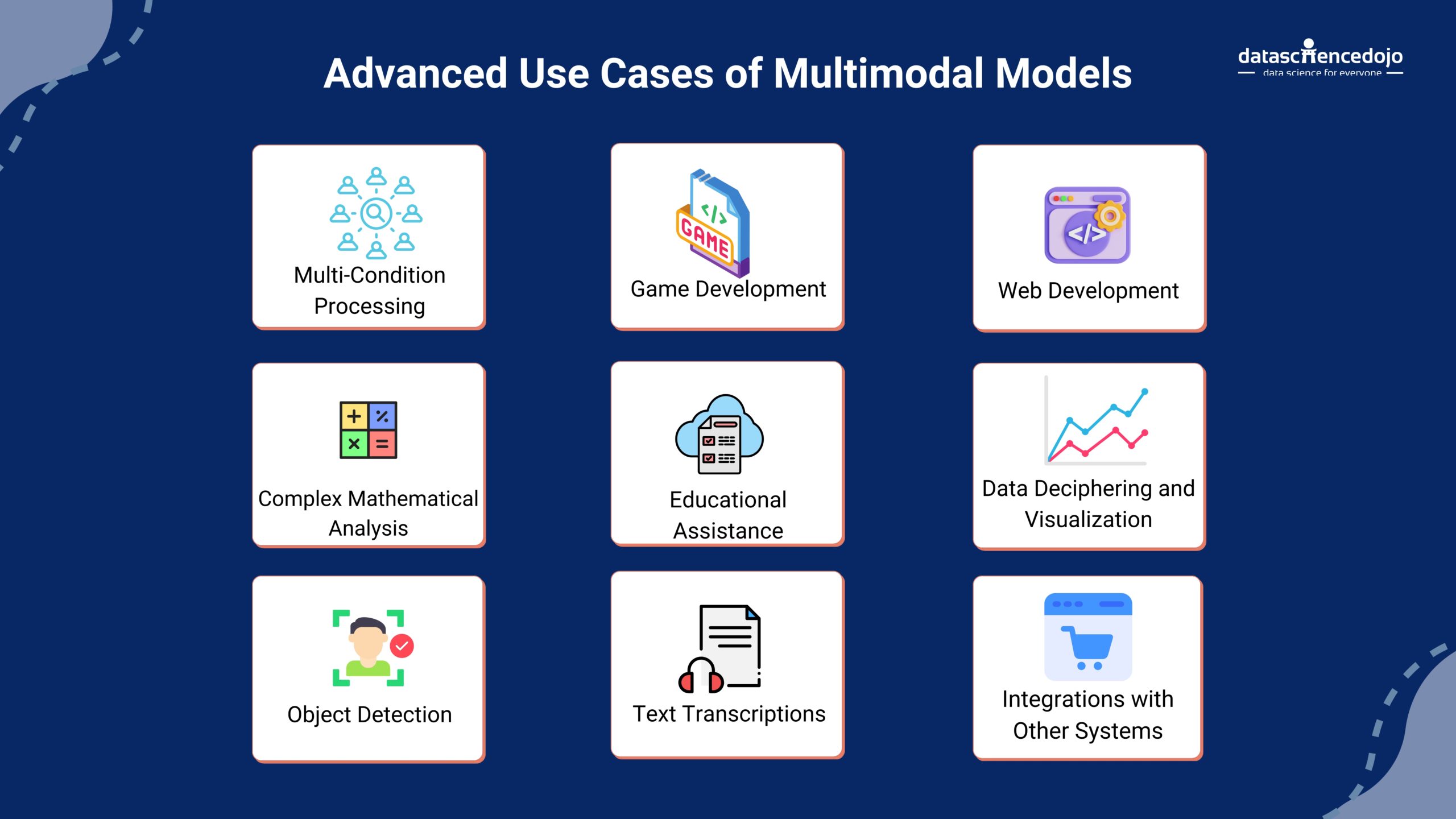

GPT-4

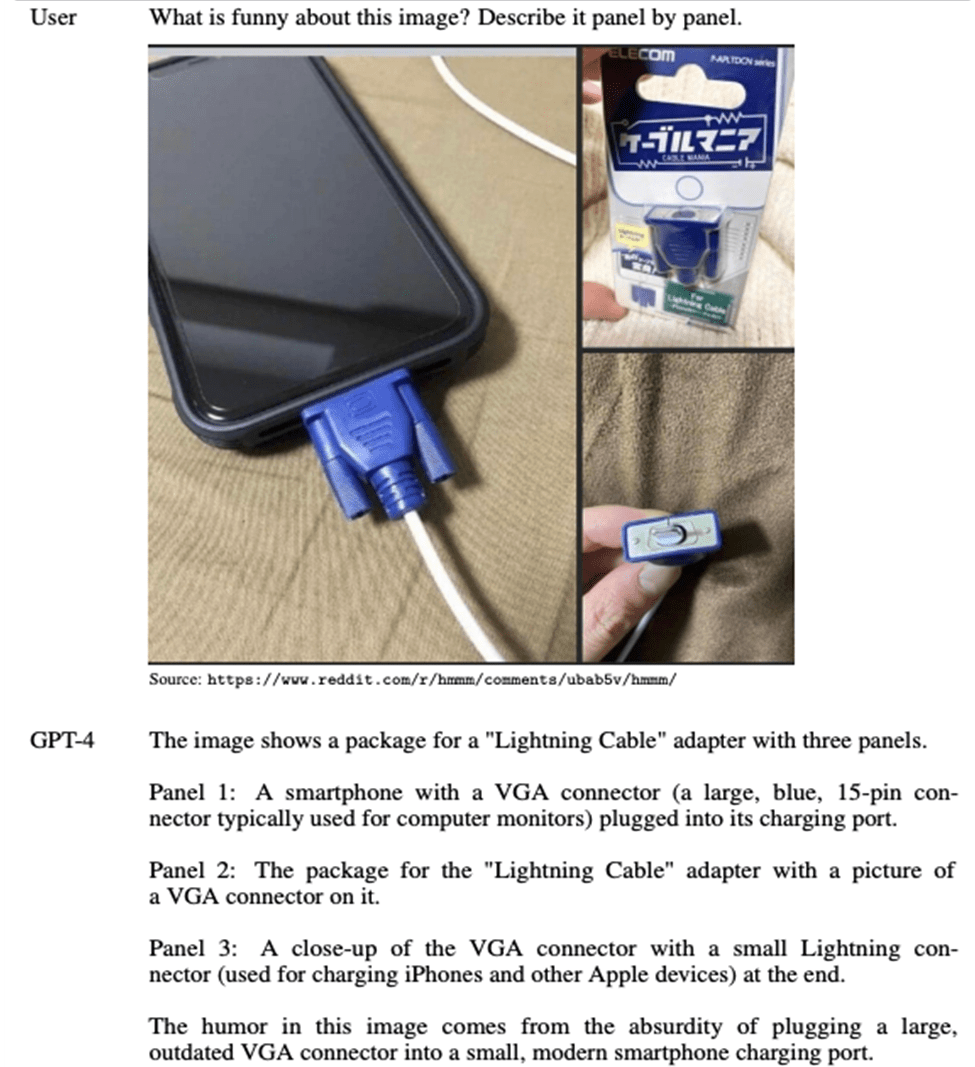

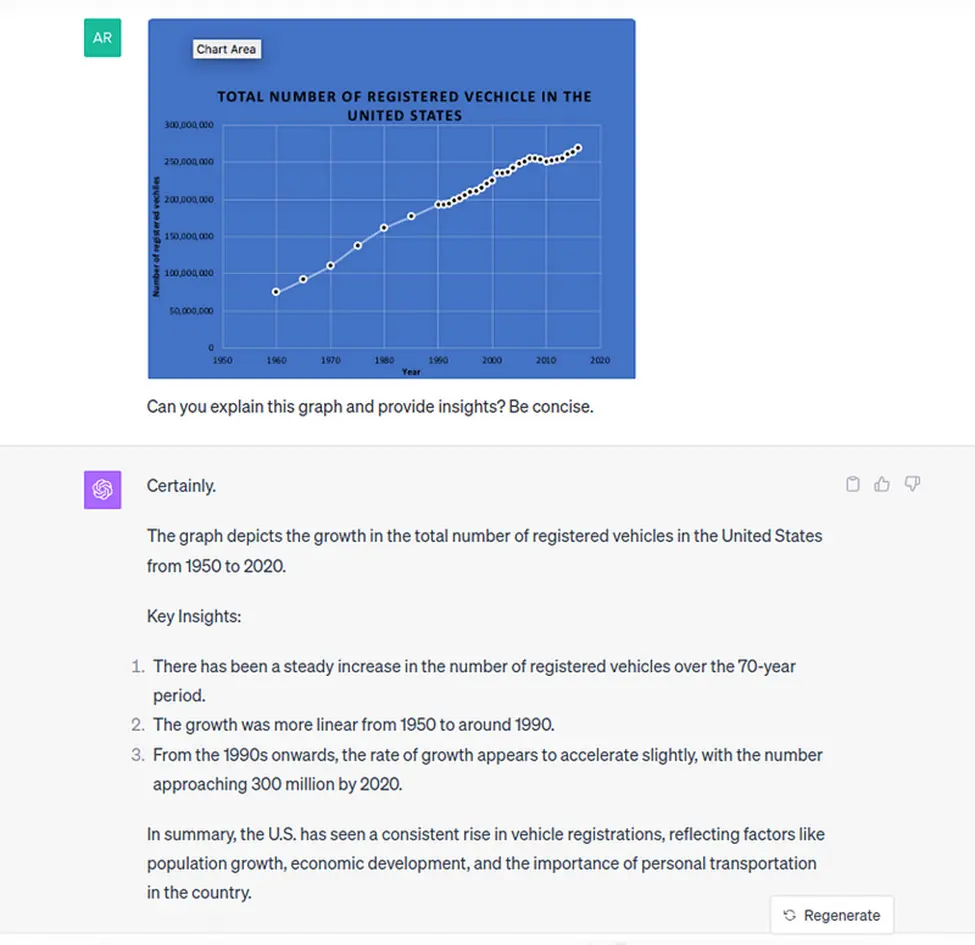

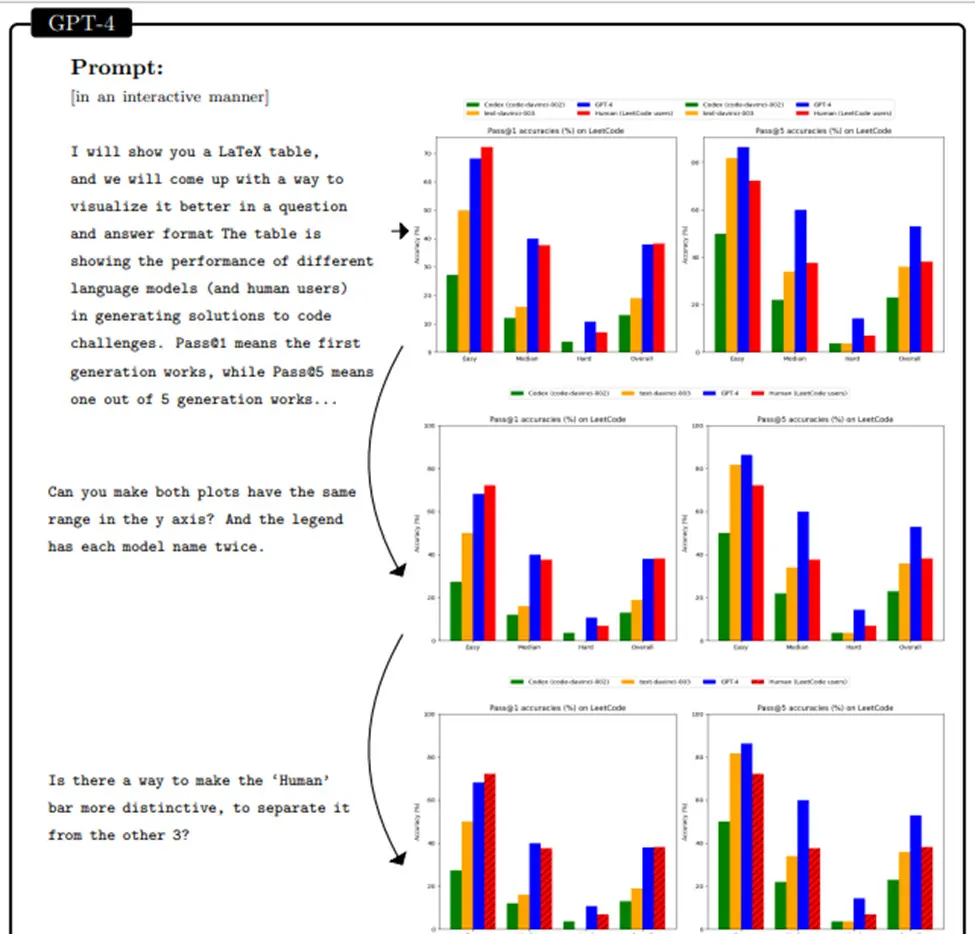

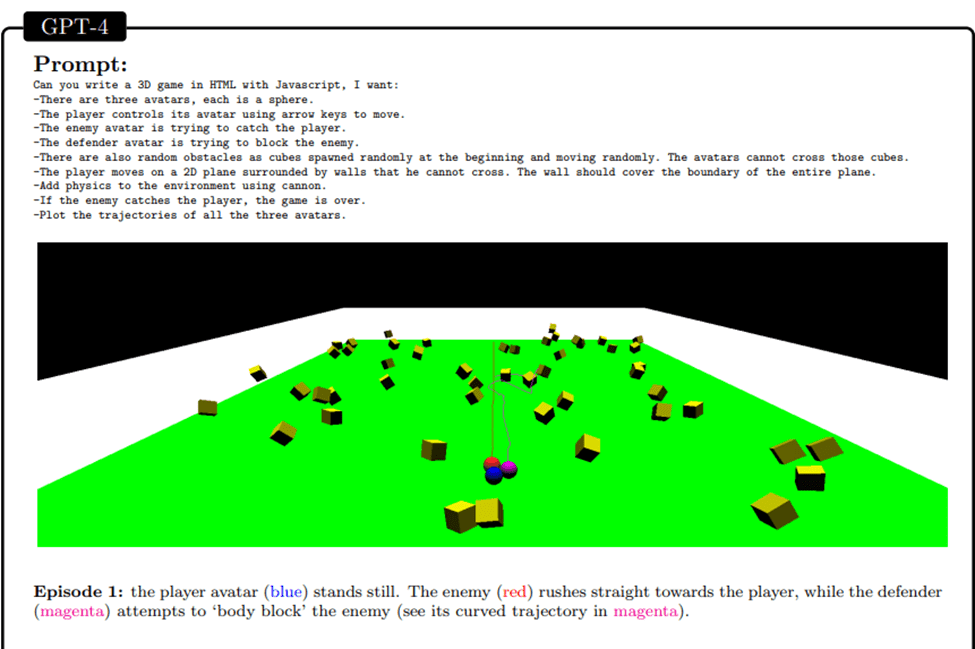

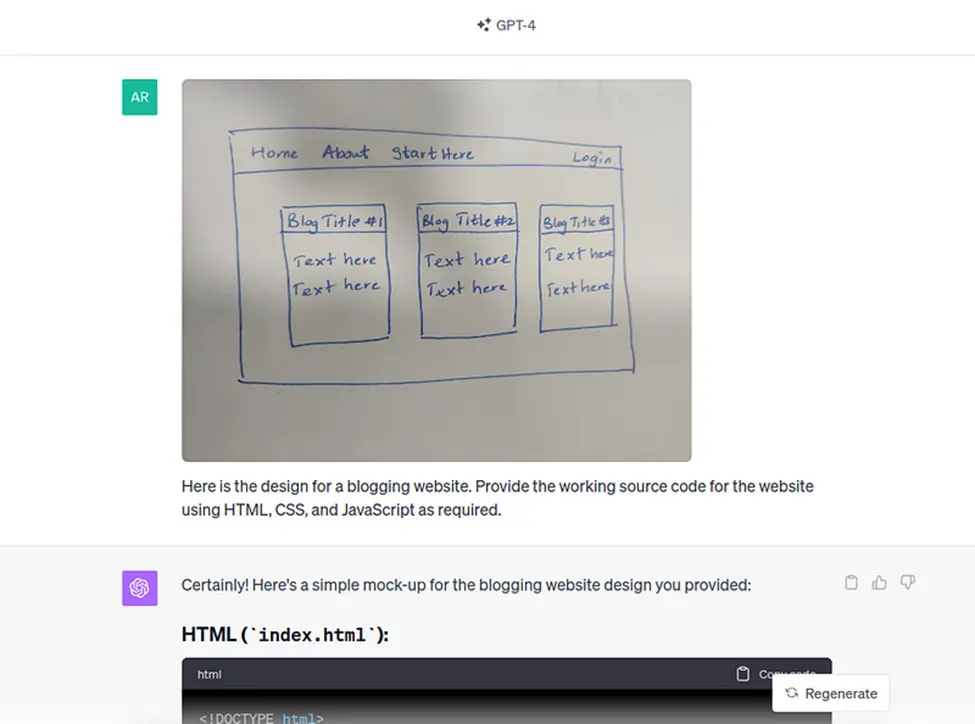

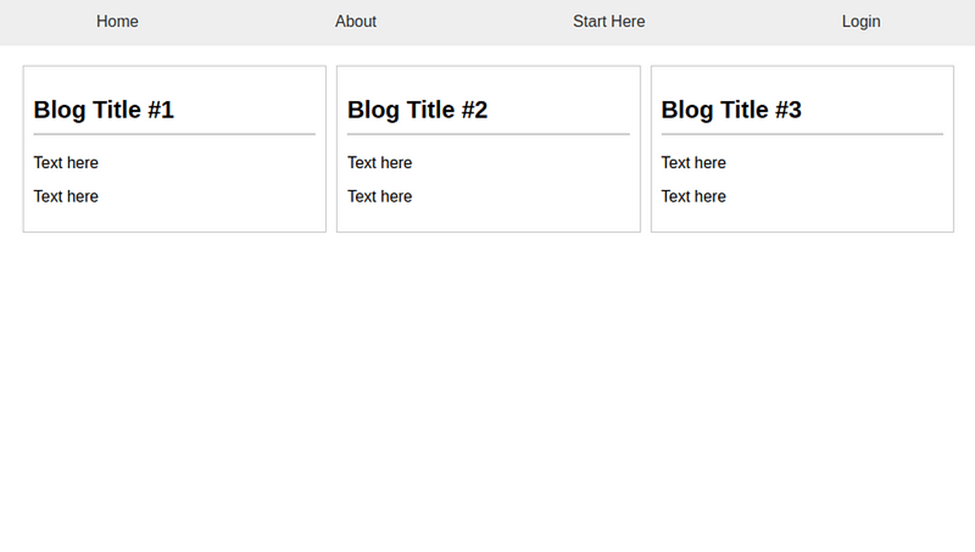

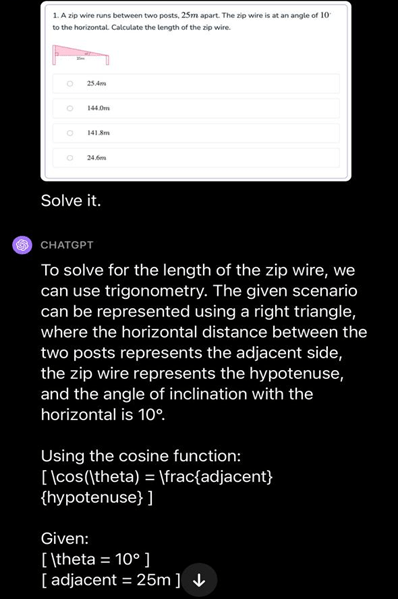

- Capabilities: GPT-4 is a multimodal model that accepts both text and image inputs and provides text outputs. It’s capable of more nuanced understanding and generation of content and is known for its ability to follow instructions better while producing less biased and harmful content.

- Use Cases: It shines in situations that demand advanced understanding and creativity, like complex content creation, detailed technical writing, and when image inputs are part of the task. It’s also preferred for applications where minimizing biases and improving safety is a priority.

InstructGPT

- Capabilities: InstructGPT is fine-tuned with human feedback to follow instructions accurately. It is an iteration of GPT-3 designed to produce responses that are more aligned with what users intend when they provide those instructions.

- Use Cases: Ideal for scenarios where you need the AI to understand and execute specific instructions. It’s useful in customer service for answering queries or in any application where direct and clear instructions are given and need to be followed precisely.

How Each Model Handles Instructions

To better understand how InstructGPT, GPT-3.5, and GPT-4 differ in their capabilities, let’s look at how they handle the same prompt. For example, when asked, “Explain quantum computing to a 10-year-old,” InstructGPT might provide a simplified explanation but could lack depth or clarity in breaking it down.

GPT-3.5, on the other hand, might offer a more detailed answer but occasionally include complex terms that a child might struggle to grasp.

GPT-4 would likely take it a step further, delivering a nuanced yet straightforward explanation with analogies and language suited to the intended audience.

By comparing these responses, it’s easier to see how each model is designed to approach instructions and adapt to different levels of complexity.
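If you want to run this kind of side-by-side comparison yourself, a small harness helps. The sketch below is only an illustration: `compare_models` is a hypothetical helper, and the two stub functions stand in for real model calls with placeholder text that is not actual model output. Average word length is used as a very rough hint of how child-friendly the wording is.

```python
from typing import Callable, Dict

def compare_models(prompt: str, models: Dict[str, Callable[[str], str]]) -> Dict[str, Dict[str, object]]:
    """Send one prompt to every model and record the answer plus simple stats."""
    report = {}
    for name, ask in models.items():
        answer = ask(prompt)
        words = answer.split()
        report[name] = {
            "answer": answer,
            "word_count": len(words),
            # Longer words are a rough hint that the wording may be harder for a child.
            "avg_word_length": sum(len(w) for w in words) / len(words) if words else 0.0,
        }
    return report

# Placeholder functions standing in for real model calls (not real model output).
def stub_model_a(prompt: str) -> str:
    return "A quantum computer is like a coin that spins instead of landing."

def stub_model_b(prompt: str) -> str:
    return "Quantum computers exploit superposition and entanglement of qubits."

prompt = "Explain quantum computing to a 10-year-old"
report = compare_models(prompt, {"model_a": stub_model_a, "model_b": stub_model_b})
for name, stats in report.items():
    print(name, stats["word_count"], round(stats["avg_word_length"], 2))
```

To compare real models, you would replace each stub with a function that sends the prompt to the model you want to test and returns its reply as a string.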

When to Use Each

- GPT-3.5: Choose this for general language tasks that do not require the cutting-edge abilities of GPT-4 or the precise instruction-following of InstructGPT.

- GPT-4: Opt for this for more complex, creative tasks, especially those that involve interpreting images or require outputs that adhere closely to human values and instructions.

- InstructGPT: Select this when your application involves direct commands or questions and you expect the AI to follow those to the letter, with less creativity but more accuracy in instruction execution.
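The guidance above can be written down as a simple decision rule. This is a sketch of the article's rules of thumb only; the function name, the three yes/no questions, and the order in which they are checked are assumptions made for illustration, and the returned strings are labels rather than API model identifiers.

```python
def choose_model(needs_image_input: bool,
                 needs_complex_or_creative_work: bool,
                 needs_strict_instruction_following: bool) -> str:
    """Pick a model family using the rules of thumb from the list above."""
    # Image inputs or complex, creative tasks point to GPT-4.
    if needs_image_input or needs_complex_or_creative_work:
        return "GPT-4"
    # Direct commands that must be followed to the letter point to InstructGPT.
    if needs_strict_instruction_following:
        return "InstructGPT"
    # Everything else is a general language task.
    return "GPT-3.5"

print(choose_model(True, False, False))
print(choose_model(False, False, True))
print(choose_model(False, False, False))
```

In practice, cost and availability would also factor into the choice, so treat the result as a starting point rather than a final answer.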

Limitations and Challenges of the Models

While InstructGPT, GPT-3.5, and GPT-4 have made remarkable strides in natural language understanding, they aren’t without limitations. For instance, all three models can occasionally produce biased or factually inaccurate responses, particularly when dealing with complex or nuanced topics.

InstructGPT, while more focused on following instructions, may oversimplify tasks, whereas GPT-3.5 might struggle with maintaining consistency in longer conversations.

GPT-4, although significantly more advanced, still faces challenges with reasoning in highly specialized domains. Understanding these limitations helps set realistic expectations and highlights the importance of human oversight when using these models in critical applications.

To Sum It Up

Each of the three models, InstructGPT, GPT-3.5, and GPT-4, offers unique strengths tailored to specific tasks. While they all demonstrate remarkable capabilities in natural language processing, it’s important to acknowledge their limitations, such as biases and occasional inaccuracies. By understanding their respective strengths and challenges, users can make more informed decisions about which model best suits their needs and ensure they are applied effectively in real-world scenarios.