Artificial intelligence is evolving fast, and Grok 4, developed by xAI (Elon Musk’s AI company), is one of the most ambitious steps forward. Designed to compete with giants like OpenAI’s GPT-4, Google’s Gemini, and Anthropic’s Claude, Grok 4 brings a unique flavor to the large language model (LLM) space: deep reasoning, multimodal understanding, and real-time integration with live data.

But what exactly is Grok 4? How powerful is it, and what can it really do? In this post, we’ll walk you through Grok 4’s architecture, capabilities, benchmarks, and where it fits into the future of AI.

What is Grok 4?

Grok 4 is the latest LLM from xAI, officially released in July 2025. At its core, Grok 4 is designed for advanced reasoning tasks—math, logic, code, and scientific thinking. Unlike earlier Grok versions, Grok 4 comes in two flavors:

-

Grok 4 (standard): A powerful single-agent language model.

-

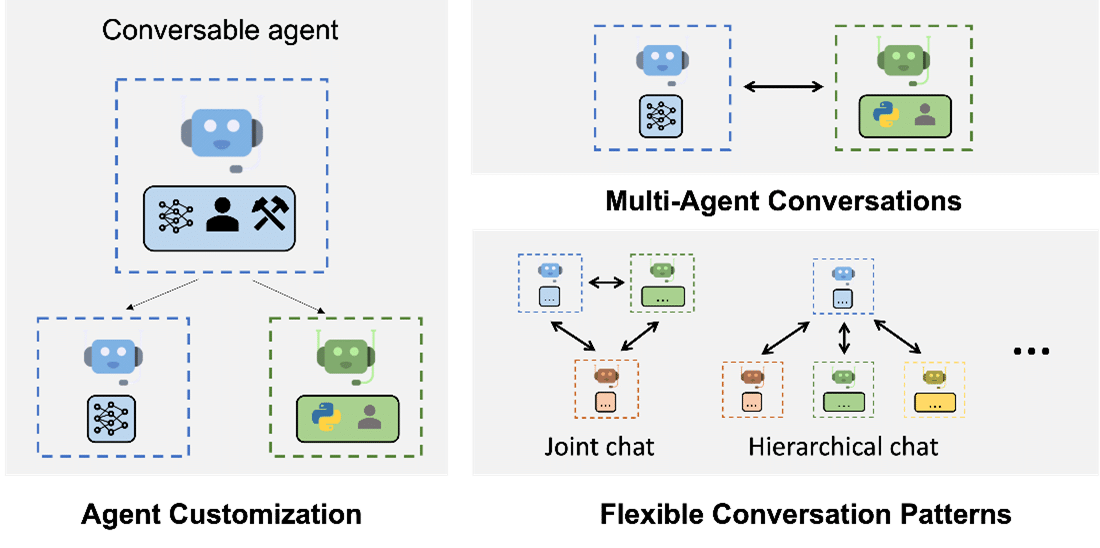

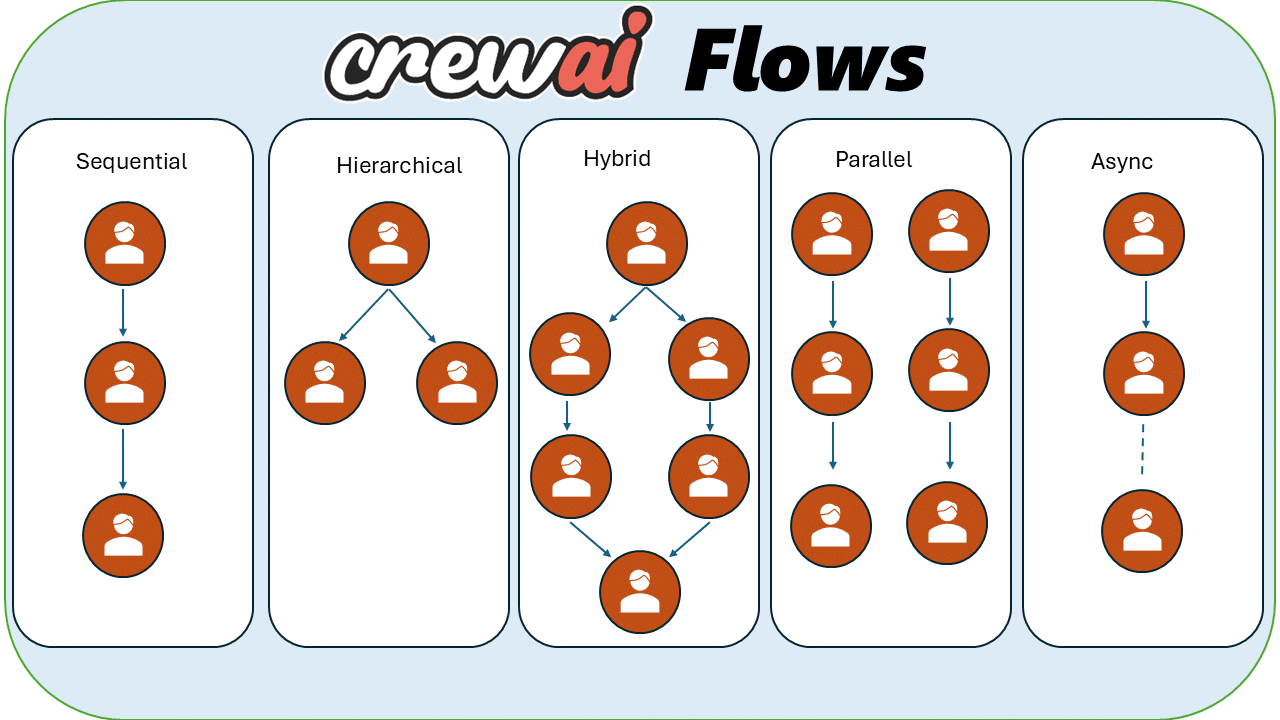

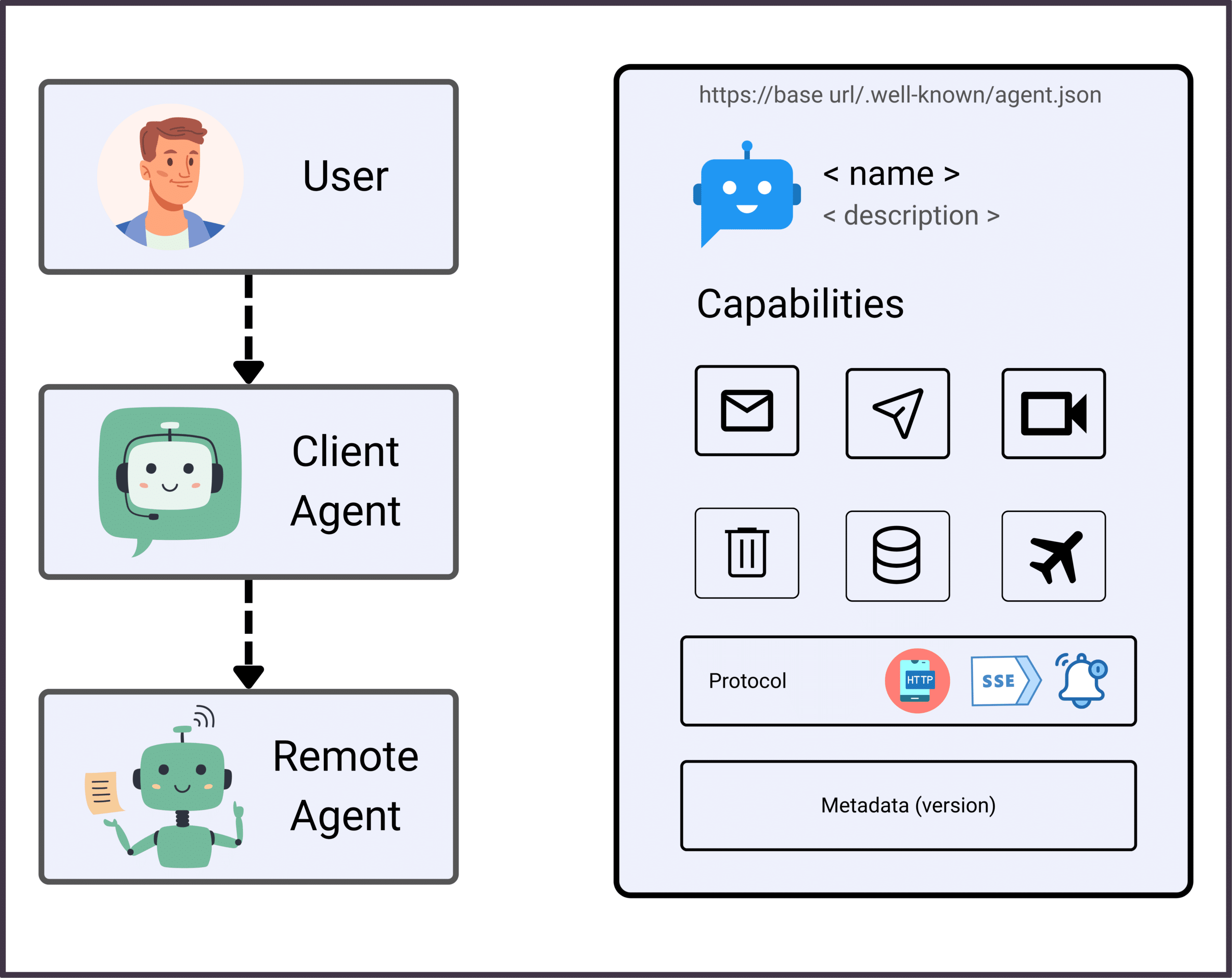

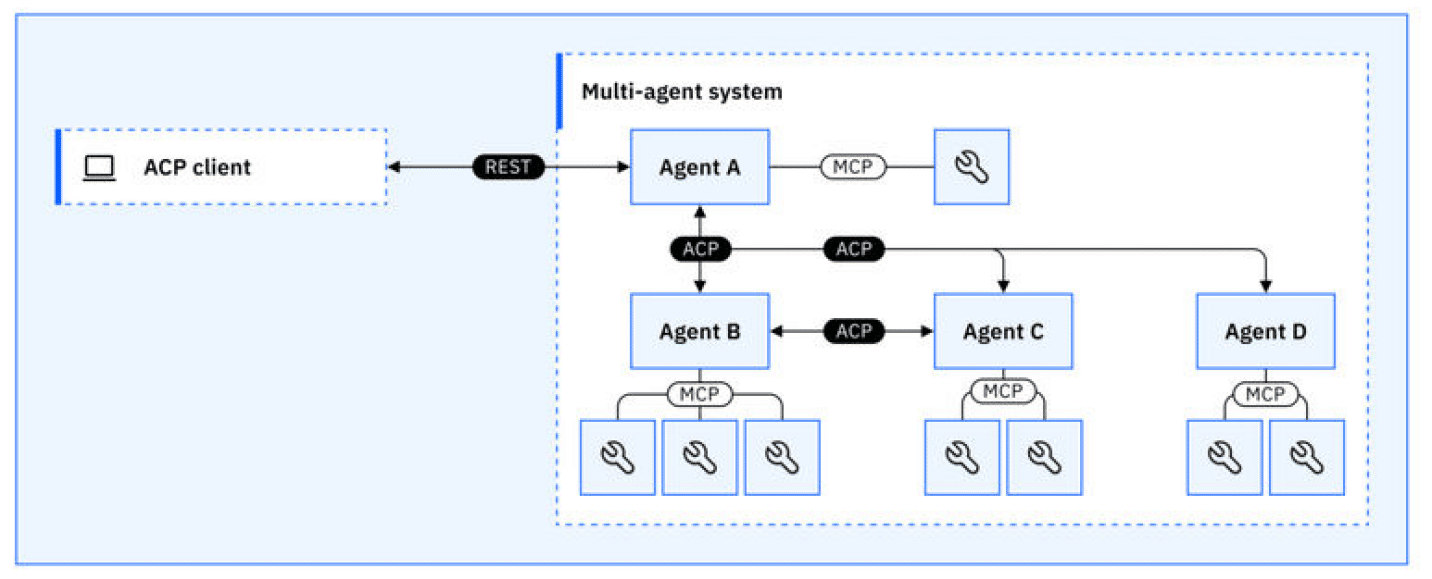

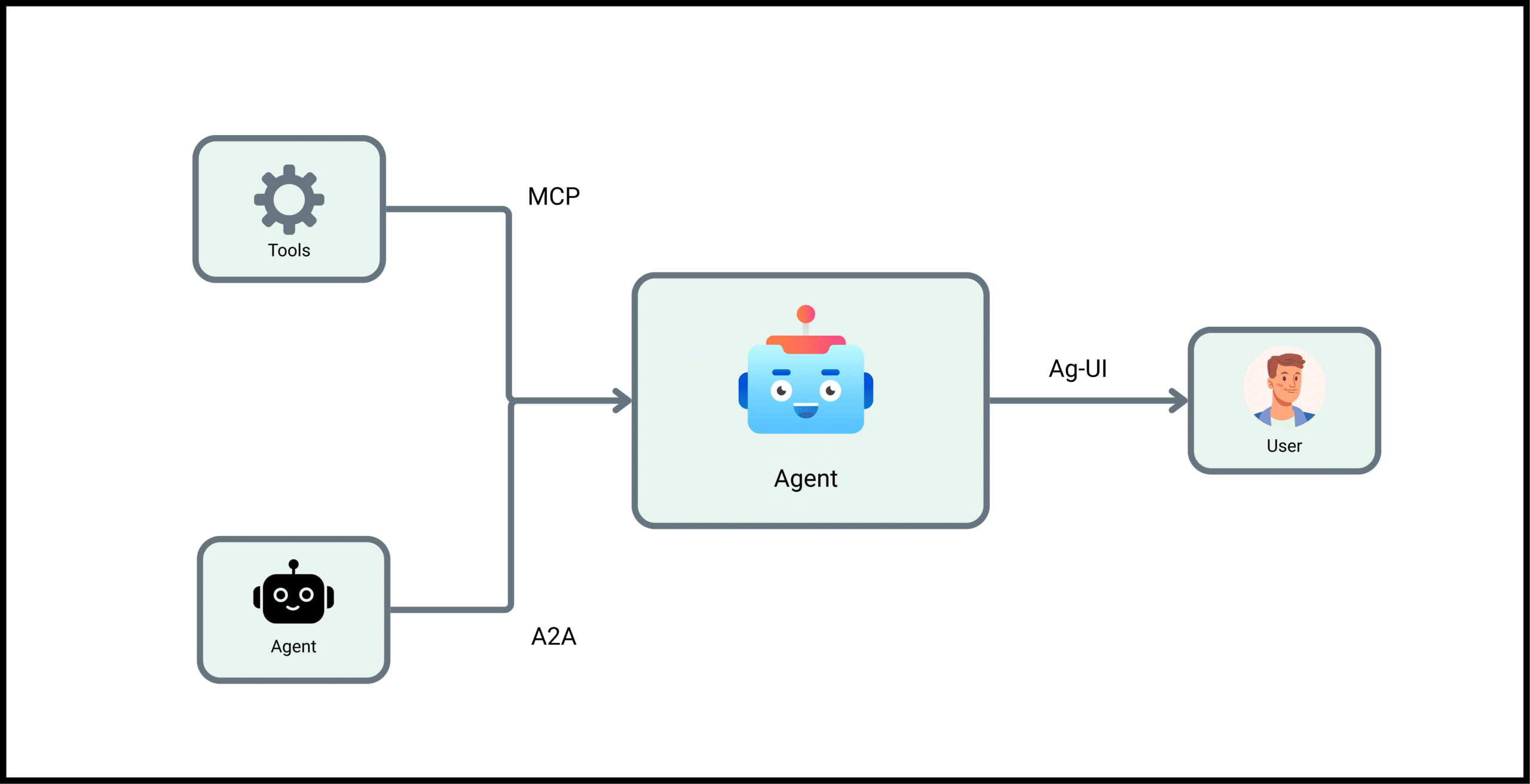

Grok 4 Heavy: A multi-agent architecture for complex collaborative reasoning (think several AI minds working together on a task).

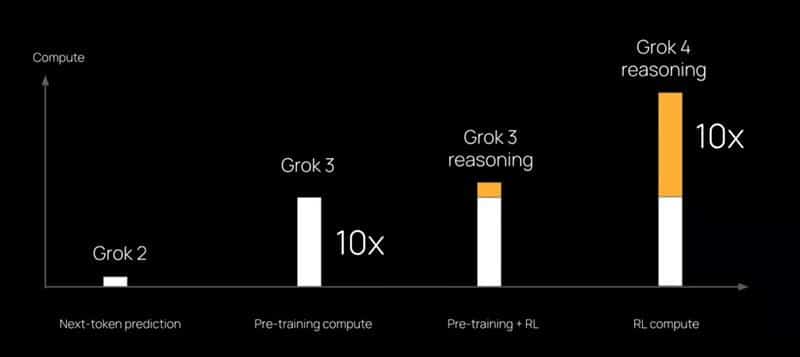

And yes, it’s big. Grok 4 reportedly has around 1.7 trillion parameters, and xAI says it was trained with 100× more compute than Grok 2, including heavy use of reinforcement learning. That places it firmly in the top tier of today’s models.

Technical Architecture and Capabilities

Let’s unpack what makes Grok 4 different from other LLMs.

1. Hybrid Neural Design

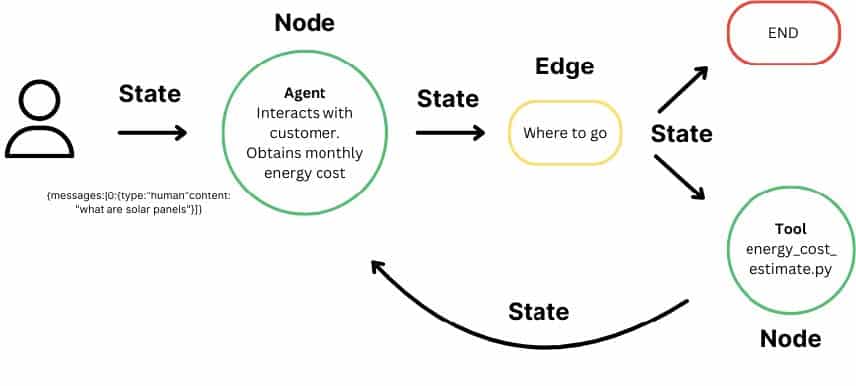

Grok 4 uses a modular architecture. That means it has specialized subsystems for tasks like code generation, language understanding, and mathematical reasoning. These modules are deeply integrated but operate with some autonomy—especially in the “Heavy” version, which simulates multi-agent collaboration.
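xAI has not published how Grok 4 Heavy coordinates its agents, so the sketch below only illustrates one common multi-agent pattern, not xAI’s method: several agents answer the same question in parallel, and a majority vote picks the final answer (a self-consistency-style aggregation). The agent functions are hypothetical stand-ins for separate model calls.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List

# Hypothetical agent type: takes a question, returns an answer string.
# In a real system, each agent would be an independent model call.
Agent = Callable[[str], str]


def run_agents(question: str, agents: List[Agent]) -> List[str]:
    """Ask every agent the same question in parallel; answers keep agent order."""
    if not agents:
        raise ValueError("need at least one agent")
    with ThreadPoolExecutor(max_workers=len(agents)) as pool:
        return list(pool.map(lambda agent: agent(question), agents))


def majority_vote(answers: List[str]) -> str:
    """Return the most common whitespace-normalized answer.

    Ties go to the answer that appeared first.
    """
    if not answers:
        raise ValueError("no answers to vote on")
    normalized = [a.strip() for a in answers]
    return Counter(normalized).most_common(1)[0][0]


if __name__ == "__main__":
    # Stub agents standing in for model calls (illustrative only).
    agents: List[Agent] = [
        lambda q: "42",
        lambda q: " 42 ",
        lambda q: "41",
    ]
    answers = run_agents("What is 6 * 7?", agents)
    print(answers)                 # ['42', ' 42 ', '41']
    print(majority_vote(answers))  # 42
```

Real multi-agent systems often let agents exchange intermediate reasoning rather than just voting; majority voting is simply the easiest aggregation step to show.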

2. Large Context Window

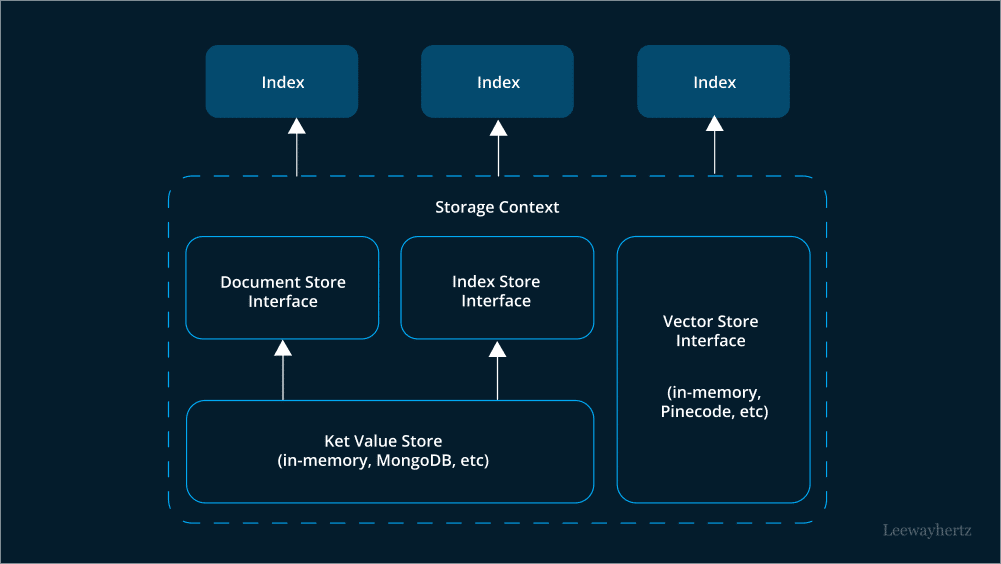

Context windows matter, especially for reasoning over long documents. Grok 4 supports up to 128,000 tokens in the app and 256,000 tokens via the API, enough for long multi-turn conversations and large documents in a single prompt.
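When a document might exceed the window, a common approach is to check its size first and split it into chunks if needed. The sketch below assumes roughly 4 characters per token, a rule of thumb for English text; Grok’s real tokenizer will give different counts, so treat the numbers as estimates. The helper names are hypothetical and not part of any xAI SDK.

```python
from typing import List

# Assumption: about 4 characters per token, a rough rule of thumb for English.
# Grok's real tokenizer will differ; use actual token counts where available.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Rough token estimate from character count, rounded up."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(text: str, context_tokens: int, reserve_for_output: int) -> bool:
    """True if the prompt plus room reserved for the reply fits the window."""
    return estimate_tokens(text) + reserve_for_output <= context_tokens


def chunk_paragraphs(text: str, max_tokens: int) -> List[str]:
    """Greedily pack paragraphs into chunks of at most ~max_tokens each.

    Separator characters between paragraphs are not counted, and a single
    paragraph longer than max_tokens still becomes its own (oversized) chunk.
    """
    chunks: List[str] = []
    current: List[str] = []
    current_tokens = 0
    for para in text.split("\n\n"):
        para_tokens = estimate_tokens(para)
        # Start a new chunk if adding this paragraph would exceed the budget.
        if current and current_tokens + para_tokens > max_tokens:
            chunks.append("\n\n".join(current))
            current, current_tokens = [], 0
        current.append(para)
        current_tokens += para_tokens
    if current:
        chunks.append("\n\n".join(current))
    return chunks


if __name__ == "__main__":
    # Ten paragraphs of 400 characters each (~100 estimated tokens apiece).
    doc = "\n\n".join(["x" * 400] * 10)
    print(fits_in_context(doc, context_tokens=256_000, reserve_for_output=4_000))  # True
    print(len(chunk_paragraphs(doc, max_tokens=250)))  # 5
```

Reserving tokens for the reply matters because the context window covers both the prompt and the model’s output.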

3. Multimodal AI

Grok 4 isn’t just about text. It can understand and reason over text and images, with voice capabilities as well (it features a British-accented voice assistant called Eve). Future updates are expected to add image generation and deeper visual reasoning.
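For developers, image understanding is typically used by sending an image and a question in the same chat message. The sketch below assumes the OpenAI-style content-parts layout (an `image_url` part holding a base64 data URL plus a `text` part); xAI’s API is OpenAI-compatible, but check its documentation for the exact fields and supported image formats. It only builds the request payload and makes no network call.

```python
import base64
import json
from typing import Any, Dict


def image_data_url(image_bytes: bytes, mime_type: str = "image/png") -> str:
    """Encode raw image bytes as a base64 data URL."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return f"data:{mime_type};base64,{encoded}"


def build_image_message(
    question: str, image_bytes: bytes, mime_type: str = "image/png"
) -> Dict[str, Any]:
    """Pair one image with a text question in a single user message.

    Assumption: OpenAI-style content parts ("image_url" and "text").
    """
    return {
        "role": "user",
        "content": [
            {"type": "image_url", "image_url": {"url": image_data_url(image_bytes, mime_type)}},
            {"type": "text", "text": question},
        ],
    }


if __name__ == "__main__":
    # Placeholder bytes; in practice, read a real file, e.g. open("chart.png", "rb").read().
    message = build_image_message("What trend does this chart show?", b"hi")
    print(json.dumps(message, indent=2))
```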

4. Powered by Colossus

xAI trained Grok 4 using its Colossus supercomputer, which reportedly runs on 200,000 Nvidia GPUs—a serious investment in compute infrastructure.

Key Features That Stand Out

Reasoning & Scientific Intelligence

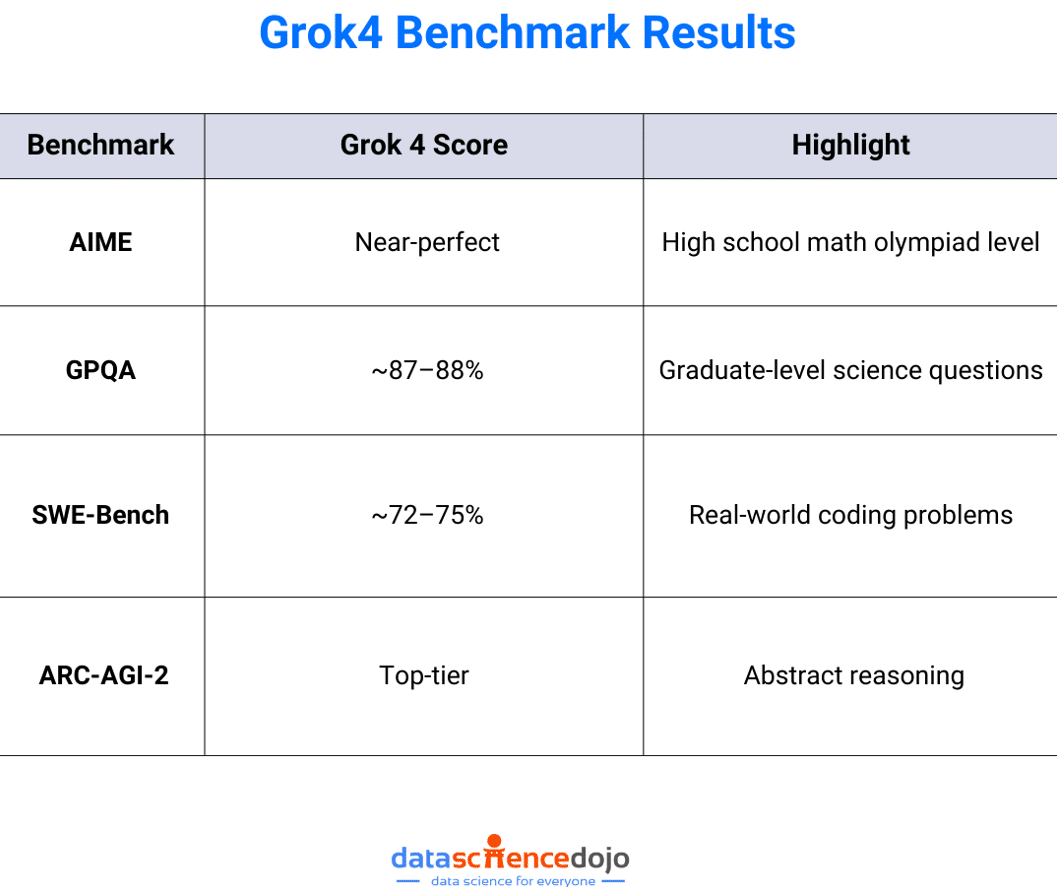

Grok 4 is built for deep thinking. It performs strongly in multi-step math, logic problems, and graduate-level scientific questions. On public benchmarks such as GPQA, AIME, and ARC-AGI, Grok 4 matches or surpasses other frontier models.

Code Generation with Grok 4 Code

The specialized Grok 4 Code variant targets developers. It delivers smart code suggestions, debugging help, and even software design ideas. It scores ~72–75% on SWE-Bench, a benchmark for real-world coding tasks—placing it among the best models for software engineering.

Real-Time Data via X

Here’s something unique: Grok 4 can access real-time data from X (formerly Twitter). That gives it a dynamic edge for tasks like market analysis, news summarization, and live sentiment tracking.

(Note: Despite some speculation, there’s no confirmed integration with live Tesla or SpaceX data.)

Benchmark Performance: Where Grok 4 Shines

Here’s how Grok 4 compares on key LLM benchmarks:

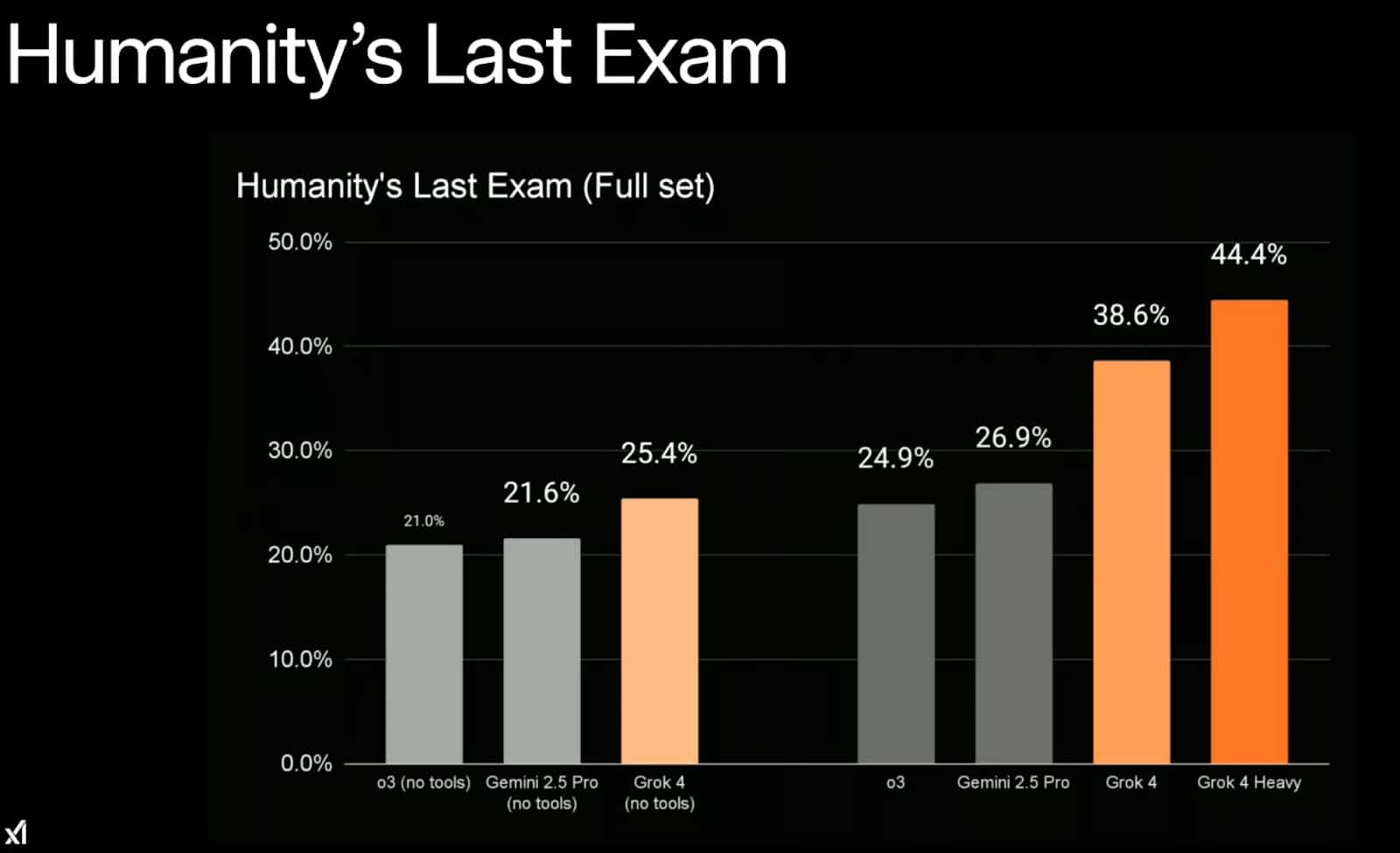

Compared with competitors such as GPT-4 and Gemini, Grok 4 is especially strong in math, logic, and coding. Its most notable result came on Humanity’s Last Exam, a benchmark of 2,500 hand-curated, PhD-level questions spanning math, physics, chemistry, linguistics, and engineering, where Grok 4 solved about 38.6% of the problems.

Real-World Use Cases

Whether you’re a data scientist, developer, or researcher, Grok 4 opens up a wide range of possibilities:

- Exploratory Data Analysis: Grok 4 can automate EDA, identify patterns, and suggest hypotheses.
- Software Development: Generate, review, and optimize code with the Grok 4 Code variant.
- Document Understanding: Summarize long documents, extract key insights, and answer questions in context.
- Real-Time Analytics: Leverage live data from X for trend analysis, event monitoring, and anomaly detection.
- Collaborative Research: In its “Heavy” form, Grok 4 supports multi-agent collaboration on scientific tasks like literature reviews and data synthesis.

Developer Tools and API Access

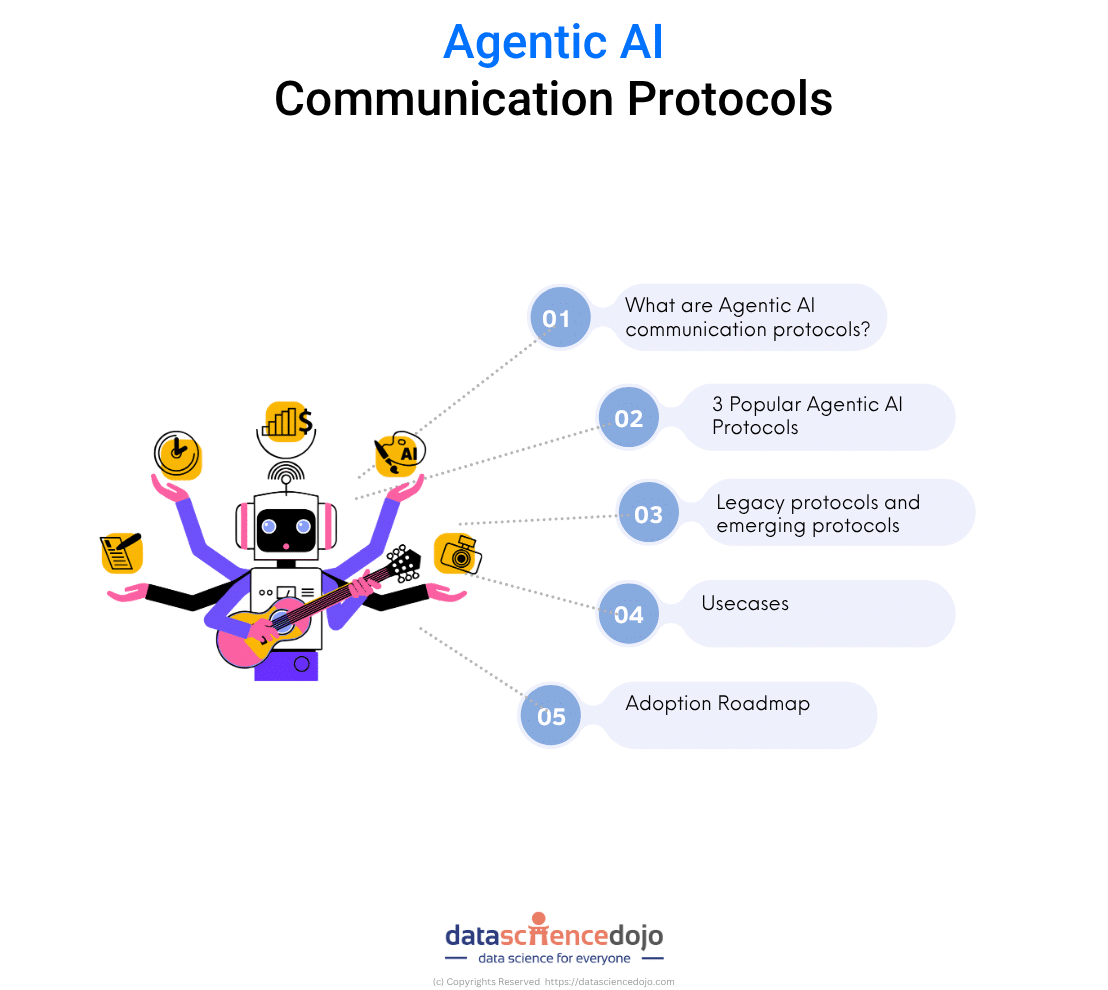

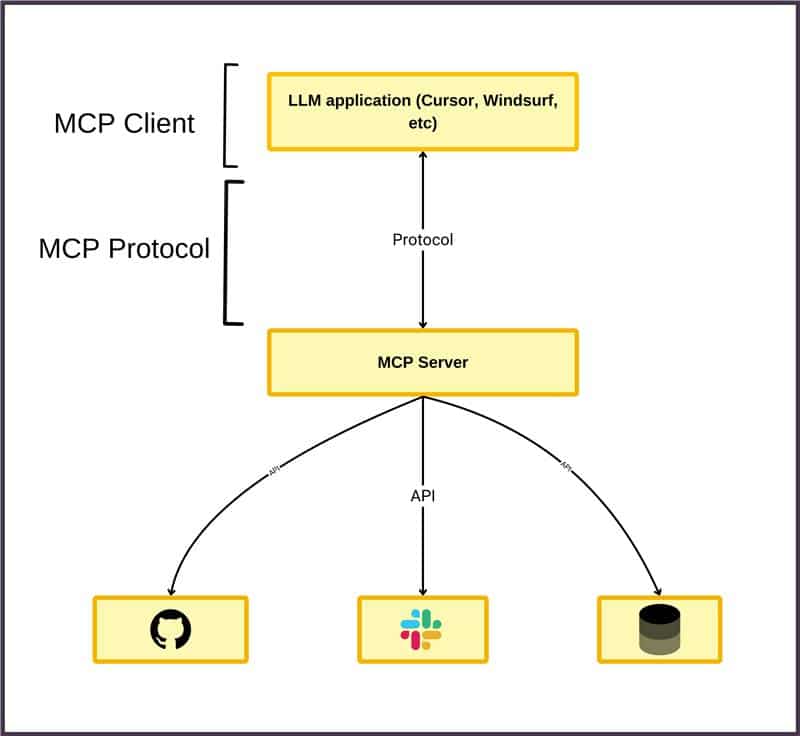

Developers can tap into Grok 4’s capabilities via APIs. It supports:

- Structured outputs (like JSON)
- Function calling
- Multimodal inputs (text + image)
- Voice interaction via integrated assistant (in Grok 4 web app)
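To make function calling concrete, here is a minimal offline sketch of the client-side half of the loop. It assumes xAI’s API follows the OpenAI-compatible tool-calling format: a `tools` list of JSON-schema function definitions, and tool calls whose `arguments` field is a JSON string. The `celsius_to_fahrenheit` tool and the simulated tool call are hypothetical.

```python
import json
from typing import Any, Callable, Dict, List


def celsius_to_fahrenheit(celsius: float) -> float:
    """Hypothetical local tool the model can ask the client to run."""
    return celsius * 9 / 5 + 32


# Tool definition in the OpenAI-style "function" format (assumed here).
# This list would be sent as the `tools` field of a chat request.
TOOLS: List[Dict[str, Any]] = [
    {
        "type": "function",
        "function": {
            "name": "celsius_to_fahrenheit",
            "description": "Convert a temperature from Celsius to Fahrenheit.",
            "parameters": {
                "type": "object",
                "properties": {"celsius": {"type": "number"}},
                "required": ["celsius"],
            },
        },
    }
]

# Maps tool names the model may emit to local Python functions.
REGISTRY: Dict[str, Callable[..., Any]] = {"celsius_to_fahrenheit": celsius_to_fahrenheit}


def handle_tool_call(tool_call: Dict[str, Any]) -> Dict[str, Any]:
    """Execute one tool call and build the "tool" message to send back."""
    name = tool_call["function"]["name"]
    # Arguments arrive as a JSON-encoded string, not a dict.
    args = json.loads(tool_call["function"]["arguments"])
    func = REGISTRY.get(name)
    if func is None:
        payload: Dict[str, Any] = {"error": f"unknown tool: {name}"}
    else:
        payload = {"result": func(**args)}
    return {"role": "tool", "tool_call_id": tool_call["id"], "content": json.dumps(payload)}


if __name__ == "__main__":
    # Simulated tool call, shaped like one entry of a response's `tool_calls`.
    call = {
        "id": "call_1",
        "type": "function",
        "function": {"name": "celsius_to_fahrenheit", "arguments": '{"celsius": 100}'},
    }
    print(handle_tool_call(call))
    # {'role': 'tool', 'tool_call_id': 'call_1', 'content': '{"result": 212.0}'}
```

In a live integration, under the same assumption, you would send `TOOLS` with the request, read any `tool_calls` from the model’s reply, append each returned message to the conversation, and call the model again so it can use the results.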

In the Grok apps, Grok 4 is available through the “SuperGrok” plan ($30/month) and Grok 4 Heavy through SuperGrok Heavy ($300/month). API access is billed separately, per token, through xAI’s developer platform.

Ethics, Bias, and Environmental Impact

No powerful model is without trade-offs. Here’s what to watch:

- Bias and Content Moderation: Earlier versions of Grok generated problematic or politically charged content. xAI has since added filters, but content safety remains an active concern.
- Accessibility: The price point may limit access for independent researchers and small teams.
- Environmental Footprint: Training Grok 4 required massive compute power. xAI’s Colossus supercomputer raises valid questions about energy efficiency and sustainability.

Challenges and Limitations

While Grok 4 is impressive, it’s not without challenges:

- Speed: Latency can be noticeable, especially for the multi-agent “Heavy” model.
- Visual Reasoning: Although it accepts images, Grok 4’s vision capabilities still trail those of models such as Gemini and Claude Opus.
- Scalability: Managing collaborative agents at scale (in Grok 4 Heavy) is complex and still evolving.

What’s Next for Grok?

xAI has big plans:

- Specialized Models: Expect focused versions for coding, multimodal generation, and even video reasoning.
- Open-Source Releases: Smaller Grok variants may be open-sourced to support research and transparency.
- Human-AI Collaboration: Musk envisions Grok as a step toward AGI, capable of teaming with humans to solve tough scientific and societal problems.

FAQ

Q1: What makes Grok 4 different from previous large language models?

Grok 4’s reasoning-focused training and, in the Heavy version, its multi-agent architecture set it apart, with especially strong performance on mathematical and coding tasks.

Q2: How does Grok 4 handle real-time data?

Grok 4 integrates live data from platforms like X, supporting real-time analytics and decision-making.

Q3: What are the main ethical concerns with Grok 4?

Unfiltered outputs and potential bias require robust content moderation and ethical oversight.

Q4: Can developers integrate Grok 4 into their applications?

Yes. Grok 4 is available through xAI’s API, which supports structured outputs (like JSON), function calling, and text + image inputs.

Q5: What’s next for Grok 4?

xAI plans to release specialized models, enhance multimodal capabilities, and introduce open-source variants to foster community research.

For more in-depth AI guides and technical resources, visit Data Science Dojo’s blog.

Final Thoughts

Grok 4 is more than just another LLM—it’s xAI’s bold bet on the future of reasoning-first AI. With cutting-edge performance in math, code, and scientific domains, Grok 4 is carving out a unique space in the AI ecosystem.

Yes, it has limitations. But if you’re building advanced AI applications or exploring the frontiers of human-machine collaboration, Grok 4 is a model to watch—and maybe even build with.