Claude vs ChatGPT isn’t just another casual debate; it’s a comparison of two of the most advanced AI tools we use today. OpenAI’s ChatGPT, launched in late 2022, quickly became part of our daily routines, helping with everything from writing to coding.

Then came Anthropic’s Claude, designed to address some of the limitations people noticed in ChatGPT. Both tools bring unique strengths to the table, but how do they really compare? And where does Claude stand out enough to make you choose it over ChatGPT?

Let’s explore everything you need to know about this fascinating clash of AI giants.

What is Claude AI?

Before you get into the Claude vs ChatGPT debate, it’s important to understand both AI tools fully. So, let’s start with the basics—what is Claude AI?

Claude is Anthropic’s AI chatbot designed for natural, text-based conversations. Whether you need help editing content, getting clear answers to your questions, or even writing code, Claude is your go-to tool. Sounds familiar, right? It’s similar to ChatGPT in many ways, but don’t worry, we’ll explore their key differences shortly.

First, let’s lay the groundwork.

What is Anthropic AI?

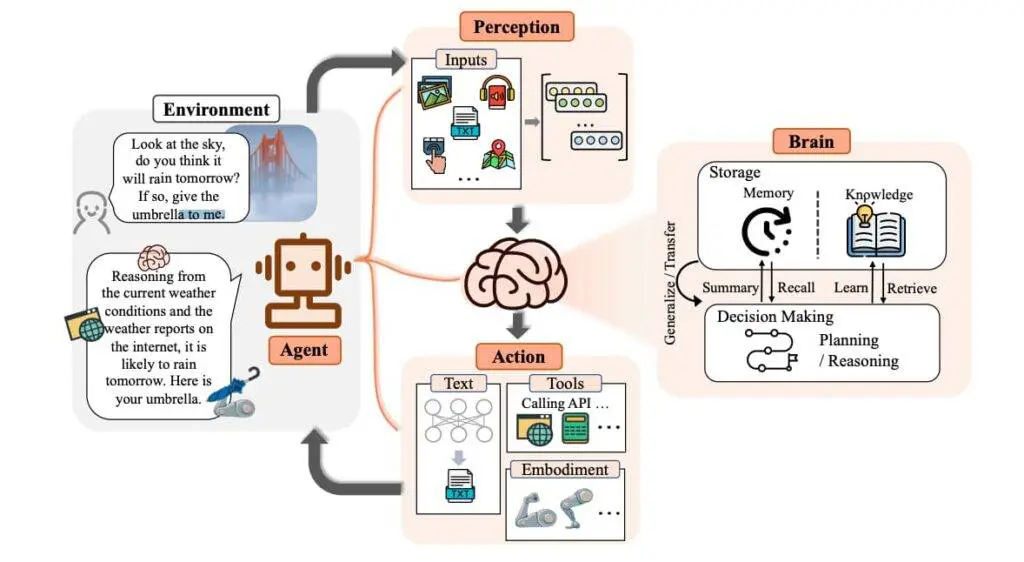

To understand Claude’s design and priorities, it’s essential to look at its parent company, Anthropic. Anthropic is the driving force behind Claude, and its mission centers on creating AI that is both safe and ethical.

Founded by seven former OpenAI employees, including Daniela and Dario Amodei, Anthropic was born out of a desire to address growing concerns about AI safety. Drawing on Daniela and Dario’s experience in developing GPT-3, the team set out to build an AI that puts safety first, which led to Claude.

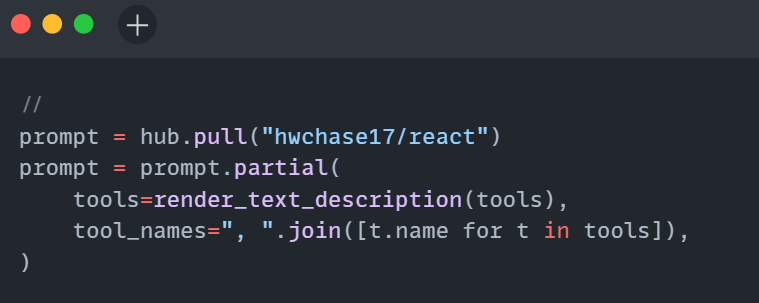

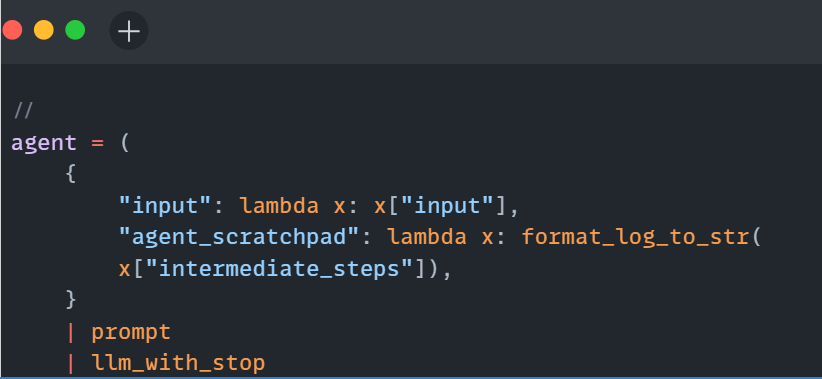

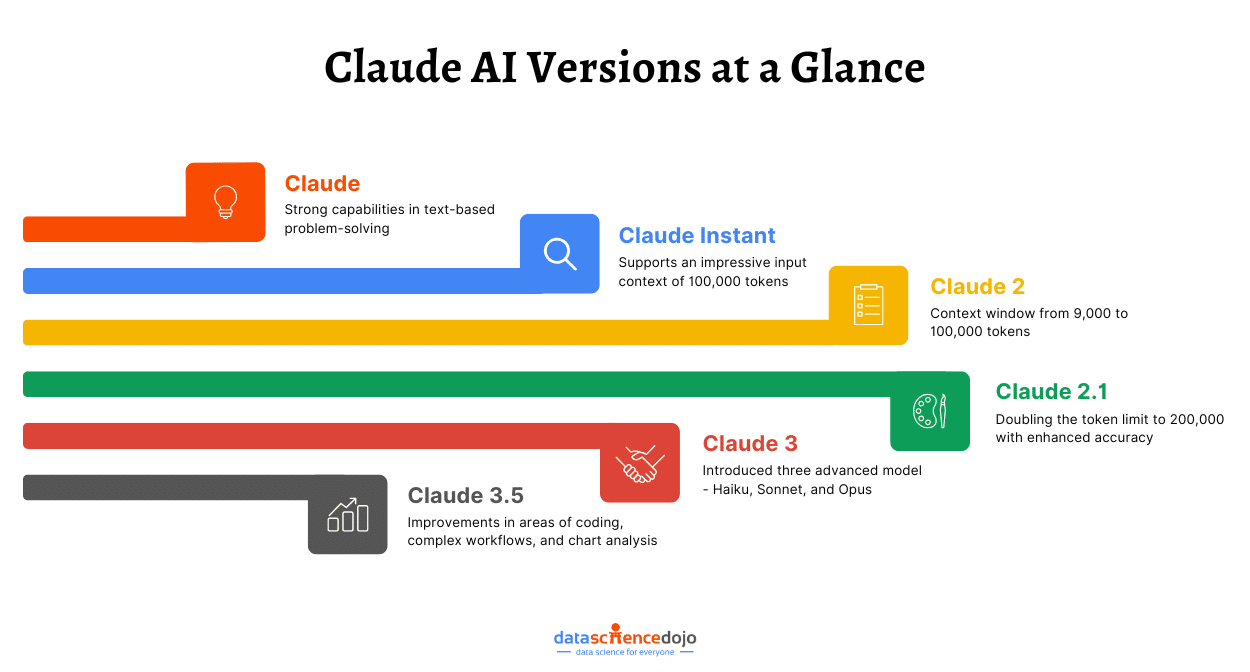

Versions of Claude AI

To fully answer the question, “What is Claude AI?” it’s important to explore its various versions, which include:

- Claude

- Claude Instant

- Claude 2

- Claude 2.1

- Claude 3

- Claude 3.5

Each version represents a step forward in Anthropic’s commitment to creating versatile and safe AI, with unique improvements and features tailored to specific needs. Let’s dive into the details of these versions and see how they evolved over time.

Claude

The journey of Claude AI began in March 2023 with the release of its first version. This initial model demonstrated strong capabilities in text-based problem-solving but faced limitations in areas like coding, mathematical reasoning, and handling complex logic. Despite these hurdles, Claude gained traction through integrations with platforms like Notion and Quora, enhancing tools like the Poe chatbot.

Claude Instant

Anthropic later introduced Claude Instant, a faster and more affordable alternative to the original. Although lighter in functionality, it still supports an impressive input context of 100,000 tokens (roughly 75,000 words), making it ideal for users seeking quick responses and streamlined tasks.

Claude 2

Released in July 2023, Claude 2 marked a significant upgrade by expanding the context window from 9,000 tokens to 100,000 tokens. It also introduced features like the ability to read and summarize documents, including PDFs, enabling users to tackle more complex assignments. Unlike its predecessor, Claude 2 was accessible to the general public.

Claude 2.1

This version built on Claude 2’s success, doubling the token limit to 200,000. With the capacity to process up to 500 pages of text, it offered users greater efficiency in handling extensive content. Additionally, Anthropic enhanced its accuracy, reducing the chances of generating incorrect information.

Claude 3

In March 2024, Anthropic released Claude 3, setting a new benchmark in AI capabilities. This version introduced three models: Haiku, Sonnet, and Opus. The Opus model supports a context window of 200,000 tokens, expandable to 1 million for specific applications. Claude 3’s strong performance on cognitive tasks and benchmark evaluations made it a standout in the AI landscape.

Claude 3.5

June 2024 brought the release of Claude 3.5 Sonnet, which showcased major improvements in areas like coding, complex workflows, chart analysis, and extracting information from images. This version also introduced Artifacts, a feature that generates and previews output such as SVG graphics or website designs in real time.

By October 2024, Anthropic unveiled an upgraded Claude 3.5 with the innovative “computer use” capability. This feature allowed the AI to interact with desktop environments, performing actions like moving the cursor, typing, and clicking buttons autonomously, making it a powerful tool for multi-step tasks.

Standout Features of Claude AI

The Claude vs ChatGPT debate could go on for a while, but Claude stands out with a few key features that set it apart.

Here’s a closer look at what makes it shine:

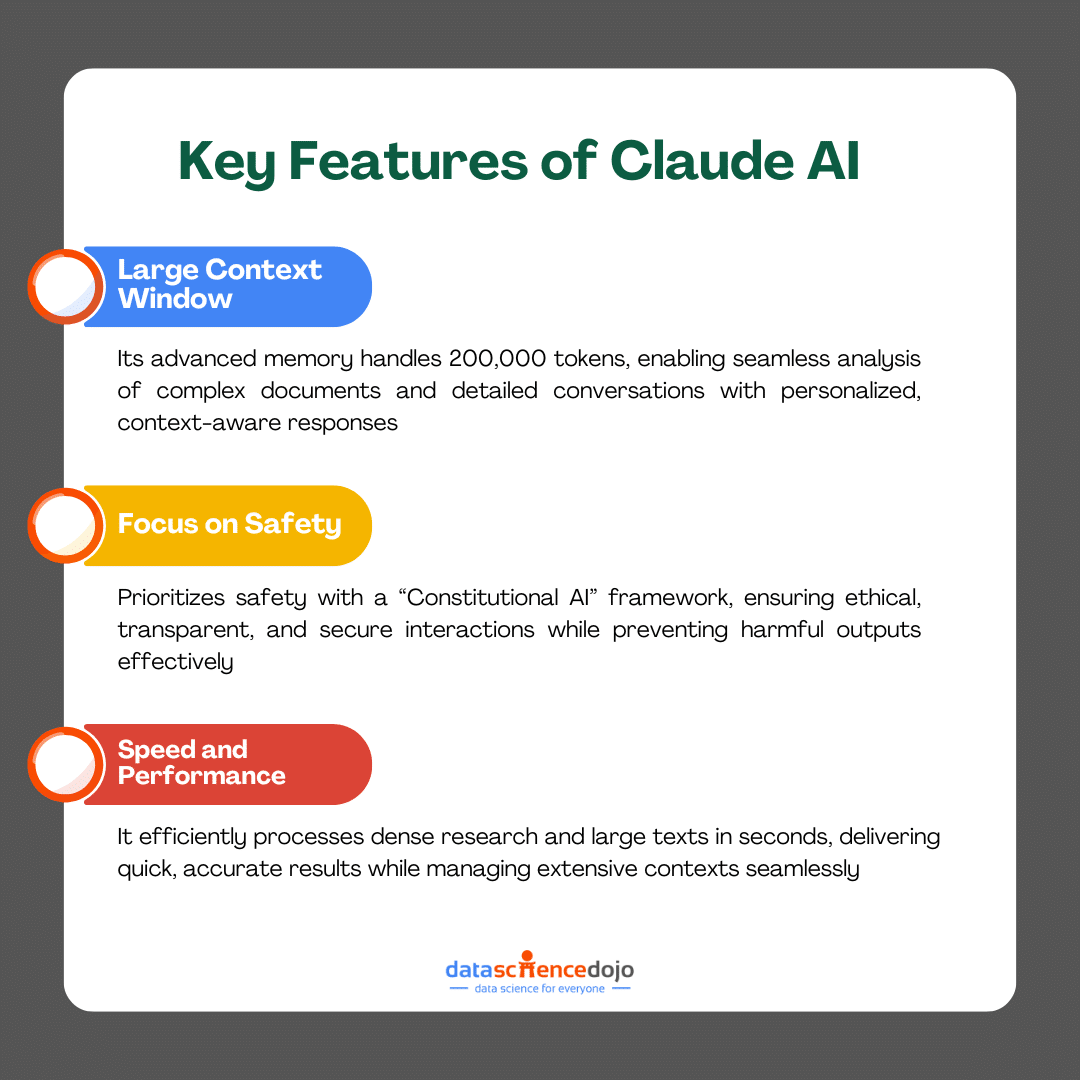

Large Context Window

Claude’s exceptional contextual memory allows it to process up to 200,000 tokens at once. This means it can manage lengthy conversations and analyze complex documents seamlessly. Whether you’re dissecting detailed reports or tackling intricate questions, Claude ensures personalized and highly relevant responses by retaining and processing extensive information effectively.
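To make the 200,000-token figure more concrete, here is a minimal sketch that estimates whether a document fits in the context window. It relies on the rule of thumb quoted earlier in this article (100,000 tokens is roughly 75,000 words) rather than a real tokenizer, so treat the numbers as approximations. The function names and the `reserve_for_reply` parameter are hypothetical, chosen only for illustration.

```python
# Rule of thumb from this article: 100,000 tokens is roughly 75,000 words.
# This is an approximation, not the output of an actual tokenizer.
WORDS_PER_TOKEN = 75_000 / 100_000  # 0.75


def estimate_tokens(text: str) -> int:
    """Rough token estimate based on a whitespace word count."""
    word_count = len(text.split())
    return round(word_count / WORDS_PER_TOKEN)


def fits_in_context(
    text: str,
    context_window: int = 200_000,   # window size cited in this article
    reserve_for_reply: int = 4_000,  # hypothetical headroom for the answer
) -> bool:
    """Check whether the text plus reply headroom fits in the window."""
    return estimate_tokens(text) + reserve_for_reply <= context_window


if __name__ == "__main__":
    report = "word " * 120_000  # stand-in for a 120,000-word report
    print(estimate_tokens(report))   # about 160,000 tokens by this estimate
    print(fits_in_context(report))   # True under the assumptions above
```

For real workloads, an actual token count from the provider’s tooling would be more reliable than a word-based estimate like this one.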

Focus on Safety

Safety is at the heart of Claude’s design. Using a “Constitutional AI” framework, it is trained to avoid harmful outputs and follow a written set of ethical principles. This commitment to responsible AI helps users trust Claude for transparent and secure interactions. Anthropic has also published the principles that make up this constitution, which gives users clarity on how the model is meant to behave.

Speed and Performance

Claude is built for efficiency. It processes dense research papers and large volumes of text in mere seconds, making it a go-to for users who need quick yet accurate results. Coupled with its ability to handle extensive contexts, Claude ensures you can manage demanding tasks without sacrificing time or quality.

What is ChatGPT?

To truly understand the Claude vs ChatGPT debate, you also need to know what ChatGPT is and what makes it so popular.

ChatGPT is OpenAI’s AI chatbot, designed to deliver natural, human-like conversations. Whether you need help writing an article, answering tricky questions, or just want a virtual assistant to chat with, ChatGPT has got you covered.

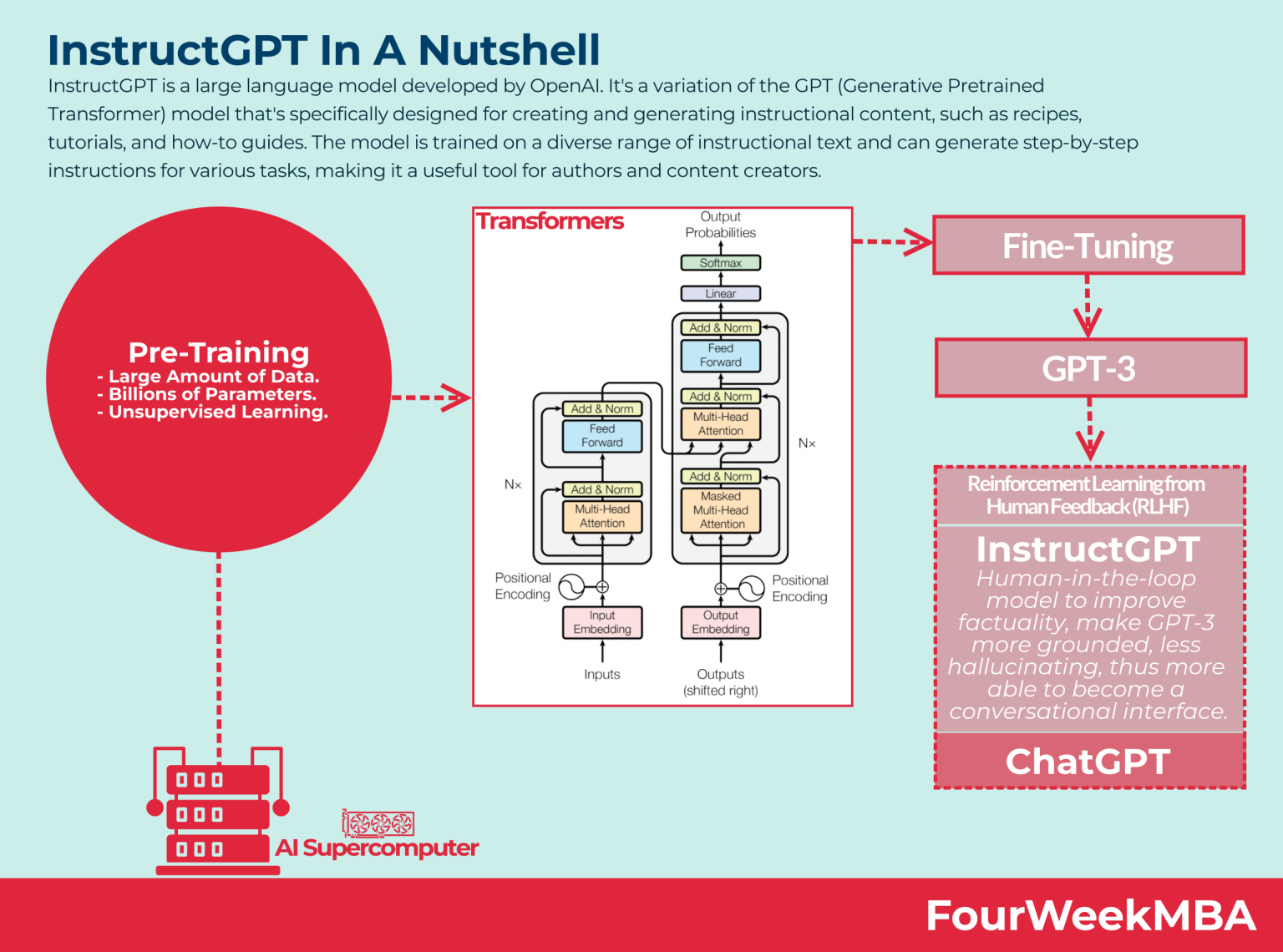

It’s built on the Generative Pre-trained Transformer (GPT) architecture: a model pre-trained on large amounts of text to predict what comes next, which is what lets it generate responses that feel relevant and natural. No wonder it’s become a go-to for everything from casual use to professional tasks.

Overview of OpenAI

So, who’s behind ChatGPT? That’s where OpenAI comes in. Founded in 2015, OpenAI is all about creating AI that’s not only powerful but also safe and beneficial for everyone. They’ve developed groundbreaking technologies, like the GPT series, to make advanced AI tools accessible to anyone—from casual users to businesses and developers.

With innovations like ChatGPT, OpenAI has completely changed the game, making AI tools more practical and useful than ever before.

ChatGPT Versions

Now that we’ve covered a bit about OpenAI, let’s explore the different versions of ChatGPT. The most notable active versions include:

- GPT-4

- GPT-4o

- GPT-4o Mini

With each new release, OpenAI has enhanced ChatGPT’s capabilities, refining its performance and adding new features.

Here’s a closer look at these latest active versions and what makes them stand out:

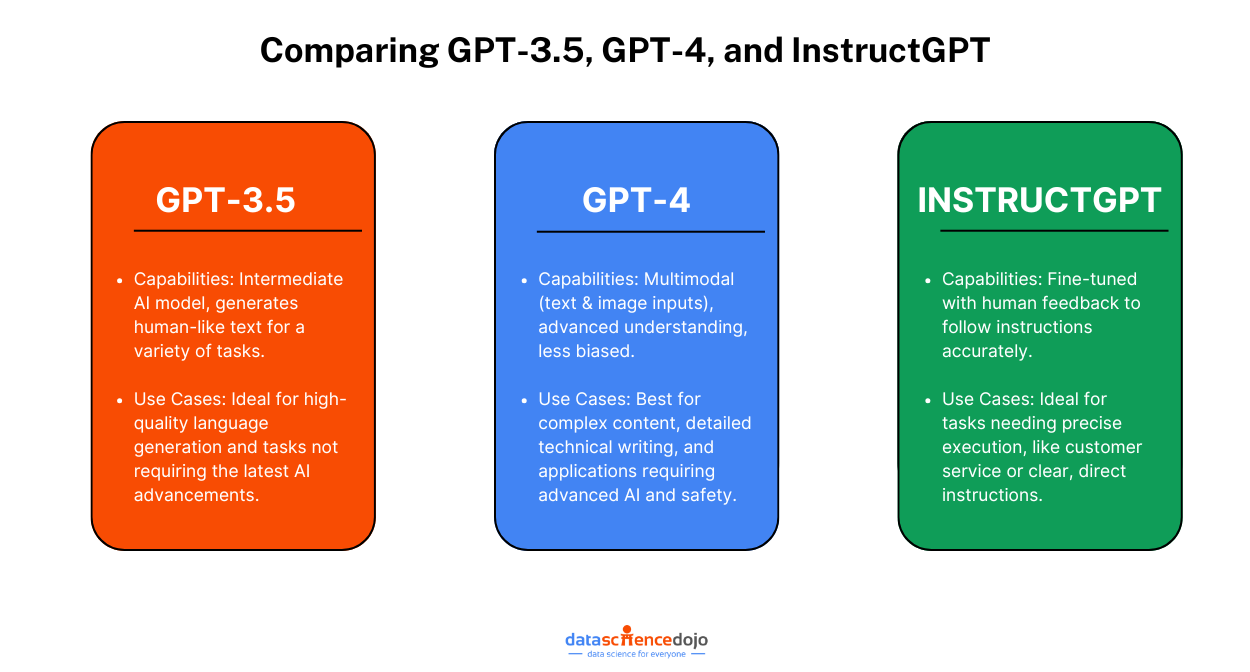

GPT-4 (March 2023): GPT-4 marked a major leap in ChatGPT’s abilities. Released with the ChatGPT Plus subscription, it offered a deeper understanding of complex queries, improved contextual memory, and the ability to handle a wider variety of topics. This made it the go-to version for more advanced and nuanced tasks.

GPT-4o (May 2024): Fast forward to May 2024, and we get GPT-4o. This version took things even further, allowing ChatGPT to process not just text but images, audio, and even video. It’s faster and more capable than GPT-4, with higher usage limits for paid subscriptions, making it a powerful tool for a wider range of applications.

GPT-4o Mini (July 2024): If you’re looking for a more affordable option, GPT-4o Mini might be the right choice. Released in July 2024, it’s a smaller, more budget-friendly version of GPT-4o. Despite its smaller size, it still packs many of the features of its bigger counterpart, making it a great choice for users who need efficiency without the higher price tag.

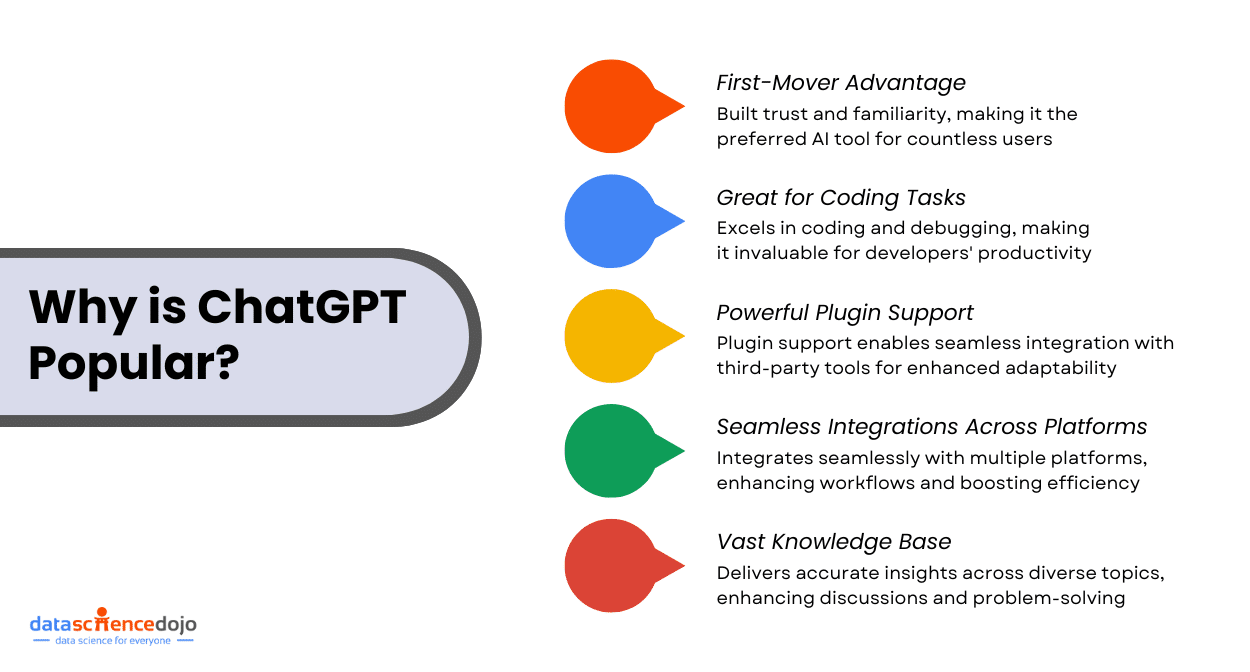

Why Is ChatGPT Everyone’s Favorite?

So, what makes ChatGPT such a favorite among users? There are several reasons why it has seamlessly integrated into everyday life and become a go-to tool for many.

Here’s why it’s earned such widespread fame:

First-Mover Advantage

One major reason is its first-mover advantage. Upon launch, it quickly became the go-to conversational AI tool, earning widespread trust and adoption. As the first AI many users interacted with, it helped build confidence in relying on artificial intelligence, creating a sense of comfort and familiarity. For countless users, ChatGPT became the AI they leaned on most, leading to a natural preference for it as their tool of choice.

Great for Coding Tasks

In addition to its early success, ChatGPT’s versatility shines through, particularly for developers. It excels in coding tasks, helping users generate code snippets and troubleshoot bugs with ease. Whether you’re a beginner or an experienced programmer, ChatGPT’s ability to quickly deliver accurate and functional code makes it an essential tool for developers looking to save time and enhance productivity.

Powerful Plugin Support

Another reason ChatGPT has become so popular is its powerful plugin support. This feature allows users to integrate the platform with a variety of third-party tools, customizing it to fit specific needs—whether it’s analyzing data, creating content, or streamlining workflows. This flexibility makes ChatGPT highly adaptable, empowering users to take full control over their experience.

Seamless Integrations Across Platforms

Moreover, ChatGPT’s ability to work seamlessly across multiple platforms is a key factor in its widespread use. Whether connecting with project management tools, CRM systems, or productivity apps, ChatGPT integrates effortlessly with the tools users already rely on. This smooth interoperability boosts efficiency and simplifies workflows, making everyday tasks easier to manage.

Vast Knowledge Base

At the core of ChatGPT’s appeal is its vast knowledge base. Trained on a wide range of topics, ChatGPT provides insightful, accurate, and detailed information—whether you’re seeking quick answers or diving deep into complex discussions. Its comprehensive understanding across various fields makes it a valuable resource for users in virtually any industry.

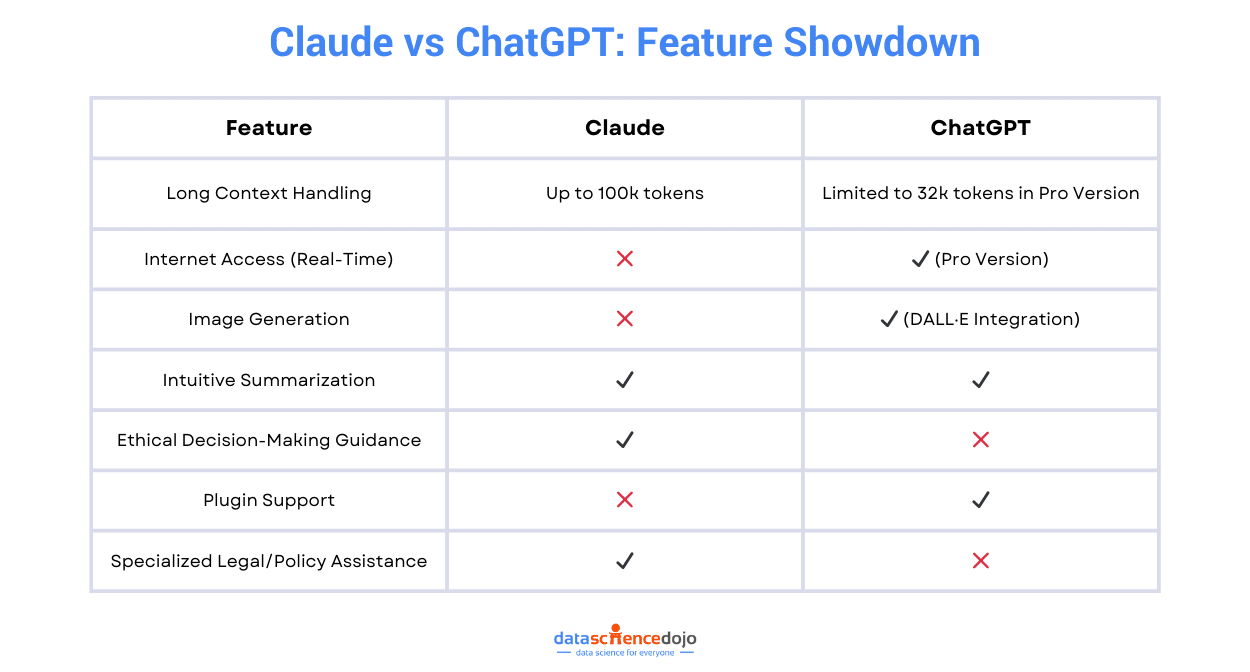

Head-to-Head Comparison: Claude vs ChatGPT

When considering Claude vs ChatGPT, it’s essential to understand how these two AI tools stack up against each other. So, what is Claude AI in comparison to ChatGPT? While both offer impressive capabilities, they differ in aspects like memory, accuracy, user experience, and ethical design.

Here’s a quick comparison to help you choose the best tool for your needs.

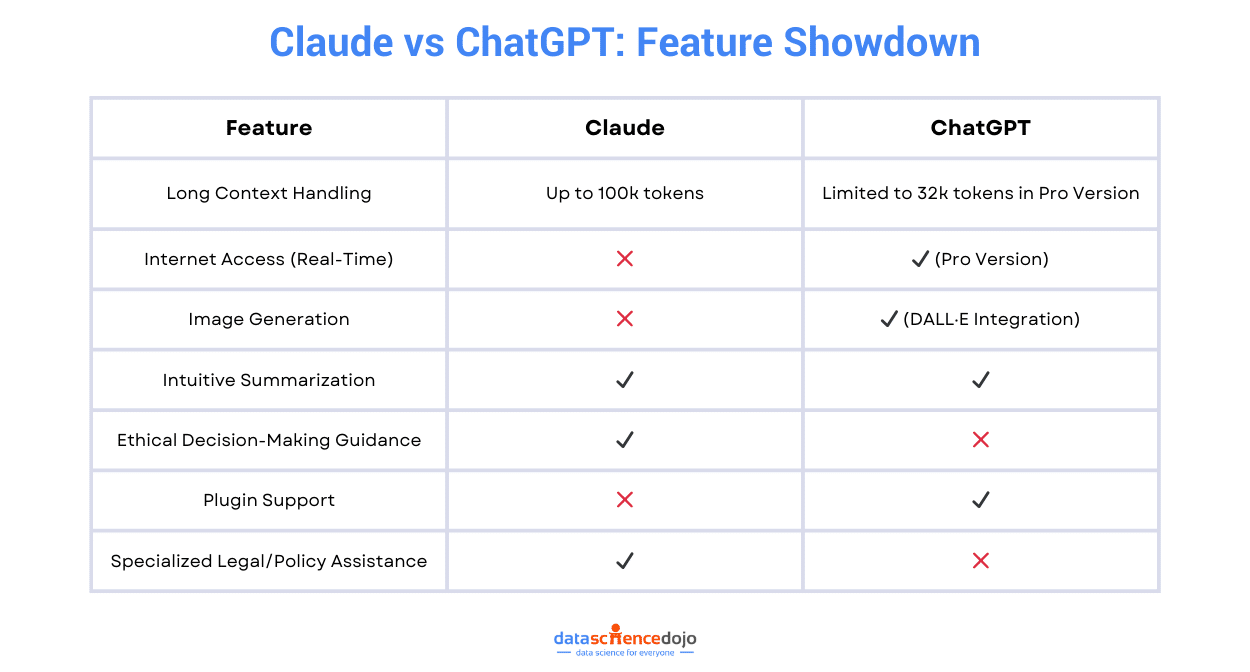

| Feature | Claude AI | ChatGPT |

| Contextual Memory & Window | Larger memory window (200,000 tokens, up to 1,000,000 tokens for specific use cases) | Shorter context window (128,000 tokens, GPT-4) |

| Accuracy | Generally, more accurate in ethical and fact-based tasks | Known for occasional inaccuracies (hallucinations) |

| User Experience | Clean, simple interface ideal for casual users | More complex interface, but powerful and customizable for advanced users |

| AI Ethics and Safety | Focus on “safe AI” with strong ethical design and transparency | Uses safeguards, but has faced criticism for biases and potential harm |

| Response Speed | Slightly slower due to complex safety protocols | Faster responses, especially with smaller prompts |

| Content Quality | High-quality, human-like content generation | Highly capable, but sometimes struggles with nuance in content |

| Coding Capabilities | Good for basic coding tasks, limited compared to ChatGPT | Excellent for coding, debugging, and development support |

| Pricing | $20/month for Claude Pro | $20/month for ChatGPT Plus |

| Internet Access | No | Yes |

| Image Generation | No | Yes (via DALL·E) |

| Supported Languages | Officially supports English, Japanese, Spanish, and French; additional languages supported (e.g., Azerbaijani) | 95+ languages |

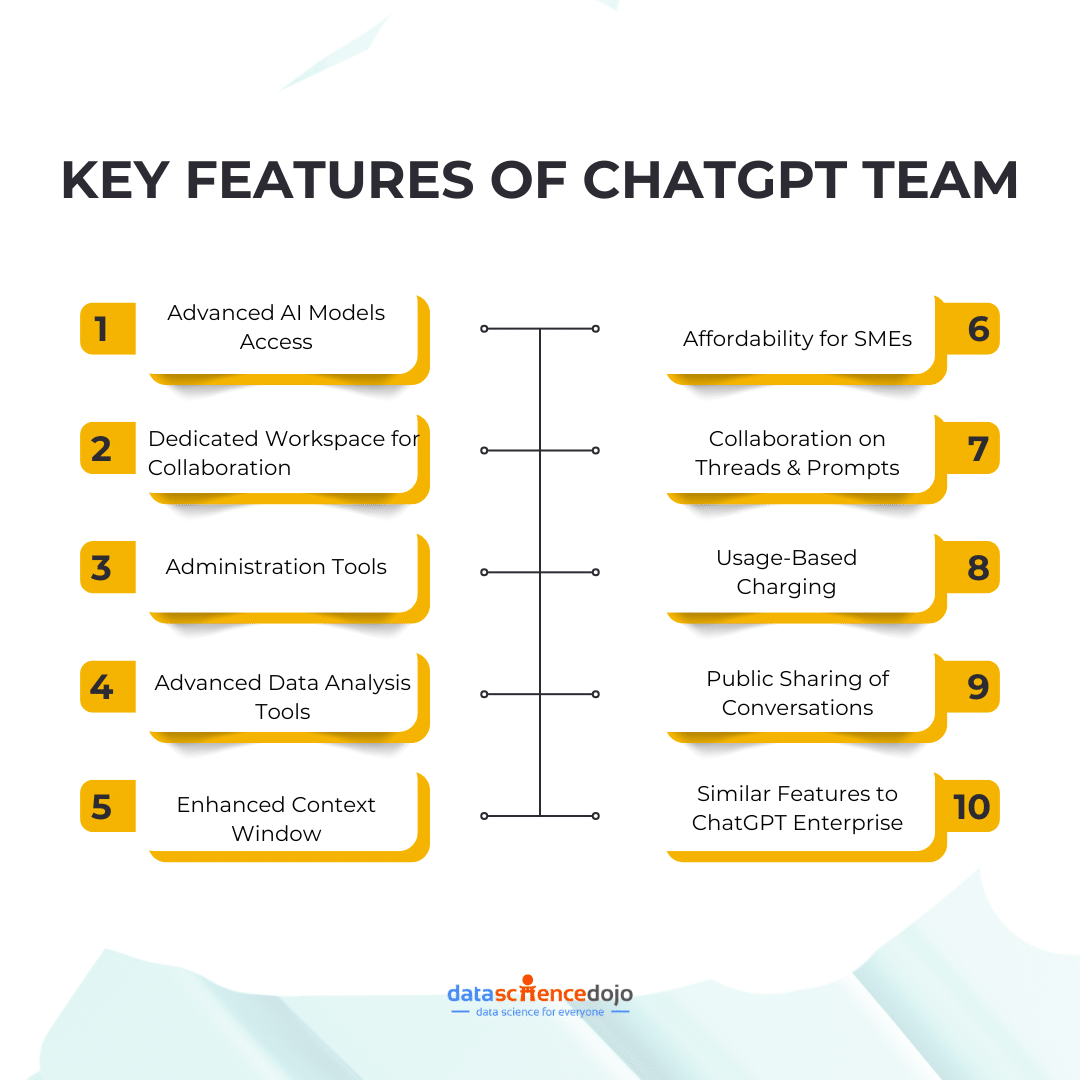

| Team Plans | $30/user/month; includes Projects for collaboration | $30/user/month; includes workspace features and shared custom GPTs |

| API Pricing (Input) | $15 per 1M input tokens (Claude 3 Opus) | $5 per 1M input tokens (GPT-4) |

| API Pricing (Output) | $75 per 1M output tokens (Claude 3 Opus) $3 per 1M input tokens (Claude 3.5 Sonnet) $0.25 per 1M input tokens (Claude 3 Haiku) $5 per 1M input tokens (GPT-4o) $15 per 1M output tokens (GPT-4o) |

$60 per 1M output tokens (GPT-4) $1.50 per 1M output tokens (GPT-3.5 Turbo) $15 per 1M output tokens (GPT-3.5 Turbo) $30 per 1M input tokens (GPT-4) $75 per 1M output tokens (GPT-4) |
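Per-token pricing can be hard to picture, so here is a rough sketch of how the API prices in the table translate into the cost of a single request. It uses only the Claude 3 Opus and GPT-4o prices quoted above, which may be out of date. The dictionary keys, the `Pricing` class, and the `request_cost` function are hypothetical labels for illustration, not official model identifiers or library calls.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    input_per_million: float   # USD per 1M input tokens
    output_per_million: float  # USD per 1M output tokens


# Prices as quoted in the comparison table; check current pricing before relying on them.
# The keys are illustrative labels, not official API model names.
PRICES = {
    "claude-3-opus": Pricing(input_per_million=15.00, output_per_million=75.00),
    "gpt-4o": Pricing(input_per_million=5.00, output_per_million=15.00),
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimated USD cost of one request at the listed per-token prices."""
    price = PRICES[model]
    total = (
        input_tokens * price.input_per_million
        + output_tokens * price.output_per_million
    )
    return total / 1_000_000


if __name__ == "__main__":
    # Example: summarizing a long report (50,000 tokens in, 2,000 tokens out).
    for model in PRICES:
        print(f"{model}: ${request_cost(model, 50_000, 2_000):.2f}")
```

Under these assumptions, the same 50,000-token summarization job costs about $0.90 with Claude 3 Opus and about $0.28 with GPT-4o, which shows how quickly input-heavy workloads add up on the larger models.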

Claude vs ChatGPT: Choosing the Best AI Tool for Your Needs

In the debate of Claude vs ChatGPT, selecting the best AI tool ultimately depends on what aligns most with your specific needs. By now, it’s clear that both Claude and ChatGPT offer unique strengths, making them valuable in different scenarios.

To truly benefit from these tools, it’s essential to evaluate which one stands out as the best AI tool for your requirements.

Let’s break it down by the type of tasks and users who would benefit most from each tool.

Students & Researchers

Claude

Claude’s strength lies in its ability to handle lengthy and complex texts. With a large context window (up to 200,000 tokens), it can process and retain information from long documents, making it perfect for students and researchers working on academic papers, research projects, or lengthy reports. Plus, its ethical AI framework helps avoid generating misleading or harmful content, which is a big plus when working on sensitive topics.

ChatGPT

ChatGPT, on the other hand, is excellent for interactive learning. Whether you’re looking for quick answers, explanations of complex concepts, or even brainstorming ideas for assignments, ChatGPT shines. It also offers plugin support for tasks like math problem-solving or citation generation, which can enhance the academic experience. However, its shorter context window can make it less effective for handling lengthy documents.

Recommendation: If you’re diving deep into long texts or research-heavy projects, Claude’s your best bet. For quick, interactive learning or summarizing, ChatGPT is the way to go.

Content Writers

Claude

For long-form content creation, Claude truly excels. Its ability to remember context throughout lengthy articles, blog posts, and reports makes it a strong choice for professional writing. Whether you’re crafting research-backed pieces or marketing content, Claude provides depth, consistency, and a safety-first approach to ensure content stays on track and appropriate.

ChatGPT

ChatGPT is fantastic for short-form, creative writing. From generating social media posts to crafting email campaigns, it’s quick and versatile. Plus, with its integration with tools like DALL·E for image generation, it adds a multimedia edge to your creative projects. Its plugin support for SEO and language refinement further enhances its utility for content creators.

Recommendation: Use Claude for detailed, research-driven writing projects. Turn to ChatGPT for fast, creative content, and when you need to incorporate multimedia elements.

Business Professionals

Claude

For business professionals, Claude is an invaluable tool when it comes to handling large reports, financial documents, or legal papers. Its ability to process detailed information and provide clear summaries makes it perfect for professionals who need precision and reliability. Plus, its ethical framework adds trustworthiness, especially when working in industries that require compliance or confidentiality.

ChatGPT

ChatGPT is more about streamlining day-to-day business operations. With integrations for tools like Slack, Notion, and Trello, it helps manage tasks, communicate with teams, and even draft emails or meeting notes. Its ability to support custom plugins also means you can tailor it to your specific business needs, making it a great choice for enhancing productivity and collaboration.

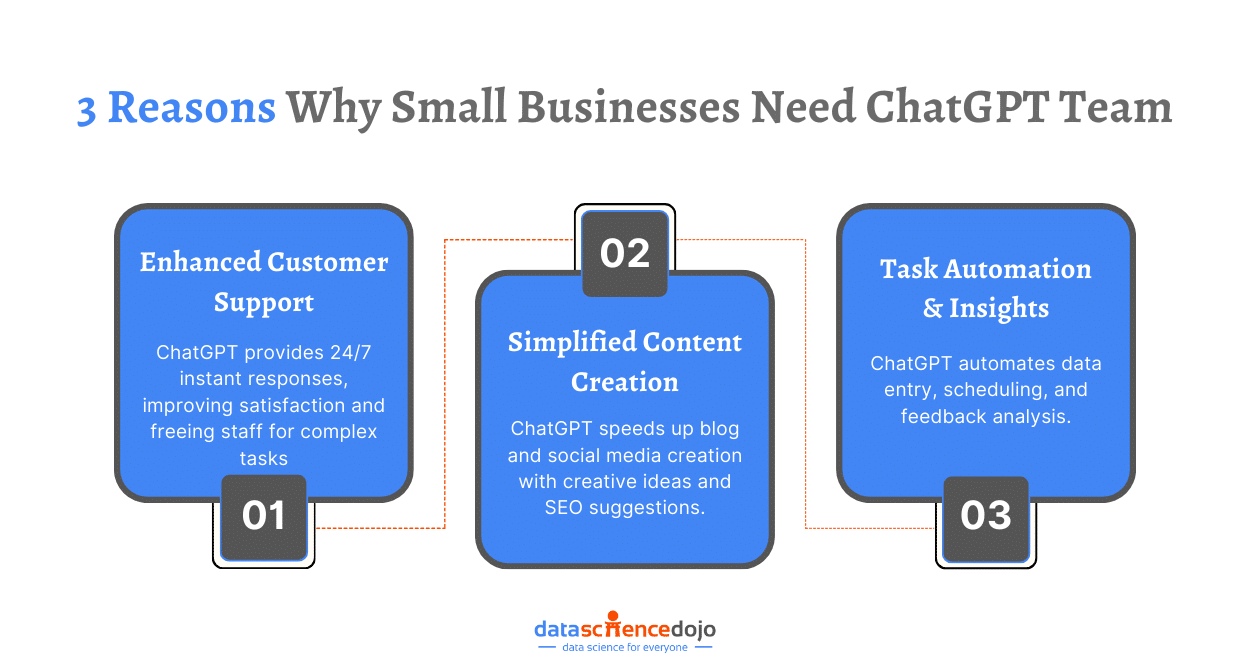

Recommendation: Go with Claude for detailed documents and data-heavy tasks. For everyday productivity, task management, and collaborative workflows, ChatGPT is the better option.

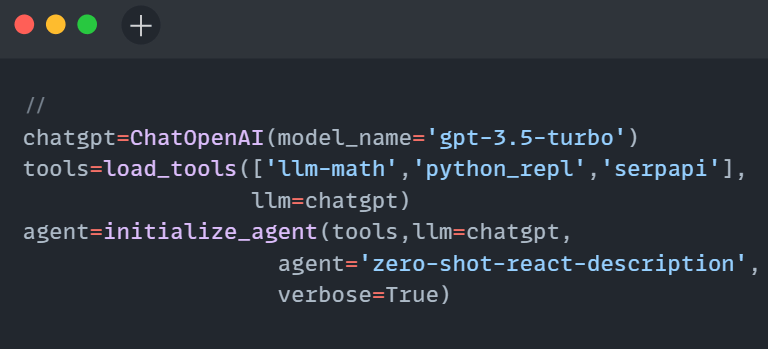

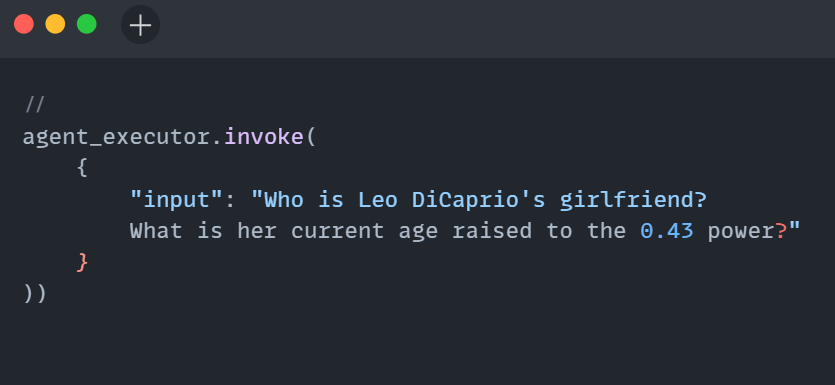

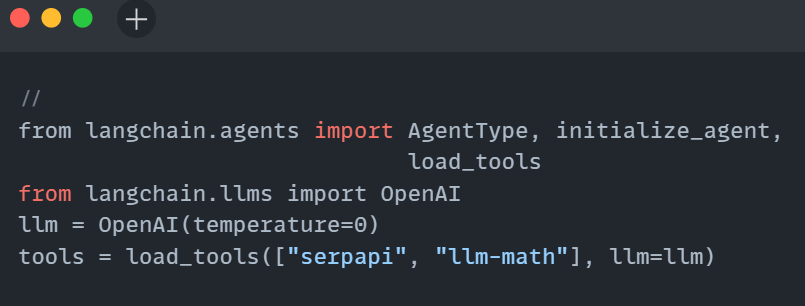

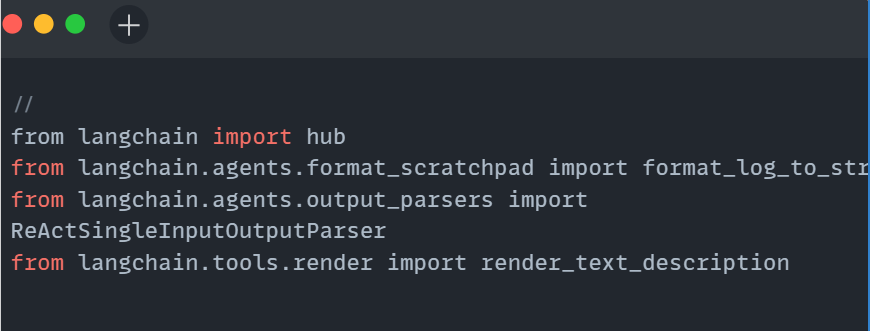

Developers & Coders

Claude

For developers working on large-scale projects, Claude is highly effective. Its long context retention allows it to handle extensive codebases and technical documentation without losing track of important details. This makes it ideal for reviewing large projects or brainstorming technical solutions.

ChatGPT

ChatGPT, on the other hand, is perfect for quick coding tasks. Whether you’re debugging, writing scripts, or learning a new language, ChatGPT is incredibly helpful. With its plugin support, including integrations with GitHub, it also facilitates collaboration with other developers and teams, making it a go-to for coding assistance and learning.

Recommendation: Use Claude for large-scale code reviews and complex project management. Turn to ChatGPT for coding support, debugging, and quick development tasks.

To Sum it Up…

In the end, choosing the best AI tool — whether it’s Claude or ChatGPT — really depends on what you need from your AI. Claude is a powerhouse for tasks that demand large-scale context retention, ethical considerations, and in-depth analysis.

With its impressive 200,000-token context window, it’s the go-to option for researchers, content writers, business professionals, and developers handling complex, data-heavy work. If your projects involve long reports, academic research, or creating detailed, context-rich content, Claude stands out as the more reliable tool.

On the flip side, ChatGPT excels in versatility. It offers incredible speed, creativity, and a broad range of integrations that make it perfect for dynamic tasks like brainstorming, coding, or managing day-to-day business operations. It’s an ideal choice for anyone needing quick answers, creative inspiration, or enhanced productivity through plugin support.

So, what’s the final verdict on Claude vs ChatGPT? If you’re after deep context understanding, safe, ethical AI practices, and the ability to handle long-form content, Claude is your best AI tool. However, if you prioritize versatility, creative tasks, and seamless integration with other tools, ChatGPT will be the better fit.

To learn about LLMs and their practical applications – check out our LLM Bootcamp today!