OpenAI’s latest marvel, GPT-4o, is here, and it’s making waves in the AI community. This model is not just another iteration; it’s a significant leap toward making artificial intelligence feel more human. GPT-4o has been designed to interact with us in a way that’s closer to natural human communication.

In this blog post, we’ll dive into what makes GPT-4o special: how it was trained, how it performs, its key features, how its API compares with GPT-4 Turbo, some advanced use cases, and, finally, why this model is a game-changer.

How is GPT-4o Trained?

OpenAI has not published full details of the process, but GPT-4o was trained on massive datasets that include text, images, and audio.

Unlike its predecessors, which relied primarily on text, GPT-4o was trained across multiple modalities: written text, spoken language, and visual inputs. By training on these diverse data types, GPT-4o developed a more nuanced understanding of context, tone, and emotional subtleties.

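Earlier versions of ChatGPT’s Voice Mode chained three separate models: one transcribed speech to text, GPT-3.5 or GPT-4 produced a text reply, and a third converted that reply back to audio. GPT-4o instead uses a single neural network that processes all inputs and outputs, so it handles text, vision, and audio seamlessly. This end-to-end training approach allows GPT-4o to perceive and generate human-like interactions more effectively than previous models.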

It can recognize voices, understand visual cues, and respond with appropriate emotions, making the interaction feel natural and engaging.

How Does GPT-4o Perform?

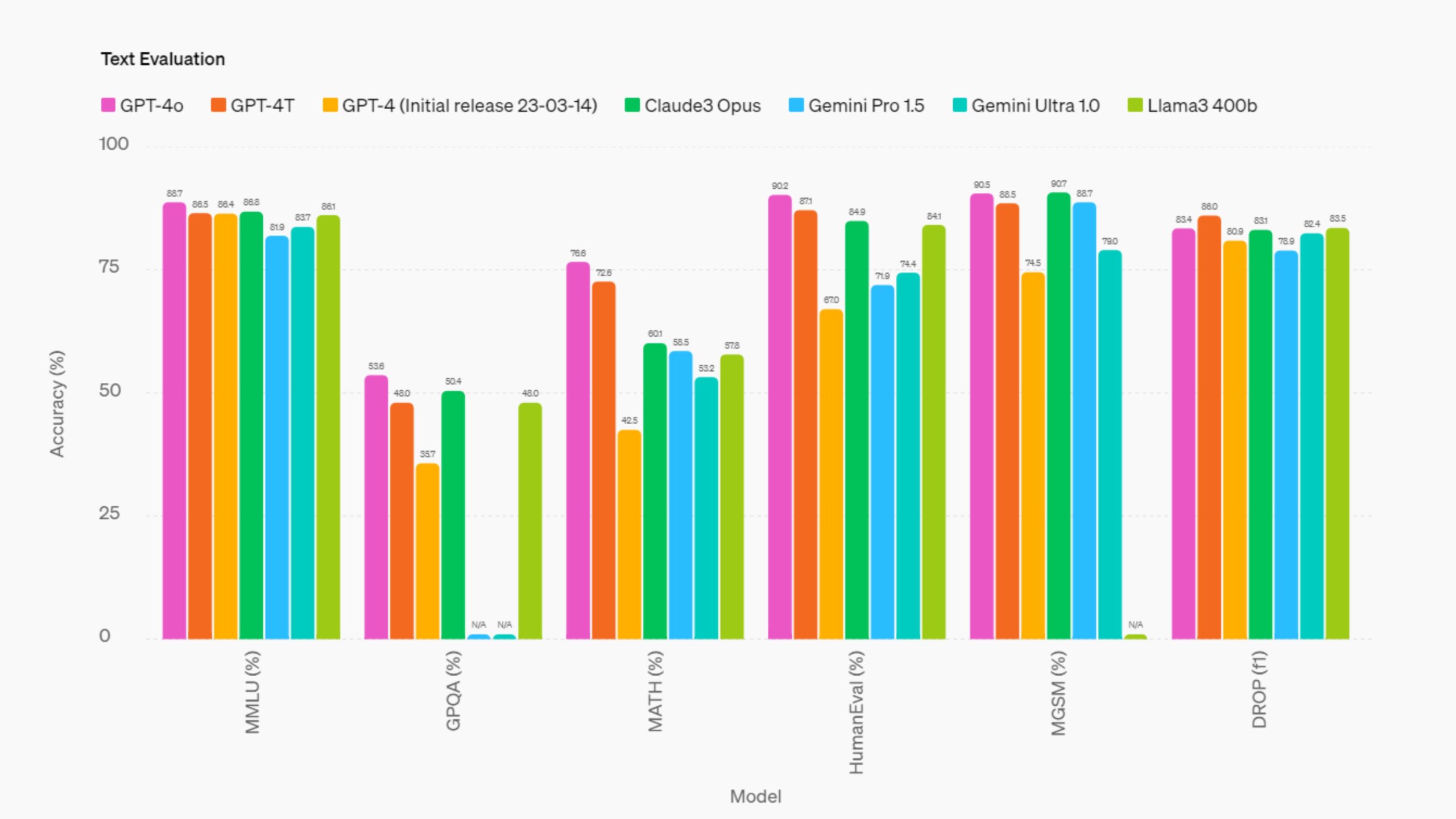

According to benchmark results self-reported by OpenAI, GPT-4o scores similar to or slightly better than other leading models, including earlier GPT-4 versions, Anthropic’s Claude 3 Opus, Google’s Gemini, and Meta’s Llama 3.

Text Evaluation

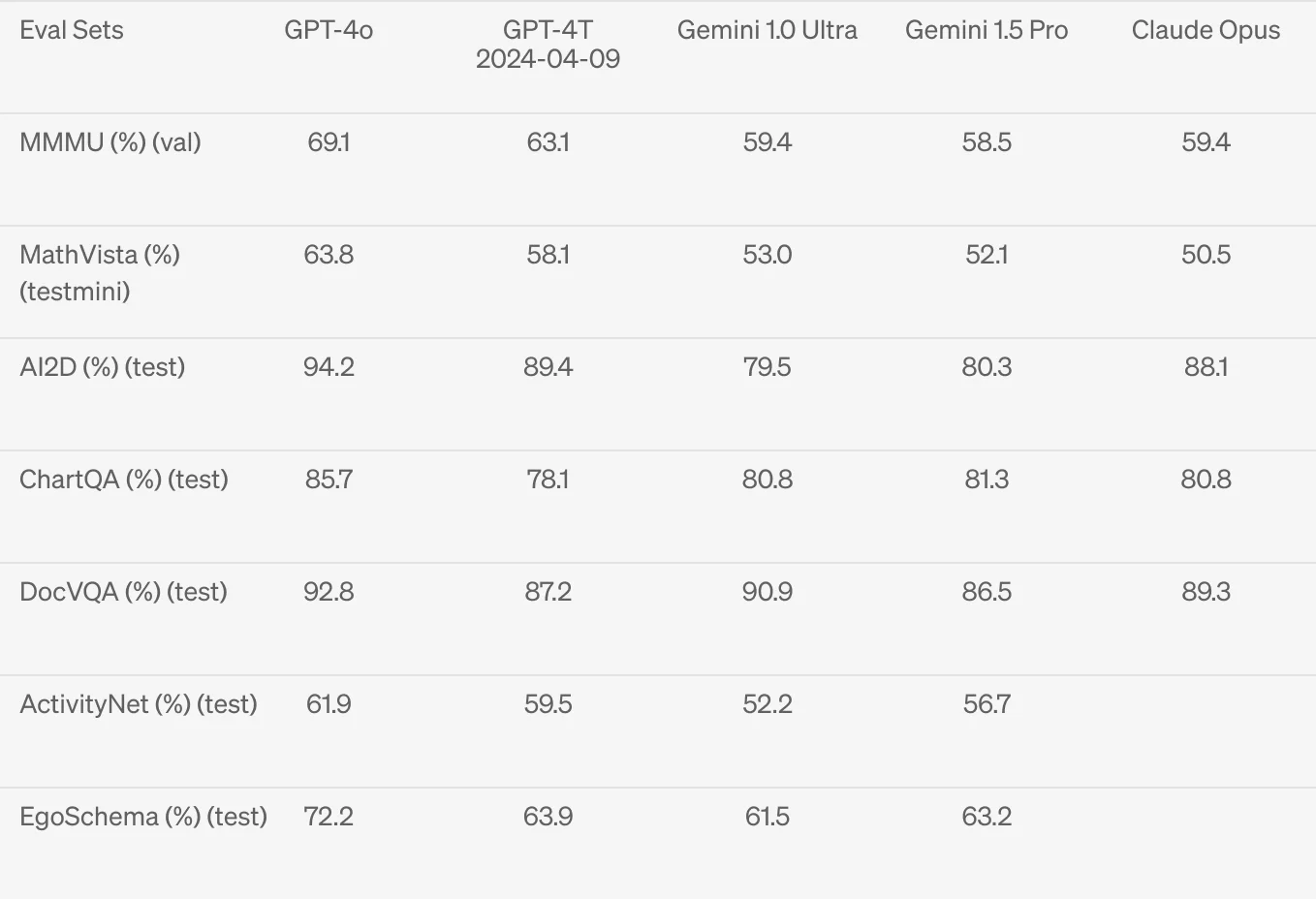

Visual Perception

According to OpenAI, GPT-4o also achieves state-of-the-art performance on visual perception benchmarks.

Features of GPT-4o

1. Vision

GPT-4o’s vision capabilities are impressive. It can interpret images such as photos, screenshots, and charts, which makes it useful for applications that require image recognition and analysis. The model understands visual context and can describe images accurately, and OpenAI has also demonstrated it creating visual content.
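
For developers, the same image understanding is available through the API. The sketch below builds a Chat Completions request body that pairs a text question with an image embedded as a base64 data URL. The helper name `build_vision_request` is hypothetical, and the body still has to be sent with an API client; the code itself makes no network call.

```python
import base64
import json


def build_vision_request(image_bytes: bytes, question: str,
                         mime_type: str = "image/png",
                         model: str = "gpt-4o") -> dict:
    """Build a Chat Completions request body pairing a text prompt with one image.

    Hypothetical helper: the image is embedded as a base64 data URL inside an
    `image_url` content part, next to a `text` content part.
    """
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": question},
                    {
                        "type": "image_url",
                        "image_url": {"url": f"data:{mime_type};base64,{encoded}"},
                    },
                ],
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder bytes; in practice, read them from an image file on disk.
    body = build_vision_request(b"<image bytes>", "Describe this image in one sentence.")
    print(json.dumps(body, indent=2))
```

With OpenAI’s official Python library (v1 or later), a body like this can be passed as `client.chat.completions.create(**body)`. Request formats evolve, so it is worth checking the current API reference before relying on this shape.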

2. Memory

One of the standout features available with GPT-4o in ChatGPT is memory. ChatGPT can retain information across conversations, which lets it maintain context and provide more personalized responses. This memory feature enhances its ability to engage in meaningful and coherent conversations.
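
Memory is a ChatGPT feature rather than something the API does for you: each Chat Completions request is stateless, so an application that wants GPT-4o to "remember" earlier turns has to resend them. A minimal sketch, assuming a hypothetical `Conversation` helper that keeps history on the client and uses a character budget as a rough stand-in for token counting:

```python
from dataclasses import dataclass, field


@dataclass
class Conversation:
    """Keeps chat history on the client so every request carries prior context.

    Hypothetical helper for illustration. max_chars is a rough stand-in for a
    token budget; production code would count tokens instead of characters.
    """

    system_prompt: str
    max_chars: int = 4000
    turns: list = field(default_factory=list)

    def add(self, role: str, content: str) -> None:
        """Append a user or assistant turn, then trim old turns if needed."""
        self.turns.append({"role": role, "content": content})
        self._trim()

    def _trim(self) -> None:
        # Drop the oldest turns until the history fits the budget,
        # but always keep the most recent turn.
        while (len(self.turns) > 1
               and sum(len(t["content"]) for t in self.turns) > self.max_chars):
            self.turns.pop(0)

    def messages(self) -> list:
        """Full message list to send: system prompt first, never trimmed."""
        return [{"role": "system", "content": self.system_prompt}, *self.turns]


if __name__ == "__main__":
    chat = Conversation("You are a helpful assistant.")
    chat.add("user", "My name is Priya.")
    chat.add("assistant", "Nice to meet you, Priya!")
    chat.add("user", "What is my name?")
    # All three turns are resent with the system prompt, so the model
    # can answer from context.
    print(len(chat.messages()))  # prints 4
```

Dropping the oldest turns is the simplest policy; a fuller implementation might summarize discarded turns instead of losing them outright.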

3. Advanced Data Analysis

GPT-4o’s data analysis capabilities are robust. In ChatGPT, it can analyze uploaded files such as spreadsheets by writing and running code, then summarize insights, create charts, and generate detailed reports. This feature is valuable for businesses and researchers who need to analyze complex data efficiently.

4. 50 Languages

GPT-4o supports 50 languages, making it a versatile tool for global communication. Its multilingual capabilities allow it to interact with users from different linguistic backgrounds, broadening its applicability and accessibility.

5. GPT Store

The GPT Store lets users discover and use custom GPTs: versions of ChatGPT tailored to specific tasks with their own instructions, knowledge, and tools, built by OpenAI and the community. With the GPT-4o launch, OpenAI opened the store to free ChatGPT users, so more people can customize their AI experience according to their needs.

API – Compared to GPT-4 Turbo

GPT-4o is now accessible through an API for developers looking to scale their applications with cutting-edge AI capabilities. Compared to GPT-4 Turbo, GPT-4o is:

1. 2x Faster

GPT-4o operates twice as fast as GPT-4 Turbo. This increased speed enhances user experience by providing quicker responses and reducing latency in applications that require real-time interaction.
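
OpenAI’s speed figure comes from its own measurements, so if latency matters for your application, it is worth timing both models on your own prompts. A minimal, standard-library-only timing harness, assuming you wrap each API request in a zero-argument function (the `send_request` name in the comment is hypothetical, not part of any SDK):

```python
import statistics
import time
from typing import Callable


def median_latency(call: Callable[[], object], runs: int = 5) -> float:
    """Call `call` `runs` times and return the median wall-clock latency in seconds.

    The median is less sensitive than the mean to one unusually slow request.
    """
    if runs < 1:
        raise ValueError("runs must be at least 1")
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        call()
        timings.append(time.perf_counter() - start)
    return statistics.median(timings)


if __name__ == "__main__":
    # Stand-in workload; replace with zero-argument functions that send the
    # same prompt to each model, e.g. lambda: send_request("gpt-4o", prompt)
    # where send_request is your own (hypothetical) client wrapper.
    print(f"{median_latency(lambda: time.sleep(0.05)):.3f} s")
```

Using the same prompts, region, and time window for both models keeps the comparison fair, since network conditions and server load also affect latency.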

2. 50% Cheaper

Using the GPT-4o API is cost-effective: it is 50% cheaper than GPT-4 Turbo. This affordability makes it accessible to a wider range of users, from small businesses to large enterprises.
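
To see what "50% cheaper" means in practice, the arithmetic below uses the launch list prices from May 2024: $5 per million input tokens and $15 per million output tokens for GPT-4o, versus $10 and $30 for GPT-4 Turbo. Prices change over time, so treat these figures as an example and check OpenAI’s pricing page before budgeting. The workload numbers are made up for illustration.

```python
# Launch list prices in USD per 1M tokens (May 2024); check OpenAI's
# pricing page for current figures before relying on these.
PRICES = {
    "gpt-4o": {"input": 5.00, "output": 15.00},
    "gpt-4-turbo": {"input": 10.00, "output": 30.00},
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimated USD cost of one request, from per-million-token prices."""
    price = PRICES[model]
    return (input_tokens * price["input"] + output_tokens * price["output"]) / 1_000_000


if __name__ == "__main__":
    # Illustrative workload: 1,000 requests of 2,000 input and 500 output tokens each.
    for model in PRICES:
        total = 1_000 * request_cost(model, 2_000, 500)
        print(f"{model}: ${total:.2f}")
```

For this workload the script prints $17.50 for GPT-4o and $35.00 for GPT-4 Turbo, the 2:1 ratio behind the headline figure.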

3. 5x Higher Rate Limits

The API also offers five times higher rate limits than GPT-4 Turbo. This means applications can send more requests and tokens per minute before being throttled, improving efficiency and scalability for high-demand use cases.
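
Higher rate limits raise the ceiling, but a busy application can still receive HTTP 429 (rate limit) responses. A common client-side pattern is to retry with exponential backoff and random jitter. The sketch below is generic: `RateLimitError` is a hypothetical stand-in for whatever exception your API client raises on a 429, and the default delays are illustrative, not OpenAI recommendations.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RateLimitError(Exception):
    """Hypothetical stand-in for the exception your client raises on HTTP 429."""


def with_backoff(call: Callable[[], T], max_attempts: int = 5,
                 base_delay: float = 1.0, max_delay: float = 30.0,
                 sleep: Callable[[float], None] = time.sleep) -> T:
    """Retry `call` on RateLimitError using exponential backoff with full jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return call()
        except RateLimitError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the error to the caller
            # The delay ceiling doubles each attempt (1 s, 2 s, 4 s, ... with the
            # default base_delay), capped at max_delay; sleeping a random amount
            # below it keeps many clients from retrying in lockstep.
            ceiling = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, ceiling))
    raise RuntimeError("unreachable")
```

Passing `sleep` as a parameter keeps the function easy to test without real waiting; in production you would leave it at the default `time.sleep`.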

Advanced Use Cases

GPT-4o’s multimodal capabilities open up a wide range of advanced use cases across various fields. Its ability to process and generate text, audio, and visual content makes it a versatile tool that can enhance efficiency, creativity, and accessibility in numerous applications.

1. Healthcare

- Virtual Medical Assistants: GPT-4o can interact with patients through video calls, recognizing symptoms via visual cues and providing preliminary diagnoses or medical advice.

- Telemedicine Enhancements: Real-time transcription and translation capabilities can aid doctors during virtual consultations, ensuring clear and accurate communication with patients globally.

- Medical Training: The model can serve as a virtual tutor for medical students, using its vision and audio capabilities to simulate real-life scenarios and provide interactive learning experiences.

2. Education

- Interactive Learning Tools: GPT-4o can deliver personalized tutoring sessions, utilizing both text and visual aids to explain complex concepts.

- Language Learning: The model’s support for 50 languages and its ability to recognize and correct pronunciation can make it an effective tool for language learners.

- Educational Content Creation: Teachers can leverage GPT-4o to generate multimedia educational materials, combining text, images, and audio to enhance learning experiences.

3. Customer Service

- Enhanced Customer Support: GPT-4o can handle customer inquiries via text, audio, and video, providing a more engaging and human-like support experience.

- Multilingual Support: Its ability to understand and respond in 50 languages makes it ideal for global customer service operations.

- Emotion Recognition: By recognizing emotional cues in voice and facial expressions, GPT-4o can provide empathetic and tailored responses to customers.

4. Content Creation

- Multimedia Content Generation: Content creators can use GPT-4o to produce comprehensive multimedia content, such as articles with accompanying images and scripts for videos.

- Interactive Storytelling: The model can create interactive stories where users can engage with characters via text or voice, enhancing the storytelling experience.

- Social Media Management: GPT-4o can analyze trends, generate posts in multiple languages, and create engaging multimedia content for various platforms.

5. Business and Data Analysis

- Data Visualization: GPT-4o can interpret complex datasets and generate visual representations, making it easier for businesses to understand and act on data insights.

- Real-Time Reporting: The model can analyze business performance in real-time, providing executives with up-to-date reports via text, visuals, and audio summaries.

- Virtual Meetings: During business meetings, GPT-4o can transcribe conversations, translate between languages, and provide visual aids, improving communication and decision-making.

6. Accessibility

- Assistive Technologies: GPT-4o can aid individuals with disabilities by providing voice-activated commands, real-time transcription, and translation services, enhancing access to information and communication.

- Sign Language Interpretation: The model can potentially interpret sign language through its vision capabilities, offering real-time translation to text or speech for deaf and hard-of-hearing users.

- Enhanced Navigation: For visually impaired users, GPT-4o can provide detailed audio descriptions of visual surroundings, assisting with navigation and object recognition.

7. Creative Arts

- Digital Art Creation: Artists can collaborate with GPT-4o to create digital artworks, combining text prompts with visual elements generated by the model.

- Music Composition: The model’s ability to understand and generate audio could help with composing music and creating soundscapes, and it can assist with writing lyrics.

- Film and Video Production: Filmmakers can use GPT-4o for scriptwriting, storyboarding, and brainstorming visual effects, streamlining the creative process.

A Future with GPT-4o

OpenAI’s GPT-4o is a groundbreaking model that brings us closer to human-like AI interactions. Its advanced training, impressive performance, and versatile features make it a powerful tool for a wide range of applications. From enhancing customer service to supporting healthcare and education, GPT-4o has the potential to transform various industries and improve our daily lives.

By understanding how GPT-4o works and what it can do, we can better appreciate the advancements in AI technology and explore new ways to leverage these tools for our benefit. As we continue to integrate AI into our lives, models like GPT-4o will play a crucial role in shaping the future of human-AI interaction.

Let’s embrace this technology and explore its possibilities, knowing that we are one step closer to making AI as natural and intuitive as human communication.