OpenAI’s o1 model series marks a turning point in AI development, setting a new standard for how machines approach complex problems. Unlike its predecessors, which excelled at generating fluent language and basic reasoning, the o1 models were designed to think step by step, making them significantly better at intricate tasks such as coding and advanced mathematics.

What makes OpenAI’s o1 models stand out? It’s not just about size or speed; it’s about their ability to process information in a more human-like, logical sequence. This breakthrough promises to reshape what’s possible with AI, pushing the boundaries of accuracy and reliability. Curious about how these models are redefining the future of artificial intelligence? Read on to discover what makes them truly groundbreaking.

What is o1? Decoding the Hype Around the New OpenAI Model

The OpenAI o1 model series, which includes o1-preview and o1-mini, marks a significant evolution in the development of artificial intelligence. Unlike earlier models like GPT-4, which were optimized primarily for language generation and basic reasoning, o1 was designed to handle more complex tasks by simulating human-like step-by-step thinking.

This model series was developed to excel in areas where precision and logical reasoning are crucial, such as advanced mathematics, coding, and scientific analysis.

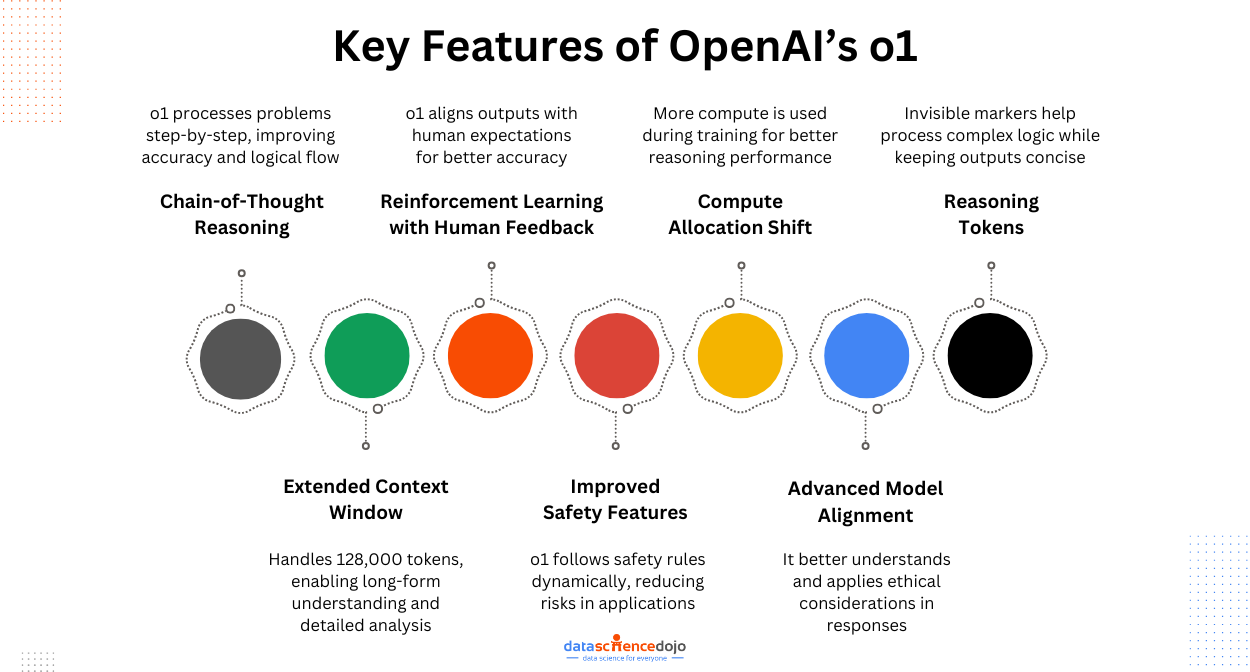

Key Features of OpenAI o1

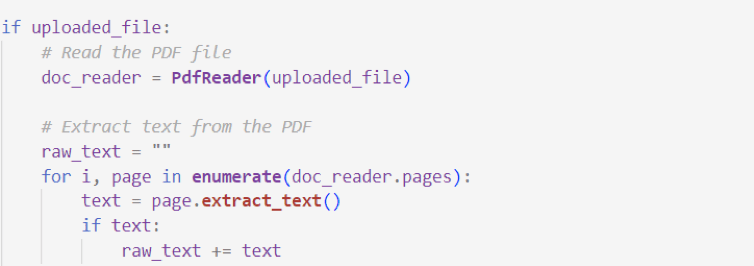

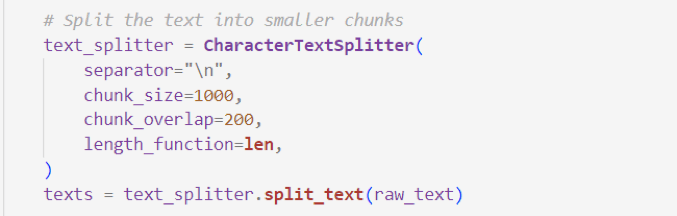

- Chain-of-Thought Reasoning: A key innovation in the o1 series is its use of chain-of-thought reasoning, which enables the model to think through problems sequentially. The model works through a series of intermediate steps internally before committing to a final answer, which improves accuracy.

For instance, when solving a complex math problem, the OpenAI o1 model doesn’t just generate an answer; it systematically works through the formulas and calculations, producing a more reliable result.

- Reinforcement Learning: The o1 models were trained with large-scale reinforcement learning that teaches them to reason productively through a chain of thought. Earlier models such as GPT-4 already used reinforcement learning from human feedback (RLHF) to align outputs with human expectations; what is new in o1 is that the model is rewarded for generating desired reasoning steps, not only for the final output.

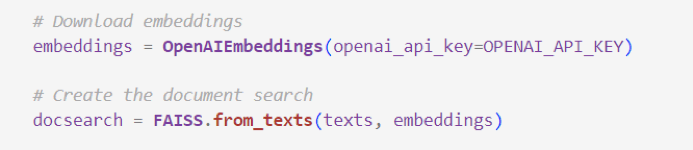

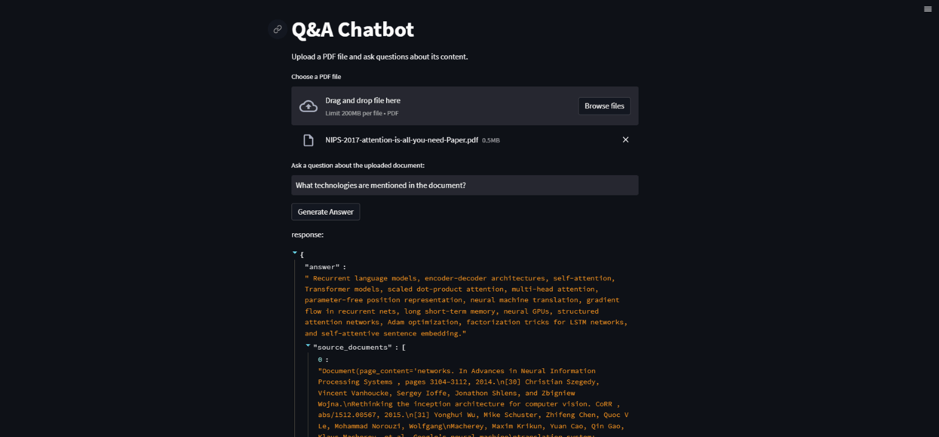

This approach not only enhances the model’s ability to perform intricate tasks but also improves its alignment with ethical and safety guidelines. It allows the model to reason about its own safety protocols and apply them in various contexts, thereby reducing the risk of harmful or biased outputs.

- A New Paradigm in Compute Allocation: The OpenAI o1 model stands out by shifting emphasis away from scaling pretraining alone and toward compute spent on reinforcement learning during training and on reasoning at inference time. This shift enhances the model’s complex reasoning abilities.

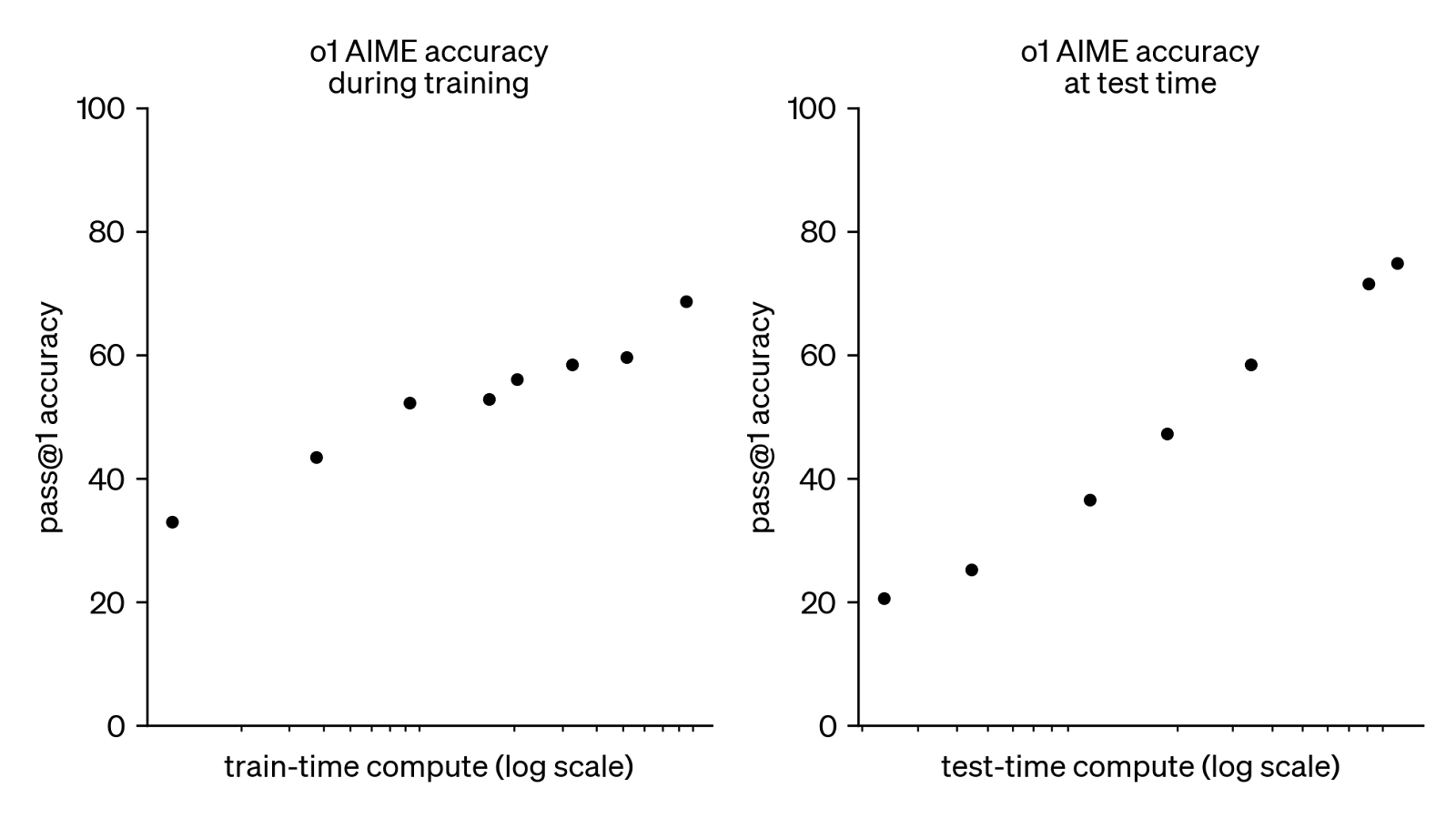

Source: OpenAI

The chart illustrates that increased compute, especially during inference, significantly boosts the model’s accuracy in solving AIME math problems. This suggests that more compute allows o1 to “think” more effectively, highlighting its compute-intensive nature and potential for further gains with additional resources.
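The general trend, more inference compute buying more accuracy, can be illustrated with a toy model. The sketch below is not how o1 works internally (o1 spends its extra compute on a longer chain of thought). It swaps in a simpler, well-known technique, majority voting over independent samples, and assumes a two-answer problem where each sample is right with a fixed probability. The function name and the 0.6 figure are illustrative assumptions.

```python
from math import comb


def majority_vote_accuracy(p_correct: float, n_samples: int) -> float:
    """Probability that a strict majority of n independent samples is correct.

    Toy assumptions: only two possible answers, each sample is independently
    correct with probability p_correct, and n_samples is odd so ties cannot
    occur.
    """
    if n_samples % 2 == 0:
        raise ValueError("n_samples must be odd to avoid ties")
    needed = n_samples // 2 + 1  # smallest count that forms a majority
    return sum(
        comb(n_samples, k) * p_correct**k * (1 - p_correct) ** (n_samples - k)
        for k in range(needed, n_samples + 1)
    )


# More samples means more inference compute per question.
for n in (1, 5, 25):
    print(n, round(majority_vote_accuracy(0.6, n), 3))
```

In this toy setting, accuracy rises with the number of samples whenever a single sample is right more often than not, which mirrors the direction of the chart without claiming to reproduce its numbers.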

- Reasoning Tokens: To manage complex reasoning internally, the o1 models use “reasoning tokens”. These tokens are processed invisibly to users but play a critical role in allowing the model to think through intricate problems. By using these internal markers, the model can maintain a clear and concise output while still performing sophisticated computations behind the scenes.

- Extended Context Window: The o1 models offer an expanded context window of up to 128,000 tokens. This capability enables the model to handle longer and more complex interactions, retaining much more information within a single session. It’s particularly useful for working with extensive documents or performing detailed code analysis.

- Enhanced Safety and Alignment: Safety and alignment have been significantly improved in the o1 series. The models are better at adhering to safety protocols by reasoning through these rules in real-time, reducing the risk of generating harmful or biased content. This makes them not only more powerful but also safer to use in sensitive applications.
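The reasoning-token and context-window features interact: per OpenAI’s documentation for its reasoning models, hidden reasoning tokens still occupy space in the context window even though the user never sees them. A minimal sketch of that bookkeeping follows, assuming the 128,000-token window quoted above. The `TokenBudget` class and the example token counts are hypothetical, made up for illustration.

```python
from dataclasses import dataclass

CONTEXT_WINDOW = 128_000  # tokens, the figure quoted in the feature list


@dataclass
class TokenBudget:
    """Hypothetical helper that tracks how one request uses the window."""

    prompt_tokens: int
    reasoning_tokens: int  # hidden from the user, but still use the window
    visible_output_tokens: int

    @property
    def total(self) -> int:
        return (
            self.prompt_tokens
            + self.reasoning_tokens
            + self.visible_output_tokens
        )

    def fits(self, window: int = CONTEXT_WINDOW) -> bool:
        return self.total <= window

    def headroom(self, window: int = CONTEXT_WINDOW) -> int:
        """Tokens left over; negative when the request overflows."""
        return window - self.total


# Made-up numbers: a long document plus a heavy reasoning pass.
budget = TokenBudget(
    prompt_tokens=100_000,
    reasoning_tokens=20_000,
    visible_output_tokens=2_000,
)
print(budget.fits(), budget.headroom())
```

The practical point is that a prompt which appears to fit comfortably can still overflow once the model’s internal reasoning is counted, so it is worth leaving headroom.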

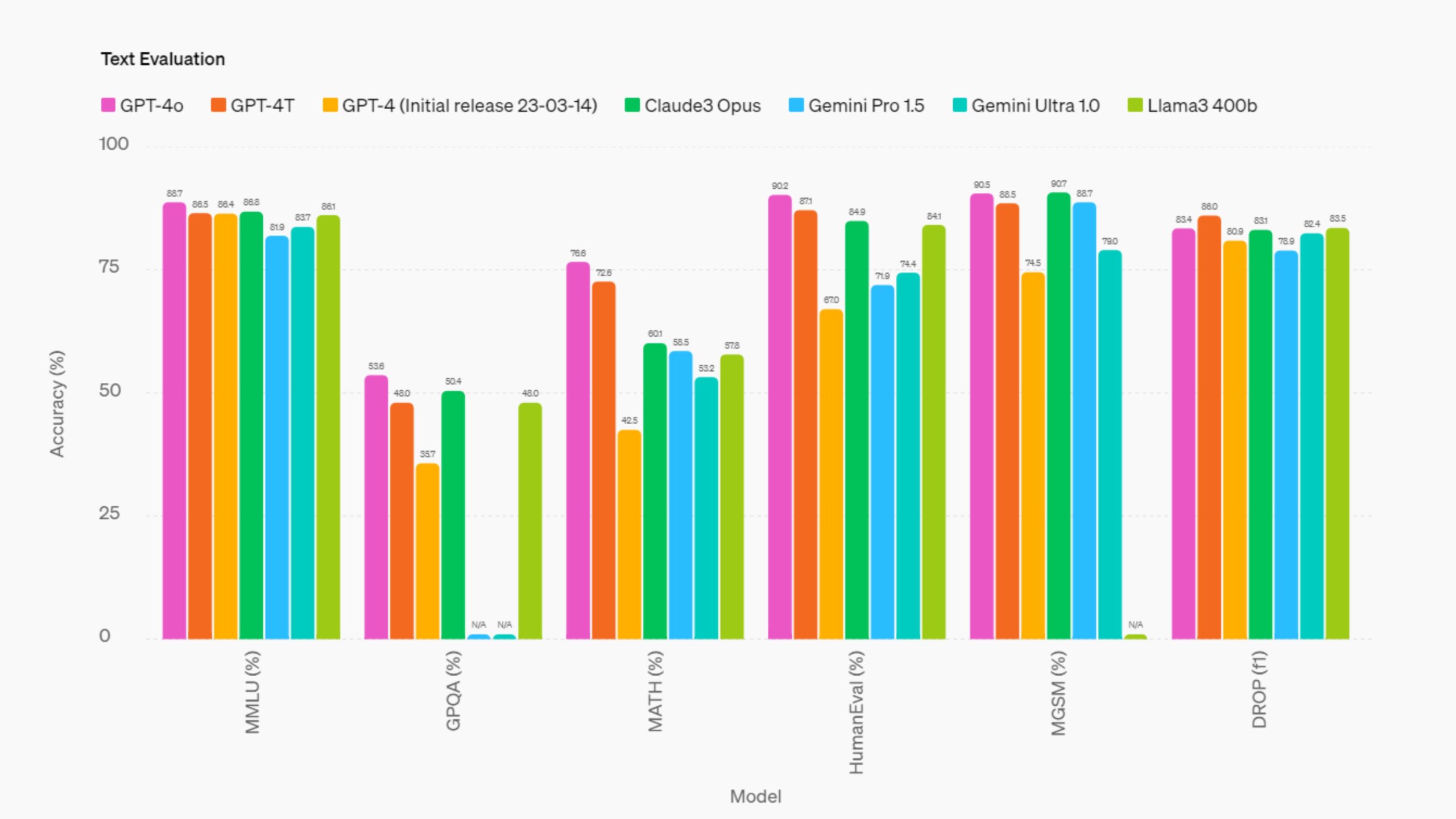

Performance of o1 vs. GPT-4o: Comparing the Latest OpenAI Models

The OpenAI o1 series showcases significant improvements in reasoning and problem-solving capabilities compared to previous models like GPT-4o.

Here’s a detailed look at how o1 outperforms its predecessors across various domains:

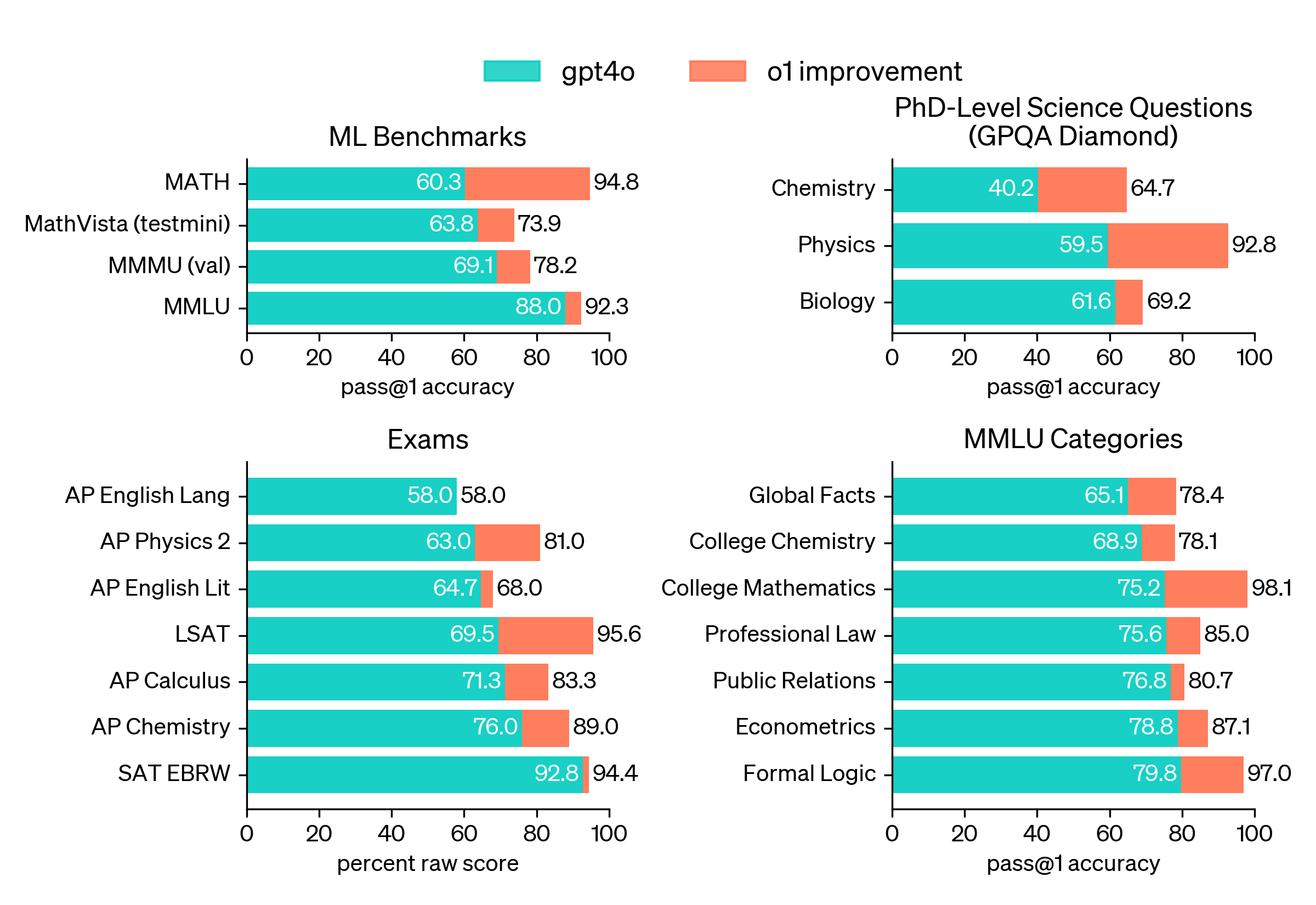

1. Advanced Reasoning and Mathematical Benchmarks:

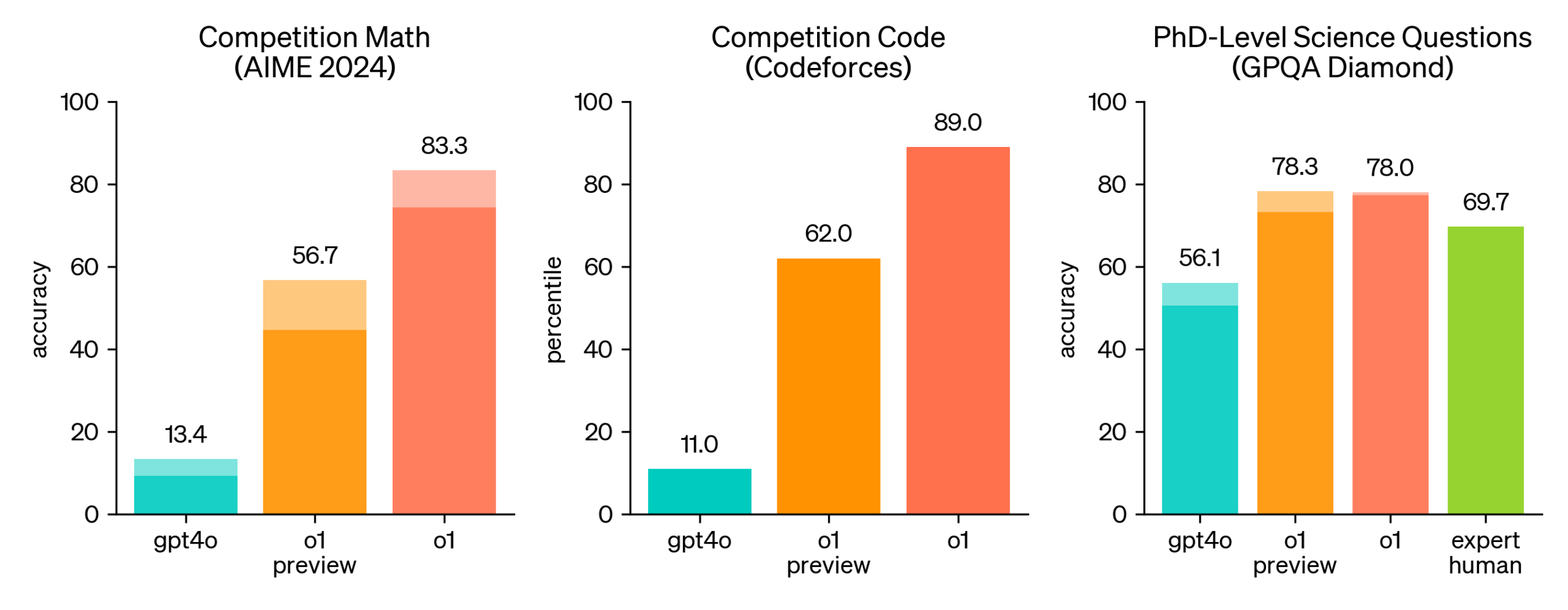

The o1 models excel in complex reasoning tasks, significantly outperforming GPT-4o in competitive math challenges. For example, on a qualifying exam for the International Mathematical Olympiad (IMO), the o1 model scored 83%, while GPT-4o solved only 13% of the problems.

This indicates a substantial improvement in handling high-level mathematical problems. Separately, OpenAI reports that the o1 models perform on par with PhD-level experts on challenging benchmark tasks in physics, chemistry, and biology.

2. Competitive Programming and Coding:

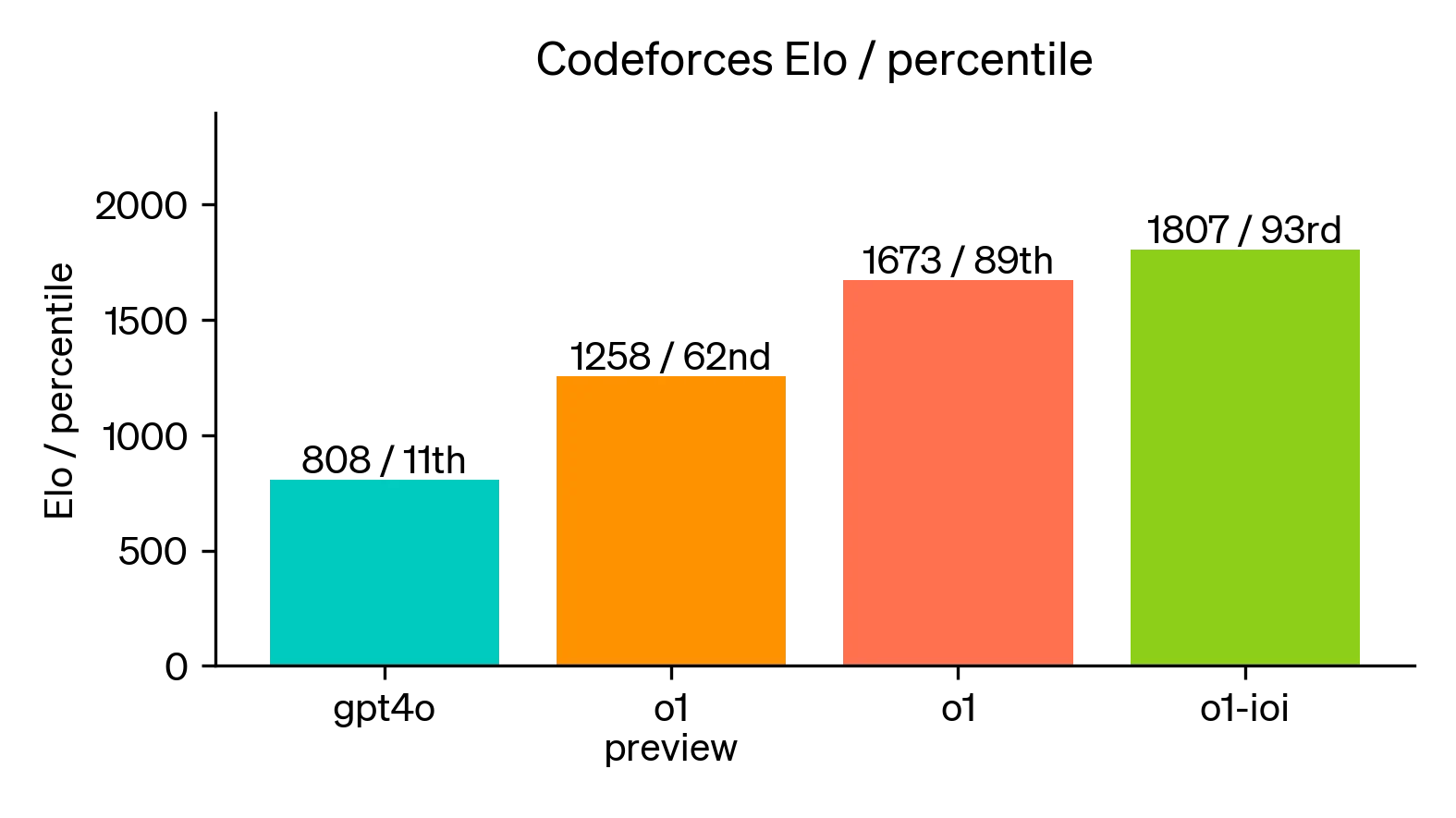

The OpenAI o1 models also show superior results in coding tasks. They rank in the 89th percentile on Codeforces competitive programming questions, indicating their ability to handle complex coding problems. This performance is a marked improvement over GPT-4o, which, while competent in coding, does not achieve the same level of proficiency in competitive programming scenarios.

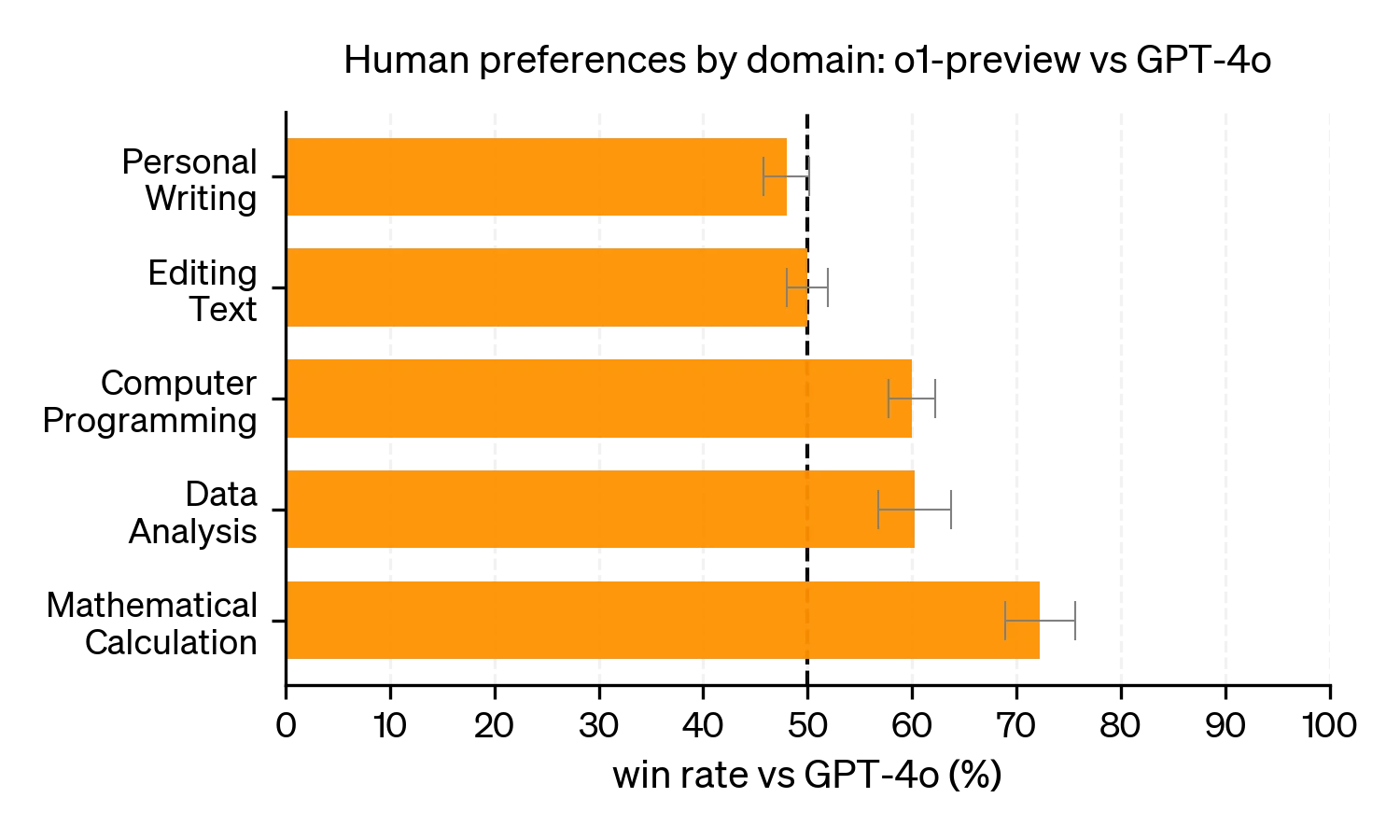

3. Human Evaluations and Safety:

In human preference tests, o1-preview consistently received higher ratings for tasks requiring deep reasoning and complex problem-solving. The integration of “chain of thought” reasoning into the model enhances its ability to manage multi-step reasoning tasks, making it a preferred choice for more complex applications.

Additionally, the o1 models have shown improved performance in handling potentially harmful prompts and adhering to safety protocols, outperforming GPT-4o in these areas.

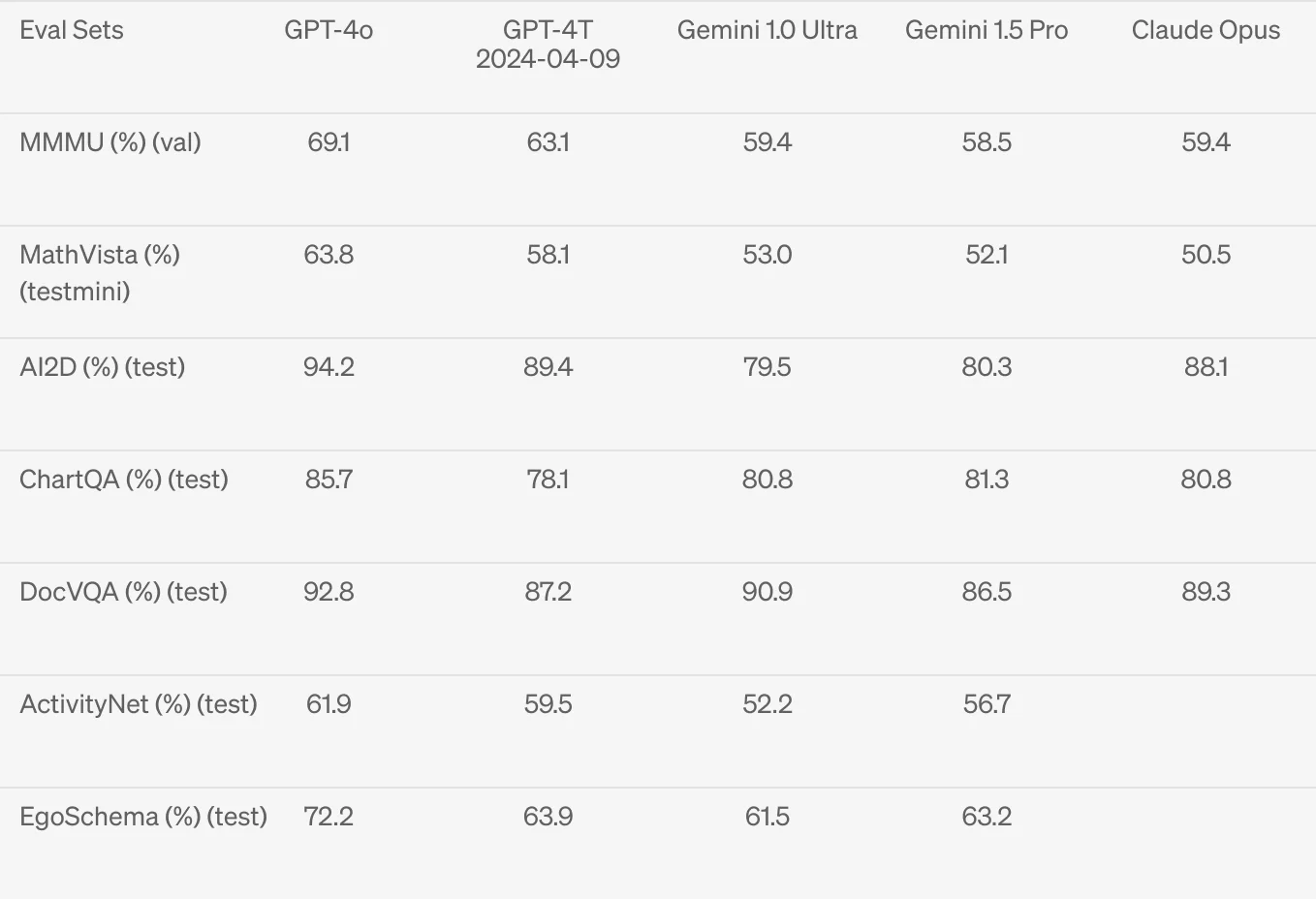

4. Standard ML Benchmarks:

On standard machine learning benchmarks, the OpenAI o1 models have shown broad improvements across the board. They have demonstrated robust performance in general-purpose tasks and outperformed GPT-4o in areas that require nuanced understanding and deep contextual analysis. This makes them suitable for a wide range of applications beyond just mathematical and coding tasks.

Use Cases and Applications of the OpenAI o1 Models

Models like OpenAI’s o1 series are designed to excel in a range of specialized and complex tasks, thanks to their advanced reasoning capabilities. Here are some of the primary use cases and applications:

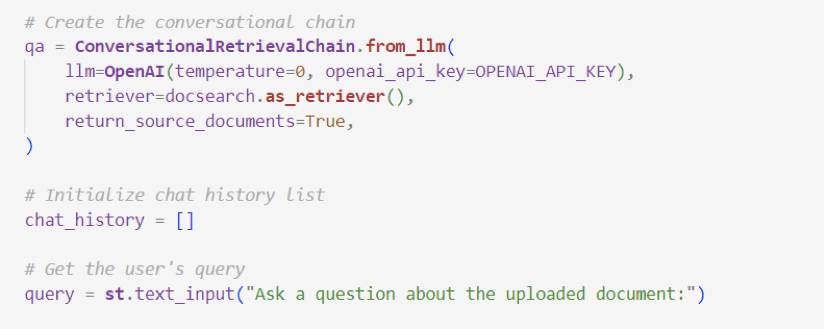

1. Advanced Coding and Software Development:

The OpenAI o1 models are particularly effective in complex code generation, debugging, and algorithm development. They have shown proficiency in coding competitions, such as those on Codeforces, by accurately generating and optimizing code. This makes them valuable for developers who need assistance with challenging programming tasks, multi-step workflows, and even generating entire software solutions.

2. Scientific Research and Analysis:

With their ability to handle complex calculations and logic, OpenAI o1 models are well-suited for scientific research. They can assist researchers in fields like chemistry, biology, and physics by solving intricate equations, analyzing data, and even suggesting experimental methodologies. They have outperformed human experts in scientific benchmarks, demonstrating their potential to contribute to advanced research problems.

3. Legal Document Analysis and Processing:

In legal and professional services, the OpenAI o1 models can be used to analyze lengthy contracts, case files, and legal documents. They can identify subtle differences, summarize key points, and even assist in drafting complex documents like SPAs and S-1 filings, making them a powerful tool for legal professionals dealing with extensive and intricate paperwork.

4. Mathematical Problem Solving:

The OpenAI o1 models have demonstrated exceptional performance in advanced mathematics, solving problems that require multi-step reasoning. This includes tasks like calculus, algebra, and combinatorics, where the model’s ability to work through problems logically is a major advantage. They have achieved high scores in competitions like the American Invitational Mathematics Examination (AIME), showing their strength in mathematical applications.

5. Education and Tutoring:

With their capacity for step-by-step reasoning, o1 models can serve as effective educational tools, providing detailed explanations and solving complex problems in real-time. They can be used in educational platforms to tutor students in STEM subjects, help them understand complex concepts, and guide them through difficult assignments or research topics.

6. Data Analysis and Business Intelligence:

The ability of o1 models to process large amounts of information and perform sophisticated reasoning makes them suitable for data analysis and business intelligence. They can analyze complex datasets, generate insights, and even suggest strategic decisions based on data trends, helping businesses make data-driven decisions more efficiently.

These applications highlight the versatility and advanced capabilities of the o1 models, making them valuable across a wide range of professional and academic domains.

Limitations of o1

Despite the impressive capabilities of OpenAI’s o1 models, they do come with certain limitations that users should be aware of:

1. High Computational Costs:

The advanced reasoning capabilities of the OpenAI o1 models, including their use of “reasoning tokens” and extended context windows, make them more computationally intensive than earlier models like GPT-4o. This results in higher processing costs and slower response times, which can be a drawback for applications that require real-time interactions or large-scale deployment.
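To see why hidden reasoning raises cost, note that OpenAI’s documentation states reasoning tokens are billed as output tokens. The sketch below works through that arithmetic. The function name and both per-million-token prices are placeholders chosen for illustration, not actual OpenAI pricing.

```python
def estimate_cost_usd(
    prompt_tokens: int,
    reasoning_tokens: int,
    visible_output_tokens: int,
    input_price_per_million: float,
    output_price_per_million: float,
) -> float:
    """Estimate the cost of one request.

    Reasoning tokens are invisible to the user but are added to the billed
    output, so they are priced at the output rate.
    """
    billed_output = reasoning_tokens + visible_output_tokens
    return (
        prompt_tokens * input_price_per_million
        + billed_output * output_price_per_million
    ) / 1_000_000


# Placeholder prices (USD per million tokens), NOT real pricing.
INPUT_PRICE = 10.0
OUTPUT_PRICE = 40.0

with_reasoning = estimate_cost_usd(2_000, 6_000, 500, INPUT_PRICE, OUTPUT_PRICE)
without_reasoning = estimate_cost_usd(2_000, 0, 500, INPUT_PRICE, OUTPUT_PRICE)
print(with_reasoning, without_reasoning)
```

With these made-up numbers the same visible answer costs several times more once reasoning tokens are counted, which is the effect to budget for in large-scale deployments.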

2. Limited Availability and Access:

Currently, the o1 models are only available to a select group of users, such as those with API access through specific tiers or ChatGPT Plus subscribers. This restricted access limits their usability and widespread adoption, especially for smaller developers or organizations that may not meet the requirements for access.

3. Lack of Transparency in Reasoning:

While the o1 models are designed to reason through complex problems using internal reasoning tokens, these intermediate steps are not visible to the user. This lack of transparency can make it challenging for users to understand how the model arrives at its conclusions, reducing trust and making it difficult to validate the model’s outputs, especially in critical applications like healthcare or legal analysis.

4. Limited Feature Support:

The current o1 models do not support some advanced features available in other models, such as function calling, structured outputs, streaming, and certain types of media integration. This limits their versatility for applications that rely on these features, and users may need to switch to other models like GPT-4o for specific use cases.
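One way to handle this gap in an application is to route each request to a model based on the features it needs. The sketch below is a hypothetical routing helper, not an OpenAI API: the `Feature` enum and `choose_model` function are invented for illustration, and the unsupported-feature set simply mirrors the list in the paragraph above as it stood for the preview models.

```python
from enum import Enum, auto


class Feature(Enum):
    FUNCTION_CALLING = auto()
    STRUCTURED_OUTPUTS = auto()
    STREAMING = auto()


# Features this article lists as unsupported by the o1 preview models.
O1_UNSUPPORTED = frozenset(
    {Feature.FUNCTION_CALLING, Feature.STRUCTURED_OUTPUTS, Feature.STREAMING}
)


def choose_model(needs_deep_reasoning: bool, required: set[Feature]) -> str:
    """Pick a model name for a request (hypothetical routing rule)."""
    # Any required feature that o1 lacks forces a fallback to GPT-4o.
    if required & O1_UNSUPPORTED:
        return "gpt-4o"
    # Otherwise use o1 only when the task benefits from step-by-step reasoning.
    return "o1-preview" if needs_deep_reasoning else "gpt-4o"


print(choose_model(True, set()))
print(choose_model(True, {Feature.STREAMING}))
```

Keeping the unsupported set in one place makes it easy to update as feature support for the o1 models changes.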

5. Higher Risk in Certain Applications:

Although the o1 models have improved safety mechanisms, they still pose a higher risk in certain domains, such as assisting with the creation of biological threats or producing other sensitive content. The complexity and capability of the models can make their behavior harder to predict and control in risky scenarios, despite the improved alignment efforts.

6. Incomplete Implementation:

As the o1 models are currently in a preview state, they lack several planned features, such as support for different media types and enhanced safety functionalities. This incomplete implementation means that users may experience limitations in functionality and performance until these features are fully developed and integrated into the models.

In summary, while the o1 models offer groundbreaking advancements in reasoning and problem-solving, they are accompanied by challenges such as high computational costs, limited availability, lack of transparency in reasoning, and some missing features that users need to consider based on their specific use cases.

Final Thoughts: A Step Forward with Limitations

The OpenAI o1 model series represents a remarkable advancement in AI, with its ability to perform complex reasoning and handle intricate tasks more effectively than its predecessors. Its unique focus on step-by-step problem-solving has opened new possibilities for applications in coding, scientific research, and beyond.

However, these capabilities come with trade-offs. High computational costs, limited access, and incomplete feature support mean that while o1 offers significant benefits, it’s not yet a one-size-fits-all solution.

As OpenAI continues to refine and expand the o1 series, addressing these limitations will be crucial for broader adoption and impact. For now, o1 remains a powerful tool for those who can leverage its advanced reasoning capabilities, while also navigating its current constraints.