Data science is one of the most in-demand fields today, offering exciting career opportunities across industries. But breaking into this field requires more than just enthusiasm—it demands the right skills, hands-on experience, and a strong learning foundation. That’s where data science bootcamps come in.

With countless programs promising to turn you into a data science pro, choosing the right one can feel overwhelming. The best bootcamp isn’t just about coding and algorithms—it should align with your career goals, learning style, and industry needs.

In this guide, we’ll walk you through the essential factors to consider: your career aspirations, the specific skills you need to acquire, program costs, and the bootcamp’s structure and location. By the end, you’ll have the insights needed to make an informed decision and kick-start your journey into the world of data science.

The Challenge: Choosing the Right Data Science Bootcamp

Once you’ve decided to pursue a data science bootcamp, the next step is finding the one that best aligns with your goals and needs. With so many options available, it’s important to look beyond just the course content and consider factors like career alignment, skill requirements, program format, and credibility.

In this section, we’ll break down the key aspects to evaluate—from assessing your current skill level to researching industry rankings and institutional reputation—so you can confidently choose a bootcamp that sets you up for success.

- Outline your career goals: What do you want to do with your data science training? Do you want to be a data scientist, a data analyst, or a data engineer? Once you know your career goals, you can start to look for a bootcamp that will help you achieve them.

- Research job requirements: What skills do you need to have to get a job in data science? Once you know the skills you need, you can start to look for a bootcamp that will teach you those skills.

- Assess your current skills: How much do you already know about data science? If you have some basic knowledge, you can look for a bootcamp that will build on your existing skills. If you don’t have any experience with data science, you may want to look for a bootcamp that is designed for beginners.

- Research programs: There are many different data science bootcamps available. Do some research to find a bootcamp that is reputable and that offers the skills you need.

- Consider structure and location: Do you prefer an in-person bootcamp located near you, or an online one you can attend from anywhere?

- Take note of relevant topics: What topics will be covered in the bootcamp? Make sure that the bootcamp covers the topics that are relevant to your career goals.

- Know the cost: How much does the bootcamp cost? Make sure that you can afford it.

- Research institution reputation: Choose a bootcamp from a reputable institution or university.

- Check rankings: Consult independent review sites such as SwitchUp, Course Report, and Career Karma, along with other reputable rankings.

By following these tips, you can choose the right data science bootcamp for you and start your journey to a career in data science.
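One way to turn the checklist above into a side-by-side comparison is a simple weighted score. The sketch below is illustrative only: the criteria names, the weights, the 1 to 5 rating scale, and the two placeholder programs are all assumptions, not data about any real bootcamp.

```python
# Hypothetical weights: how much each checklist criterion matters to you.
WEIGHTS = {
    "career_fit": 0.30,
    "skills_covered": 0.25,
    "format_fit": 0.15,
    "affordability": 0.20,
    "reputation": 0.10,
}


def weighted_score(ratings, weights=WEIGHTS):
    """Combine 1-5 ratings into one score, normalized by the total weight."""
    missing = set(weights) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    total_weight = sum(weights.values())
    return sum(weights[c] * ratings[c] for c in weights) / total_weight


# Placeholder programs with made-up ratings, for illustration only.
candidates = {
    "Bootcamp A": {"career_fit": 5, "skills_covered": 4, "format_fit": 3,
                   "affordability": 2, "reputation": 4},
    "Bootcamp B": {"career_fit": 3, "skills_covered": 4, "format_fit": 5,
                   "affordability": 5, "reputation": 3},
}

# Highest weighted score first.
ranked = sorted(candidates, key=lambda name: weighted_score(candidates[name]),
                reverse=True)

for name in ranked:
    print(f"{name}: {weighted_score(candidates[name]):.2f}")
```

Adjust the weights to reflect your own priorities. The score is only a way to organize your research, not a substitute for it.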

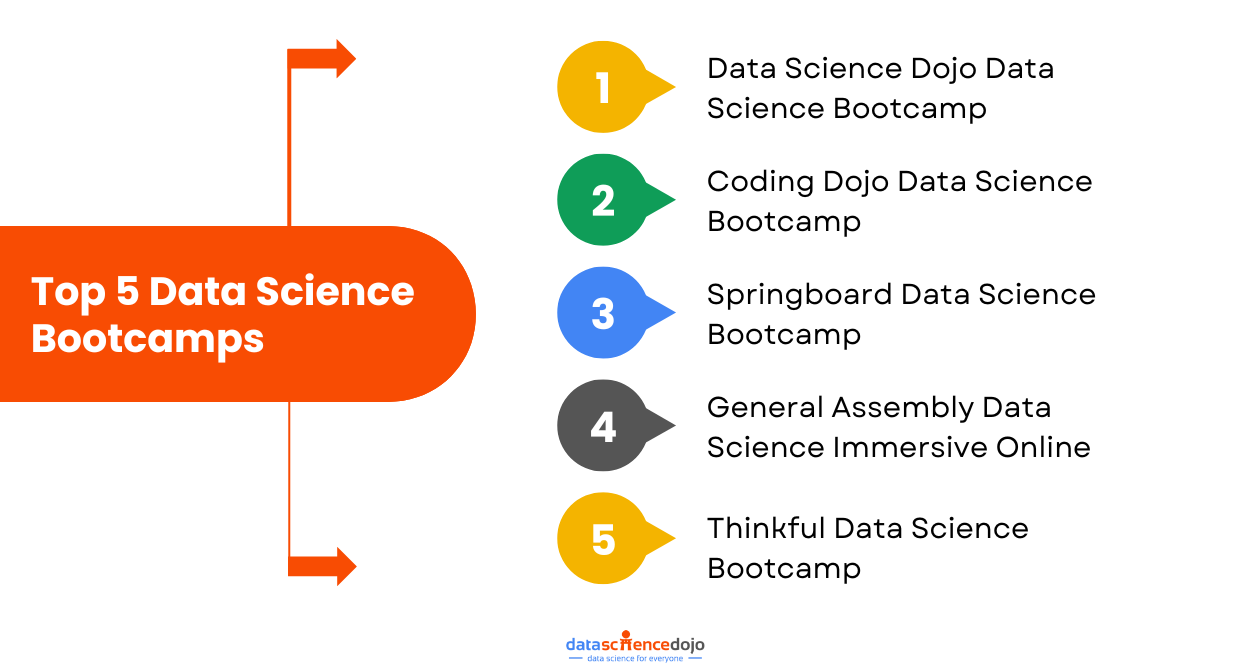

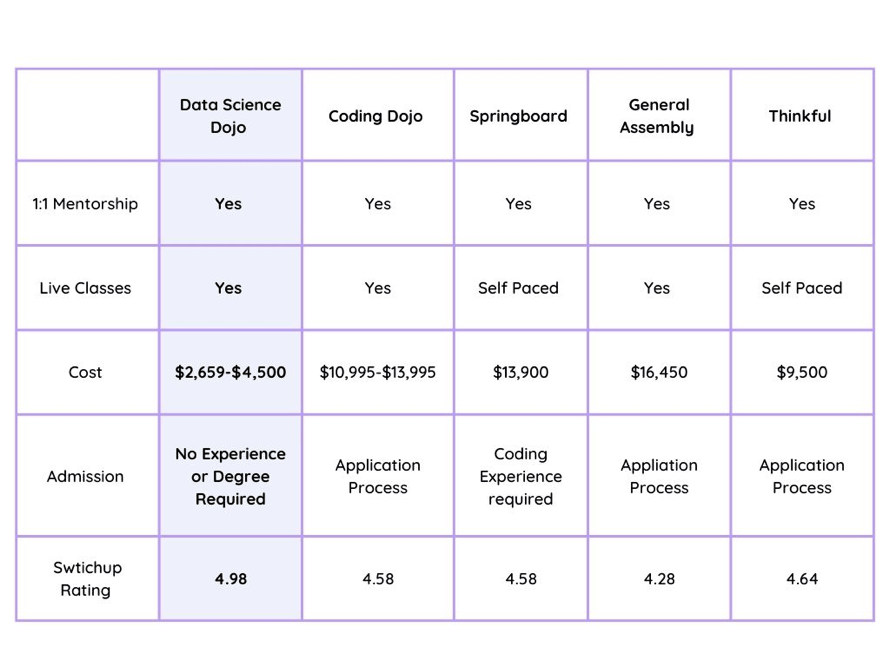

Best Picks – Top 5 Data Science Bootcamps to Look Out For

1. Data Science Dojo Data Science Bootcamp

Delivery Format: Online and In-person

Tuition: $2,659 to $4,500

Duration: 16 weeks

Data Science Dojo Bootcamp stands out as an exceptional option for individuals aspiring to become data scientists. It provides a supportive learning environment through personalized mentorship and live instructor-led sessions.

The program welcomes beginners, requiring no prior experience, and offers affordable tuition with convenient installment plans featuring 0% interest.

The bootcamp adopts a business-first approach, combining theoretical understanding with practical, hands-on projects. The team of instructors, possessing extensive industry experience, offers individualized assistance during dedicated office hours, ensuring a rewarding learning journey.

2. Coding Dojo Data Science Bootcamp Online Part-Time

Delivery Format: Online

Tuition: $11,745 to $13,745

Duration: 16 to 20 weeks

Next on the list is Coding Dojo, which offers courses in data science and machine learning. It is open to students of any background and does not require a four-year degree or prior programming experience.

Students can choose to focus on either data science and machine learning in Python or data science and visualization. The bootcamp offers flexible learning options, real-world projects, and a strong alumni network. However, it does not guarantee a job, and some prior knowledge of programming is helpful.

3. Springboard Data Science Bootcamp

Delivery Format: Online

Tuition: $14,950

Duration: 12 months

Springboard’s Data Science Bootcamp is an online program that teaches students the skills they need to become data scientists. The program is designed to be flexible and accessible, so students can learn at their own pace and from anywhere in the world.

Springboard also offers a job guarantee, which means that if you don’t land a job in data science within six months of completing the program, you’ll get your money back.

4. General Assembly Data Science Immersive Online

Delivery Format: Online, in real-time

Tuition: $16,450

Duration: Around 3 months

General Assembly’s online data science bootcamp offers an intensive learning experience. Attendees connect with instructors and peers in real time through interactive classrooms. The course includes topics like Python, statistical modeling, decision trees, and random forests.

However, this intermediate-level course requires prerequisites, including a strong mathematical background and familiarity with Python.

5. Thinkful Data Science Bootcamp

Delivery Format: Online

Tuition: $16,950

Duration: 6 months

Thinkful offers a data science bootcamp known for its mentorship program. The bootcamp is available in both part-time and full-time formats. Part-time students can complete the program in 6 months by committing 20-30 hours per week.

Full-time students can complete the program in 5 months by committing 50 hours per week. Payment plans, tuition refunds, and scholarships are available for all students. The program has no prerequisites, so both fresh graduates and experienced professionals can take it.
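Because the five programs differ in both price and length, it can help to put them on a common footing such as tuition per week. The sketch below uses only the tuition and duration figures listed above. The conversion of months to weeks (52 / 12 weeks per month) and the function names are assumptions made for this comparison.

```python
# Assumption: one month is treated as 52 / 12 weeks for comparison purposes.
WEEKS_PER_MONTH = 52 / 12

# (name, low tuition USD, high tuition USD, shortest weeks, longest weeks),
# copied from the listings above.
BOOTCAMPS = [
    ("Data Science Dojo", 2659, 4500, 16, 16),
    ("Coding Dojo", 11745, 13745, 16, 20),
    ("Springboard", 14950, 14950, 12 * WEEKS_PER_MONTH, 12 * WEEKS_PER_MONTH),
    ("General Assembly", 16450, 16450, 3 * WEEKS_PER_MONTH, 3 * WEEKS_PER_MONTH),
    ("Thinkful", 16950, 16950, 6 * WEEKS_PER_MONTH, 6 * WEEKS_PER_MONTH),
]


def weekly_cost_range(low, high, min_weeks, max_weeks):
    """Return (cheapest, priciest) tuition per week for one listing."""
    return low / max_weeks, high / min_weeks


def rank_by_weekly_cost(bootcamps):
    """Sort listings by their cheapest possible weekly cost, lowest first."""
    rows = [
        (name, *weekly_cost_range(low, high, w_min, w_max))
        for name, low, high, w_min, w_max in bootcamps
    ]
    return sorted(rows, key=lambda row: row[1])


if __name__ == "__main__":
    for name, cheapest, priciest in rank_by_weekly_cost(BOOTCAMPS):
        print(f"{name}: ${cheapest:,.0f} to ${priciest:,.0f} per week")
```

Weekly cost is only one lens. A three-month immersive program packs far more hours into each week than a twelve-month self-paced one, so weigh this figure alongside the time commitment each format demands.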

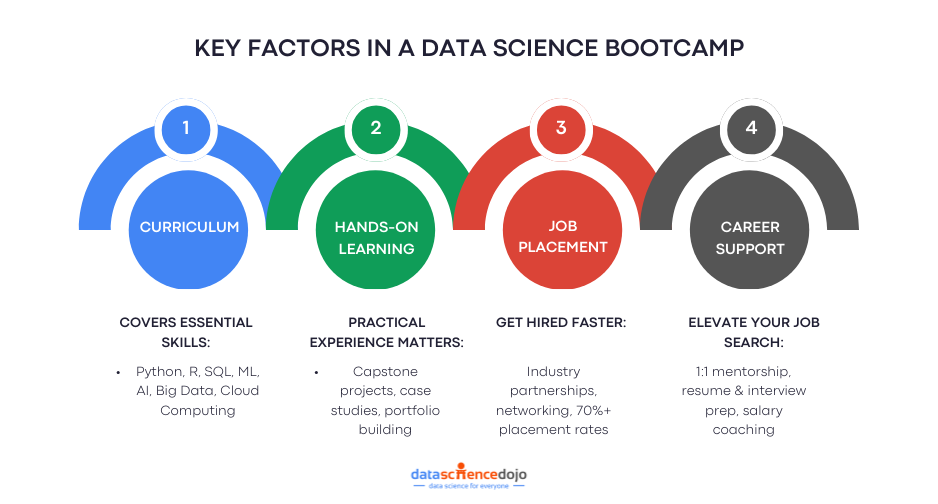

What to Look for in a Data Science Bootcamp

Not all data science bootcamps are created equal. While some offer a well-rounded curriculum with hands-on projects and strong career support, others may fall short in key areas. Choosing the right bootcamp means looking beyond marketing promises and carefully evaluating the features that truly impact your learning experience and job prospects.

In this section, we’ll break down the essential factors to consider—curriculum depth, instructor expertise, real-world applications, job placement support, and more—so you can make an informed decision and invest in a program that genuinely prepares you for a successful data science career.

Curriculum: The Core of Your Learning Journey

The curriculum is the backbone of any bootcamp, as it defines the skills and tools you will learn. A well-rounded curriculum should cover the most in-demand and industry-relevant topics, including:

- Programming Languages: Proficiency in Python, R, and SQL for handling data and automating workflows.

- Data Analysis & Visualization: Mastering tools like Pandas, Matplotlib, and visualization platforms like Tableau to interpret and present data effectively.

- Machine Learning & Artificial Intelligence: Gaining expertise in Scikit-learn, TensorFlow, and Natural Language Processing (NLP) for predictive analytics.

- Big Data & Cloud Computing: Understanding platforms like Spark, AWS, and Google Cloud to manage and process large-scale datasets efficiently.

A strong curriculum ensures that you are equipped with the technical skills required to tackle real-world data challenges.

Hands-On Learning Through Real-World Projects

Practical experience is crucial for turning theory into actionable skills. Look for bootcamps that emphasize hands-on learning by offering:

- Capstone Projects: Work on projects with real-world datasets to simulate work environments and challenges.

- Competitions and Case Studies: Opportunities like Kaggle competitions or case studies allow you to solve real problems and benchmark your skills.

- Portfolio Development: The chance to build a professional portfolio showcasing your expertise in various projects to potential employers.

By engaging in hands-on projects, you can demonstrate your ability to apply what you’ve learned to practical scenarios.

Industry Connections and Job Placement Opportunities

A bootcamp that bridges the gap between learning and employment is invaluable. Seek programs that provide:

- Corporate Partnerships: Collaborations with leading companies such as Google, Amazon, and startups to facilitate hiring opportunities.

- Networking Events: Access to industry professionals and alumni networks to build meaningful connections.

- High Job Placement Rates: Bootcamps with proven track records of placing graduates in data science roles, with placement rates above 70% being a strong indicator of success.

These elements ensure that your learning is directly tied to job opportunities, boosting your chances of employment.

Mentorship and Career Support

Comprehensive mentorship and career guidance can accelerate your professional growth and prepare you for the job market. Look for programs offering:

- Mentorship: Personalized guidance from experienced industry professionals to help you navigate challenges.

- Career Services: Support in resume building, LinkedIn profile optimization, and mock interview preparation.

- Salary Negotiation Coaching: Assistance in understanding your worth and negotiating offers effectively.

Such tailored support ensures you are well-prepared for job applications and interviews.

Are Data Science Bootcamps the Future?

Data science bootcamps have emerged as a powerful alternative to traditional degrees, offering a fast, practical, and cost-effective way to break into the field. With a strong focus on industry-relevant skills, hands-on projects, and career support, these programs help learners transition into high-paying roles more efficiently than conventional education pathways.

As the demand for data scientists continues to rise, bootcamps are playing a pivotal role in shaping the future of data education. For career switchers and aspiring professionals alike, the right bootcamp can provide not just the technical skills, but also the confidence, experience, and professional network needed to thrive in this dynamic industry.