Generative AI is being called the biggest thing since the Industrial Revolution. Every day, a flood of new applications emerges, promising to revolutionize everything from mundane tasks to complex processes.

But how many actually do? How many of these tools become indispensable, and what sets them apart? It’s one thing to whip up a prototype of a large language model (LLM) application; it’s quite another to build a robust, scalable solution that addresses real-world needs and stands the test of time.

This is why the role of project managers is more important than ever, especially in AI project management.

Throughout a generative AI project, project managers face many challenges and make key decisions, both technical (such as ensuring data integrity and model accuracy) and non-technical (such as navigating ethical considerations and managing inference costs).

In this blog, we aim to provide you with a comprehensive guide to navigating these complexities and building LLM applications that matter.

The Generative AI Project Lifecycle

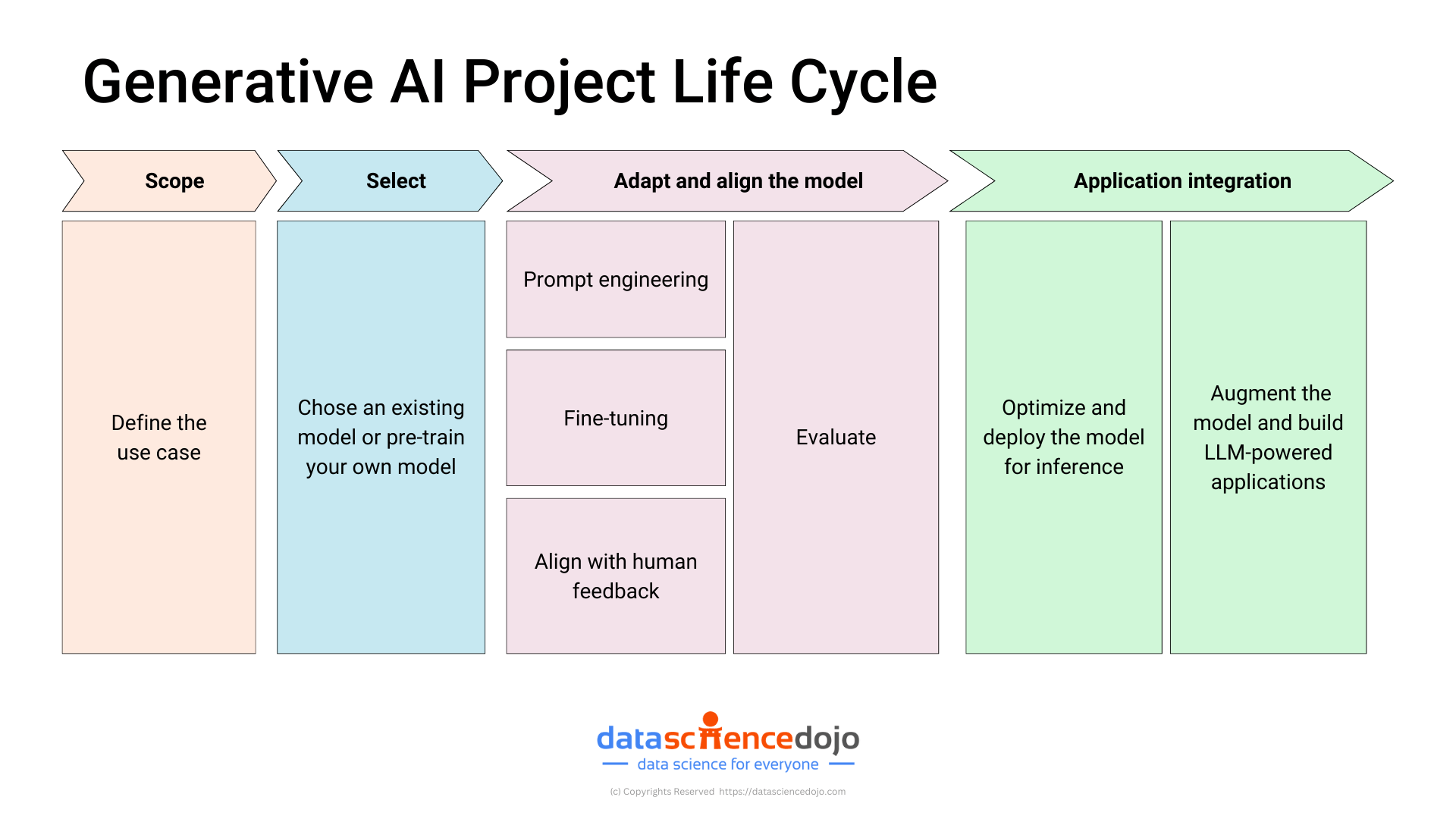

The generative AI lifecycle breaks down the steps required to build generative AI applications into distinct phases.

Each phase focuses on critical aspects of project management. By mastering this lifecycle, project managers can effectively steer their generative AI projects to success, ensuring they meet business goals and innovate responsibly in the AI space. Let’s dive deeper into each stage of the process.

Phase 1: Scope

Defining the Use Case: Importance of Clearly Identifying Project Goals and User Needs

The first and perhaps most crucial step in managing a generative AI project is defining the use case. This stage sets the direction for the entire project, acting as the foundation upon which all subsequent decisions are built.

A well-defined use case clarifies what the project aims to achieve and identifies the specific needs of the users. It answers critical questions such as: What problem is the AI solution meant to solve? Who are the end users? What are their expectations?

Understanding these elements is essential because it ensures that the project is driven by real-world needs rather than technological capabilities alone. For instance, a generative AI project aimed at enhancing customer service might focus on creating a chatbot that can handle complex queries with a human-like understanding.

By clearly identifying these objectives, project managers can tailor the AI’s development to meet precise user expectations, thereby increasing the project’s likelihood of success and user acceptance.

Strategies for scope definition and stakeholder alignment

Defining the scope of a generative AI project involves detailed planning and coordination with all stakeholders. This includes technical teams, business units, potential users, and regulatory bodies. Here are key strategies to ensure effective scope definition and stakeholder alignment:

- Stakeholder workshops: Conduct workshops or meetings with all relevant stakeholders to gather input on project expectations, concerns, and constraints. This collaborative approach helps in understanding different perspectives and defining a scope that accommodates diverse needs.

- Feasibility studies: Carry out feasibility studies to assess the practical aspects of the project. This includes technological requirements, data availability, legal and ethical considerations, and budget constraints. Feasibility studies help in identifying potential challenges early in the project lifecycle, allowing teams to devise realistic plans or adjust the scope accordingly.

- Scope documentation: Create detailed documentation of the project scope that includes defined goals, deliverables, timelines, and success criteria. This document should be accessible to all stakeholders and serve as a point of reference throughout the project.

- Iterative feedback: Implement an iterative feedback mechanism to regularly check in with stakeholders. This process ensures that the project remains aligned with the evolving business goals and user needs, and can adapt to changes effectively.

- Risk assessment: Include a thorough risk assessment in the scope definition to identify potential risks associated with the project. Addressing these risks early on helps in developing strategies to mitigate them, ensuring the project’s smooth progression.

This phase is not just about planning but about building consensus and ensuring that every stakeholder has a clear understanding of the project’s goals and the path to achieving them. This alignment is crucial for the seamless execution and success of any generative AI initiative.

Phase 2: Select

Model selection: Criteria for choosing between an existing model or training a new one from scratch

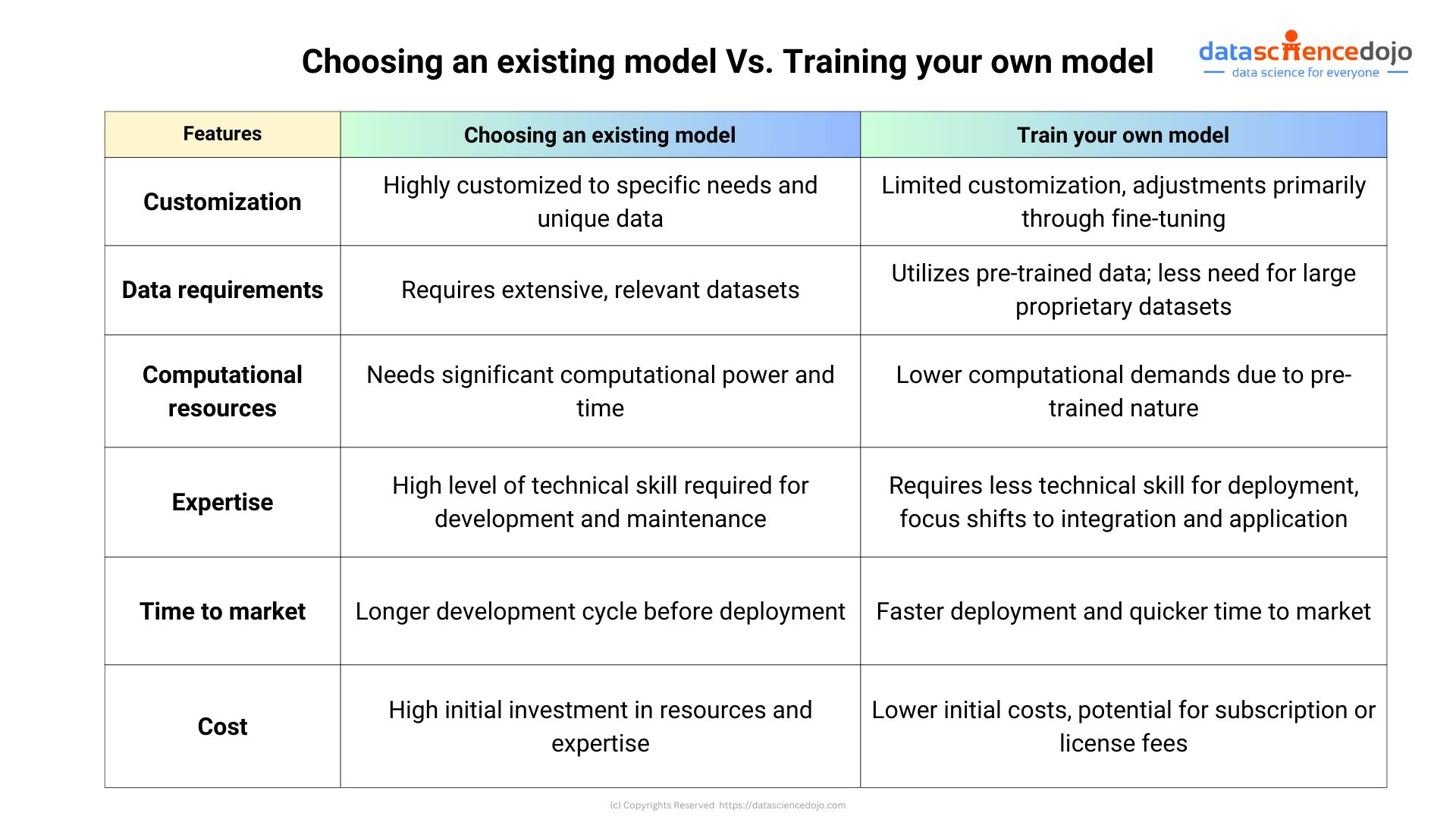

Once the project scope is clearly defined, the next critical phase is selecting the appropriate generative AI model. This decision can significantly impact the project’s timeline, cost, and ultimate success. Here are key criteria to consider when deciding whether to adopt an existing model or develop a new one from scratch:

- Project Specificity and Complexity: If the project requires highly specialized knowledge or needs to handle very complex tasks specific to a certain industry (like legal or medical), a custom-built model might be necessary. This is particularly true if existing models do not offer the level of specificity or compliance required.

- Resource Availability: Evaluate the resources available, including data, computational power, and expertise. Training new models from scratch requires substantial datasets and significant computational resources, which can be expensive and time-consuming. If resources are limited, leveraging pre-trained models that require less intensive training could be more feasible.

- Time to Market: Consider the project timeline. Using pre-trained models can significantly accelerate development phases, allowing for quicker deployment and faster time to market. Custom models, while potentially more tailored to specific needs, take longer to develop and optimize.

- Performance and Scalability: Assess the performance benchmarks of existing models against the project’s requirements. Pre-trained models often benefit from extensive training on diverse datasets, offering robustness and scalability that might be challenging to achieve with newly developed models in a reasonable timeframe.

- Cost-Effectiveness: Analyze the cost implications of each option. While pre-trained models might involve licensing fees, they generally require less financial outlay than the cost of data collection, training, and validation needed to develop a model from scratch.
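One way to make this comparison concrete is a simple weighted decision matrix. The sketch below is illustrative only: the criterion names mirror the list above, but the weights and the 1 to 5 scores are hypothetical placeholders that a team would replace with values agreed with its stakeholders.

```python
# Hypothetical weights (summing to 1.0) for the five criteria discussed above.
CRITERIA_WEIGHTS = {
    "specificity": 0.25,
    "resources": 0.20,
    "time_to_market": 0.20,
    "performance": 0.20,
    "cost": 0.15,
}


def weighted_score(scores, weights=CRITERIA_WEIGHTS):
    """Combine per-criterion scores (1 = poor fit, 5 = strong fit) into one number."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(weights[name] * scores[name] for name in weights)


# Hypothetical scores for the two options; higher means a better fit for the project.
options = {
    "pretrained": {
        "specificity": 3, "resources": 5, "time_to_market": 5,
        "performance": 4, "cost": 4,
    },
    "from_scratch": {
        "specificity": 5, "resources": 1, "time_to_market": 1,
        "performance": 3, "cost": 1,
    },
}

# Rank the options from best to worst weighted score.
ranking = sorted(options, key=lambda name: weighted_score(options[name]), reverse=True)

for name in ranking:
    print(f"{name}: {weighted_score(options[name]):.2f}")
```

The value of such a matrix lies less in the final number than in forcing stakeholders to state explicitly how much each criterion matters for this particular project.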

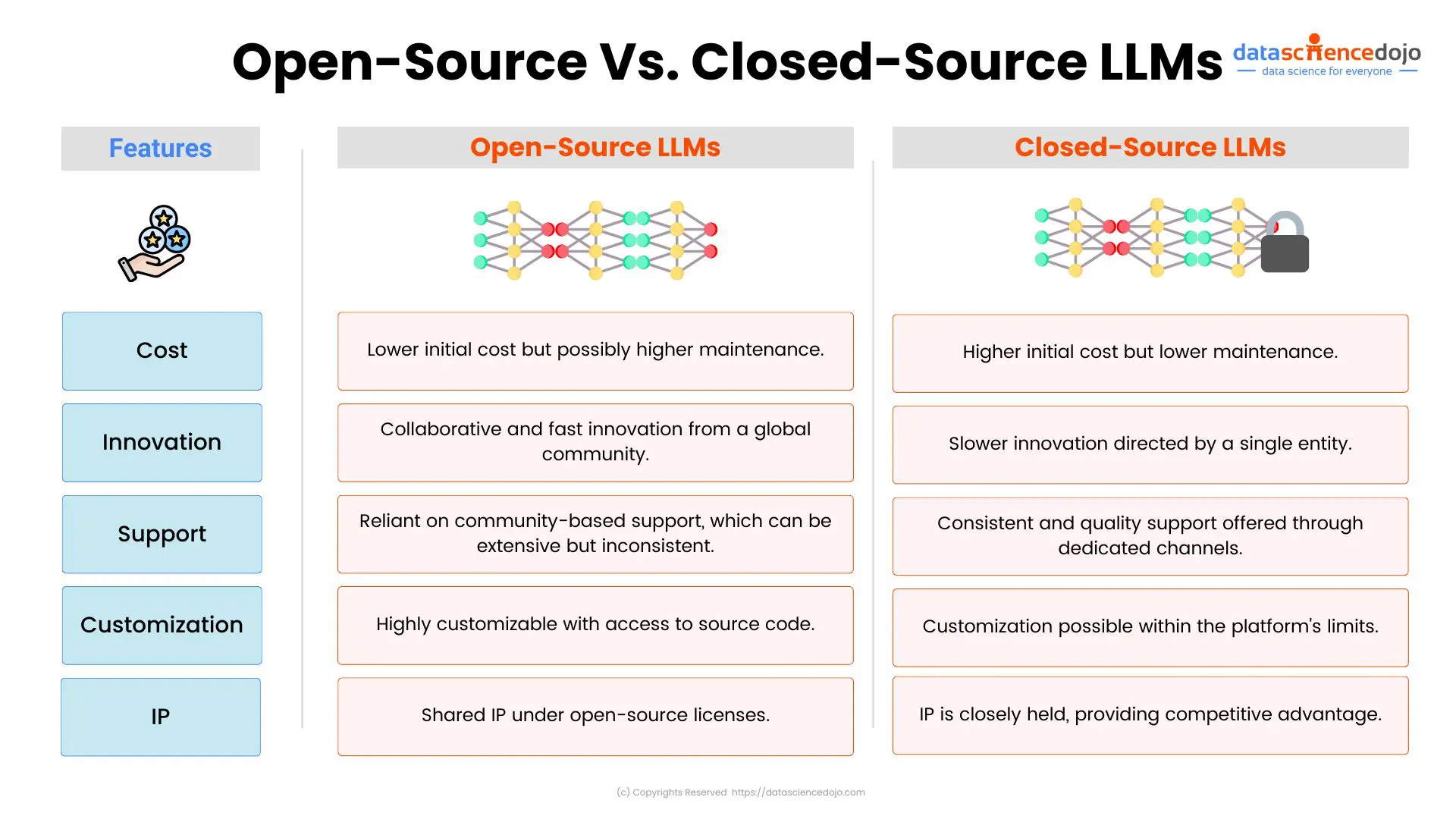

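Finally, if you’ve chosen to proceed with an existing model, you will also have to decide between an open-source and a closed-source model. Broadly, open-source models publish their weights so that teams can host and customize them themselves, while closed-source models are typically accessed through a vendor’s API.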

Phase 3: Adapt and align model

For project managers, this phase involves overseeing a series of iterative adjustments that enhance the model’s functionality, effectiveness, and suitability for the intended application.

How to go about adapting and aligning a model

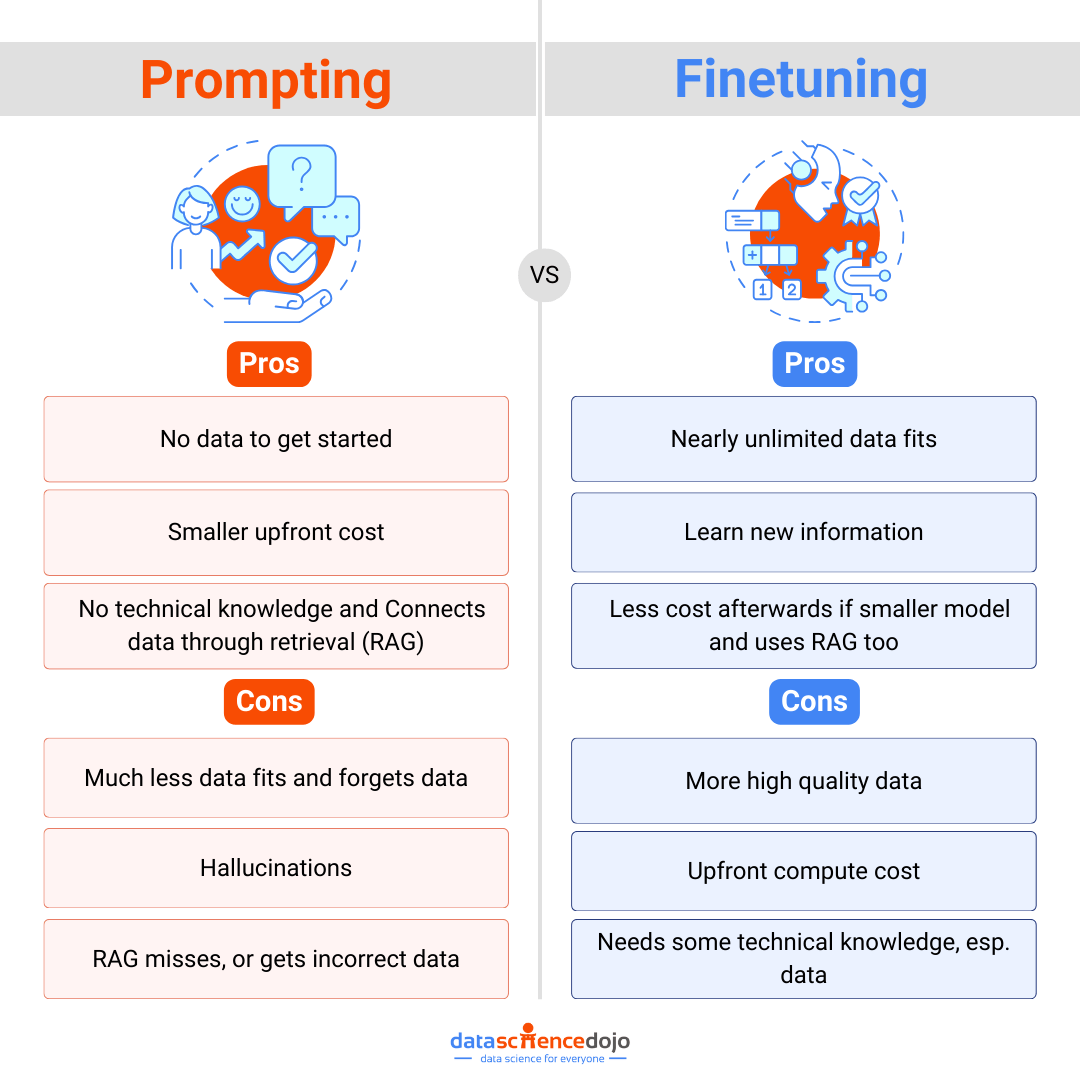

Effective adaptation and alignment of a model generally involve three key strategies: prompt engineering, fine-tuning, and human feedback alignment. Each strategy serves to incrementally improve the model’s performance:

Prompt Engineering

Techniques for Designing Effective Prompts: This involves crafting prompts that guide the AI to produce the desired outputs. Successful prompt engineering requires:

- Contextual relevance: Ensuring prompts are relevant to the task.

- Clarity and specificity: Making prompts clear and specific to reduce ambiguity.

- Experimentation: Trying various prompts to see how changes affect outputs.

Prompt engineering makes efficient use of the model’s existing capabilities, improving output quality without any additional training.
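As a rough illustration of clarity, specificity, and experimentation, the sketch below keeps two prompt variants side by side so they can be compared on the same input. The customer-support scenario, the variant names, and the `build_prompt` helper are all assumptions made for this example, and no particular model API is implied.

```python
from string import Template

# Two hypothetical prompt variants for the same task: summarizing a support ticket.
PROMPT_VARIANTS = {
    # Vague: gives the model no role, length limit, or output structure.
    "vague": Template("Summarize this: $ticket"),
    # Specific: states the role, the length limit, and the expected structure.
    "specific": Template(
        "You are a customer-support assistant.\n"
        "Summarize the ticket below in at most $max_sentences sentences, "
        "then state the customer's main request on a separate line.\n\n"
        "Ticket:\n$ticket"
    ),
}


def build_prompt(variant, ticket, max_sentences=2):
    """Fill the chosen template; placeholders a template does not use are ignored."""
    return PROMPT_VARIANTS[variant].substitute(
        ticket=ticket, max_sentences=max_sentences
    )


ticket_text = "My order arrived two weeks late and the box was damaged."

# Print each variant so the outputs they produce can later be compared.
for name in PROMPT_VARIANTS:
    print(f"--- {name} ---")
    print(build_prompt(name, ticket_text))
```

Keeping variants in one place like this makes experimentation repeatable: each variant can be sent to the model with the same inputs and the outputs reviewed side by side.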

Fine-Tuning

Optimizing Model Parameters: This process adjusts the model’s parameters to better fit project-specific requirements, using methods like:

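- Low-rank Adaptation (LoRA): Freezes the original weights and trains small low-rank matrices added alongside them, so only a small fraction of parameters is updated and computational demands stay low.

- Prompt Tuning: Prepends trainable embeddings (soft prompts) to the model inputs and optimizes only those during training, leaving the model’s own weights unchanged.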

These techniques are particularly valuable for projects with limited computing resources, allowing for enhancements without substantial retraining.
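To see why LoRA is cheap, consider a single weight matrix with `d_out × d_in` entries. Rather than updating it directly, LoRA trains two small matrices of rank `r` whose product has the same shape. The sketch below simply counts the trainable parameters in each case; the layer size and rank are illustrative assumptions, not values taken from any particular model.

```python
def full_finetune_params(d_out: int, d_in: int) -> int:
    """Trainable parameters when the whole weight matrix is updated."""
    return d_out * d_in


def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters for a LoRA update B @ A.

    B has shape (d_out, rank) and A has shape (rank, d_in);
    the original weight matrix stays frozen.
    """
    return d_out * rank + rank * d_in


# Assumed sizes for illustration only.
d_out, d_in, rank = 4096, 4096, 8

full = full_finetune_params(d_out, d_in)
lora = lora_params(d_out, d_in, rank)

print(f"full fine-tuning: {full:,} trainable parameters")
print(f"LoRA (rank {rank}): {lora:,} trainable parameters")
print(f"LoRA share of full: {lora / full:.2%}")
```

With these assumed sizes, the LoRA update trains well under 1% of the parameters in the full matrix, which is where the savings in memory and compute come from.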

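Not sure whether to choose prompt engineering or fine-tuning? Prompt engineering is usually the place to start, because it changes only the input and requires no training. Fine-tuning is worth the extra effort when prompts alone cannot deliver the quality the project needs.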

Human Feedback Alignment

Integrating User Feedback: Incorporating real-world feedback helps refine the model’s outputs, ensuring they remain relevant and accurate. This involves:

- Feedback Loops: Regularly updating the model based on user feedback to maintain and enhance relevance and accuracy.

- Ethical Considerations: Adjusting outputs to align with ethical standards and contextual appropriateness.

Evaluate

Rigorous evaluation is crucial after implementing these strategies. This involves:

- Using metrics: Employing performance metrics like accuracy and precision, and domain-specific benchmarks for quantitative assessment.

- User testing: Conducting tests to qualitatively assess how well the model meets user needs.

- Iterative improvement: Using evaluation insights for continuous refinement.
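For the quantitative side, accuracy and precision can be computed directly from a labeled test set. The sketch below assumes a binary task (for example, whether the model correctly flagged a support ticket for escalation); the labels and predictions are made-up values for illustration.

```python
def accuracy(y_true, y_pred):
    """Share of predictions that match the true labels."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


def precision(y_true, y_pred, positive=1):
    """Share of positive predictions that are actually positive."""
    if len(y_true) != len(y_pred):
        raise ValueError("inputs must be of equal length")
    # True labels of every example the model predicted as positive.
    flagged = [t for t, p in zip(y_true, y_pred) if p == positive]
    if not flagged:
        return 0.0  # No positive predictions: report 0 by convention.
    return sum(t == positive for t in flagged) / len(flagged)


# Made-up labels (1 = should escalate) and model predictions.
y_true = [1, 0, 1, 1, 0, 0]
y_pred = [1, 0, 0, 1, 1, 0]

print(f"accuracy:  {accuracy(y_true, y_pred):.2f}")
print(f"precision: {precision(y_true, y_pred):.2f}")
```

Tracking the same metrics on the same test set after each round of prompt changes or fine-tuning is what turns evaluation into the iterative improvement loop described above.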

For project managers, understanding and effectively guiding this phase is key to the project’s success, ensuring the AI model not only functions as intended but also aligns with business objectives and user expectations.

Phase 4: Application Integration

Transitioning from a well-tuned AI model to a fully integrated application is crucial for the success of any generative AI project. This phase involves ensuring that the AI model not only functions optimally within a controlled test environment but also performs efficiently in real-world operational settings.

This phase covers model optimization for practical deployment and ensuring integration into existing systems and workflows.

Model Optimization: Techniques for efficient inference

Optimizing a generative AI model for inference ensures it can handle real-time data and user interactions efficiently. Here are several key techniques:

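- Quantization: Stores weights (and sometimes activations) in lower-precision number formats, such as 8-bit integers instead of 32-bit floats, reducing memory use and computational load and increasing speed without significantly losing accuracy.

- Pruning: Removes weights that contribute little to the model’s output, making the model faster and more efficient.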

- Model Distillation: Trains a smaller model to replicate a larger model’s behavior, requiring less computational power.

- Hardware-specific Optimizations: Adapt the model to better suit the characteristics of the deployment hardware, enhancing performance.
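To give a feel for what quantization does, the sketch below applies symmetric 8-bit quantization to a handful of weights in plain Python. It is a simplified illustration under stated assumptions (one scale factor for the whole list, made-up weight values), not how a production inference library would implement it.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0
    # Round to the nearest integer step and clip to the int8 range.
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [v * scale for v in quantized]


# Made-up weights for illustration.
weights = [0.5, -1.27, 0.0, 1.0]

q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

print("quantized:", q)
print("restored: ", [round(r, 4) for r in restored])
print("max error:", max(abs(w - r) for w, r in zip(weights, restored)))
```

Each weight now needs one byte instead of four, at the cost of a small rounding error bounded by the scale factor. Whether that error is acceptable is exactly what the evaluation metrics from the previous phase should confirm.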

Building and deploying applications: Best practices

Successfully integrating a generative AI model into an application involves both technical integration and user experience considerations:

Technical Integration

- API Design: Create secure, scalable, and maintainable APIs that allow the model to interact with other application components.

- Data Pipeline Integration: Integrate the model’s data flows effectively with the application’s data systems, accommodating real-time and large-scale data handling.

- Performance Monitoring: Set up tools to continuously assess the model’s performance, with alerts for any issues impacting user experience.

User Interface Design

- User-Centric Approach: Design the UI to make AI interactions intuitive and straightforward.

- Feedback Mechanisms: Incorporate user feedback features to refine the model continuously.

- Accessibility and Inclusivity: Ensure the application is accessible to all users, enhancing acceptance and usability.

Deployment Strategies

- Gradual Rollout: Begin with a limited user base and scale up after initial refinements.

- A/B Testing: Compare different model versions to identify the best performer under real-world conditions.
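Both strategies need a stable way to decide which users see which model version. A common approach is to hash the user ID into a bucket from 0 to 99, so that each user always gets the same variant and the rollout percentage can be raised without reshuffling anyone. The sketch below assumes string user IDs; the salt and the variant names are hypothetical.

```python
import hashlib


def rollout_bucket(user_id: str, salt: str = "genai-rollout") -> int:
    """Map a user ID to a stable bucket in the range 0-99."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest[:8], 16) % 100


def assign_variant(user_id: str, rollout_percent: int) -> str:
    """Return 'new_model' for users inside the rollout, else 'current_model'."""
    if rollout_bucket(user_id) < rollout_percent:
        return "new_model"
    return "current_model"


# Simulate a 10% rollout over 1,000 hypothetical users.
users = [f"user-{i}" for i in range(1000)]
in_rollout = sum(assign_variant(u, 10) == "new_model" for u in users)
print(f"{in_rollout} of {len(users)} users see the new model at 10%")
```

Because the bucket depends only on the user ID and the salt, raising `rollout_percent` from 10 to 50 keeps every early user on the new model while adding new ones, and the same split can serve as the two arms of an A/B test.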

By focusing on these areas, project managers can ensure that the generative AI model is not only integrated into the application architecture effectively but also provides a positive and engaging user experience. This phase is critical for transitioning from a developmental model to a live application that meets business objectives and exceeds user expectations.

Ethical considerations and compliance for AI project management

Ethical considerations are crucial in the management of generative AI projects, given the potential impact these technologies have on individuals and society. Project managers play a key role in ensuring these ethical concerns are addressed throughout the project lifecycle:

Bias Mitigation

AI systems can inadvertently perpetuate or amplify biases present in their training data. Project managers must work closely with data scientists to ensure diverse datasets are used for training and testing the models. Implementing regular audits and bias checks during model training and after deployment is essential.

Transparency

Maintaining transparency in AI operations helps build trust and credibility. This involves clear communication about how AI models make decisions and their limitations. Project managers should ensure that documentation and reporting practices are robust, providing stakeholders with insight into AI processes and outcomes.

Navigating Compliance with Data Privacy Laws and Other Regulations

Compliance with legal and regulatory requirements is another critical aspect managed by project managers in AI projects:

Data Privacy

Generative AI often processes large volumes of personal data. Project managers must ensure that the project complies with data protection laws such as GDPR in Europe, CCPA in California, or other relevant regulations. This includes securing data, managing consent where necessary, and ensuring data is used ethically.
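One small, concrete safeguard is to mask obvious personal identifiers before text is logged or sent to a model. The sketch below is an assumption made for illustration, not a compliance solution: it handles only email addresses with a simple regular expression, and the `[EMAIL]` placeholder is a hypothetical choice.

```python
import re

# Simple pattern for common email addresses; it will not catch every valid form.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def redact_emails(text: str, placeholder: str = "[EMAIL]") -> str:
    """Replace every email address found in the text with a placeholder."""
    return EMAIL_PATTERN.sub(placeholder, text)


message = "Contact jane.doe@example.com about the refund."
print(redact_emails(message))
```

Real projects typically need to cover many more identifier types (names, phone numbers, addresses) and should have the approach reviewed against the regulations that apply to them.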

Regulatory Compliance

Depending on the industry and region, AI applications may be subject to specific regulations. Project managers must stay informed about these regulations and ensure the project adheres to them. This might involve engaging with legal experts and regulatory bodies to navigate complex legal landscapes effectively.

Optimizing generative AI project management processes

Managing generative AI projects requires a mix of strong technical understanding and solid project management skills. As project managers navigate from initial planning through to integrating AI into business processes, they play a critical role in guiding these complex projects to success.

In managing these projects, it’s essential for project managers to continually update their knowledge of new AI developments and maintain a clear line of communication with all stakeholders. This ensures that every phase, from design to deployment, aligns with the project’s goals and complies with ethical standards and regulations.