Have you ever asked an AI tool a question without giving it any background information – just typing in your query and hitting enter? If so, you’ve already used zero-shot reasoning without even realizing it! This technique allows AI models to generate answers based purely on their pre-existing knowledge.

But here’s the catch: while it works well in some cases, it can also lead to vague, inaccurate, or even misleading responses.

So, how can you get better results from AI? The secret lies in effective prompting. By structuring your queries well, you can noticeably improve an AI’s reasoning, accuracy, and transparency.

In this blog, we’ll break down the different prompting techniques, explore their impact, and show you how to get the most out of AI tools. Whether you’re a casual user or a professional working with large language models (LLMs), mastering these techniques can take your AI experience to the next level.

Let’s dive in!

What is Zero-Shot Reasoning?

Zero-shot reasoning is a large language model’s ability to handle a task without any task-specific training or examples. It relies entirely on what it already “knows” from the vast amount of data it was trained on.

Zero-shot prompting means giving an LLM a single question or instruction with no additional context or examples. Most of us use generative AI tools this way, right?
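
To make this concrete, here is a minimal sketch of what a zero-shot prompt looks like when sent to a chat-style model. The message format (a list of `role`/`content` dictionaries) is an assumption loosely modelled on common chat APIs, and the function name is hypothetical. The code only builds the prompt and does not call any model.

```python
from typing import Dict, List

# Hypothetical message format: a list of {"role": ..., "content": ...} dicts,
# loosely modelled on common chat-style LLM APIs. Nothing is sent anywhere.
Message = Dict[str, str]


def build_zero_shot_messages(question: str) -> List[Message]:
    """Wrap a bare question as a zero-shot prompt: no context, no examples."""
    return [{"role": "user", "content": question}]


messages = build_zero_shot_messages("What is the capital of Australia?")
print(messages)
# [{'role': 'user', 'content': 'What is the capital of Australia?'}]
```

The whole prompt is the question itself, so everything else has to come from the model’s pre-existing knowledge.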

While this lets the model answer from its pre-existing knowledge, it has limitations and can produce suboptimal results. That’s why it is a good idea to review or cross-check the information AI tools provide.

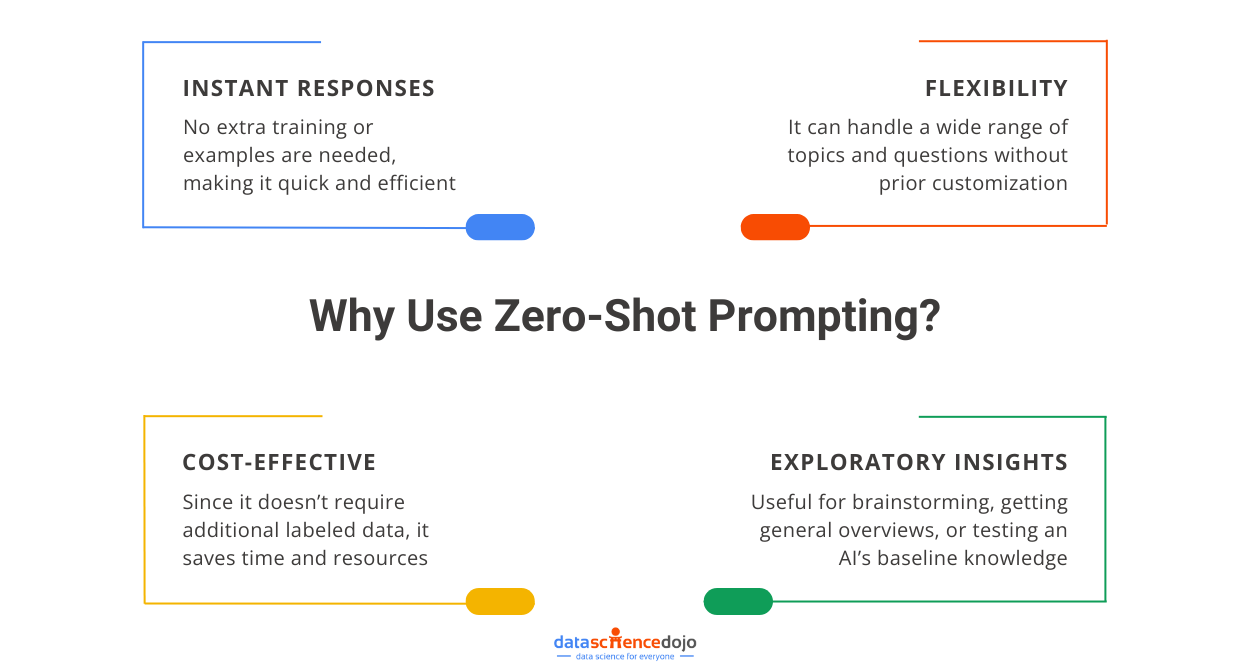

Benefits of Zero-Shot

Zero-shot reasoning is useful because it allows models to generate responses instantly without needing any extra training. This makes it fast, flexible, and cost-effective. It’s a great tool for quick insights, brainstorming, and exploring new topics without requiring pre-fed examples or specific datasets.

Despite its limitations, zero-shot reasoning provides a strong starting point for more complex tasks. Before we explore alternative techniques like few-shot or chain-of-thought prompting, let’s take a closer look at those limitations.

How Does Zero-Shot Prompting Impact Response Quality?

Zero-shot prompting is a powerful tool, but it has its limitations. Since the model generates responses without extra context or examples, the quality of its answers can vary. Sometimes, it may provide useful insights, but other times, the responses may be vague, off-topic, or incomplete. Let’s break down why this happens.

Reliance on Pre-existing Knowledge

When you ask an AI a question using zero-shot prompting, it pulls from the knowledge it already has. But if the model hasn’t encountered the exact information before, it might struggle to give a relevant answer. In such cases, it may generate a response based on related topics, which can sometimes be helpful but other times feel too general or inaccurate.

Lack of Contextual Understanding

Without additional context, the model may miss the specific intent or nuances of the prompt. This can result in generic or irrelevant responses that don’t address the actual question or task.

Imagine asking, “What’s the best way to improve engagement?” Without any context, the model doesn’t know if you mean social media engagement, student engagement in class, or something else entirely. This lack of clarity can lead to vague answers that don’t fully address what you are asking.
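
One simple remedy is to state the missing context alongside the question. The sketch below is illustrative only: the `Context:`/`Question:` layout and the `add_context` helper are hypothetical choices, and the example context is made up.

```python
def add_context(question: str, context: str) -> str:
    """Prepend background information so the model knows what the question is about."""
    return f"Context: {context}\n\nQuestion: {question}"


ambiguous = "What's the best way to improve engagement?"

# Zero-shot: the model has to guess which kind of engagement is meant.
print(ambiguous)

# Same question with the missing context supplied (the context is a made-up example).
contextual = add_context(
    ambiguous,
    "I run a small bakery's Instagram account and want more comments on my posts.",
)
print(contextual)
```

The question itself is unchanged; only the surrounding information differs, which narrows “engagement” down to social media.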

Limited Generalization

AI models are great at recognizing patterns, but zero-shot prompting can struggle with entirely new or complex topics. Even though LLMs have impressive generalization capabilities, a prompt that is novel or unfamiliar to the model can still trip it up.

The model may fail to generalize effectively from its pre-existing knowledge. Instead of making logical connections, it might provide an answer that sounds confident but is inaccurate.

Inaccurate or Incomplete Responses

Due to the lack of guidance or examples, zero-shot prompts may lead to inaccurate or incomplete responses. The model may not grasp the full scope or requirements of the prompt, resulting in responses that don’t fully address the question or provide comprehensive information.

While zero-shot prompting is useful for quick answers, it has its flaws. The lack of context, difficulty with unfamiliar topics, and the potential for vague or incomplete responses mean that it’s not always the best approach.

Limitations of Zero-Shot Reasoning

Let’s explore some key limitations that can impact response quality.

1. Lack of Specificity and Precision

A bare zero-shot prompt usually carries little guidance beyond the question itself, which limits how precise or specific the model’s responses can be. This is a challenge when you need detailed or nuanced information, because the AI may give a general answer instead of a precise one.

If you are looking for in-depth or highly specific information, the response may not fully meet your needs. For example, asking, “How do I improve website performance?” might get a broad answer instead of detailed steps for a specific platform like WordPress or Shopify.

2. No Clarification or Feedback Loop

Zero-shot prompting gives the model no chance to ask for clarification or receive feedback before it answers, so it cannot refine its understanding of the prompt. This can hurt the accuracy and relevance of its responses.

If the prompt is vague or missing details, the model won’t seek more information before responding. This can result in answers that miss the point or lack accuracy. In a real conversation, you’d ask follow-up questions to refine your understanding, but with zero-shot prompting, the AI just takes its best guess.

3. Struggles with Subjectivity and Ambiguity

Zero-shot prompts may struggle with subjective or ambiguous questions that require personal opinions or preferences. The model’s responses may vary widely depending on its interpretation of the prompt, leading to inconsistent or unreliable answers.

If you ask, “What’s the best book of all time?” the AI has no personal opinion, and the answer could vary based on different sources or criteria. Since it lacks human judgment, its responses to subjective topics might feel inconsistent or unreliable.

While zero-shot prompting allows large language models to generate responses based on their preexisting knowledge, it has limitations in terms of contextual understanding, accuracy, and specificity. Employing other prompting techniques, such as few-shot prompting or chain-of-thought prompting, can help address these limitations and improve the quality of responses from large language models.

The Importance of Prompting

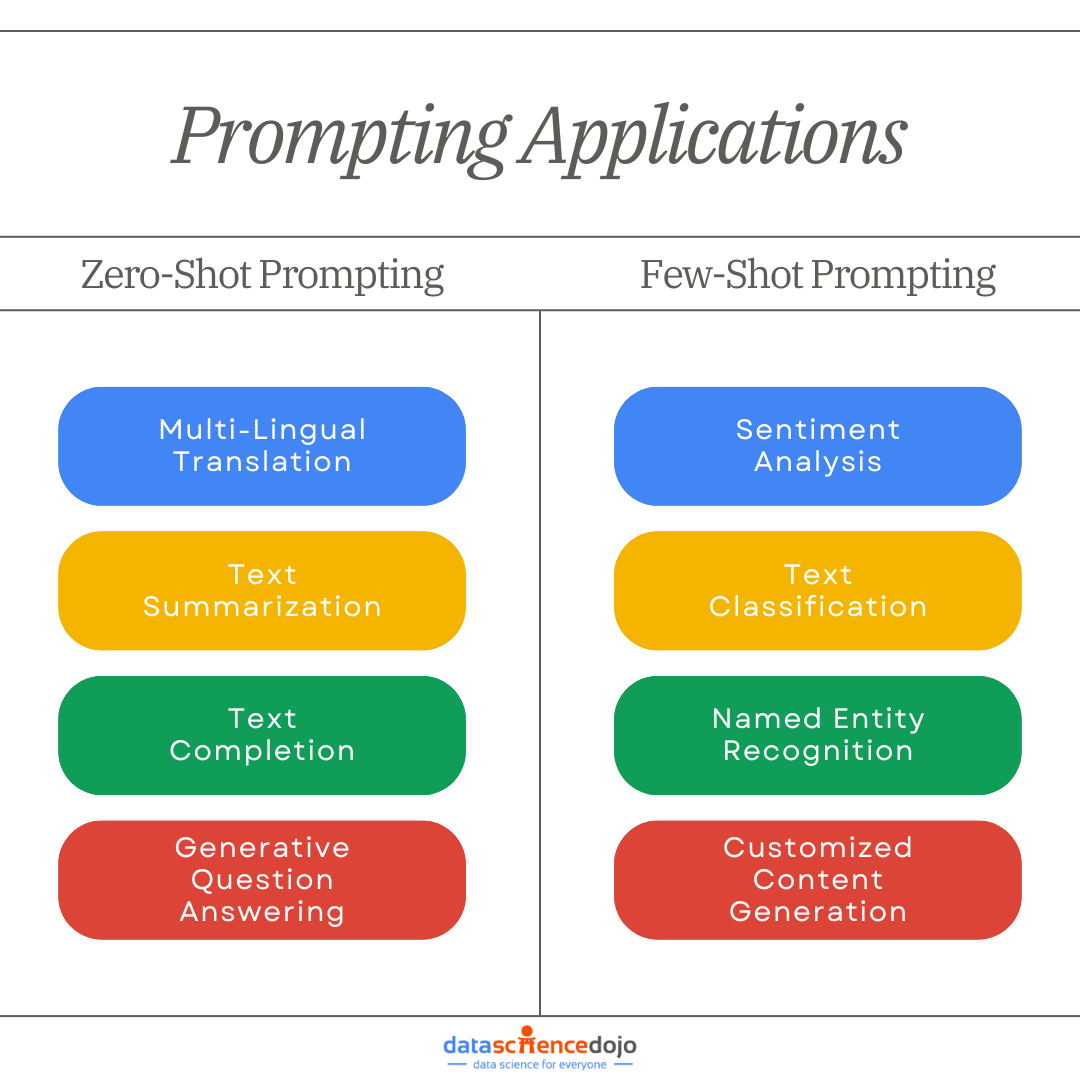

Proper prompting plays a significant role in the quality of responses generated by LLMs. The video highlights the difference between zero-shot prompting and few-shot prompting. Zero-shot prompting relies solely on the model’s preexisting knowledge, while few-shot prompting provides examples or guidance to help the model understand the task at hand.

Benefits of Proper Prompting Techniques

Using appropriate prompting techniques can significantly improve the quality of responses from LLMs. It helps the model better understand the task, generate accurate and relevant responses, and provide transparency into its reasoning process.

This is essential for Explainable AI (XAI), as it enables users to evaluate the correctness and relevance of the responses.

Here are the key benefits:

Improved Understanding of the Task

By using appropriate prompts, we can provide clearer instructions or questions to the model. This helps the model better understand the task at hand, leading to more accurate and relevant responses. Clear and precise prompts ensure that the model focuses on the specific information needed to generate an appropriate answer.

Guidance with Few-Shot Prompting

Few-shot prompting involves providing examples or guidance to the model. By including relevant examples or context, we can steer the model toward the desired response. The model generalizes from the provided examples, which often improves its accuracy on similar inputs it has not seen before.
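
Here is a minimal sketch of how a few-shot prompt can be assembled: an instruction, a handful of worked input/output pairs, and then the new input with its output left blank so the model continues the pattern. The `Input:`/`Output:` layout, the function name, and the sentiment examples are all illustrative assumptions, not a required format.

```python
from typing import List, Tuple


def build_few_shot_prompt(
    instruction: str,
    examples: List[Tuple[str, str]],
    query: str,
) -> str:
    """Assemble an instruction, worked input/output pairs, and the new input.

    The final "Output:" is left blank so the model continues the pattern.
    """
    parts = [instruction, ""]
    for example_input, example_output in examples:
        parts.append(f"Input: {example_input}")
        parts.append(f"Output: {example_output}")
        parts.append("")
    parts.append(f"Input: {query}")
    parts.append("Output:")
    return "\n".join(parts)


# Illustrative sentiment-labelling examples (made up for this sketch).
examples = [
    ("The battery lasts all day and charges quickly.", "positive"),
    ("The screen cracked after one week.", "negative"),
]
prompt = build_few_shot_prompt(
    "Classify the sentiment of each product review as positive or negative.",
    examples,
    "Setup was painless and the sound quality is excellent.",
)
print(prompt)
```

Keeping every example in the same layout is what lets the model infer both the task and the expected answer format.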

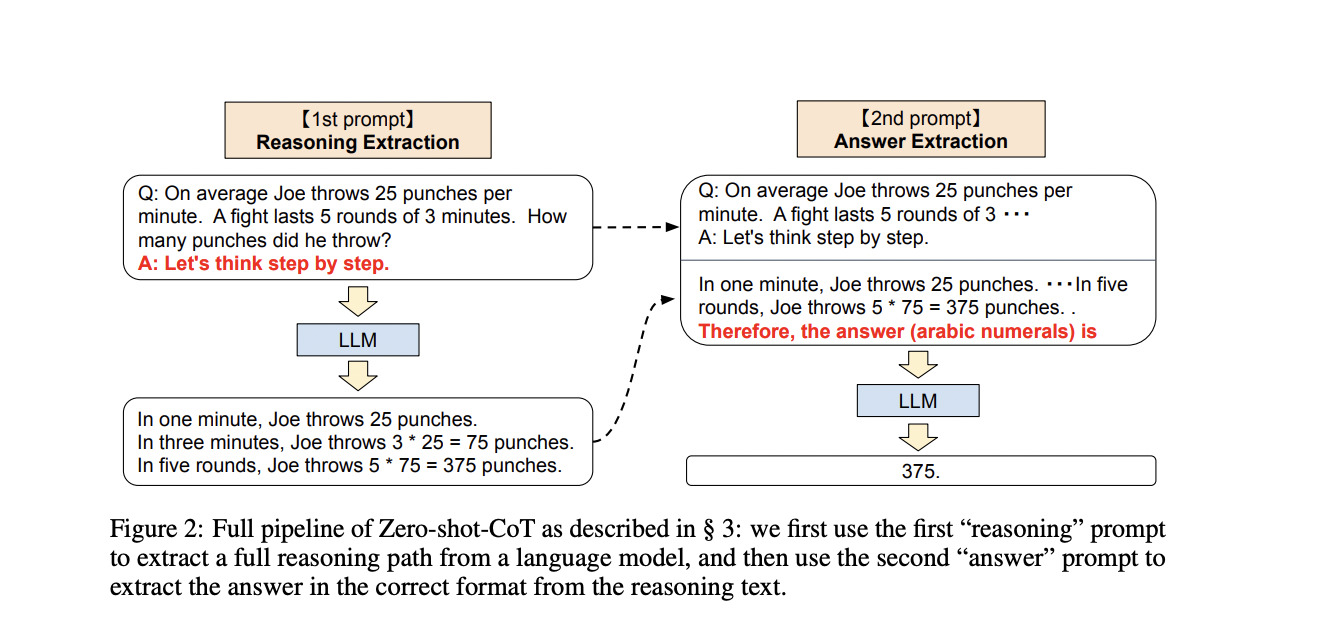

Enhanced Reasoning with Chain-of-Thought Prompting

Chain-of-thought prompting involves breaking down a problem into sequential steps for the model to follow. This technique helps the model reason through the problem and consider different possibilities or perspectives. By encouraging a structured thought process, chain-of-thought prompting aids the model in generating more accurate and well-reasoned responses.

Transparent Explanation of Responses

Proper prompting techniques also contribute to the transparency of responses. By guiding the model’s thinking process and encouraging it to document its chain of thought, users gain insights into how the model arrived at a particular answer. This transparency helps evaluate the correctness and relevance of the response and facilitates Explainable AI (XAI) principles.

Mitigation of Bias and Narrowness

Using proper prompts can help mitigate biases or narrowness in the model’s responses. By guiding the model to consider alternative perspectives or approaches, we can encourage more well-rounded and comprehensive answers. This helps avoid biased or limited responses and provides a broader understanding of the topic.

Proper prompting techniques significantly improve the accuracy and transparency of responses from LLMs. They help the model understand the task, provide guidance, enhance reasoning, and mitigate biases. By employing these techniques, we can maximize the benefits of LLMs while ensuring accurate, relevant, and transparent responses.

Few-Shot Prompting

Few-shot prompting improves the reasoning capabilities of large language models. By providing relevant examples, the model can better understand the prompt and generate accurate and contextually appropriate responses. These examples help the model understand the pattern, context, and format of the desired response.

This technique is especially valuable when dealing with open-ended or subjective questions. Some key benefits of few-shot prompting include:

- More Accurate Responses – The model understands what kind of answer is expected and follows the pattern provided.

- Better Context Awareness – Few-shot examples help the model grasp specific nuances, reducing generic or off-topic answers.

- Improved Consistency – By setting a format, few-shot prompting helps the model keep a consistent tone and structure.

- Enhanced Reasoning Ability – The AI can generalize from the examples to provide better explanations, making it useful for complex topics.

This makes few-shot prompting a great choice when you need precise, structured, or detailed answers. It works especially well for technical topics where generic AI responses fall short, helping the model provide more industry-specific insights.

It’s also useful for creative or stylistic writing, ensuring the AI follows a specific tone or format. Plus, if your question requires step-by-step reasoning, few-shot prompting helps the model think more logically and provide well-rounded responses.

Few-shot prompting is a powerful way to get more refined and relevant AI responses. Next, let’s look at another option: chain-of-thought prompting.

Chain-of-Thought Prompting

Chain-of-thought prompting is often implemented as a form of few-shot prompting in which the examples include their intermediate reasoning steps. It involves breaking a problem down into sequential steps for the model to follow. This technique can improve the quality of responses and also helps users see how the model arrived at a particular answer.
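
The difference from ordinary few-shot prompting is that each example answer spells out its reasoning before giving the result. The sketch below builds such a prompt. The `Q:`/`A:` layout, the closing phrase “The answer is …”, the function name, and the arithmetic exemplar are illustrative assumptions.

```python
from typing import List, Tuple

# Each exemplar is (question, worked reasoning, final answer). Writing the
# reasoning out step by step shows the model the structure to imitate.
Exemplar = Tuple[str, str, str]


def build_cot_prompt(exemplars: List[Exemplar], question: str) -> str:
    """Build a few-shot chain-of-thought prompt that ends with an open answer slot."""
    blocks = [
        f"Q: {q}\nA: {reasoning} The answer is {answer}."
        for q, reasoning, answer in exemplars
    ]
    blocks.append(f"Q: {question}\nA:")
    return "\n\n".join(blocks)


# A made-up exemplar whose answer spells out each intermediate step.
exemplars = [
    (
        "A bakery has 23 muffins. It sells 15 and then bakes 12 more. "
        "How many muffins does it have now?",
        "It starts with 23 muffins. After selling 15 it has 23 - 15 = 8. "
        "Baking 12 more gives 8 + 12 = 20.",
        "20",
    ),
]
prompt = build_cot_prompt(
    exemplars,
    "A shelf holds 40 books. 18 are borrowed and then 5 are returned. "
    "How many books are on the shelf now?",
)
print(prompt)
```

A model that follows this pattern is nudged to write its own intermediate steps for the new question before stating a final answer.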

Why Use Chain-of-Thought Prompting?

Chain-of-thought prompting offers several advantages when using large language models like ChatGPT. Let’s explore some of them:

1. Transparency and Explanation

Chain-of-thought prompting encourages the model to provide detailed and transparent responses. By documenting its thinking process, the model explains how it arrived at a particular answer. This transparency helps users understand the reasoning behind the response and evaluate its correctness and relevance. This is particularly important for Explainable AI (XAI), where understanding the model’s reasoning is crucial.
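
Because the reasoning is written out, it can be reviewed separately from the final answer. The sketch below assumes the reply ends with the “The answer is X.” convention used in chain-of-thought exemplars. Real model output may not follow it, so a missing marker returns `None` instead of a guess. The function name and the sample reply are hypothetical; the reply is hand-written, not real model output.

```python
import re
from typing import Optional, Tuple

# Assumed convention: the reply ends with "The answer is X." on its last line.
ANSWER_PATTERN = re.compile(r"The answer is\s+([^\n]+?)\.?\s*$", re.IGNORECASE)


def split_reasoning(response: str) -> Tuple[str, Optional[str]]:
    """Return (reasoning steps, final answer) from a chain-of-thought reply."""
    text = response.strip()
    match = ANSWER_PATTERN.search(text)
    if match is None:
        # No final-answer marker found: keep everything as reasoning for review.
        return text, None
    reasoning = text[: match.start()].strip()
    return reasoning, match.group(1).strip()


# A hand-written example reply, not real model output.
reply = (
    "The shelf starts with 40 books. 18 are borrowed, leaving 40 - 18 = 22. "
    "5 are returned, so 22 + 5 = 27. The answer is 27."
)
steps, answer = split_reasoning(reply)
print("Reasoning:", steps)
print("Answer:", answer)
```

Checking each printed step (40 - 18 = 22, then 22 + 5 = 27) is exactly the kind of evaluation that a transparent chain of thought makes possible.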

2. Comprehensive and Well-Rounded Answers

Chain-of-thought prompting encourages the model to consider alternative perspectives and different approaches to a problem. By asking the model to think through various possibilities, it generates more comprehensive and well-rounded answers. This helps avoid narrow or biased responses and provides users with a broader understanding of the topic or question.

3. Improved Reasoning

Chain-of-thought prompting enhances the model’s reasoning capabilities. By breaking down complex problems into sequential steps, the model can follow a structured thought process. This technique improves the quality of the model’s responses by encouraging it to consider different aspects and potential solutions.
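
The step-by-step structure does not always require worked examples. A widely used variant, often called zero-shot chain-of-thought, simply appends a cue such as “Let’s think step by step.” to the question so the model writes out intermediate steps before answering. This is a minimal sketch. The `Q:`/`A:` layout, the function name, and the default trigger text are illustrative choices, and how much the cue helps varies by model and task.

```python
def build_zero_shot_cot_prompt(
    question: str, trigger: str = "Let's think step by step."
) -> str:
    """Ask for step-by-step reasoning without supplying any worked examples."""
    return f"Q: {question}\nA: {trigger}"


prompt = build_zero_shot_cot_prompt(
    "A shelf holds 40 books. 18 are borrowed and then 5 are returned. "
    "How many books are on the shelf now?"
)
print(prompt)
```

Compared with the few-shot version, this trades some control over the reasoning format for a much shorter prompt.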

4. Contextual Understanding

Chain-of-thought prompting helps the model better handle the context of a question or task. By providing intermediate steps and guiding the model through a logical thought process, it helps the model interpret the prompt more fully. This can lead to more accurate and contextually appropriate responses.

It’s important to note that while chain-of-thought prompting can enhance the performance of LLMs, it is not a foolproof solution. Models still have limitations and may produce incorrect or biased responses. However, by employing proper prompting techniques, we can maximize the benefits and improve the overall quality of the model’s responses.

Which Prompting Technique Do You Use?

Zero-shot reasoning and large language models have ushered in a new era of AI capabilities. Understanding prompting techniques such as zero-shot, few-shot, and chain-of-thought prompting is crucial for anyone who wants to upgrade their work with these modern AI tools.

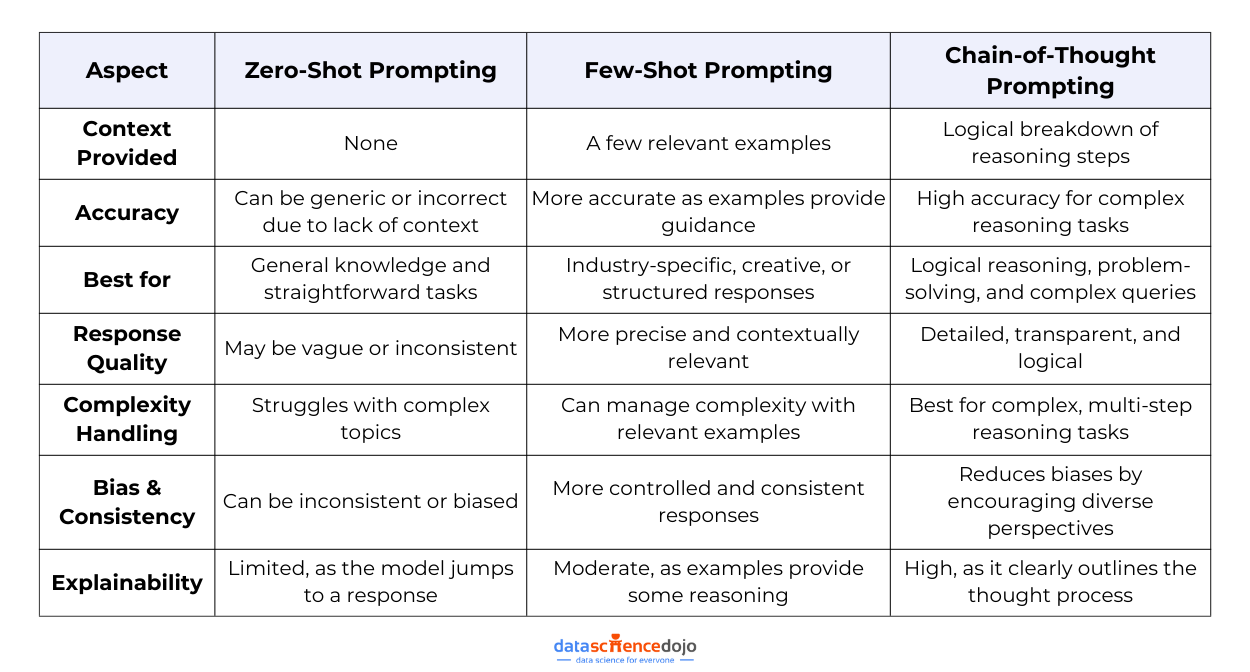

Below is a quick overview comparing the three prompting techniques we have discussed to give you an idea.

By understanding and using these techniques effectively, you can unlock the power of large language models and enhance their reasoning capabilities.

Mastering AI Prompting: The Key to Better Responses

Prompting isn’t just about asking AI a question; it’s about how you ask it. Zero-shot prompting works well for quick answers but often lacks depth. Few-shot prompting provides better accuracy by offering examples, while chain-of-thought prompting improves reasoning by breaking down complex queries.

By choosing the right technique, you can get more precise, logical, and insightful responses from AI. As AI continues to evolve, mastering these prompting techniques will be essential for anyone looking to maximize its potential.

Whether you’re writing, researching, or problem-solving, knowing how to guide AI effectively can make all the difference. So, next time you interact with an AI tool, think about your approach, because a well-structured prompt leads to a smarter response!

For an in-depth understanding of the role and importance of prompting in the world of LLMs, join our LLM bootcamp today!