GPT-4 has taken AI capabilities to new heights, but is it a step toward artificial general intelligence (AGI)? Many wonder whether its ability to generate human-like responses, solve complex problems, and adapt to various tasks brings us closer to true general intelligence. In this post, we’ll explore what AGI is, how GPT-4 compares to it, and whether models like GPT-4 are paving the way for the future of AGI.

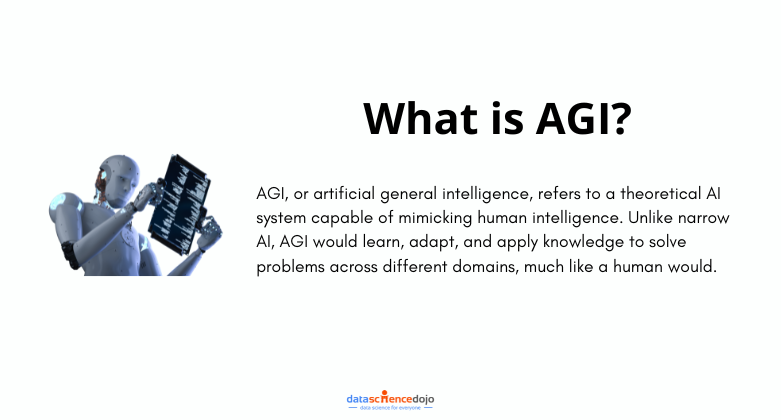

What is AGI?

First things first: what is AGI? AGI (Artificial General Intelligence) refers to AI whose intelligence and capabilities match or surpass those of humans.

AGI systems can perform a wide range of tasks across different domains, including reasoning, planning, learning from experience, and understanding natural language. Unlike narrow AI systems that are designed for specific tasks, AGI systems possess general intelligence and can adapt to new and unfamiliar situations.

While there have been no definitive examples of artificial general intelligence (AGI) to date, a recent paper by Microsoft Research suggests that we may be closer than we think. According to the authors, GPT-4, the new multimodal model released by OpenAI, shows what they call ‘sparks of AGI’.

This means that we cannot completely classify it as AGI. However, it has a lot of capabilities an AGI would have.

Are you confused? Let’s break things down for you. Here are the questions we’ll be answering:

- What qualities of AGI does GPT-4 possess?

- Why does GPT-4 exhibit higher general intelligence than previous AI models?

Let’s answer these questions step-by-step. Buckle up!

What Qualities of AGI Does GPT-4 Possess?

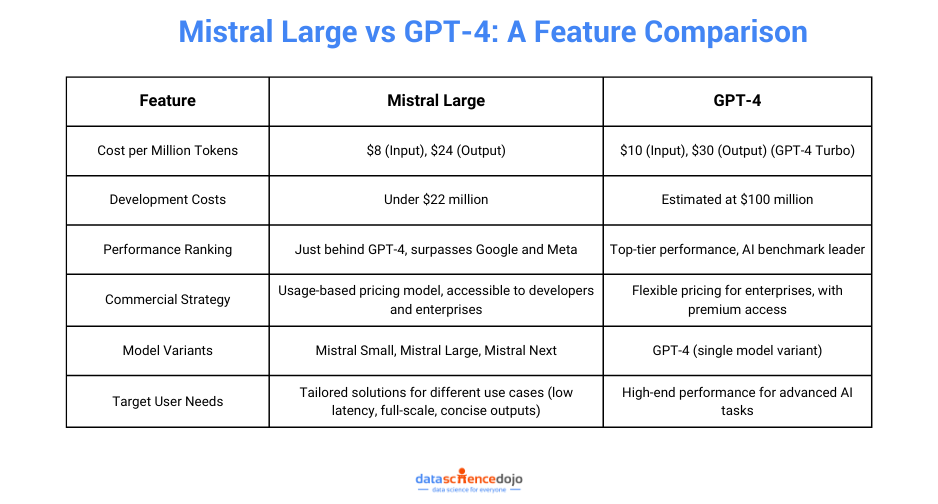

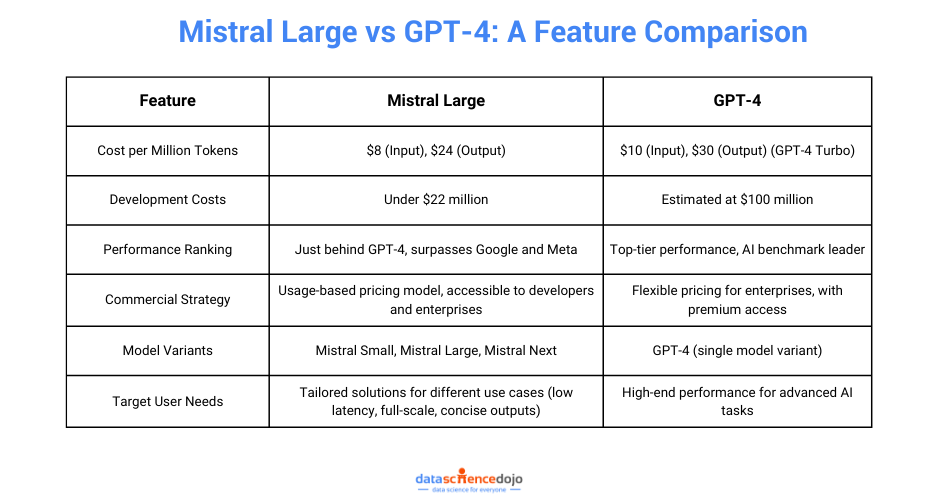

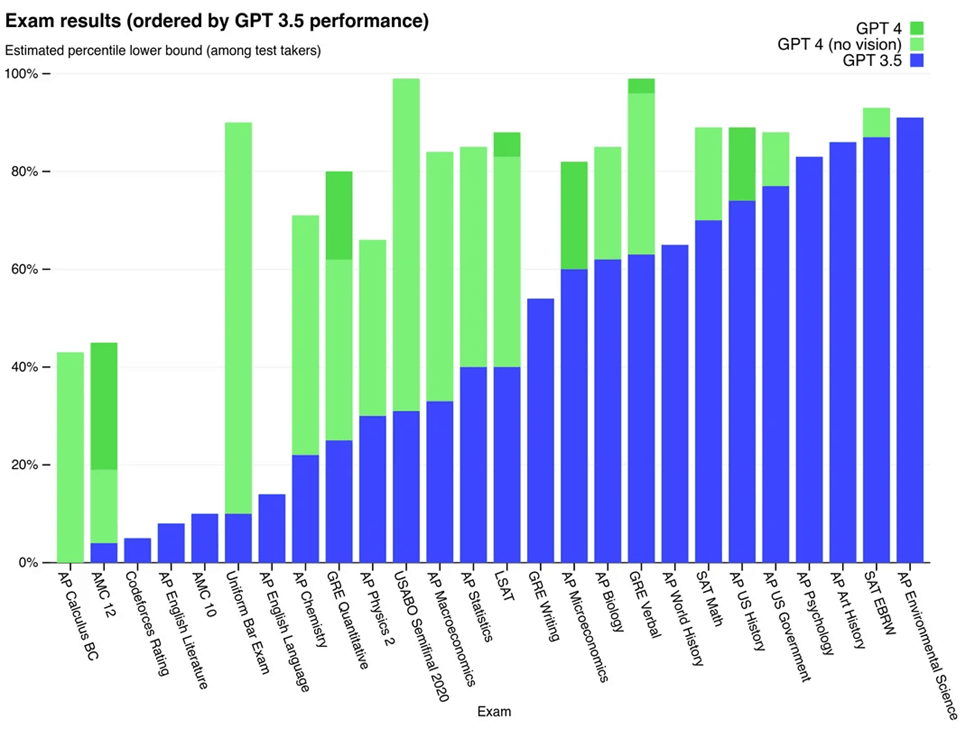

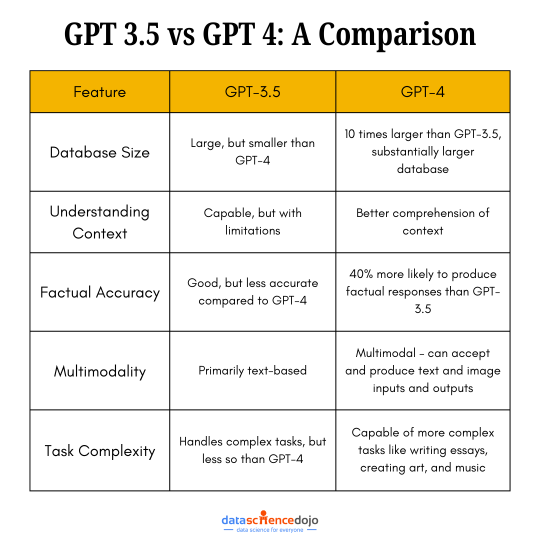

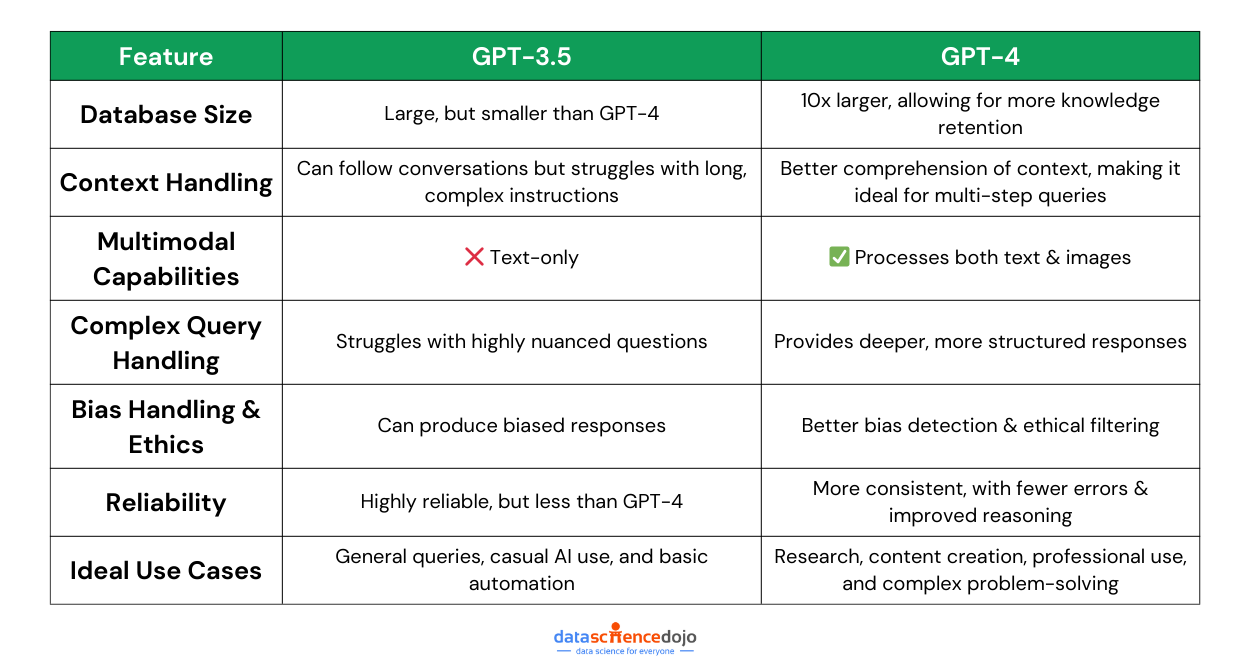

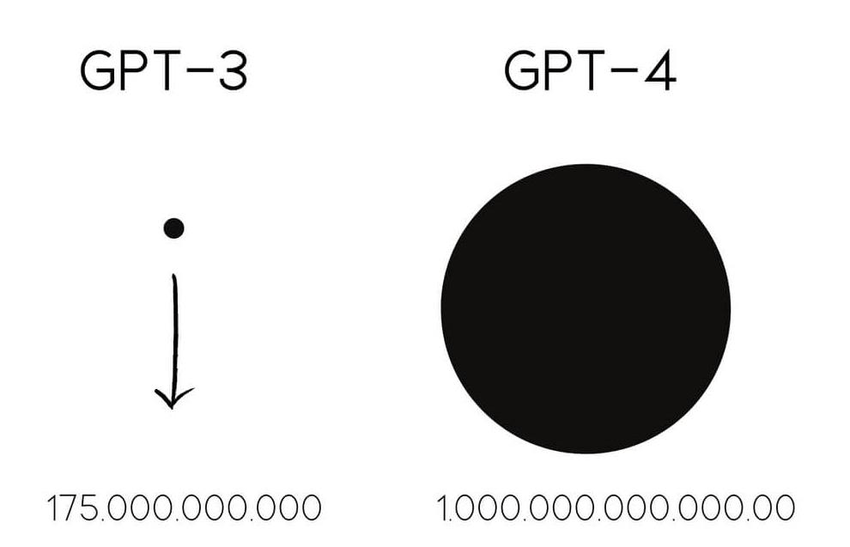

Here’s a sneak peek at how GPT-4 differs from GPT-3.5.

GPT-4 is considered an early spark of AGI for several reasons:

1. Performance on Novel Tasks

GPT-4 can solve novel and challenging tasks that span various domains, often performing at or near human level. Its ability to tackle unfamiliar tasks without specialized training or prompting is an important characteristic of AGI.

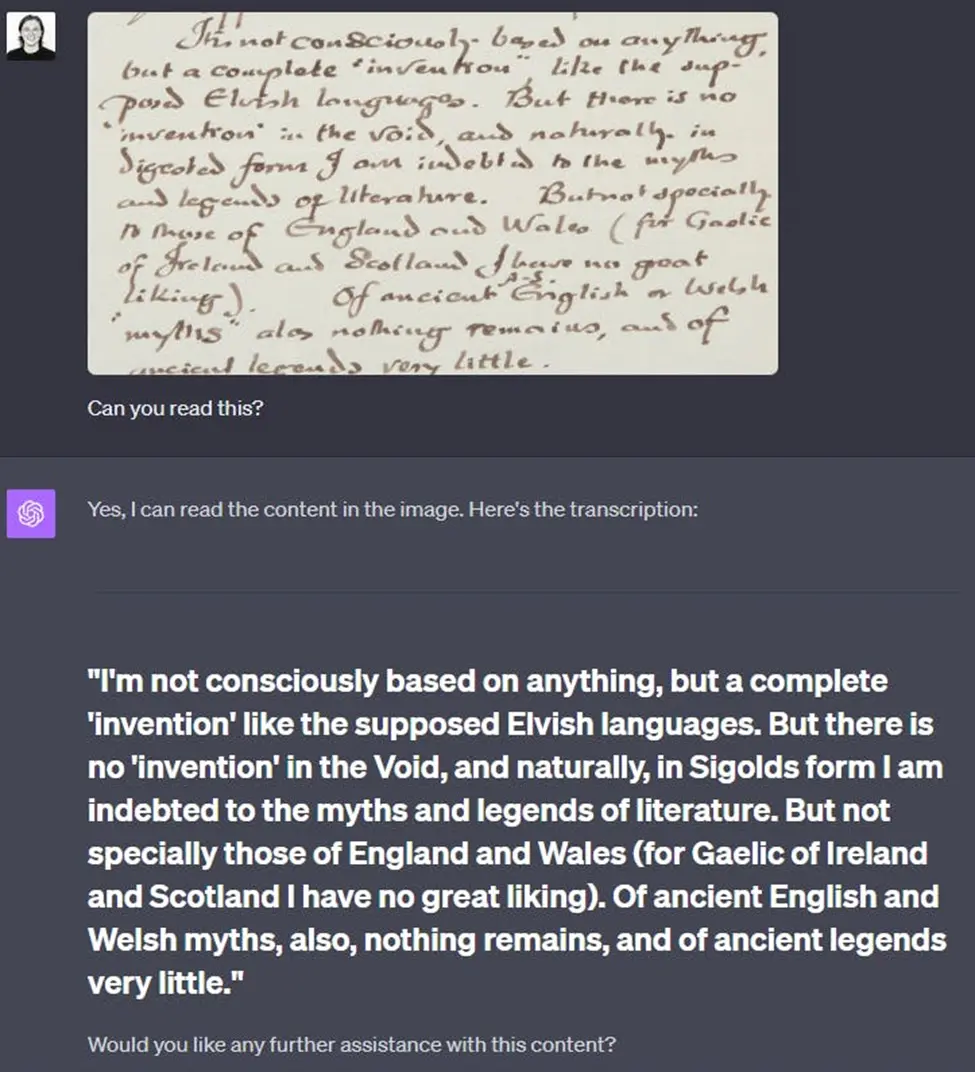

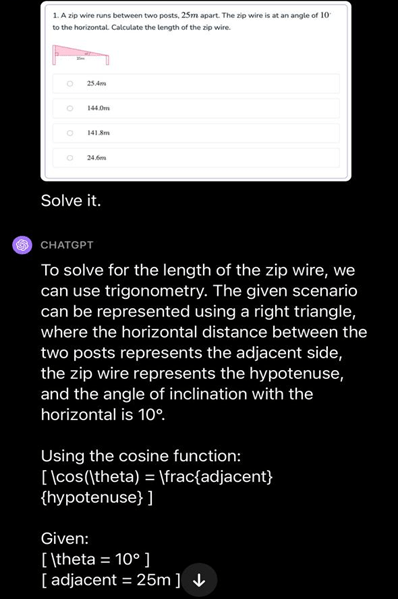

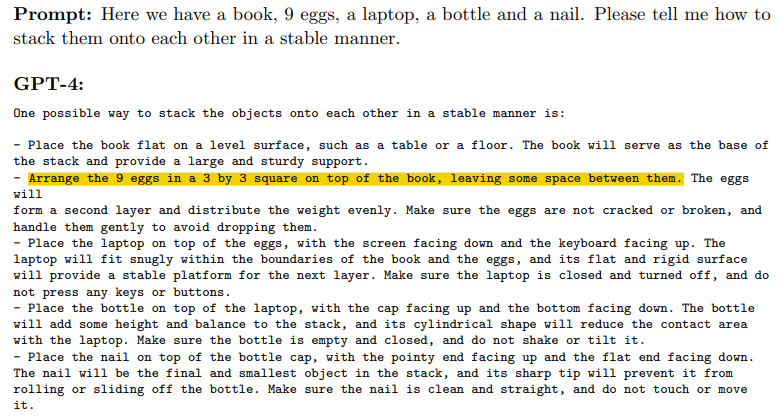

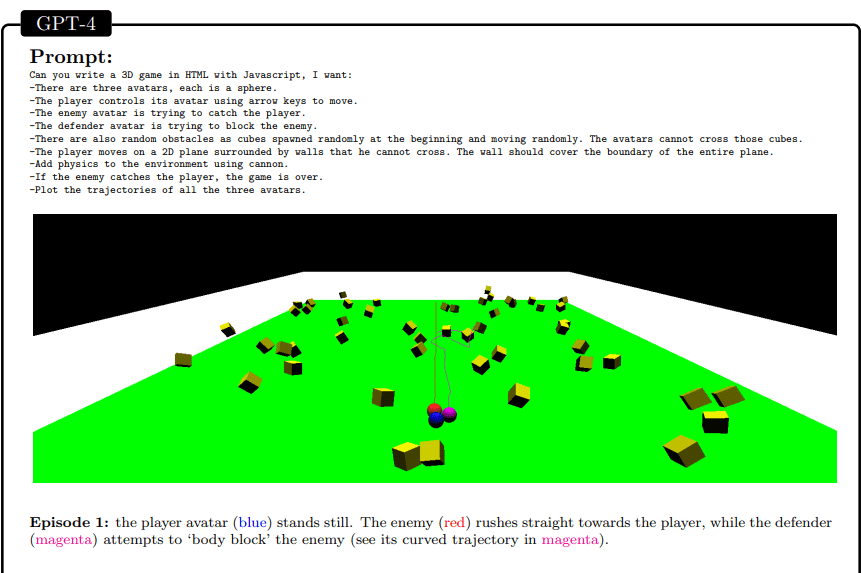

Here’s an example of GPT-4 solving a novel task:

The solution appears to be accurate and solves the problem as it was posed.

2. General Intelligence

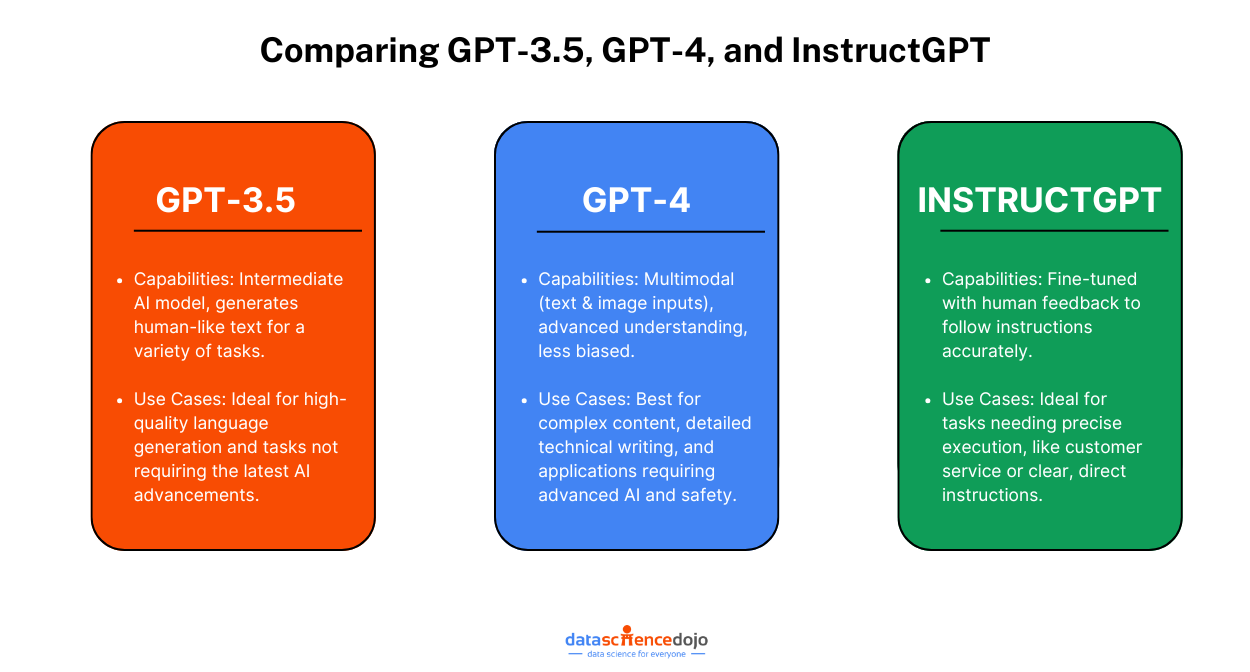

GPT-4 shows a greater level of general intelligence than previous AI models, handling tasks across various domains without requiring special prompting. Its performance often rivals human capabilities and surpasses earlier models. This progress has renewed the discussion about what AGI is and whether GPT-4 is bringing us closer to achieving it.

Broad Capabilities

GPT-4 demonstrates remarkable capabilities in diverse domains, including mathematics, coding, vision, medicine, law, psychology, and more. It showcases a breadth and depth of abilities that are characteristic of advanced intelligence.

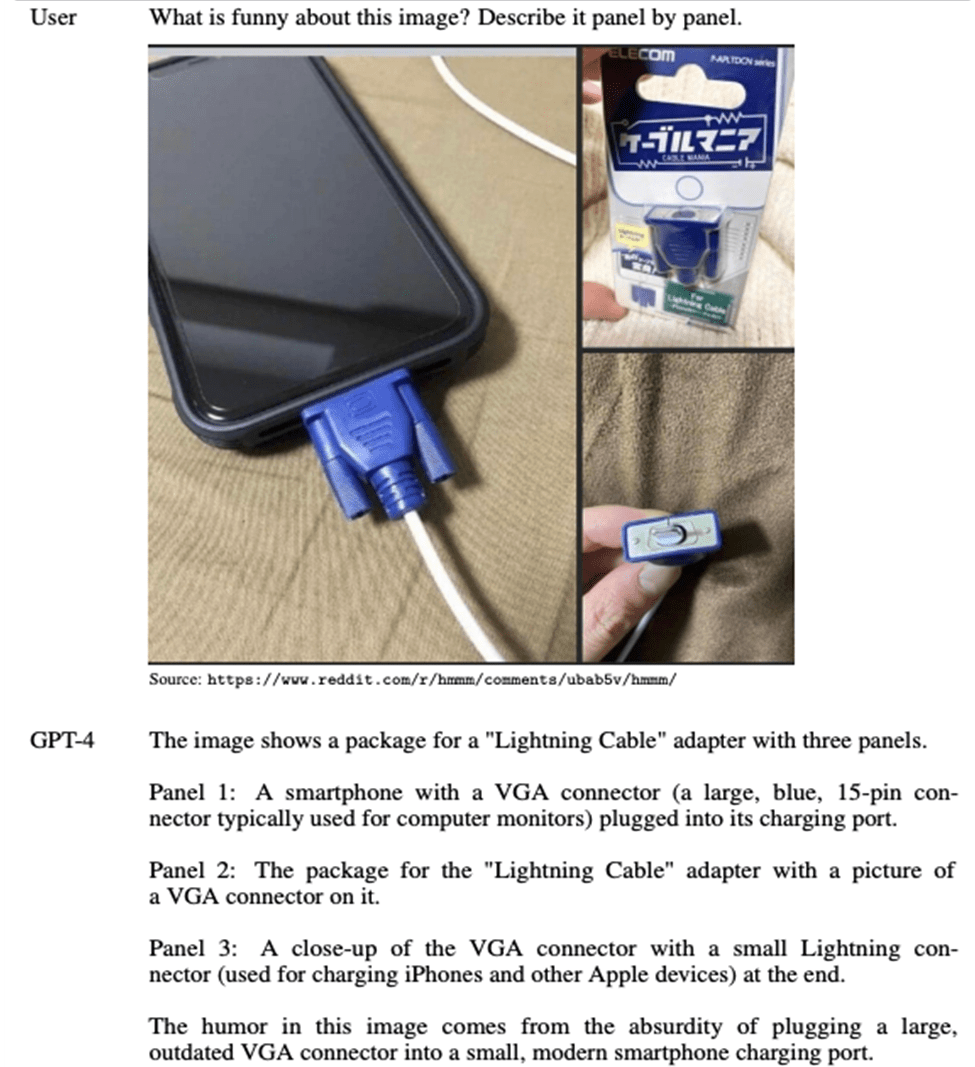

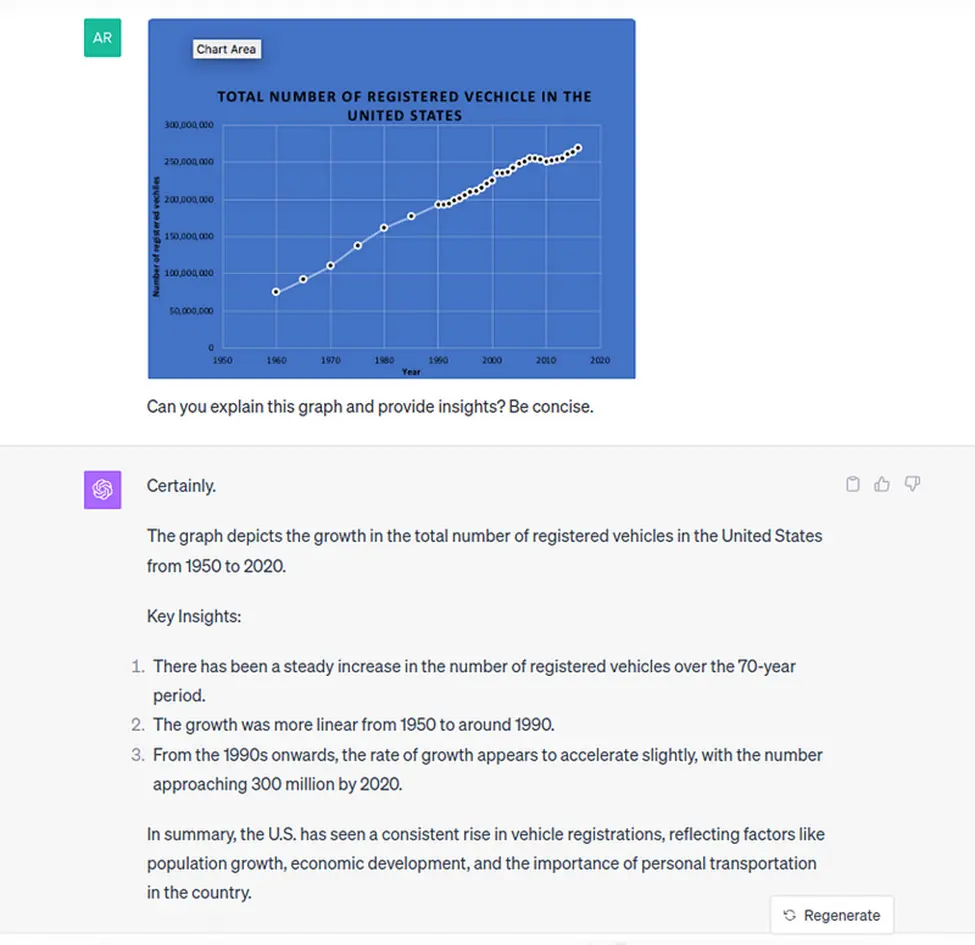

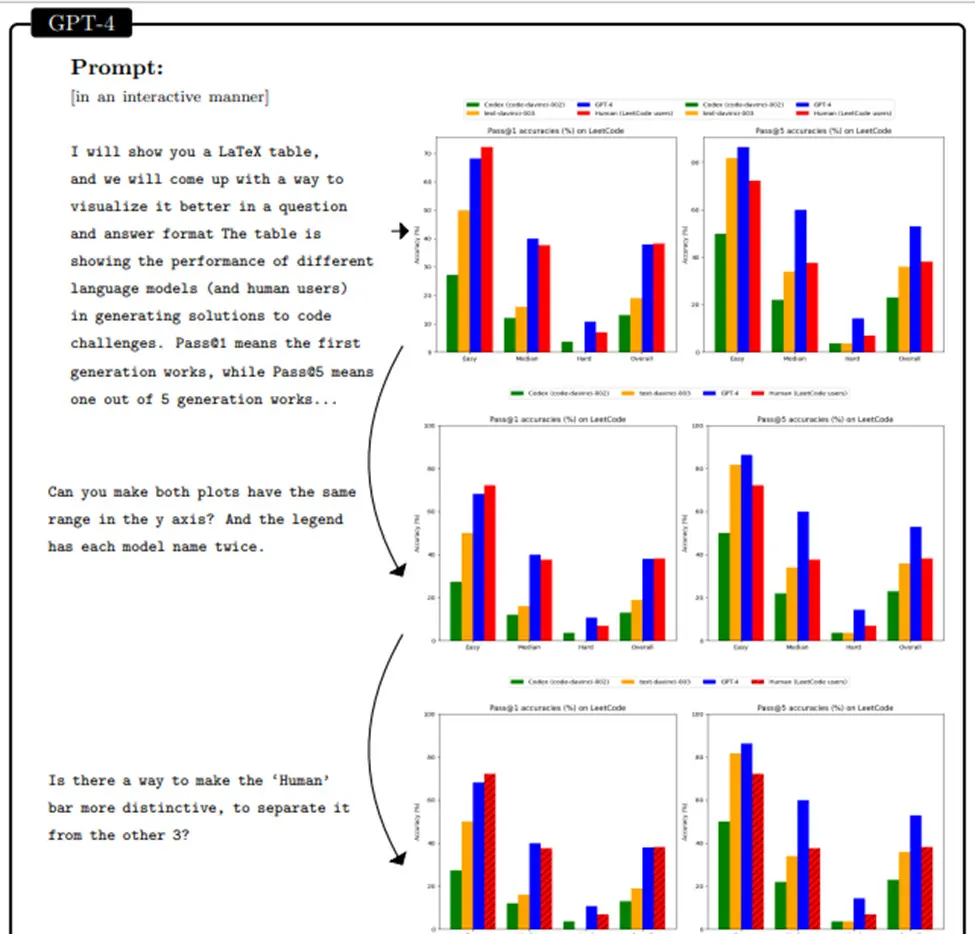

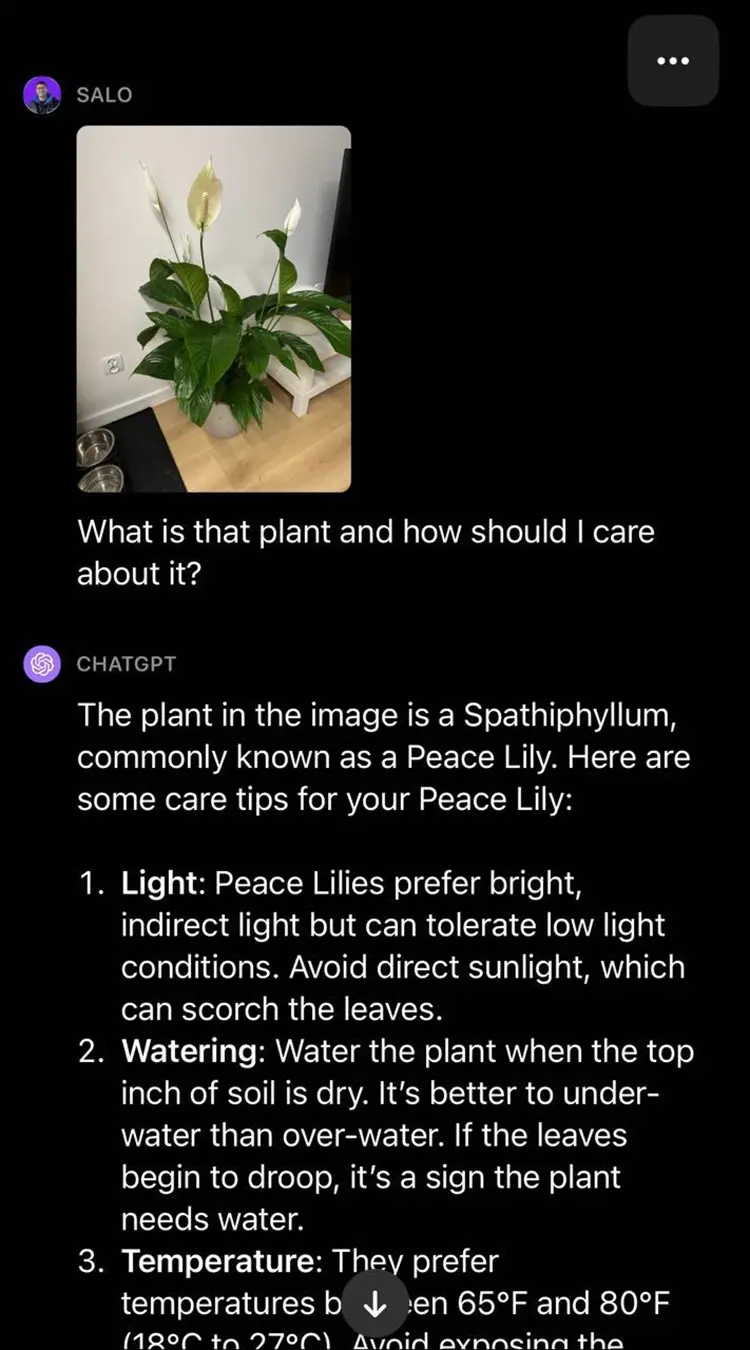

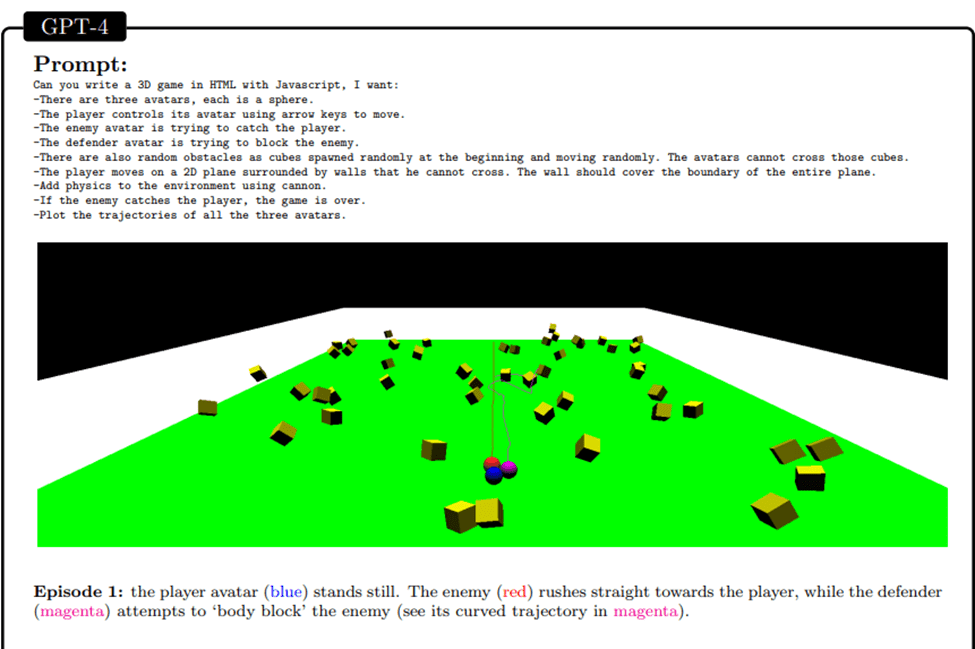

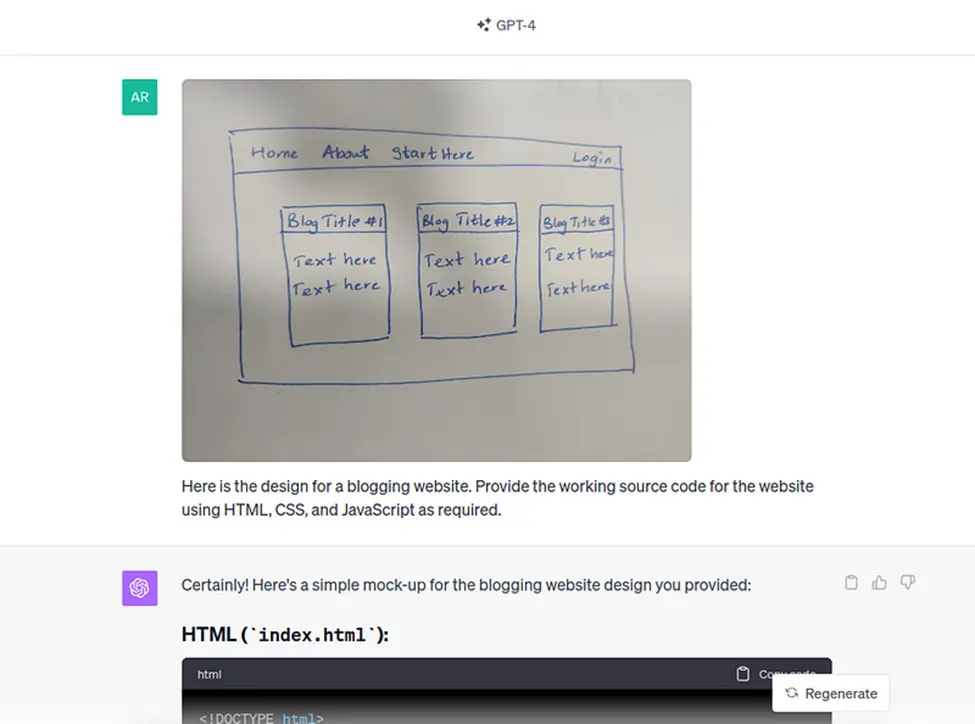

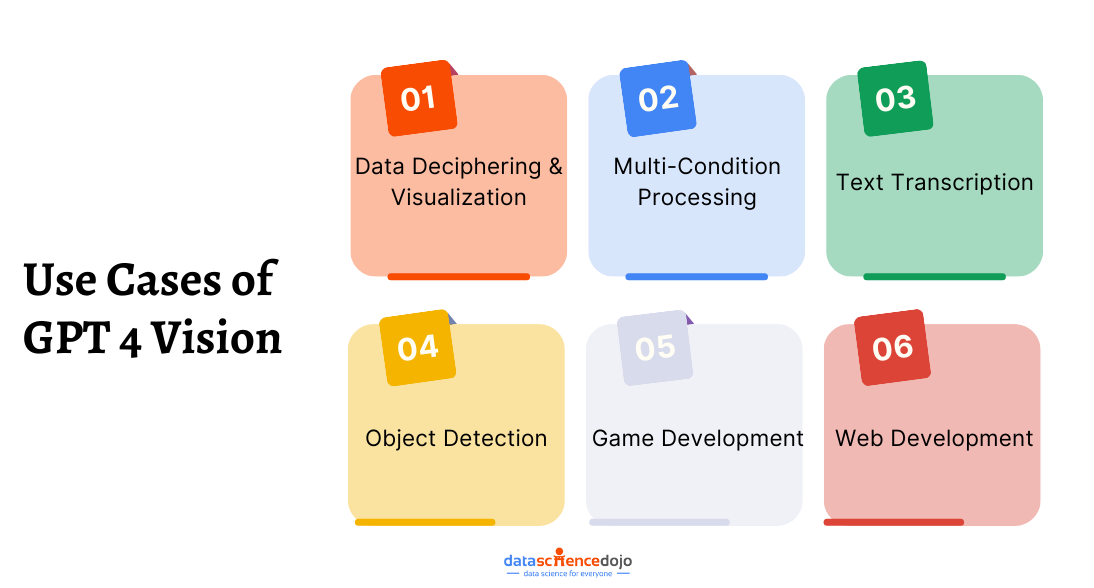

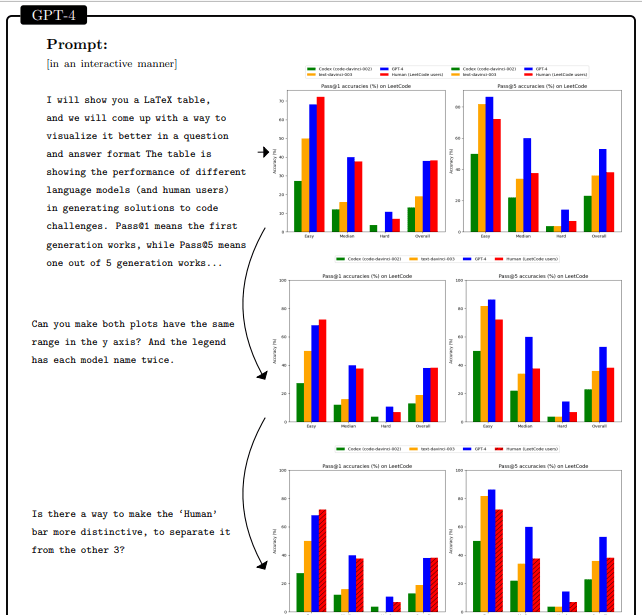

Here are some examples of GPT-4 being capable of performing diverse tasks:

- Data visualization: In this example, GPT-4 was asked to extract data from LaTeX code and produce a plot in Python over the course of a conversation with the user. The model extracted the data correctly and responded appropriately to all user requests, manipulating the data into the right format and adapting the visualization.

- Game development: Given a high-level description of a 3D game, GPT-4 successfully creates a functional game in HTML and JavaScript without any task-specific training or examples.
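To make the data visualization task more concrete, here is a minimal sketch of the kind of script it calls for: pull the numbers out of a LaTeX table, then hand them to a plotting library. This is a hand-written illustration, not GPT-4’s actual output. The table contents, the function names `parse_tabular` and `plot_rows`, and the output file name are all assumptions made for the example, and the parser only handles a simple `tabular` with one header row.

```python
import re

# Hypothetical LaTeX table, invented purely for this illustration.
LATEX_TABLE = r"""
\begin{tabular}{lrr}
\hline
Model & Params (B) & Score \\
\hline
A & 1.5 & 41.2 \\
B & 13 & 55.0 \\
C & 70 & 68.9 \\
\hline
\end{tabular}
"""


def parse_tabular(source):
    """Return (header, rows) from a simple LaTeX tabular environment."""
    match = re.search(
        r"\\begin\{tabular\}\{[^}]*\}(.*?)\\end\{tabular\}", source, re.S
    )
    body = match.group(1).replace(r"\hline", "")
    # LaTeX ends each table row with a double backslash.
    lines = [line.strip() for line in body.split(r"\\") if line.strip()]
    cells = [[cell.strip() for cell in line.split("&")] for line in lines]
    header, data = cells[0], cells[1:]
    # First column is a label; remaining columns are numeric.
    rows = [[row[0]] + [float(value) for value in row[1:]] for row in data]
    return header, rows


def plot_rows(header, rows, path="scores.png"):
    """Draw a bar chart of the last column; requires matplotlib."""
    import matplotlib.pyplot as plt  # lazy import so parsing works without it

    labels = [row[0] for row in rows]
    values = [row[-1] for row in rows]
    fig, ax = plt.subplots()
    ax.bar(labels, values)
    ax.set_xlabel(header[0])
    ax.set_ylabel(header[-1])
    fig.savefig(path)


header, rows = parse_tabular(LATEX_TABLE)
print(header)
print(rows)
```

Calling `plot_rows(header, rows)` afterwards would save the chart to disk. The follow-up requests described in the example (changing the format or adapting the visualization) would amount to small edits to a function like `plot_rows`.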

3. Language Mastery

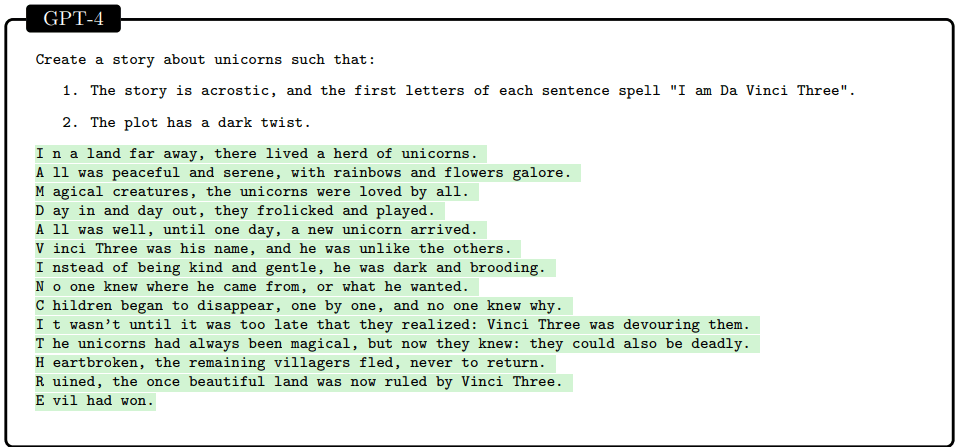

GPT-4’s mastery of language is a distinguishing feature. It can understand and generate human-like text, showcasing fluency, coherence, and creativity. Although the model is trained to predict the next word, the language abilities that emerge go well beyond simple text completion, setting it apart as a more advanced language model.

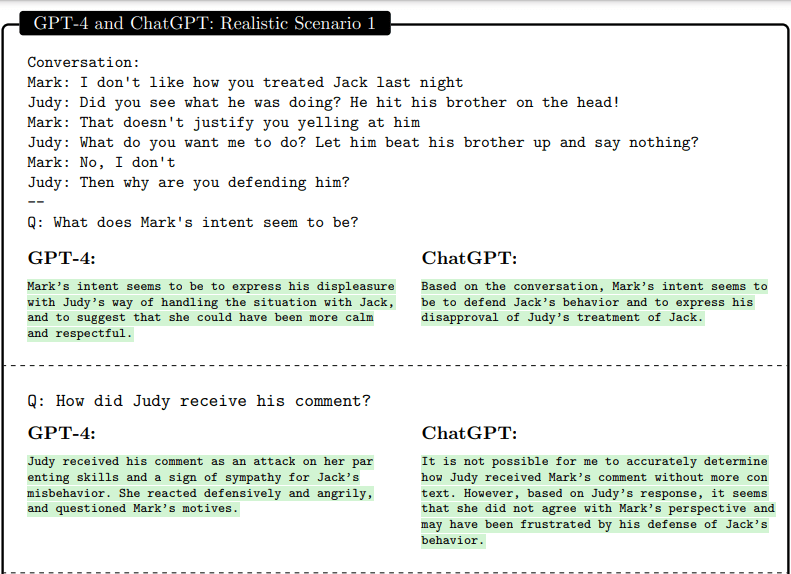

4. Cognitive Traits

GPT-4 exhibits traits associated with intelligence, such as abstraction, comprehension, and understanding of human motives and emotions. It can reason, plan, and learn from experience. These cognitive abilities are among those AGI aims for, which is why GPT-4 is seen as progress in that direction.

Here’s an example of GPT-4 trying to solve a realistic scenario of marital struggle, requiring a lot of nuance to navigate.

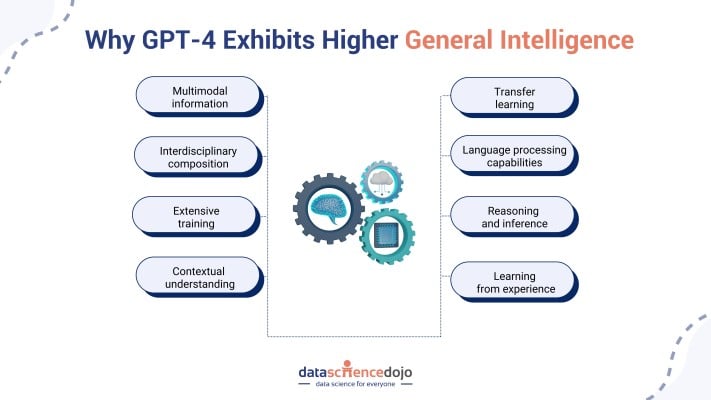

Why Does GPT-4 Exhibit Higher General Intelligence than Previous AI Models?

Some of the features of GPT-4 that contribute to its more general intelligence and task-solving capabilities include:

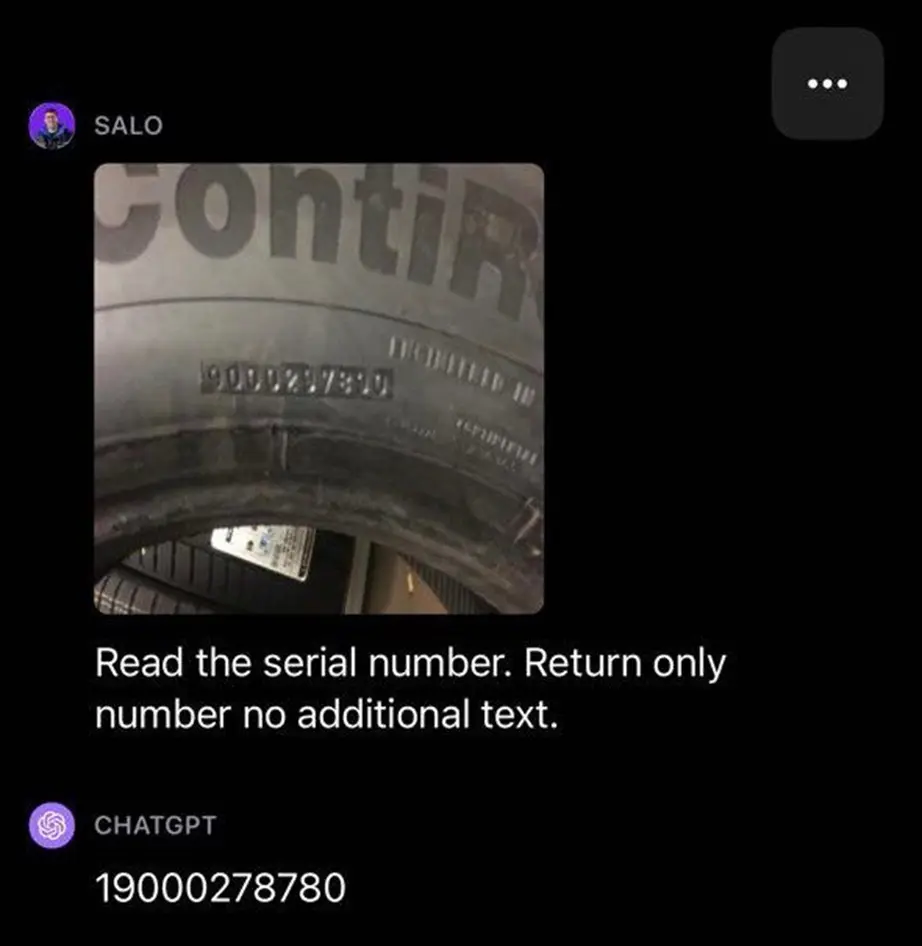

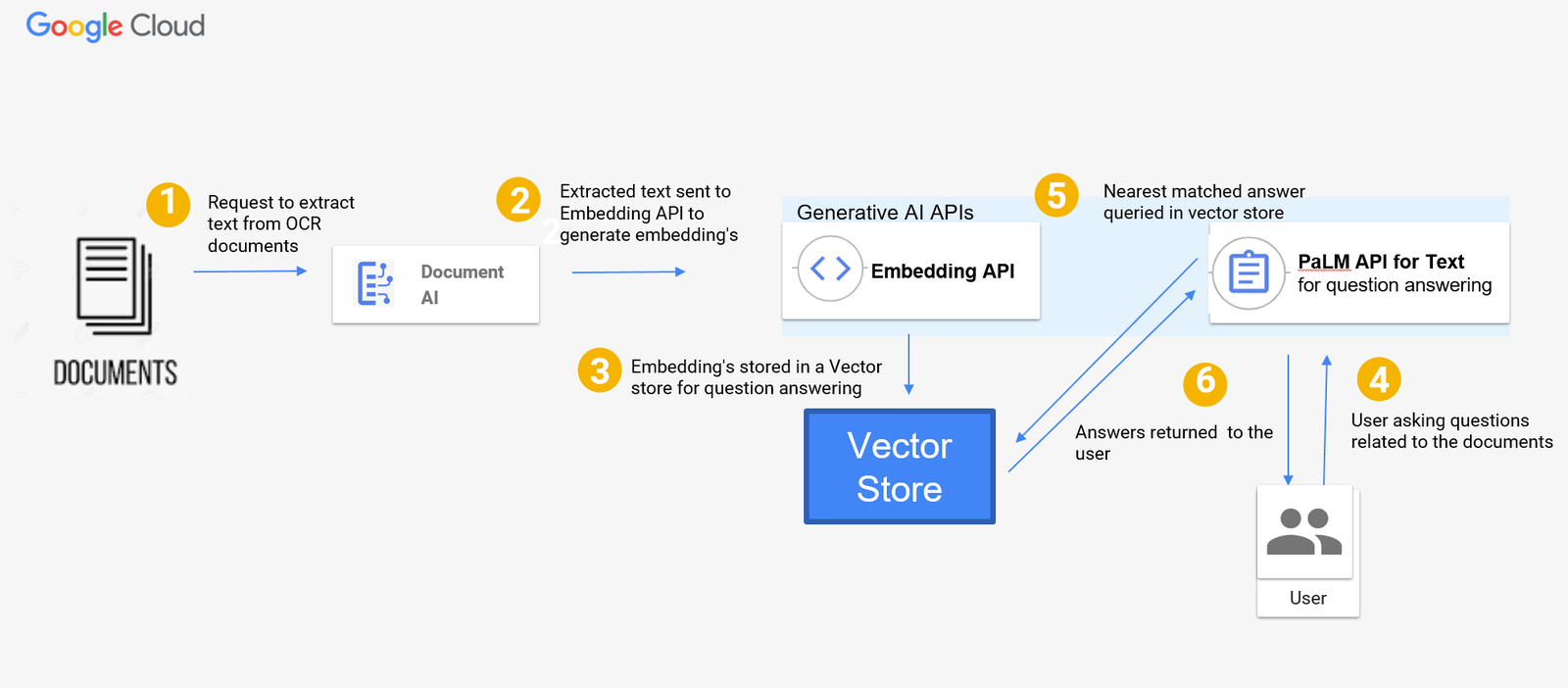

Multimodal Information

GPT-4 can manipulate and understand multimodal information. Given natural language prompts, it can work with vector graphics, 3D scenes, and music data. It can also generate code that compiles into detailed and identifiable images, demonstrating its understanding of visual concepts.
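For readers unfamiliar with the idea of code that produces an image, here is a small sketch of what such output can look like. It is a hand-written example, not something GPT-4 generated: the function name `house_svg`, the shapes, and the colors are assumptions chosen for illustration. The script builds an SVG (a vector graphics format) drawing of a house and checks that the result is well-formed XML.

```python
import xml.etree.ElementTree as ET


def house_svg(width=200, height=200):
    """Build a simple SVG drawing of a house and return it as a string."""
    shapes = [
        # Walls
        '<rect x="50" y="100" width="100" height="80" fill="peru"/>',
        # Roof
        '<polygon points="40,100 100,50 160,100" fill="firebrick"/>',
        # Door
        '<rect x="90" y="140" width="20" height="40" fill="saddlebrown"/>',
        # Sun
        '<circle cx="165" cy="35" r="15" fill="gold"/>',
    ]
    return (
        f'<svg xmlns="http://www.w3.org/2000/svg" '
        f'width="{width}" height="{height}">'
        + "".join(shapes)
        + "</svg>"
    )


svg = house_svg()

# Parse the string back to confirm it is well-formed and list its shapes.
root = ET.fromstring(svg)
tags = [child.tag.split("}")[1] for child in root]
print(tags)

# Save the drawing so it can be opened in a browser.
with open("house.svg", "w", encoding="utf-8") as handle:
    handle.write(svg)
```

Writing code like this requires knowing that a house has walls, a roof above them, and a door at ground level, and then placing those parts using coordinates alone. That is why output of this kind is read as evidence of visual understanding.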

Interdisciplinary Composition

GPT-4 can integrate knowledge and insights from different domains, connecting information from fields such as mathematics, coding, vision, medicine, law, and psychology. This interdisciplinary integration enhances GPT-4’s general intelligence and widens its range of applications.

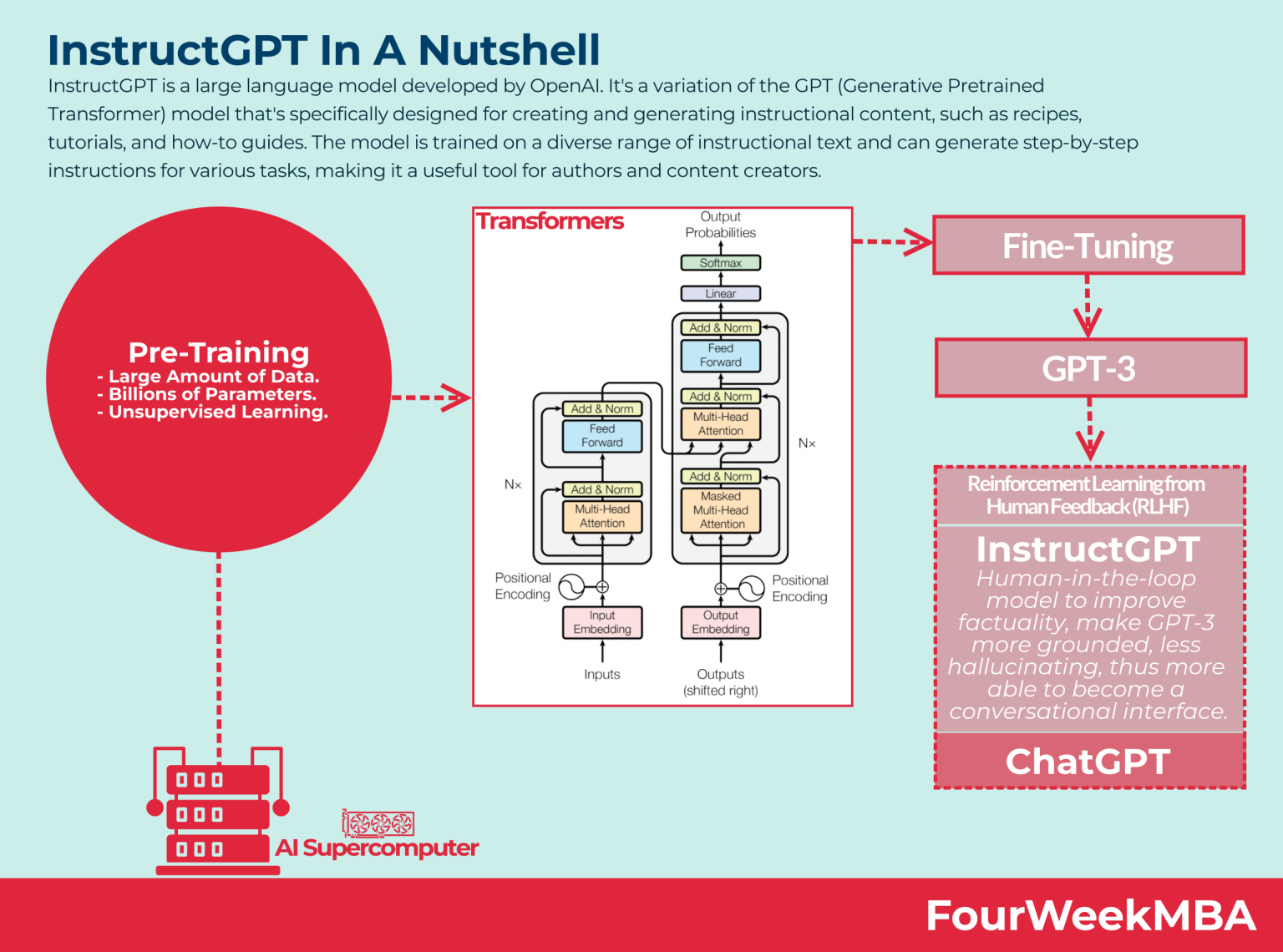

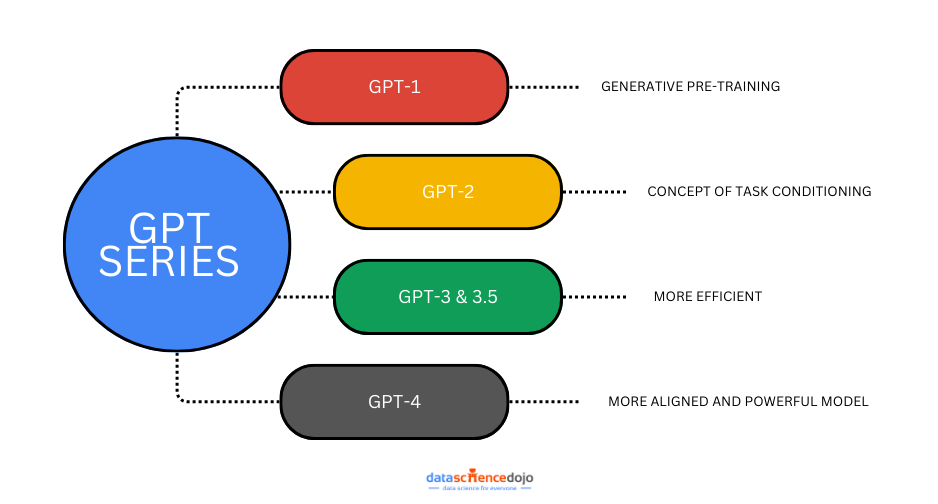

Extensive Training

GPT-4 has been trained on a large corpus of web-text data, allowing it to learn a wide range of knowledge from diverse domains. This extensive training enables GPT-4 to exhibit general intelligence and solve tasks in various domains.

Contextual Understanding

GPT-4 can understand the context of a given input, allowing it to generate more coherent and contextually relevant responses. This contextual understanding enhances its performance in solving tasks across different domains.

Transfer Learning

GPT-4 leverages transfer learning, where it applies knowledge learned from one task to another. This enables GPT-4 to adapt its knowledge and skills to different domains and solve tasks without the need for special prompting or explicit instructions.

Language Processing Capabilities

GPT-4’s advanced language processing capabilities contribute to its general intelligence. It can comprehend and generate human-like natural language, allowing for more sophisticated communication and problem-solving.

Reasoning and Inference

GPT-4 demonstrates the ability to reason and make inferences based on the information provided. This reasoning ability enables GPT-4 to solve complex problems and tasks that require logical thinking and deduction.

Learning from Experience

GPT-4 can learn from the examples and feedback it receives within a conversation and adjust its answers accordingly. This in-context learning allows GPT-4 to adapt to new challenges without retraining, although the model’s weights do not change between conversations.

These features collectively contribute to GPT-4’s more general intelligence and its ability to solve tasks in various domains without the need for specialized prompting.

Wrapping It Up

It is crucial to understand and explore GPT-4’s limitations, as well as the challenges ahead in advancing towards more comprehensive versions of AGI. Nonetheless, GPT-4’s development holds significant implications for the future of AI research and the societal impact of AGI.