Artificial Intelligence is reshaping industries around the world, changing how businesses operate and deliver services. From healthcare, where AI assists in diagnosis and treatment planning, to finance, where it is used to predict market trends and manage risk, the influence of AI is pervasive and growing.

As AI technologies evolve, they create new job roles and demand new skills, particularly in the field of AI engineering. AI engineering is more than just a buzzword; it’s becoming an essential part of the modern job market. Companies are increasingly seeking professionals who can not only develop AI solutions but also ensure these solutions are practical, sustainable, and aligned with business goals.

What is AI Engineering?

AI engineering is the discipline that combines the principles of data science, software engineering, and machine learning to build and manage robust AI systems. It involves not just the creation of AI models but also their integration, scaling, and management within an organization’s existing infrastructure.

The role of an AI engineer is multifaceted. They work at the intersection of various technical domains, requiring a blend of skills to handle data processing, algorithm development, system design, and implementation.

This interdisciplinary nature of AI engineering makes it a critical field for businesses looking to leverage AI to enhance their operations and competitive edge.

Latest Advancements in AI Affecting Engineering

Artificial Intelligence continues to advance at a rapid pace, bringing transformative changes to the field of engineering. These advancements are not just theoretical; they have practical applications that are reshaping how engineers solve problems and design solutions.

Machine Learning Algorithms

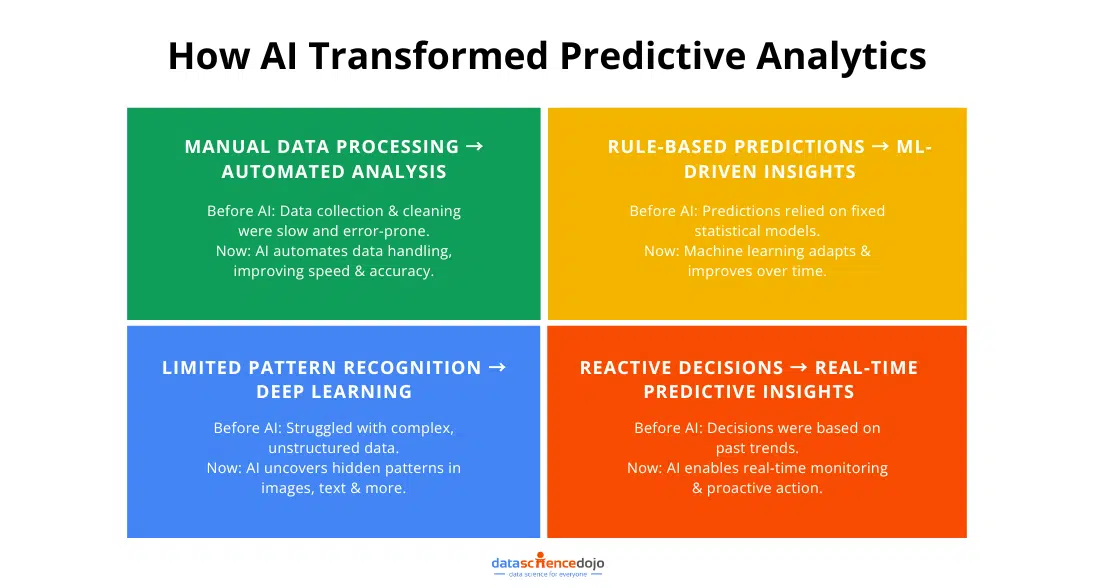

Recent improvements in machine learning algorithms have significantly enhanced their efficiency and accuracy. Engineers now use these algorithms to predict outcomes, optimize processes, and make data-driven decisions faster than ever before.

For example, predictive maintenance in manufacturing uses machine learning to anticipate equipment failures before they occur, reducing downtime and saving costs.

Deep Learning

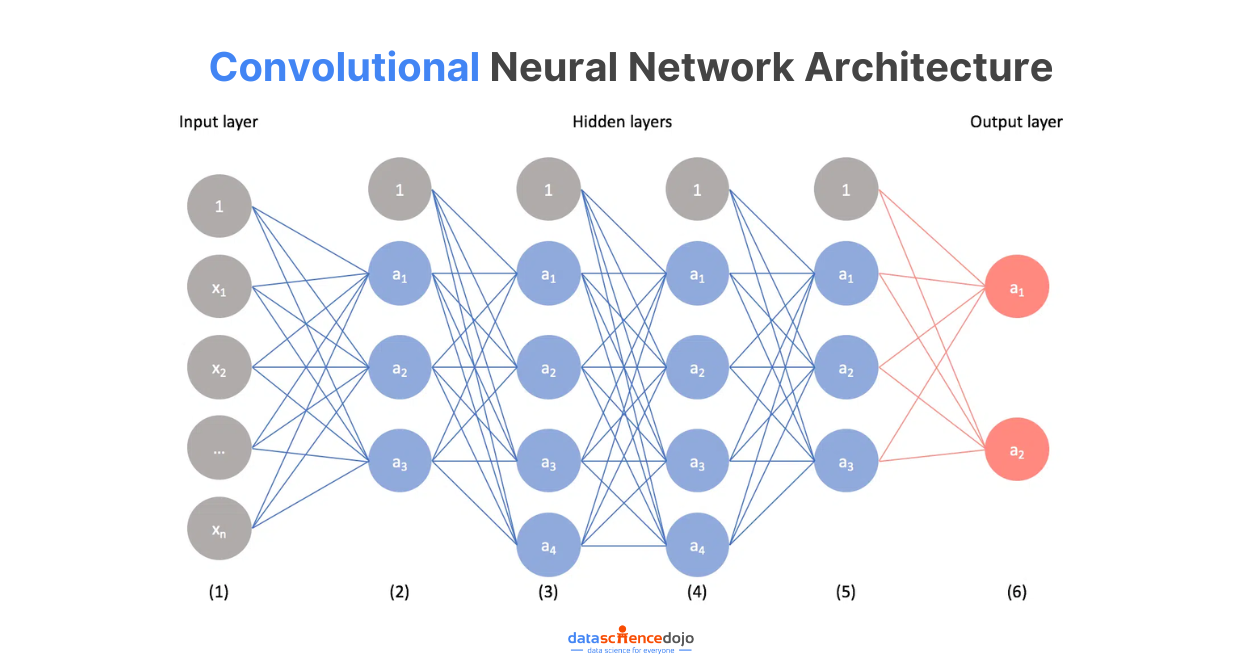

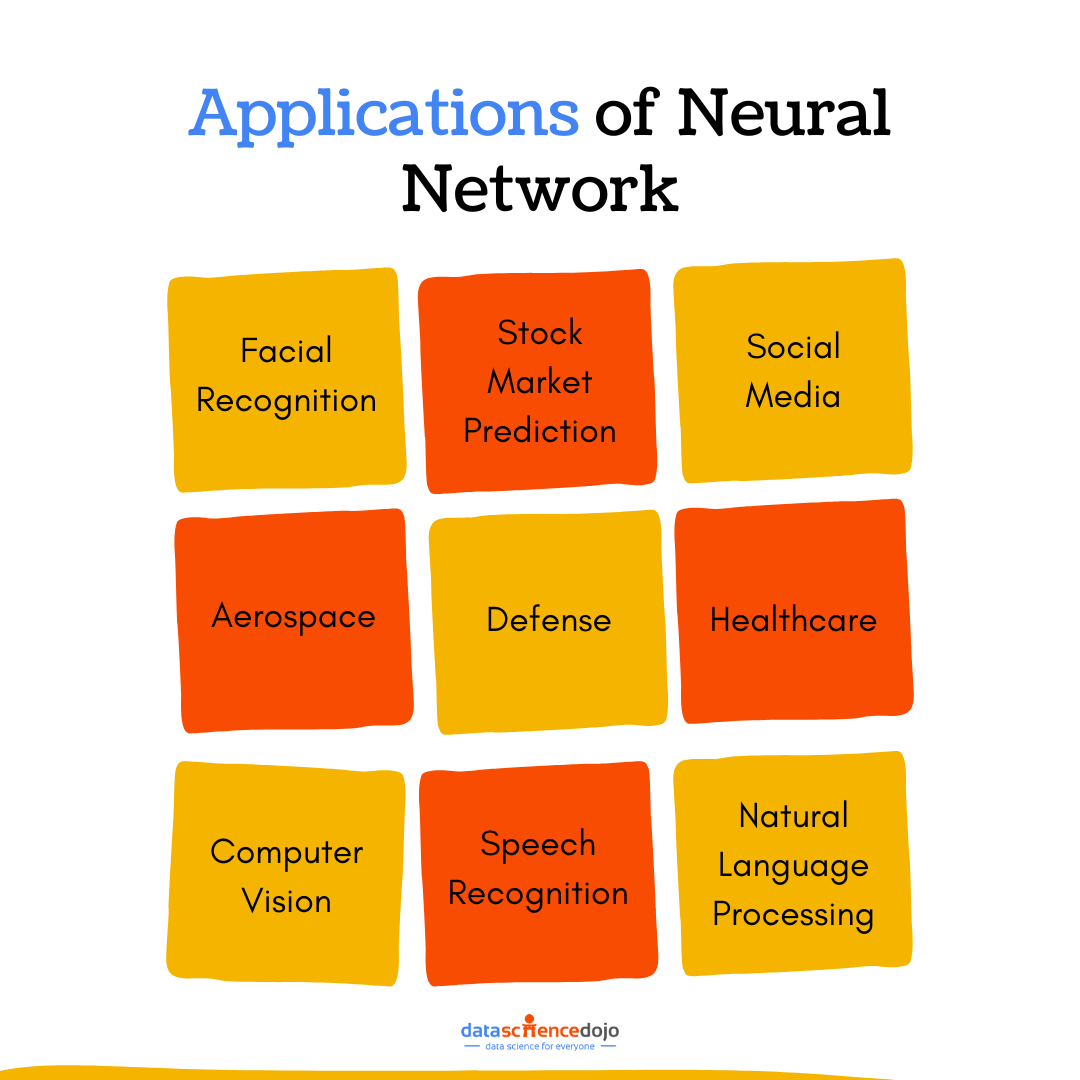

Deep learning, a subset of machine learning, uses structures called neural networks, which are loosely inspired by the human brain. These networks are particularly good at recognizing patterns, which matters in fields like civil engineering, where image-based pattern recognition can help assess structural damage automatically.

Neural Networks

Advances in neural networks have led to better model training techniques and improved performance, especially in complex environments with unstructured data. In software engineering, neural networks are used to improve code generation, bug detection, and even automate routine programming tasks.

AI in Robotics

Robotics combined with AI has led to the creation of more autonomous, flexible, and capable robots. In industrial engineering, robots equipped with AI can perform a variety of tasks from assembly to more complex functions like navigating unpredictable warehouse environments.

Automation

AI-driven automation technologies are now more sophisticated and accessible, enabling engineers to focus on innovation rather than routine tasks. AI-driven automation is widely used in areas such as automotive engineering, where simulations and real-time test data help engineers design safer and more efficient vehicles.

These advancements in AI are not only making engineering more efficient but also more innovative, as they provide new tools and methods for addressing engineering challenges. The ongoing evolution of AI technologies promises even greater impacts in the future, making it an exciting time for professionals in the field.

Importance of AI Engineering Skills in Today’s World

As Artificial Intelligence integrates deeper into various industries, the demand for skilled AI engineers has surged, underscoring the critical role these professionals play in modern economies.

Impact Across Industries

Healthcare

In the healthcare industry, AI engineering is revolutionizing patient care by improving diagnostic accuracy, personalizing treatment plans, and managing healthcare records more efficiently. AI tools help predict patient outcomes, support remote monitoring, and even assist in complex surgical procedures, enhancing both the speed and quality of healthcare services.

Finance

In finance, AI engineers develop algorithms that detect fraudulent activities, automate trading systems, and provide personalized financial advice to customers. These advancements not only secure financial transactions but also democratize financial advice, making it more accessible to the public.

Automotive

The automotive sector benefits from AI engineering through the development of autonomous vehicles and advanced safety features. These technologies reduce human error on the roads and aim to make driving safer and more efficient.

Economic and Social Benefits

Increased Efficiency

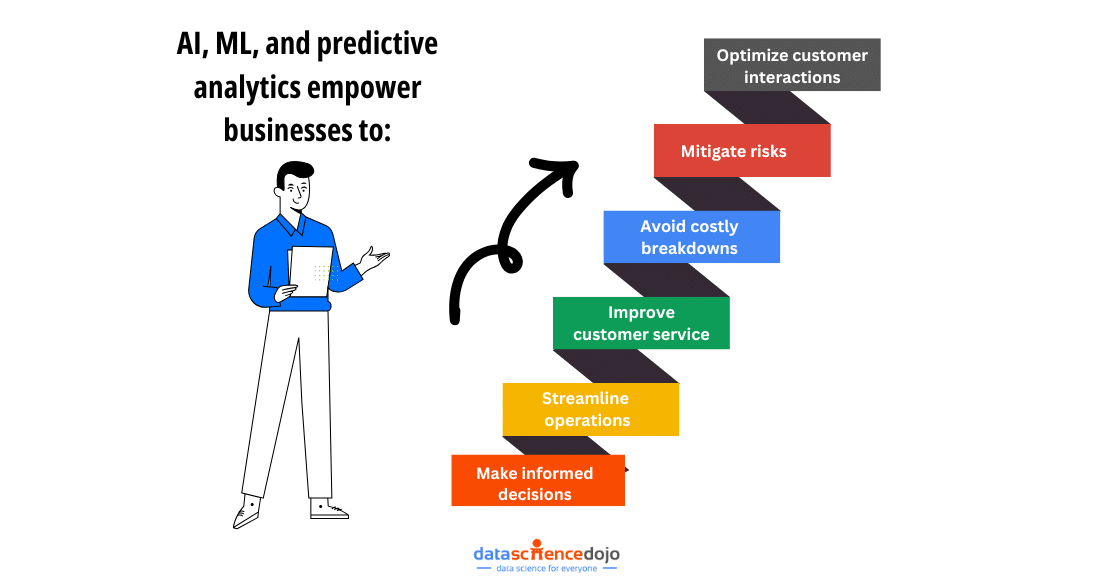

AI engineering streamlines operations across various sectors, reducing costs and saving time. For instance, AI can optimize supply chains in manufacturing or improve energy efficiency in urban planning, leading to more sustainable practices and lower operational costs.

New Job Opportunities

As AI technologies evolve, they create new job roles in the tech industry and beyond. AI engineers are needed not just for developing AI systems but also for ensuring these systems are ethical, practical, and tailored to specific industry needs.

Innovation in Traditional Fields

AI engineering injects a new level of innovation into traditional fields like agriculture or construction. For example, AI-driven agricultural tools can analyze soil conditions and weather patterns to inform better crop management decisions, while AI in construction can lead to smarter building techniques that are environmentally friendly and cost-effective.

The proliferation of AI technology highlights the growing importance of AI engineering skills in today’s world. By equipping the workforce with these skills, industries can not only enhance their operational capacities but also drive significant social and economic advancements.

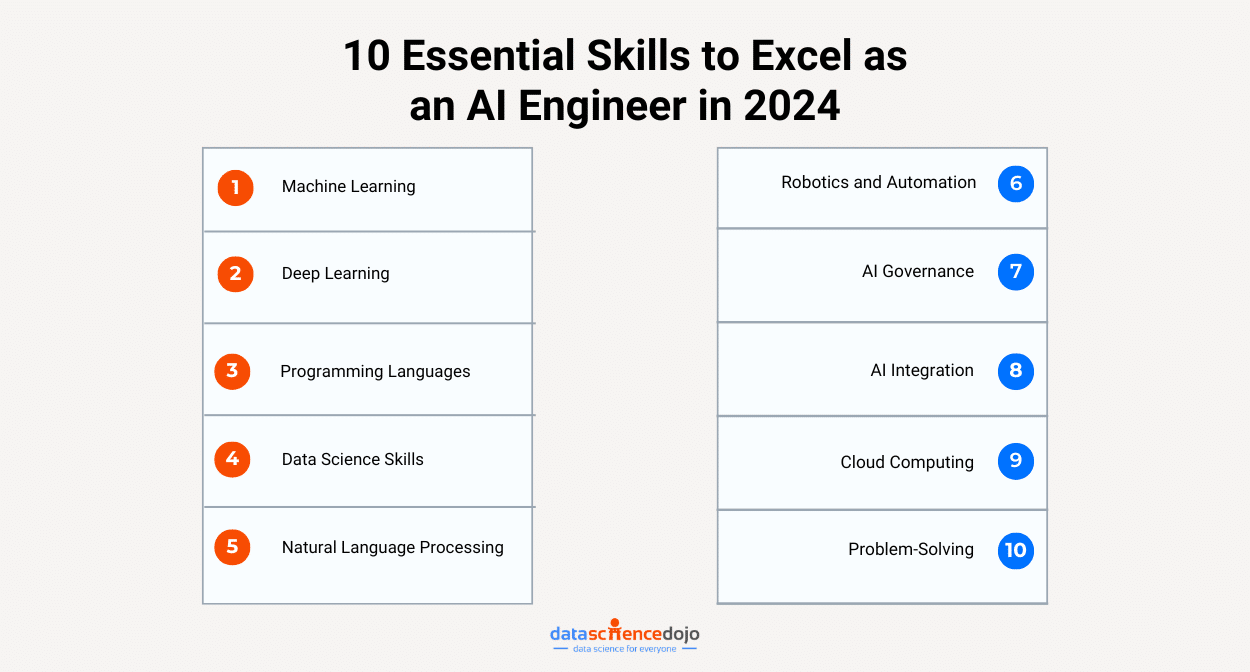

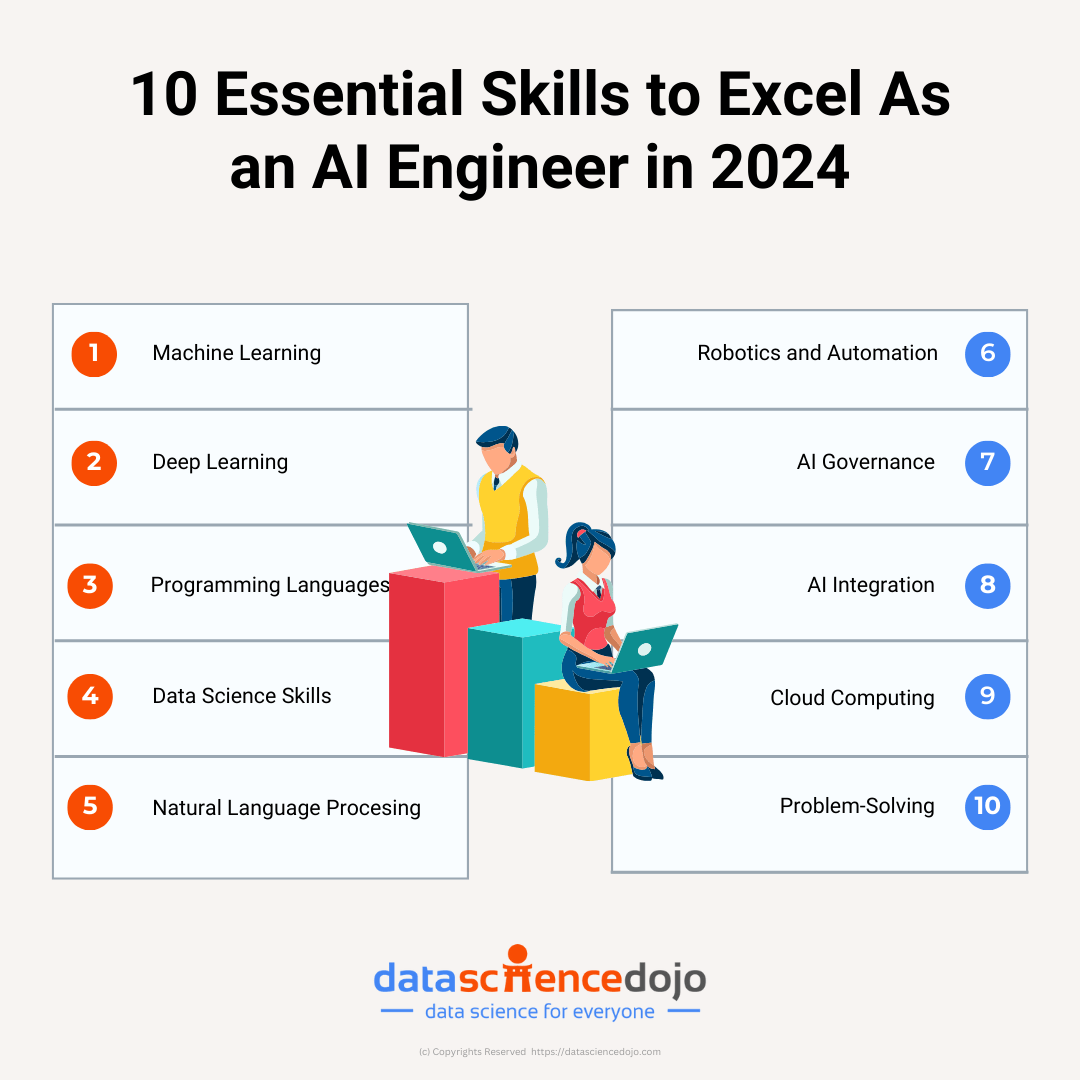

10 Must-Have AI Skills to Help You Excel

1. Machine Learning and Algorithms

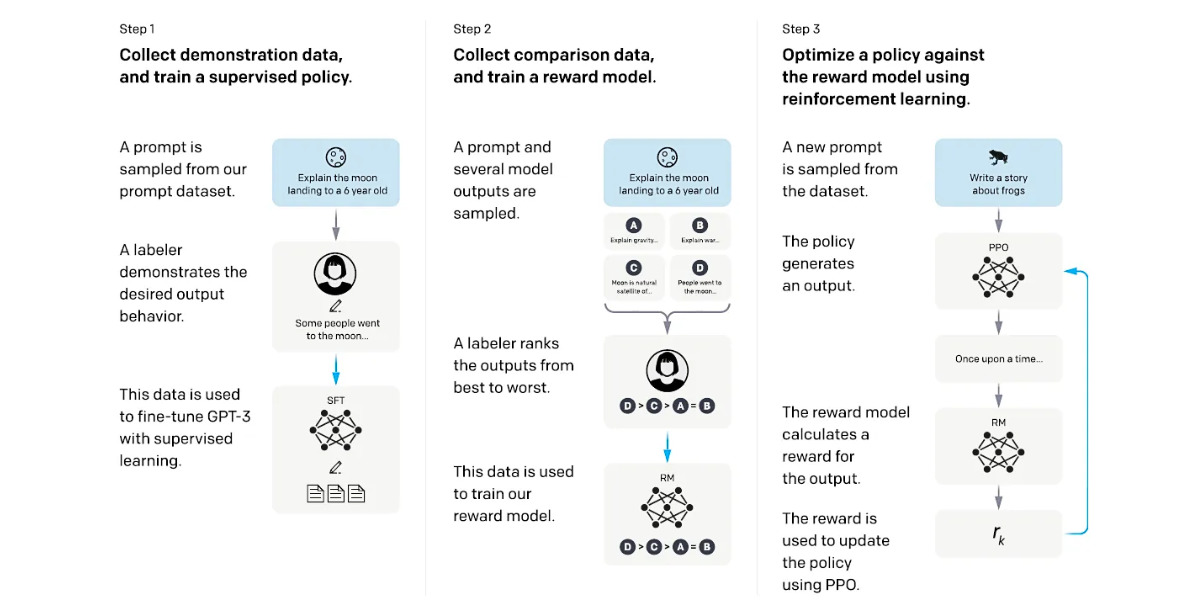

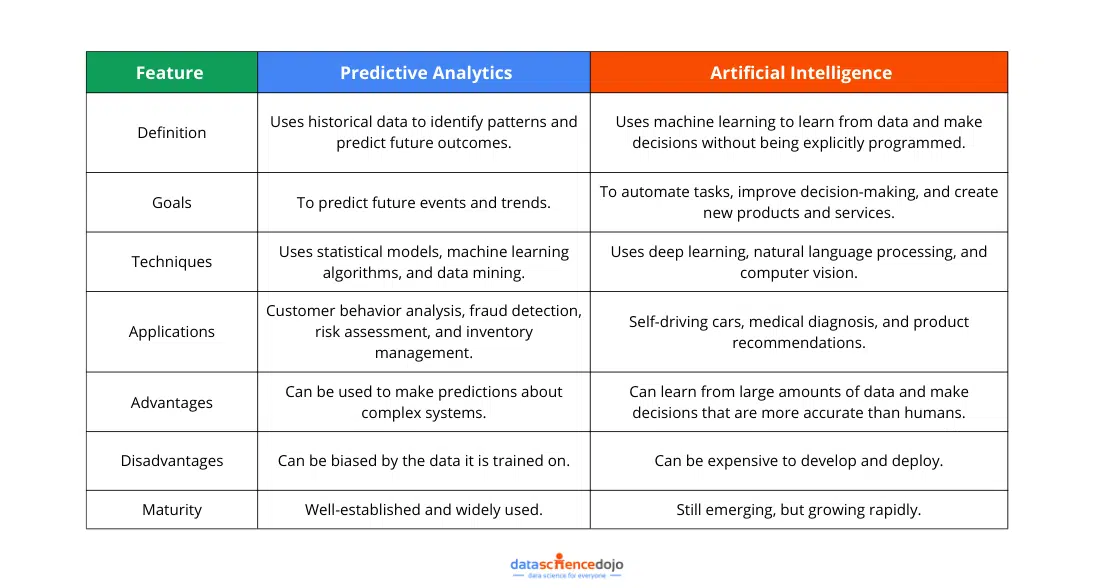

Machine learning algorithms are crucial tools for AI engineers, forming the backbone of many artificial intelligence systems. These algorithms enable computers to learn from data, identify patterns, and make decisions with minimal human intervention. They are commonly divided into three categories: supervised, unsupervised, and reinforcement learning.

For AI engineers, proficiency in these algorithms is vital as it allows for the automation of decision-making processes across diverse industries such as healthcare, finance, and automotive. Additionally, understanding how to select, implement, and optimize these algorithms directly impacts the performance and efficiency of AI models.

AI engineers must be adept in various tasks such as algorithm selection based on the task and data type, data preprocessing, model training and evaluation, hyperparameter tuning, and the deployment and ongoing maintenance of models in production environments.
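
The workflow described above (split the data, train, evaluate, tune a hyperparameter) can be sketched in a few lines. This is a minimal illustration that uses only the Python standard library and a toy two-class dataset; the helper names (`knn_predict`, `accuracy`, `make_toy_data`) and the candidate values for `k` are assumptions made for the example, not a recommended setup.

```python
import random
from collections import Counter


def knn_predict(train, point, k):
    """Classify `point` by majority vote among its k nearest training rows."""
    nearest = sorted(
        train,
        key=lambda row: (row[0] - point[0]) ** 2 + (row[1] - point[1]) ** 2,
    )[:k]
    return Counter(label for _, _, label in nearest).most_common(1)[0][0]


def accuracy(train, held_out, k):
    """Fraction of held-out rows whose label is predicted correctly."""
    hits = sum(knn_predict(train, (x, y), k) == label for x, y, label in held_out)
    return hits / len(held_out)


def make_toy_data(n_per_class=60, seed=0):
    """Two Gaussian clusters, labelled 'a' and 'b' (synthetic data)."""
    rng = random.Random(seed)
    rows = []
    for label, center in (("a", 0.0), ("b", 3.0)):
        for _ in range(n_per_class):
            rows.append((rng.gauss(center, 1.0), rng.gauss(center, 1.0), label))
    rng.shuffle(rows)
    return rows


data = make_toy_data()
split = int(0.75 * len(data))
train, validation = data[:split], data[split:]

# Hyperparameter tuning: try several values of k and keep the one that
# scores best on data the model has not seen during training.
scores = {k: accuracy(train, validation, k) for k in (1, 3, 5, 7, 9)}
best_k = max(scores, key=scores.get)
print("best k:", best_k, "validation accuracy:", scores[best_k])
```

In practice a library such as Scikit-learn would replace the hand-written classifier, and cross-validation would usually replace the single train/validation split.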

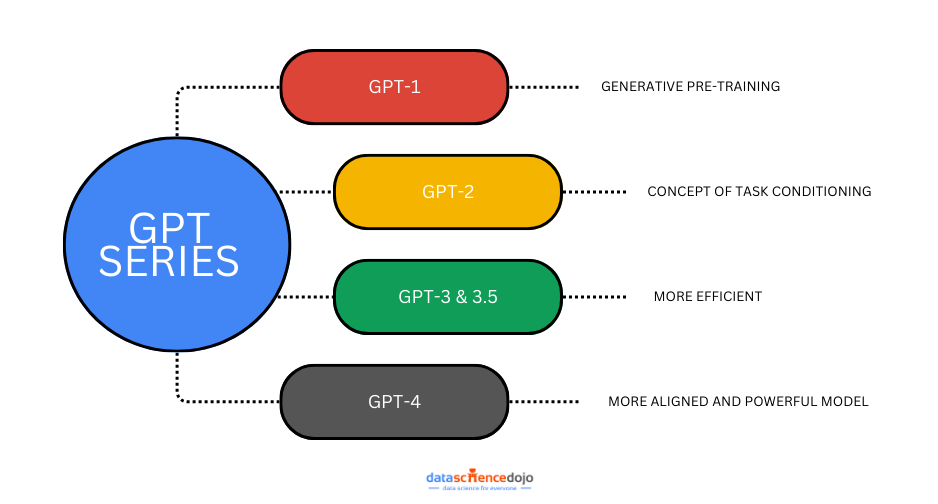

2. Deep Learning

Deep learning is a subset of machine learning based on artificial neural networks, where the model learns to perform tasks directly from text, images, or sounds. Deep learning is important for AI engineers because it is the key technology behind many advanced AI applications, such as natural language processing, computer vision, and audio recognition.

These applications are crucial in developing systems that mimic human cognition or augment capabilities across various sectors, including healthcare for diagnostic systems, automotive for self-driving cars, and entertainment for personalized content recommendations.

AI engineers working with deep learning need to understand the architecture of neural networks, including convolutional and recurrent neural networks, and how to train these models effectively using large datasets.

They also need to be proficient in using frameworks like TensorFlow or PyTorch, which facilitate the design and training of neural networks. Furthermore, understanding regularization techniques to prevent overfitting, optimizing algorithms to speed up training, and deploying trained models efficiently in production are essential skills for AI engineers in this domain.
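
To make the idea of regularization concrete, here is a small sketch of an L2 penalty (often called weight decay) applied during gradient descent. It is deliberately not a neural network: it fits a single-feature linear model in plain Python so the effect is easy to see, and the same penalty term is what frameworks like TensorFlow or PyTorch apply to network weights. The function name `fit_linear`, the learning rate, and the data are assumptions for illustration.

```python
def fit_linear(xs, ys, l2=0.0, lr=0.01, epochs=2000):
    """Fit y ~ w*x + b by gradient descent on mean squared error.

    The optional `l2` term adds l2 * w**2 to the loss, which pulls the
    weight toward zero and limits how closely the model can chase noise.
    """
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_w += 2 * l2 * w  # gradient of the L2 penalty
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [0.1, 2.1, 3.9, 6.2, 7.8]  # roughly y = 2x, with a little noise

w_plain, b_plain = fit_linear(xs, ys)
w_reg, b_reg = fit_linear(xs, ys, l2=1.0)
print("unregularized weight:", round(w_plain, 3))
print("regularized weight:  ", round(w_reg, 3))
```

The regularized weight comes out smaller than the unregularized one. On a model with many parameters, that shrinkage is what discourages overfitting, at the cost of some bias.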

3. Programming Languages

Programming languages are fundamental tools for AI engineers, enabling them to build and implement artificial intelligence models and systems. These languages provide the syntax and structure that engineers use to write algorithms, process data, and interface with hardware and software environments.

Python

Python is perhaps the most critical programming language for AI due to its simplicity and readability, coupled with a robust ecosystem of libraries like TensorFlow, PyTorch, and Scikit-learn, which are essential for machine learning and deep learning. Python’s versatility allows AI engineers to develop prototypes quickly and scale them with ease.

R

R is another important language, particularly valued in statistics and data analysis, making it useful for AI applications that require intensive data processing. R provides excellent packages for data visualization, statistical testing, and modeling that are integral for analyzing complex datasets in AI.

Java

Java offers high performance, portability, and mature tooling for managing large systems, which is crucial for building scalable AI applications. Java is also widely used in big data technologies, supported by JVM-based tools like Apache Hadoop and Apache Spark, which are widely used for data processing in AI.

C++

C++ is valued in AI engineering for its efficiency and control over system resources. It is particularly important in developing AI software that requires real-time execution, such as robotics or games. C++ allows finer control over hardware and graphics processing, making it well suited to applications where latency is a critical factor.

AI engineers should have a strong grasp of these languages to effectively work on a variety of AI projects.

4. Data Science Skills

Data science skills are pivotal for AI engineers because they provide the foundation for developing, tuning, and deploying intelligent systems that can extract meaningful insights from raw data.

These skills encompass a broad range of capabilities from statistical analysis to data manipulation and interpretation, which are critical in the lifecycle of AI model development.

Statistical Analysis and Probability

AI engineers need a solid grounding in statistics and probability to understand and apply various algorithms correctly. These principles help in assessing model assumptions, validity, and tuning parameters, which are crucial for making predictions and decisions based on data.

Data Manipulation and Cleaning

Before even beginning to design algorithms, AI engineers must know how to preprocess data. This includes handling missing values, outlier detection, and normalization. Clean and well-prepared data are essential for building accurate and effective models, as the quality of data directly impacts the outcome of predictive models.
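
The three preprocessing steps named above can be sketched with the standard library alone. This is one reasonable set of choices (median imputation, the 1.5 × IQR rule for outliers, min-max scaling), not the only valid one. The function name `clean_column` and the sample readings are hypothetical.

```python
import statistics


def clean_column(values):
    """Impute missing values, drop outliers, then scale to the range [0, 1]."""
    # 1. Missing values: replace None with the median of the observed values.
    present = [v for v in values if v is not None]
    median = statistics.median(present)
    filled = [median if v is None else v for v in values]

    # 2. Outlier detection: keep values within 1.5 * IQR of the quartiles.
    q1, _, q3 = statistics.quantiles(filled, n=4)
    iqr = q3 - q1
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    kept = [v for v in filled if low <= v <= high]

    # 3. Normalization: min-max scaling (guarding against a constant column).
    lo, hi = min(kept), max(kept)
    if hi == lo:
        return [0.0 for _ in kept]
    return [(v - lo) / (hi - lo) for v in kept]


raw = [12.0, 15.0, None, 14.0, 13.0, 250.0, 16.0]  # one gap, one obvious outlier
cleaned = clean_column(raw)
print(cleaned)
```

The order matters here: imputing before outlier removal means the filled-in value is itself checked against the outlier bounds. Whether to drop, cap, or keep outliers depends on the domain, so treat the drop step as an assumption of this sketch.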

Big Data Technologies

With the growth of data-driven technologies, AI engineers must be proficient in big data platforms like Hadoop, Spark, and NoSQL databases. These technologies help manage large volumes of data beyond what is manageable with traditional databases and are essential for tasks that require processing large datasets efficiently.

Machine Learning and Predictive Modeling

Data science is not just about analyzing data but also about making predictions. Understanding machine learning techniques—from linear regression to complex deep learning networks—is essential. AI engineers must be able to apply these techniques to create predictive models and fine-tune them according to specific data and business requirements.

Data Visualization

The ability to visualize data and model outcomes is crucial for communicating findings effectively to stakeholders. Tools like Matplotlib, Seaborn, or Tableau help in creating understandable and visually appealing representations of complex data sets and results.

In sum, data science skills enable AI engineers to derive actionable insights from data, which is the cornerstone of artificial intelligence applications.

5. Natural Language Processing (NLP)

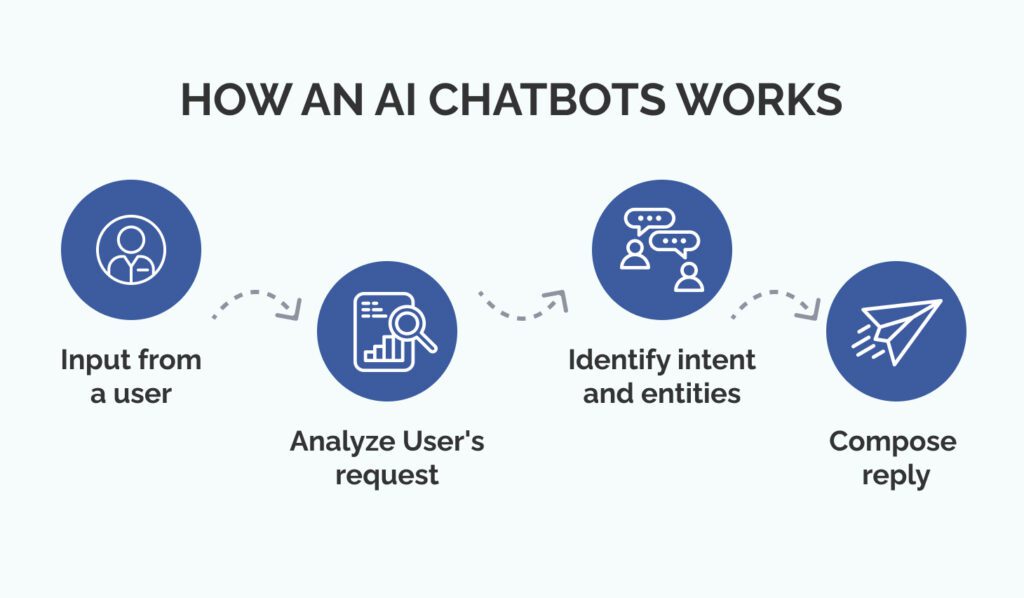

NLP involves programming computers to process and analyze large amounts of natural language data. This technology enables machines to understand and interpret human language, making it possible for them to perform tasks like translating text, responding to voice commands, and generating human-like text.

For AI engineers, NLP is essential in creating systems that can interact naturally with users, extracting information from textual data, and providing services like chatbots, customer service automation, and sentiment analysis. Proficiency in NLP allows engineers to bridge the communication gap between humans and machines, enhancing user experience and accessibility.
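
As a rough illustration of sentiment analysis, the sketch below tokenizes text and scores it against two small word lists. Production systems typically use trained models rather than hand-written lexicons; the word lists, function names, and example sentences here are all assumptions chosen to keep the example self-contained.

```python
import re

# Tiny hand-picked lexicons, for illustration only.
POSITIVE = {"great", "good", "love", "helpful", "fast"}
NEGATIVE = {"bad", "slow", "broken", "hate", "unhelpful"}


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def sentiment(text):
    """Label text as positive, negative, or neutral by counting lexicon hits."""
    score = 0
    for token in tokenize(text):
        if token in POSITIVE:
            score += 1
        elif token in NEGATIVE:
            score -= 1
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


for message in (
    "The support team was great and fast!",
    "The app is slow and broken.",
    "I opened a ticket yesterday.",
):
    print(sentiment(message), "<-", message)
```

A lexicon approach like this misses negation ("not good") and sarcasm, which is one reason learned models dominate real NLP pipelines.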

6. Robotics and Automation

Robotics focuses on designing and programming robots that can perform tasks autonomously. Automation in AI involves applying algorithms that allow machines to perform repetitive tasks without human intervention.

AI engineers involved in robotics and automation can revolutionize industries like manufacturing, logistics, and even healthcare, by improving efficiency, precision, and safety. Knowledge of robotics algorithms, sensor integration, and real-time decision-making is crucial for developing systems that can operate in dynamic and sometimes unpredictable environments.
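
A very small sense-decide-act loop gives a feel for sensor integration and real-time decision-making. The sketch below smooths readings from a hypothetical distance sensor with a moving average, then picks an action from fixed thresholds. The class name `ObstacleMonitor`, the thresholds, and the readings are illustrative assumptions, not values from any real robot.

```python
from collections import deque


class ObstacleMonitor:
    """Smooth noisy distance readings and choose a driving action."""

    def __init__(self, window=3, stop_below=0.5, slow_below=1.5):
        self.readings = deque(maxlen=window)  # keeps only the latest readings
        self.stop_below = stop_below          # metres
        self.slow_below = slow_below          # metres

    def update(self, distance_m):
        """Record one sensor reading and return 'go', 'slow', or 'stop'."""
        self.readings.append(distance_m)
        smoothed = sum(self.readings) / len(self.readings)
        if smoothed < self.stop_below:
            return "stop"
        if smoothed < self.slow_below:
            return "slow"
        return "go"


monitor = ObstacleMonitor()
# The third reading is a one-off sensor glitch; the last few show a real obstacle.
stream = [3.0, 2.8, 0.2, 2.9, 2.7, 1.2, 0.9, 0.4, 0.3, 0.2]
actions = [monitor.update(reading) for reading in stream]
print(actions)
```

The moving average keeps a single bad reading from triggering a stop, but it also delays the reaction to a real obstacle. That trade-off between noise rejection and response time is typical of real-time robotics work.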

7. Ethics and AI Governance

Ethics and AI governance encompass understanding the moral implications of AI, ensuring technologies are used responsibly, and adhering to regulatory and ethical standards. As AI becomes more prevalent, AI engineers must ensure that the systems they build are fair and transparent, and do not infringe on privacy or human rights.

This includes deploying unbiased algorithms and protecting data privacy. Understanding ethics and governance is critical not only for building trust with users but also for complying with increasing global regulations regarding AI.
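
One way to start checking for bias is to compare how often a model gives a favorable outcome to different groups. The sketch below computes per-group selection rates and the gap between them, a measure often called the demographic parity difference. It is only one of several fairness metrics, and the group labels and decisions are made-up data for illustration.

```python
def selection_rates(records):
    """Return the share of favorable decisions per group.

    `records` is an iterable of (group, approved) pairs.
    """
    totals, approved = {}, {}
    for group, ok in records:
        totals[group] = totals.get(group, 0) + 1
        approved[group] = approved.get(group, 0) + int(ok)
    return {group: approved[group] / totals[group] for group in totals}


def demographic_parity_gap(records):
    """Difference between the highest and lowest group selection rates."""
    rates = selection_rates(records)
    return max(rates.values()) - min(rates.values())


# Hypothetical model decisions: 8 of 10 approved in group A, 5 of 10 in group B.
decisions = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 5 + [("B", False)] * 5
)
print(selection_rates(decisions))
print("parity gap:", round(demographic_parity_gap(decisions), 2))
```

A large gap does not prove a model is unfair, and a small one does not prove it is fair. It is a signal that prompts a closer look at the data and the decision process.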

8. AI Integration

AI integration involves embedding AI capabilities into existing systems and workflows without disrupting the underlying processes.

For AI engineers, the ability to integrate AI smoothly means they can enhance the functionality of existing systems, bringing about significant improvements in performance without the need for extensive infrastructure changes. This skill is essential for ensuring that AI solutions deliver practical benefits and are adopted widely across industries.
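
A common integration pattern is to put the model behind the same interface the existing system already uses, and to fall back to the old logic when the model fails or is unsure. The sketch below assumes a hypothetical ticket-routing system; `legacy_route`, `toy_model`, and the confidence threshold are stand-ins invented for the example.

```python
from typing import Callable, Tuple


def legacy_route(ticket: str) -> str:
    """Stand-in for an existing rule-based router (hypothetical)."""
    return "billing" if "invoice" in ticket.lower() else "general"


def make_ai_router(
    model: Callable[[str], Tuple[str, float]],
    fallback: Callable[[str], str],
    min_confidence: float = 0.8,
) -> Callable[[str], str]:
    """Wrap a model so callers see the same signature as the legacy router."""

    def route(ticket: str) -> str:
        try:
            label, confidence = model(ticket)
        except Exception:
            # If the model is unavailable, the existing process keeps working.
            return fallback(ticket)
        return label if confidence >= min_confidence else fallback(ticket)

    return route


def toy_model(ticket: str) -> Tuple[str, float]:
    """Stand-in for a trained classifier returning (label, confidence)."""
    if "password" in ticket.lower():
        return "account", 0.95
    return "general", 0.40


route = make_ai_router(toy_model, legacy_route)
print(route("I forgot my password"))       # confident model prediction
print(route("Question about my invoice"))  # low confidence, so rules decide
```

Because `route` takes a string and returns a string just like `legacy_route`, the calling code does not need to change, which is the point of integrating without disrupting existing workflows.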

9. Cloud and Distributed Computing

Cloud and distributed computing involves using cloud platforms and distributed systems to deploy, manage, and scale AI applications. It allows vast amounts of data and computation to be spread across multiple machines and locations.

AI engineers must be familiar with cloud and distributed computing to leverage the computational power and storage capabilities necessary for large-scale AI tasks. Skills in cloud platforms like AWS, Azure, and Google Cloud are crucial for deploying scalable and accessible AI solutions. These platforms also facilitate collaboration, model training, and deployment, making them indispensable in the modern AI landscape.
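
The core idea behind distributed processing, splitting work into batches and handing them to multiple workers, can be shown on a single machine. In the sketch below a local thread pool stands in for a cluster; on a cloud platform the workers would be separate machines or containers. The function `score_batch` is a placeholder for real model inference, and the batch size and worker count are arbitrary assumptions.

```python
from concurrent.futures import ThreadPoolExecutor


def chunk(items, size):
    """Split a list into consecutive batches of at most `size` items."""
    return [items[i:i + size] for i in range(0, len(items), size)]


def score_batch(batch):
    """Stand-in for running model inference on one batch."""
    return [x * x for x in batch]


def score_in_parallel(items, batch_size=4, workers=3):
    """Fan batches out to a pool of workers and gather results in order."""
    batches = chunk(items, batch_size)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Executor.map yields results in the same order as the inputs.
        results = list(pool.map(score_batch, batches))
    return [value for batch in results for value in batch]


inputs = list(range(10))
print(score_in_parallel(inputs))
```

Frameworks such as Spark apply the same partition, process, and combine pattern across many machines, and add the fault tolerance and data movement that this sketch leaves out.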

Taken together, the skills covered so far equip AI engineers not only to develop innovative solutions but also to ensure those solutions are ethically sound, effectively integrated, and capable of operating at scale, meeting the broad and evolving demands of the industry.

10. Problem-solving and Creative Thinking

Problem-solving and creative thinking in the context of AI engineering involve the ability to approach complex challenges with innovative solutions and a flexible mindset. This skill set is about finding efficient, effective, and sometimes unconventional ways to address technical hurdles, develop new algorithms, and adapt existing technologies to novel applications.

For AI engineers, problem-solving and creative thinking are indispensable because they operate at the forefront of technology where standard solutions often do not exist. The ability to think creatively enables engineers to devise unique models that can overcome the limitations of existing AI systems or explore new areas of AI applications.

Additionally, problem-solving skills are crucial when algorithms fail to perform as expected or when integrating AI into complex systems, requiring a deep understanding of both the technology and the problem domain.

This combination of creativity and problem-solving drives innovation in AI, pushing the boundaries of what machines can achieve and opening up new possibilities for technological advancement and application.

Empowering Your AI Engineering Career

In conclusion, mastering the skills outlined—from machine learning algorithms and programming languages to ethics and cloud computing—is crucial for any aspiring AI engineer.

These competencies will not only enhance your ability to develop innovative AI solutions but also ensure you are prepared to tackle the ethical and practical challenges of integrating AI into various industries. Embrace these skills to stay competitive and influential in the ever-evolving field of artificial intelligence.