After DALL-E 3 and GPT-4, OpenAI has now introduced Sora as it steps into the realm of video generation with artificial intelligence. Let’s take a look at what we know about the platform so far and what it has to offer.

What is Sora?

Sora is a new generative text-to-video model that can create videos up to a minute long from a text prompt. It turns the prompt into complex, detailed visual scenes, drawing on its understanding of both the text and how objects exist in the physical world. The characters it generates can also express emotions.

Source: OpenAI

The above video was generated by using the following textual prompt on Sora:

Several giant wooly mammoths approach, treading through a snowy meadow, their long wooly fur lightly blows in the wind as they walk, snow covered trees and dramatic snow capped mountains in the distance, mid afternoon light with wispy clouds; and a sun high in the distance creates a warm glow, The low camera view is stunning, capturing the large furry mammal with beautiful photography, depth of field.

While it is a text-to-video generative model, OpenAI highlights that Sora can work with a diverse range of prompts, including existing images and videos. This enables the model to perform a variety of image and video editing tasks: it can create perfectly looping videos, extend videos forward or backward in time, and animate static images.

The model also supports image generation and can interpolate between two different videos, producing smooth transitions between different scenes.

What is the current state of Sora?

Currently, OpenAI has only provided limited availability of Sora, primarily to graphic designers, filmmakers, and visual artists. The goal is to have people outside of the organization use the model and provide feedback. The human-interaction feedback will be crucial in improving the model’s overall performance.

OpenAI has also highlighted some weaknesses in the current model. It can make errors when simulating the physics of complex scenes, it may confuse spatial details, and it has trouble understanding specific instances of cause and effect in videos.

Now that we have an introduction to OpenAI’s new text-to-video model, let’s dig deeper into it.

OpenAI’s methodology to train generative models of videos

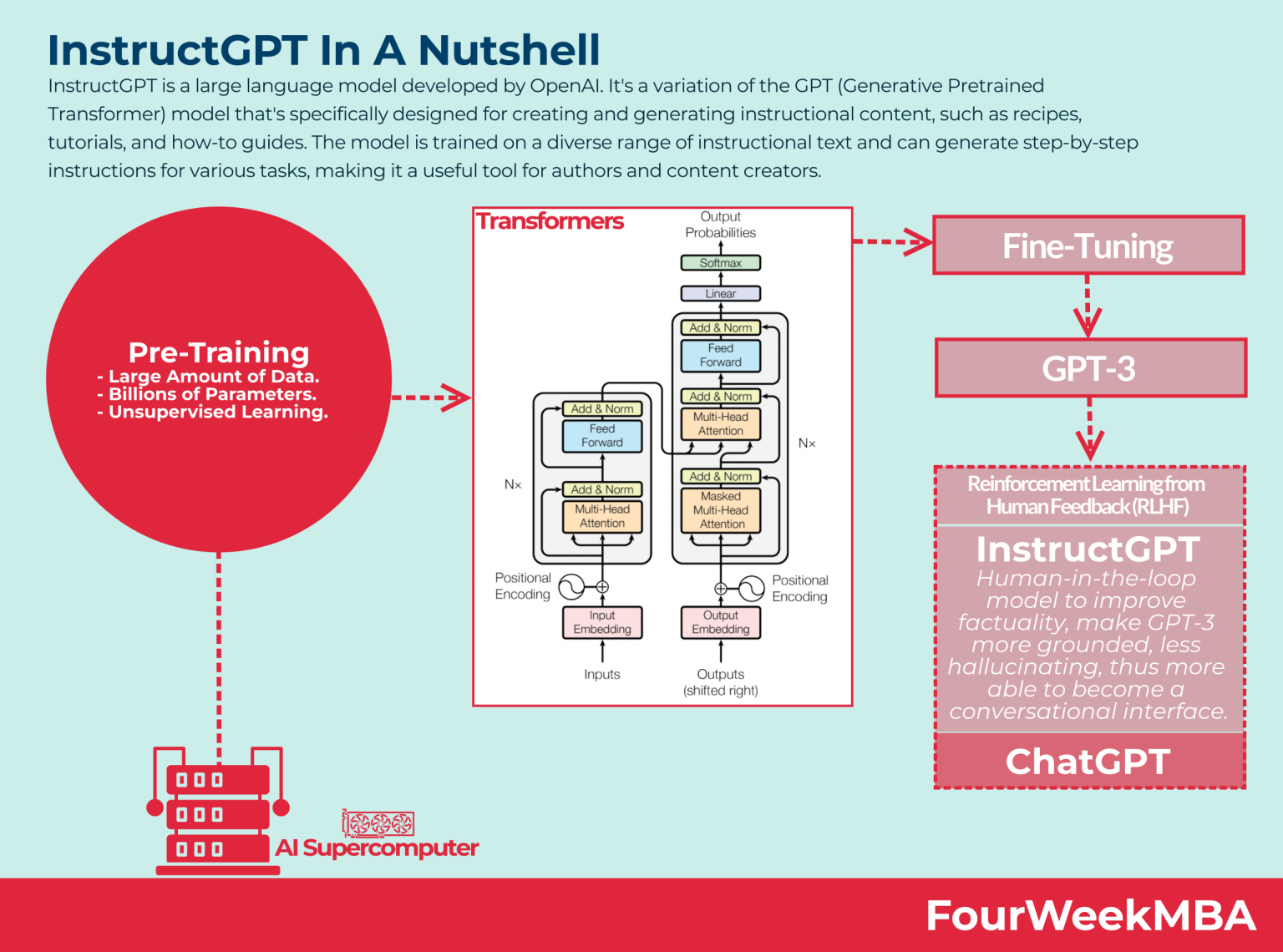

As explained in a research article by OpenAI, its generative models of video are inspired by large language models (LLMs). The inspiration comes from the ability of LLMs to unify diverse modalities of text, such as code, math, and multiple natural languages.

While LLMs operate on text tokens, Sora operates on visual patches. Patches are a representation that lets generative models be trained on diverse types of videos and images, and OpenAI reports that they are both scalable and effective for model training.

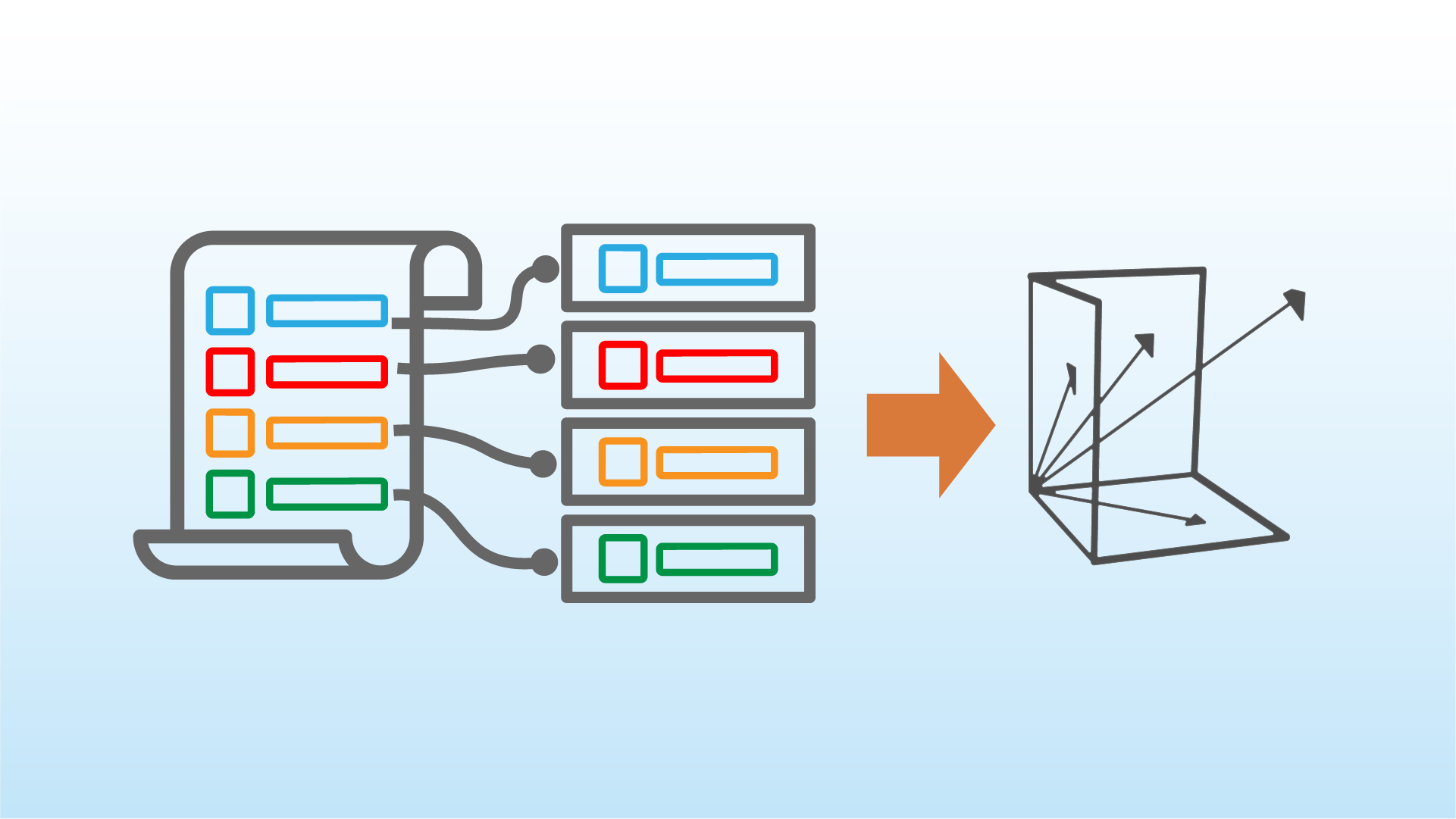

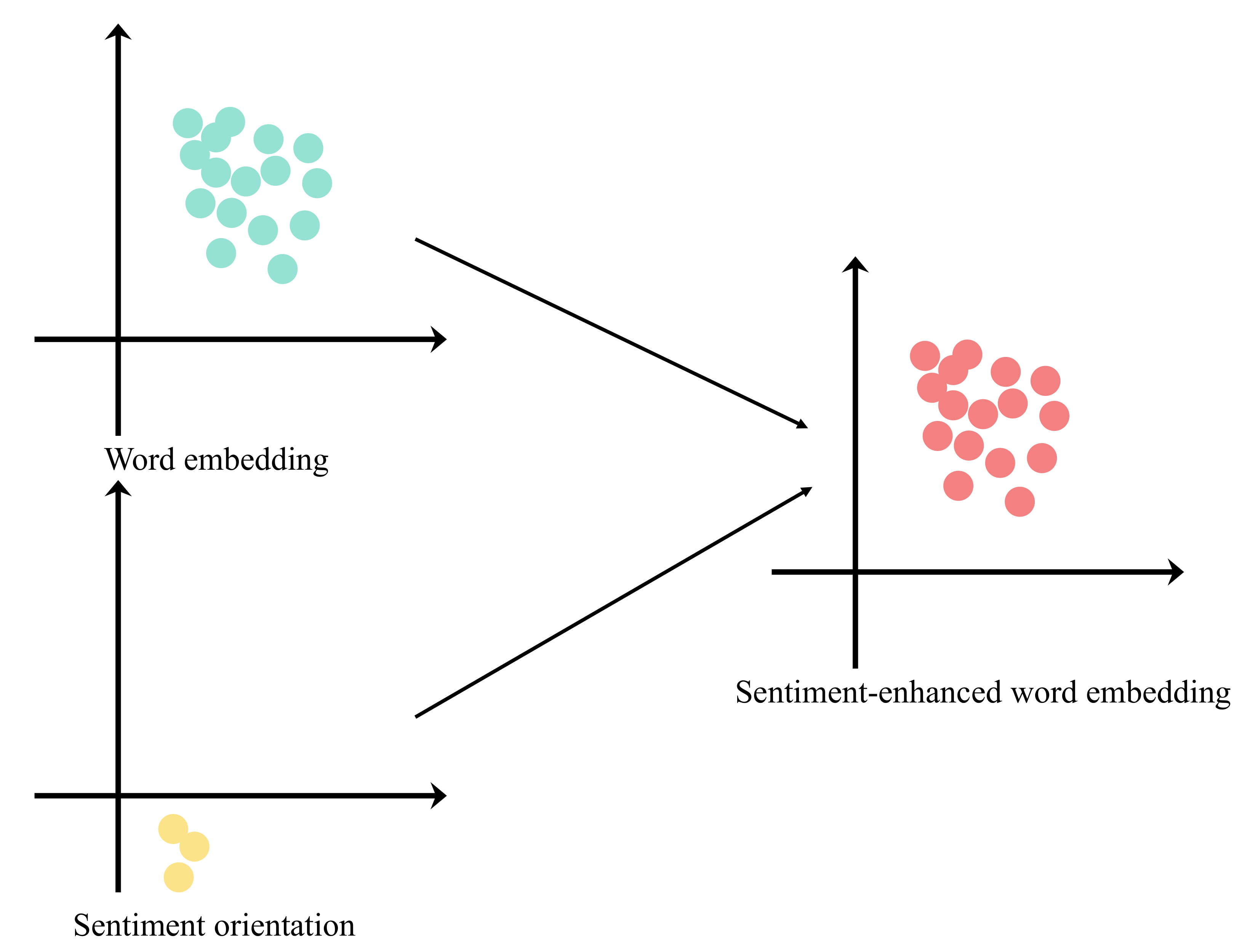

Compression of visual data to create patches

To see how Sora creates complex, high-quality videos, we first need to understand how the visual patches it relies on are created. OpenAI trains a network that reduces the dimensionality of visual data: it takes raw video as input and compresses it into a lower-dimensional latent space.

The result is a latent representation that is compressed both temporally and spatially, and it is from this representation that patches are extracted. Sora is trained on, and generates videos within, this compressed latent space. OpenAI also trains a corresponding decoder model that maps the generated latent representations back to pixel space.
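To make the compression step concrete, here is a minimal sketch in Python with NumPy. It is not OpenAI's network, which is learned and has not been published. Average pooling stands in for the encoder and simple repetition stands in for the decoder, and the function names `encode` and `decode` as well as the downsampling factors are assumptions chosen purely for illustration.

```python
import numpy as np

def encode(video, t_factor=2, s_factor=4):
    """Toy 'encoder': average-pool a video over time and space.

    video has shape (T, H, W, C). A real encoder is a trained network;
    pooling is only a stand-in that shows how the shape shrinks.
    """
    T, H, W, C = video.shape
    assert T % t_factor == 0 and H % s_factor == 0 and W % s_factor == 0
    blocks = video.reshape(T // t_factor, t_factor,
                           H // s_factor, s_factor,
                           W // s_factor, s_factor, C)
    # Average inside each (time, height, width) block.
    return blocks.mean(axis=(1, 3, 5))

def decode(latent, t_factor=2, s_factor=4):
    """Toy 'decoder': map a latent back to pixel-space shape by repetition."""
    out = latent.repeat(t_factor, axis=0)
    out = out.repeat(s_factor, axis=1)
    return out.repeat(s_factor, axis=2)

rng = np.random.default_rng(0)
video = rng.random((16, 64, 64, 3))   # 16 frames of 64x64 RGB
latent = encode(video)                # fewer frames, smaller frames
restored = decode(latent)             # back to the original shape

print(video.shape, latent.shape, restored.shape)
```

The latent here is smaller along the time axis as well as along height and width, which is what "compressed both temporally and spatially" means. The toy decoder only restores the shape, not the lost detail; a trained decoder would aim to reconstruct the pixels much more faithfully.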

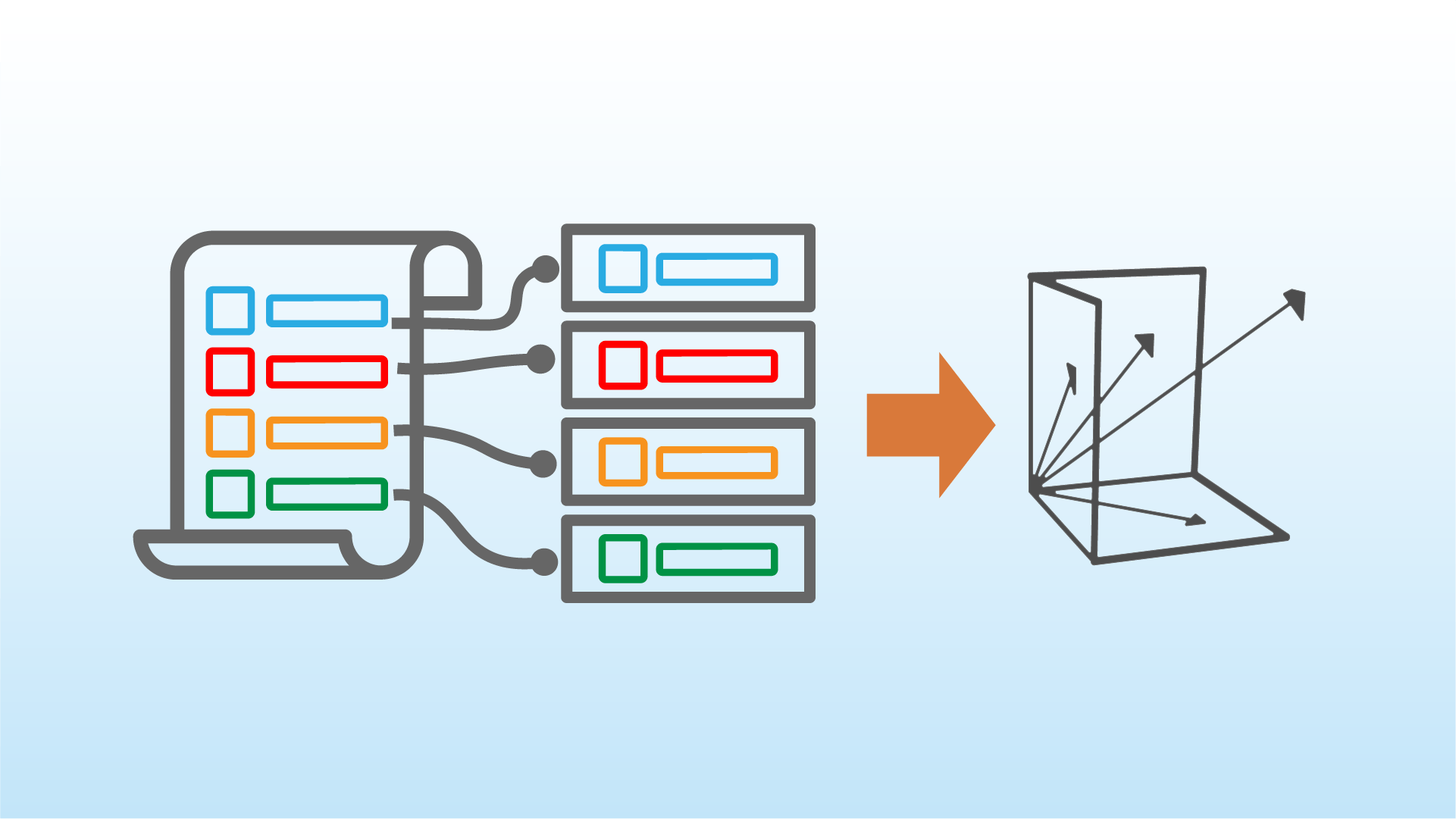

Generation of spacetime latent patches

Given a compressed video input, the model extracts a sequence of spacetime patches, which act as transformer tokens. The same scheme works for images, since an image is just a video with a single frame. This patch-based representation enables the model to train on videos and images of different resolutions, durations, and aspect ratios. At inference time, it also gives control over the size of the generated video: randomly initialized patches are arranged in a grid of the appropriate size.
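The idea of cutting a latent video into spacetime patches can be sketched with a few NumPy reshapes. This is an illustration under assumptions, not Sora's actual code: the patch sizes (`pt`, `ph`, `pw`), the channel count, and both function names are hypothetical.

```python
import numpy as np

def to_spacetime_patches(latent, pt=2, ph=4, pw=4):
    """Split a latent video (T, H, W, C) into flattened spacetime patches.

    Returns shape (num_patches, pt * ph * pw * C): one row per token.
    """
    T, H, W, C = latent.shape
    assert T % pt == 0 and H % ph == 0 and W % pw == 0
    x = latent.reshape(T // pt, pt, H // ph, ph, W // pw, pw, C)
    x = x.transpose(0, 2, 4, 1, 3, 5, 6)   # grid axes first, patch contents last
    return x.reshape(-1, pt * ph * pw * C)

def from_spacetime_patches(tokens, grid, pt=2, ph=4, pw=4):
    """Inverse: arrange tokens on a (gt, gh, gw) grid to rebuild a latent video."""
    gt, gh, gw = grid
    C = tokens.shape[1] // (pt * ph * pw)
    x = tokens.reshape(gt, gh, gw, pt, ph, pw, C)
    x = x.transpose(0, 3, 1, 4, 2, 5, 6)   # interleave grid and patch axes again
    return x.reshape(gt * pt, gh * ph, gw * pw, C)

rng = np.random.default_rng(0)
wide = rng.random((8, 16, 32, 4))   # a widescreen latent
tall = rng.random((4, 32, 16, 4))   # a shorter, vertical latent

wide_tokens = to_spacetime_patches(wide)
tall_tokens = to_spacetime_patches(tall)
print(wide_tokens.shape, tall_tokens.shape)

# Choosing the grid of randomly initialized patches fixes the output size.
noise = rng.standard_normal((2 * 3 * 5, wide_tokens.shape[1]))
canvas = from_spacetime_patches(noise, grid=(2, 3, 5))
print(canvas.shape)
```

Both inputs become sequences of tokens with the same width, just different lengths, which is why one transformer can handle different resolutions, durations, and aspect ratios. In the other direction, the chosen grid alone determines the duration and frame size of the latent that comes out.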

What is Sora, architecturally?

Sora is a diffusion transformer: given noisy patches as input, it is trained to predict the original, clean patches. Diffusion transformers have produced effective results across various domains, and OpenAI reports that they scale effectively as video models too, with sample quality improving as training compute increases.
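The training objective described above, noisy patches in and clean patches out, can be sketched in a few lines of NumPy. Everything specific here is an assumption made for illustration: the noise blend in `add_noise` is a simplified schedule, and `denoise` is a single linear map standing in for the transformer, which in Sora's case is far larger and not public.

```python
import numpy as np

rng = np.random.default_rng(0)

def add_noise(clean, keep=0.5):
    """Blend clean patches with Gaussian noise (assumed, simplified schedule)."""
    noise = rng.standard_normal(clean.shape)
    return np.sqrt(keep) * clean + np.sqrt(1.0 - keep) * noise

def denoise(noisy, weights):
    """Hypothetical stand-in for the transformer: a single linear map."""
    return noisy @ weights

def mse(pred, target):
    """Mean squared error between predicted and clean patches."""
    return float(np.mean((pred - target) ** 2))

clean = rng.standard_normal((64, 32))   # 64 patch tokens, 32 values each
noisy = add_noise(clean)
weights = np.zeros((32, 32))            # untrained model predicts all zeros

loss_before = mse(denoise(noisy, weights), clean)

# One gradient-descent step on the mean squared error.
grad = 2.0 * noisy.T @ (denoise(noisy, weights) - clean) / clean.size
weights -= 0.5 * grad
loss_after = mse(denoise(noisy, weights), clean)

print(loss_before, loss_after)
```

Even this toy model gets closer to the clean patches after a single update. Training a real diffusion model repeats the same loop across many noise levels and many videos, and it is the compute spent on that loop that the comparison in this section varies.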

Below is an example from OpenAI’s research article that illustrates how output quality depends on training compute.

Source: OpenAI

This is the output produced with base compute. As you can see, the video is neither coherent nor well defined.

Let’s take a look at the same video with more compute.

Source: OpenAI

The same video with 4x compute is much improved: the characters hold their shape and their movements are less fuzzy. The video also includes greater detail.

What happens when the training compute is increased even further?

Source: OpenAI

The results above were produced with 32x compute. As you can see, the video is in higher definition, with more detail in both the background and the characters. The movement of the characters is more defined as well.

This shows that, as a diffusion transformer, Sora produces higher-quality results as training compute increases.

The future holds…

Sora is a step forward for video generation models. While the model currently exhibits some inconsistencies, its demonstrated capabilities point to further progress in video generation. OpenAI talks about a promising future for the simulation of physical and digital worlds. For now, we must wait and see how Sora develops.