Have you heard about Microsoft’s latest tech marvel in the AI world? It’s called Phi-2, a nifty little language model that’s stirring up quite the excitement.

Despite its compact size of 2.7 billion parameters, this little dynamo is an upgrade from its predecessor, Phi-1.5. What’s cool is that it’s all set and ready for you to explore in the Azure AI Studio model catalogue.

Now, Phi-2 isn’t just any small language model. Microsoft’s team, led by Satya Nadella, showcased it at Ignite 2023, and guess what? They say it’s a real powerhouse, even giving the bigger players like Llama-2 and Gemini-2 a run for their money in generative AI tests.

This model isn’t just about crunching data; it’s about understanding language, making sense of the world, and reasoning logically. Microsoft even claims it can outdo models 25 times their size in certain tasks.

Read in detail about: Google launches Gemini AI

But here’s the kicker: training Phi-2 is a breeze compared to giants like GPT-4. It gets its smarts from a mix of high-quality data, including synthetic sets, everyday knowledge, and more. It’s built on a transformer framework, aiming to predict the next word in a sequence. And the training? Just 14 days on 96 A100 GPUs. Now, that’s efficient, especially when you think about GPT-4 needing up to 100 days and a whole lot more GPUs!

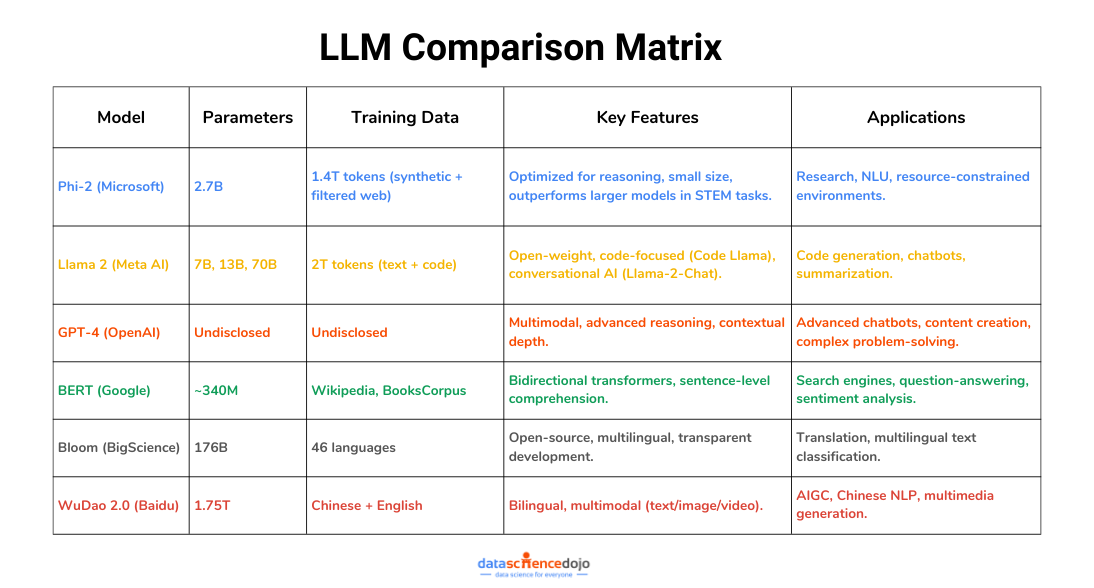

Comparative analysis of Phi-2

Comparing Phi 2, Llama 2, and other notable language models can provide insights into their unique strengths and applications.

-

Phi 2 (Microsoft):

- Size & Architecture: Transformer-based architecture optimized for next-word prediction, with a 2048-token context window.

- Training Data: Trained on 1.4 trillion tokens, including synthetic “textbook-quality” datasets (generated with GPT-3.5/GPT-4) and filtered web data (e.g., Falcon RefinedWeb, SlimPajama). Focuses on STEM, common sense, and theory of mind.

- Performance: Outperforms larger models like Mistral-7B and Llama-2-13B/70B in coding, math, and reasoning benchmarks.

- Efficiency: Designed for fast inference on consumer-grade GPUs.

Applications

- Research in language model efficiency and reasoning.

- Natural language understanding (NLU) tasks.

- Resource-constrained environments (e.g., edge devices).

Limitations

- Not aligned with human preferences (no RLHF), so outputs may require filtering.

-

Llama 2 (Meta AI)

- Size & Variants: Available in 7B, 13B, and 70B parameter versions (not 65B). Code Llama variants specialize in programming languages.

- Training Data: Trained on 2 trillion tokens, including text from Common Crawl, Wikipedia, GitHub, and public forums.

- Performance:

- Licensing: Free for research and commercial use (under 700M monthly users), but not fully open-source by OSI standards.

Applications

- Code generation and completion (via Code Llama).

- Chatbots and virtual assistants (Llama-2-Chat).

- Text summarization and question-answering.

Also learn about Claude 3.5 Sonnet

-

Other Notable Language Models

1. GPT-4 (OpenAI)

- Overview: A massive multimodal model (exact size undisclosed) optimized for text, image, and code tasks.

- Key Features:

- Superior reasoning and contextual understanding.

- Powers ChatGPT Plus and enterprise applications.

- Applications: Advanced chatbots, content creation, and complex problem-solving.

2. BERT (Google AI)

- Overview: Bidirectional transformer model focused on sentence-level comprehension.

- Key Features:

- Trained on Wikipedia and BooksCorpus.

- Revolutionized search engines (e.g., Google Search).

- Applications: Sentiment analysis, search query interpretation.

3. Bloom (BigScience)

- Overview: Open-source multilingual model with 176B parameters.

- Key Features:

- Trained in 46 languages, including underrepresented ones.

- Transparent development process.

- Applications: Translation, multilingual text classification.

4. WuDao 2.0 (Baidu & Tsinghua University)

- Overview: A 1.75 trillion parameter model trained on Chinese and English data.

- Key Features:

- Handles text, image, and video generation.

- Optimized for Chinese NLP tasks.

- Applications: AIGC (AI-generated content), bilingual research.

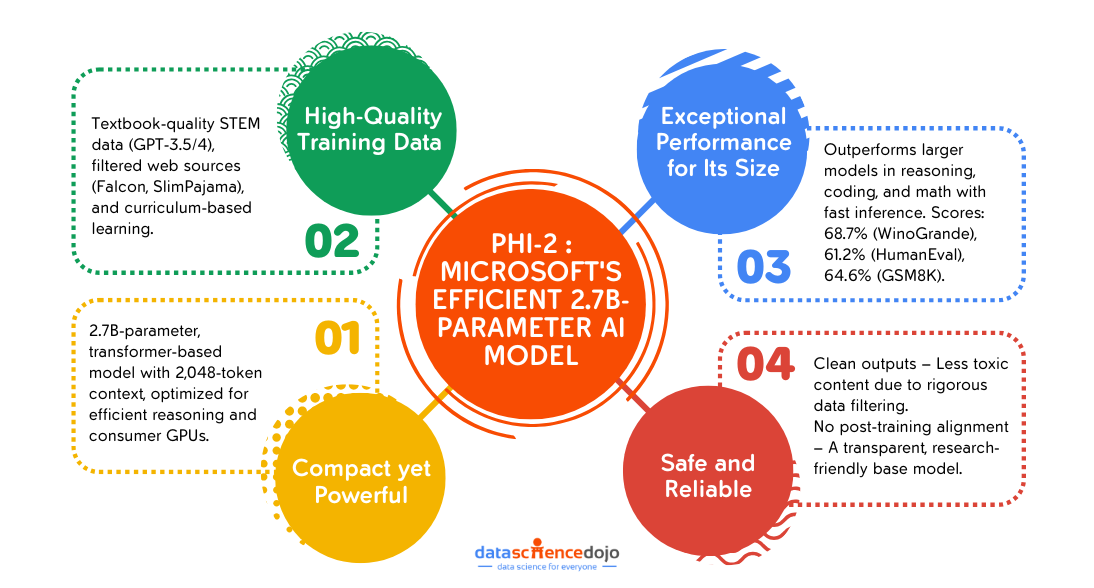

Phi-2 Features and Capabilities

Phi-2 stands out for several key features and capabilities including:

Key Features

1. Compact yet Powerful Architecture

- Transformer-Based Design: Built as a decoder-only transformer optimized for next-word prediction, enabling efficient text generation and reasoning.

- Small Parameter Count: At 2.7 billion parameters, it is lightweight compared to models like Llama 2-70B (70B) or GPT-4 (1.76T), making it ideal for deployment on consumer-grade GPUs (e.g., NVIDIA A100, RTX 3090).

- Extended Context Window: Supports sequences of up to 2,048 tokens, allowing it to handle multi-step reasoning tasks and maintain coherence in longer outputs.

2. High-Quality, Curated Training Data

- Synthetic “Textbook-Quality” Data:

- Generated using GPT-3.5/GPT-4 to create structured lessons in STEM, common-sense reasoning, and theory of mind.

- Focuses on logical progression (e.g., teaching physics concepts from basics to advanced principles).

- Filtered Web Data:

- Combines cleaned datasets like Falcon RefinedWeb and SlimPajama, rigorously scrubbed of low-quality, toxic, or biased content.

- Curriculum Learning Strategy:

- Trains the model on simpler concepts first, then gradually introduces complexity, mimicking human educational methods.

3. Exceptional Performance for Its Size

- Outperforms Larger Models:

- Surpasses Mistral-7B and Llama-2-13B/70B in reasoning, coding, and math tasks.

- Example benchmarks:

- Common-Sense Reasoning: Scores 68.7% on WinoGrande (vs. 70.1% for Llama-2-70B).

- Coding: Achieves 61.2% on HumanEval (Python), outperforming most 7B–13B models.

- Math: 64.6% on GSM8K (grade-school math problems).

- Speed and Efficiency:

- Faster inference than larger models due to its compact size, with minimal accuracy trade-offs.

4. Focus on Safety and Reliability

- Inherently Cleaner Outputs:

- Reduced toxic or harmful content generation due to rigorously filtered training data.

- No reliance on post-training alignment (e.g., RLHF), making it a “pure” base model for research.

- Transparency:

- Microsoft openly shares details about its training data composition, unlike many proprietary models.

5. Specialized Capabilities

- Common-Sense Reasoning:

- Excels at tasks requiring real-world logic (e.g., “If it’s raining, should I carry an umbrella?”).

- Language Understanding:

- Strong performance in semantic parsing, summarization, and question-answering.

- STEM Proficiency:

- Tackles math, physics, and coding problems with accuracy rivaling models 5x its size.

6. Deployment Flexibility

- Edge Device Compatibility:

- Runs efficiently on laptops, IoT devices, or cloud environments with limited compute.

- Cost-Effective:

- Lower hardware and energy costs compared to massive models, ideal for startups or academic projects.

Applications

- Research:

- Ideal for studying reasoning in small models and data quality vs. quantity trade-offs.

- Serves as a base model for fine-tuning experiments.

- Natural Language Understanding (NLU):

- Effective for tasks like text classification, sentiment analysis, and entity recognition.

- Resource-Constrained Environments:

- Deployable on edge devices (e.g., laptops, IoT) due to low hardware requirements.

- Cost-effective for startups or academic projects with limited compute budgets.

- Education:

- Potential for tutoring systems in STEM, leveraging its synthetic textbook-trained knowledge.

Limitations

- No Alignment: Unlike ChatGPT or Llama-2-Chat, Phi-2 lacks reinforcement learning from human feedback (RLHF), so outputs may require post-processing for safety.

- Niche Generalization: Struggles with highly specialized domains (e.g., legal or medical jargon) due to its focus on common sense and STEM.

- Multilingual Gaps: Primarily trained on English; limited non-English capabilities.

Why These Features Matter

Phi-2 challenges the “bigger is better” paradigm by proving that data quality and architectural precision can offset scale. Its features make it a groundbreaking tool for:

- Researchers studying efficient AI training methods.

- Developers needing lightweight models for real-world applications.

- Educators exploring AI-driven tutoring systems in STEM.

Read in detail about: Multimodality revolution

In summary, while Phi 2 and Llama 2 are both advanced language models, they serve different purposes. Phi 2 excels in language understanding and reasoning, making it suitable for research and development, while Llama 2 focuses on code generation and software development applications. Other models, like GPT-3 or BERT, have broader applications and are often used in content generation and natural language understanding tasks.