The emergence of large language models (LLMs) such as GPT-4 has been a transformative development in AI. These models have significantly advanced capabilities across various sectors, most notably in content creation, code generation, and language translation, marking a new era in AI’s practical applications.

However, the deployment of these models is not without its challenges. LLMs demand extensive computational resources, consume a considerable amount of energy, and require substantial memory capacity.

These requirements can render LLMs impractical for certain applications, especially those with limited processing power or in environments where energy efficiency is a priority. In response to these limitations, there has been a growing interest in the development of small language models (SLMs).

These models are designed to be more compact and efficient, addressing the need for AI solutions that are viable in resource-constrained environments. Let’s explore these models in greater detail and the rationale behind them.

What are Small Language Models?

Small Language Models (SLMs) represent an intriguing segment of AI. Unlike their larger counterparts such as GPT-4 and Llama 2, which boast many billions, and reportedly sometimes trillions, of parameters, SLMs operate on a much smaller scale, typically ranging from a few million to a few billion parameters.

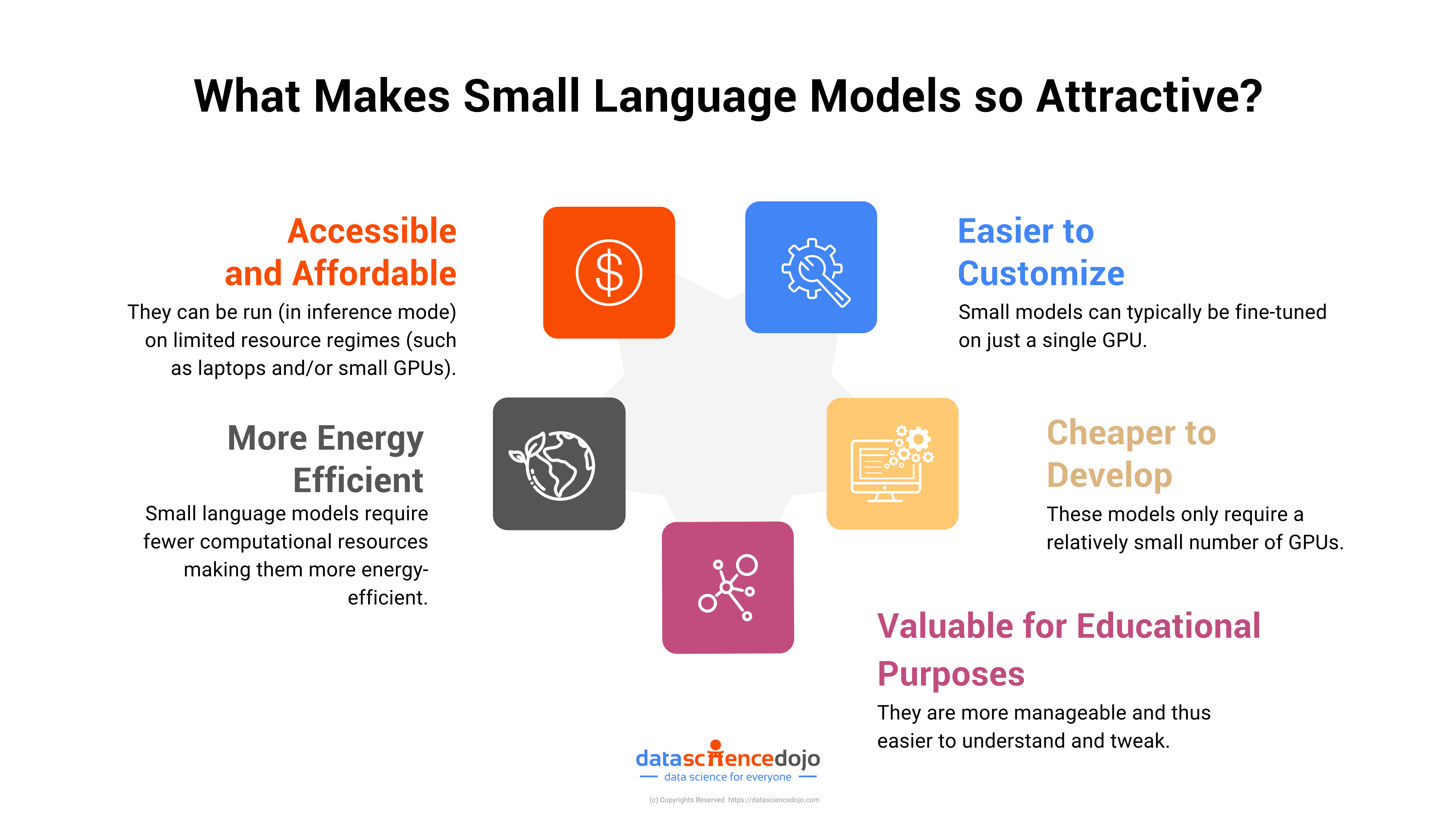

This relatively modest size translates into lower computational demands, making SLMs accessible and feasible for organizations or researchers who might not have the resources to handle the heavier computational load of larger models.
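To make the link between model size and resource demands concrete, here is a rough back-of-the-envelope sketch in Python. It estimates only the memory needed to hold the weights, assuming each parameter occupies a fixed number of bytes. The function name `weight_memory_gb` and the parameter counts are illustrative assumptions, and real deployments also need memory for activations and caches.

```python
# Bytes used to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(num_params: int, dtype: str = "fp16") -> float:
    """Estimate the memory (in GB) needed just to store the model weights."""
    if dtype not in BYTES_PER_PARAM:
        raise ValueError(f"unknown dtype: {dtype}")
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


# Illustrative parameter counts, not measurements of any specific deployment.
small_model = weight_memory_gb(2_700_000_000, "fp16")    # about 5.4 GB
large_model = weight_memory_gb(70_000_000_000, "fp16")   # about 140 GB
print(f"small: {small_model:.1f} GB, large: {large_model:.1f} GB")
```

Under these assumptions, the smaller model fits in the memory of a single consumer GPU, while the larger one needs several data-center accelerators just to load.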

However, as the AI race has gathered pace, companies have been locked in cut-throat competition to build ever-bigger language models, on the assumption that bigger models are better models.

Given this, how do SLMs fit into this equation, let alone outperform large language models?

How can Small Language Models Function Well with Fewer Parameters?

There are several reasons why smaller language models can hold their own against larger ones.

The first lies in the training methods. Techniques like transfer learning allow smaller models to leverage pre-existing knowledge, making them more adaptable and efficient for specific tasks. For instance, distilling knowledge from LLMs into SLMs can result in models that perform similarly on targeted tasks but require a fraction of the computational resources.
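As a minimal sketch of the distillation idea mentioned above, the snippet below computes one common form of distillation loss: the KL divergence between the teacher's and the student's temperature-softened output distributions, scaled by the square of the temperature. The function names and the default temperature are assumptions made for illustration. Practical setups usually combine this term with a standard loss on the true labels and compute it with a deep learning framework rather than plain Python.

```python
import math
from typing import List


def log_softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Numerically stable log-softmax of temperature-scaled logits."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    log_total = math.log(sum(math.exp(x - peak) for x in scaled))
    return [x - peak - log_total for x in scaled]


def distillation_loss(
    student_logits: List[float],
    teacher_logits: List[float],
    temperature: float = 2.0,
) -> float:
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    if len(student_logits) != len(teacher_logits):
        raise ValueError("student and teacher must score the same classes")
    log_teacher = log_softmax(teacher_logits, temperature)
    log_student = log_softmax(student_logits, temperature)
    kl = sum(
        math.exp(lt) * (lt - ls) for lt, ls in zip(log_teacher, log_student)
    )
    return kl * temperature ** 2


# Hypothetical logits for a three-class prediction.
teacher = [1.0, 2.0, 3.0]
print(distillation_loss([1.0, 2.0, 3.0], teacher))  # student matches teacher
print(distillation_loss([3.0, 2.0, 1.0], teacher))  # student disagrees
```

The loss is zero when the student reproduces the teacher's distribution and grows as the two diverge, which is what pushes the smaller model toward the larger model's behavior during training.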

Domain-Specific Applications

Secondly, compact models can be more domain-specific. By training them on specific datasets, these models can be tailored to handle specific tasks or cater to particular industries, making them more effective in certain scenarios.

For example, a healthcare-specific SLM might outperform a general-purpose LLM in understanding medical terminology and making accurate diagnoses.

Limitations and Considerations

Despite these advantages, it’s essential to remember that the effectiveness of an SLM largely depends on its training and fine-tuning process, as well as the specific task it’s designed to handle. Thus, while SLMs can outperform LLMs in certain scenarios, they will not be the best choice for every application.

Collaborative Advancements in Small Language Models

Hugging Face, along with other organizations, is playing a pivotal role in advancing the development and deployment of SLMs. The company maintains Transformers, an open-source library, and the Hugging Face Hub, a platform that hosts a wide range of pre-trained SLMs along with tools for fine-tuning and deploying them.

This platform serves as a hub for researchers and developers, enabling collaboration and knowledge sharing. It expedites the advancement of smaller language models by providing necessary tools and resources, thereby fostering innovation in this field.

Similarly, Google has contributed to the progress of smaller language models by creating TensorFlow, an open-source machine learning framework that provides extensive resources and tools for the development and deployment of these models.

Both Hugging Face’s Transformers and Google’s TensorFlow facilitate the ongoing improvements in SLMs, thereby catalyzing their adoption and versatility in various applications.

Independent Contributions

Moreover, smaller teams and independent developers are also contributing to the progress of smaller language models. For example, “TinyLlama” is a small, efficient open-source language model developed by a team of developers, and despite its size, it outperforms similarly sized models in various tasks.

The model’s code and checkpoints are available on GitHub, enabling the wider AI community to learn from, improve upon, and incorporate this model into their projects.
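As a hedged sketch of what incorporating such a model can look like, the snippet below loads a small checkpoint through the `pipeline` helper from the Hugging Face Transformers library. The model identifier is an assumption about how a TinyLlama chat checkpoint is published on the Hub, and `generation_settings` and `generate` are hypothetical helper names. The library must be installed separately, and the first call downloads the weights.

```python
from typing import Any, Dict

# Assumed Hub identifier for a TinyLlama chat checkpoint; any small
# causal language model identifier can be substituted here.
MODEL_ID = "TinyLlama/TinyLlama-1.1B-Chat-v1.0"


def generation_settings(max_new_tokens: int = 64, sample: bool = False) -> Dict[str, Any]:
    """Collect the keyword arguments passed to the text-generation call."""
    return {"max_new_tokens": max_new_tokens, "do_sample": sample}


def generate(prompt: str, model_id: str = MODEL_ID) -> str:
    """Load the model and return the generated text for one prompt."""
    # Imported lazily so this file can be loaded without transformers installed.
    from transformers import pipeline

    generator = pipeline("text-generation", model=model_id)
    outputs = generator(prompt, **generation_settings())
    return outputs[0]["generated_text"]
```

Calling `generate("Explain what a small language model is.")` would then run the model locally. Because the checkpoint is small, this is feasible on a laptop-class machine, although generation on a CPU can still be slow.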

These collaborative efforts within the AI community not only enhance the effectiveness of SLMs but also greatly contribute to the overall progress in the field of AI.

What are the Potential Implications of SLMs in our Personal Lives?

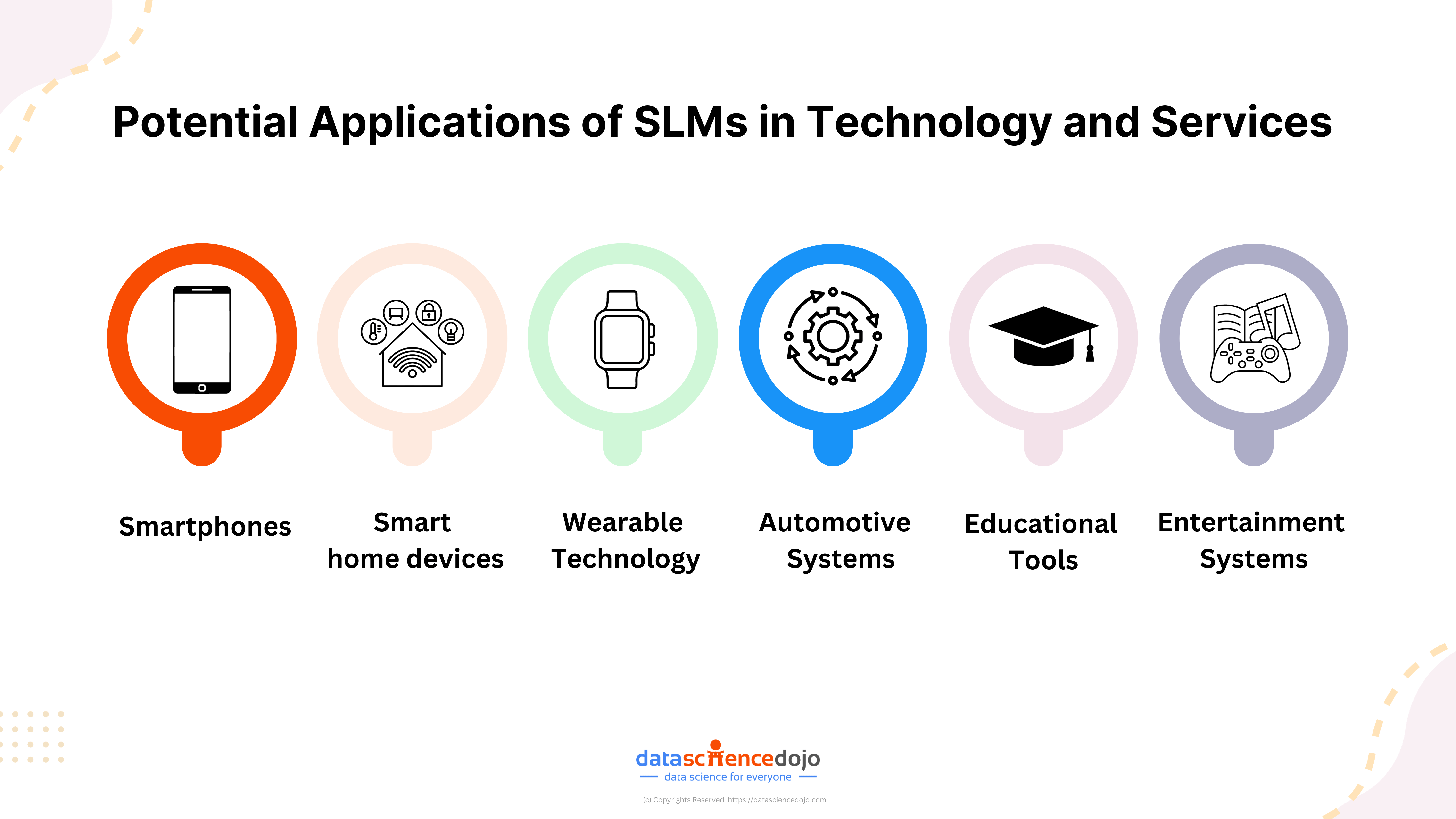

Small Language Models have the potential to significantly enhance various facets of our personal lives, from smartphones to home automation. Here’s an expanded look at the areas where they could be integrated:

Smartphones

SLMs are well-suited for the limited hardware of smartphones, supporting on-device processing that quickens response times, enhances privacy and security, and aligns with the trend of edge computing in mobile technology.

This integration paves the way for advanced personal assistants capable of understanding complex tasks and providing personalized interactions based on user habits and preferences.

Additionally, SLMs in smartphones could lead to more sophisticated, cloud-independent applications, improved energy efficiency, and enhanced data privacy.

They also hold the potential to make technology more accessible, particularly for individuals with disabilities, through features like real-time language translation and improved voice recognition.

The deployment of smaller language models in mobile technology could significantly impact various industries, leading to more intuitive, efficient, and user-focused applications and services.

Smart Home Devices

Integrating small language models into smart home devices enables voice-activated controls and personalized settings that are more efficient, accessible, and easier to use.

Voice-Activated Controls: SLMs can be embedded in smart home devices like thermostats, lights, and security systems for voice-activated control, making home automation more intuitive and user-friendly.

Personalized Settings: They can learn individual preferences for things like temperature and lighting, adjusting settings automatically for different times of day or specific occasions.

Wearable Technology

Whether it is to monitor health or to offer real-time translation, small language models can be integrated into wearable technology.

Health Monitoring: In devices like smartwatches or fitness trackers, SLMs can provide personalized health tips and reminders based on the user’s activity levels, sleep patterns, and health data.

Real-Time Translation: Wearables equipped with SLMs could offer real-time translation services, making international travel and communication more accessible.

Automotive Systems

Enhanced Navigation and Assistance: In cars, SLMs can offer advanced navigation assistance by integrating real-time traffic updates and suggesting optimal routes.

Voice Commands: They can enhance the functionality of in-car voice command systems, allowing drivers to control music, make calls, or send messages without taking their hands off the wheel.

Educational Tools

Personalized Learning: Educational apps powered by SLMs can adapt to individual learning styles and paces, providing personalized guidance and support to students.

Language Learning: They can be particularly effective in language learning applications, offering interactive and conversational practice.

Entertainment Systems

Smart TVs and Gaming Consoles: SLMs can be used in smart TVs and gaming consoles for voice-controlled operation and personalized content recommendations based on viewing or gaming history.

The integration of small language models across these domains, from smartphones to entertainment systems, promises not only convenience and efficiency but also a more personalized and accessible experience in our daily interactions with technology.

As these models continue to evolve, their potential applications in enhancing personal life are vast and ever-growing.

Do SLMs Pose any Challenges?

Despite their promising capabilities, Small Language Models do present several challenges:

- Limited Context Comprehension: Due to the lower number of parameters, SLMs may have less accurate and nuanced responses compared to larger models, especially in complex or ambiguous situations.

- Need for Specific Training Data: The effectiveness of these models heavily relies on the quality and relevance of their training data. Optimizing these models for specific tasks or applications requires expertise and can be complex.

- Local CPU Implementation Challenges: Running a compact language model on local CPUs involves considerations like optimizing memory usage and scaling options. When training or fine-tuning locally, saving checkpoints regularly is necessary so that progress is not lost if a run is interrupted.

- Understanding Model Limitations: Predicting the performance and potential applications of SLMs can be challenging, and findings from smaller models do not always extrapolate to their larger counterparts.
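The checkpointing point in the list above can be sketched as follows. This is a simplified illustration using only the Python standard library: the "model" is a single number, the update step is a placeholder, and the function names are hypothetical. A real training loop would save model and optimizer state through its framework's own serialization tools.

```python
import json
import os
import tempfile
from typing import Dict, Optional


def save_checkpoint(path: str, step: int, state: Dict[str, float]) -> None:
    """Write the checkpoint to a temporary file, then swap it into place."""
    directory = os.path.dirname(path) or "."
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w") as handle:
        json.dump({"step": step, "state": state}, handle)
    # os.replace avoids leaving a half-written checkpoint behind.
    os.replace(tmp_path, path)


def load_checkpoint(path: str) -> Optional[dict]:
    """Return the saved checkpoint, or None if there is none yet."""
    if not os.path.exists(path):
        return None
    with open(path) as handle:
        return json.load(handle)


def train(total_steps: int, path: str, save_every: int = 100) -> Dict[str, float]:
    """Resume from the last checkpoint and save progress periodically."""
    checkpoint = load_checkpoint(path)
    step = checkpoint["step"] if checkpoint else 0
    state = checkpoint["state"] if checkpoint else {"weight": 0.0}
    while step < total_steps:
        state["weight"] += 0.01  # placeholder for a real optimizer update
        step += 1
        if step % save_every == 0 or step == total_steps:
            save_checkpoint(path, step, state)
    return state


checkpoint_path = os.path.join(tempfile.mkdtemp(), "checkpoint.json")
train(250, checkpoint_path)
print(load_checkpoint(checkpoint_path)["step"])
```

If the process stops partway through, calling `train` again with the same path picks up from the last saved step instead of starting over.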

Embracing the Future with Small Language Models

The journey through the landscape of SLMs underscores a pivotal shift in the field of artificial intelligence. As we have explored, smaller language models are emerging as a critical innovation, addressing the need for more tailored, efficient, and sustainable AI solutions.

Their ability to provide domain-specific expertise, coupled with reduced computational demands, opens up new frontiers in various industries, from healthcare and finance to transportation and customer service.

The rise of platforms like Hugging Face’s Transformers and Google’s TensorFlow has democratized access to these powerful tools, enabling even smaller teams and independent developers to make significant contributions.

The case of “TinyLlama” exemplifies how a compact, open-source language model can punch above its weight, challenging the notion that bigger always means better.

Conclusion

As the AI community continues to collaborate and innovate, the future of smaller language models is bright and promising.

Their versatility and adaptability make them well-suited to a world where efficiency and specificity are increasingly valued. However, it’s crucial to navigate their limitations wisely, acknowledging the challenges in training, deployment, and context comprehension.

In conclusion, compact language models stand not just as a testament to human ingenuity in AI development but also as a beacon guiding us toward a more efficient, specialized, and sustainable future in artificial intelligence.