Have you ever thought about the leap from “Good to Great” that Jim Collins describes in his book? This is precisely what we aim to achieve with large language models (LLMs) today. Language models are already competent; the challenge now is to elevate them to excellence.

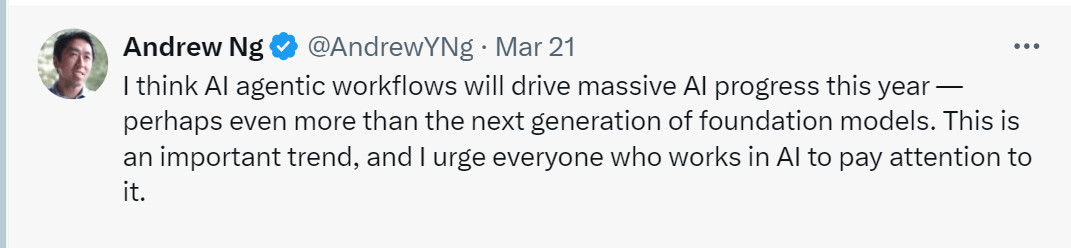

Of the many approaches being explored to enhance LLMs, one of the most promising is incorporating agentic workflows.

Let’s dig deeper into what AI agents are and how they can improve the results generated by LLMs.

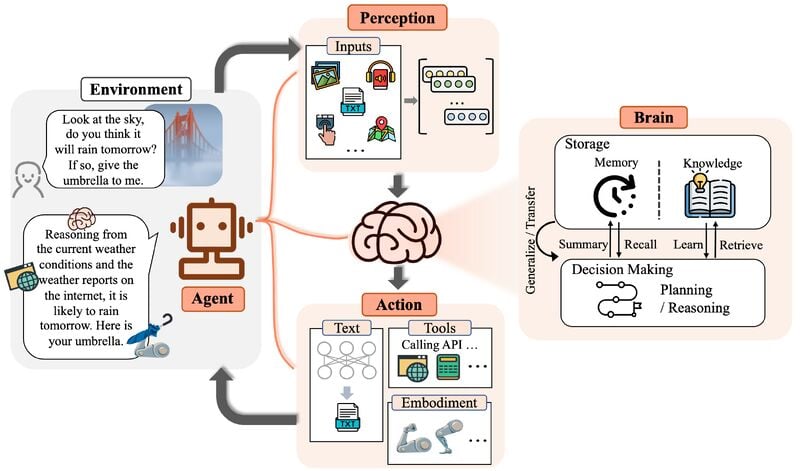

What are Agentic Workflows

Agentic workflows make LLMs more effective by embedding them in structured, multi-step processes, which helps the AI deliver higher-quality results. Today, large language models are mostly used in zero-shot mode: the model receives a prompt and produces its answer in a single pass.

This is like asking someone to write an 800-word blog on AI agents in one go, without any edits. It’s not ideal, right? That’s where AI agents come in. They let the LLM go over the task multiple times, refining the result with each pass.

This process combines additional tools with better decision-making to get more out of LLMs, especially for specific, targeted projects.

How AI Agents Enhance Large Language Models

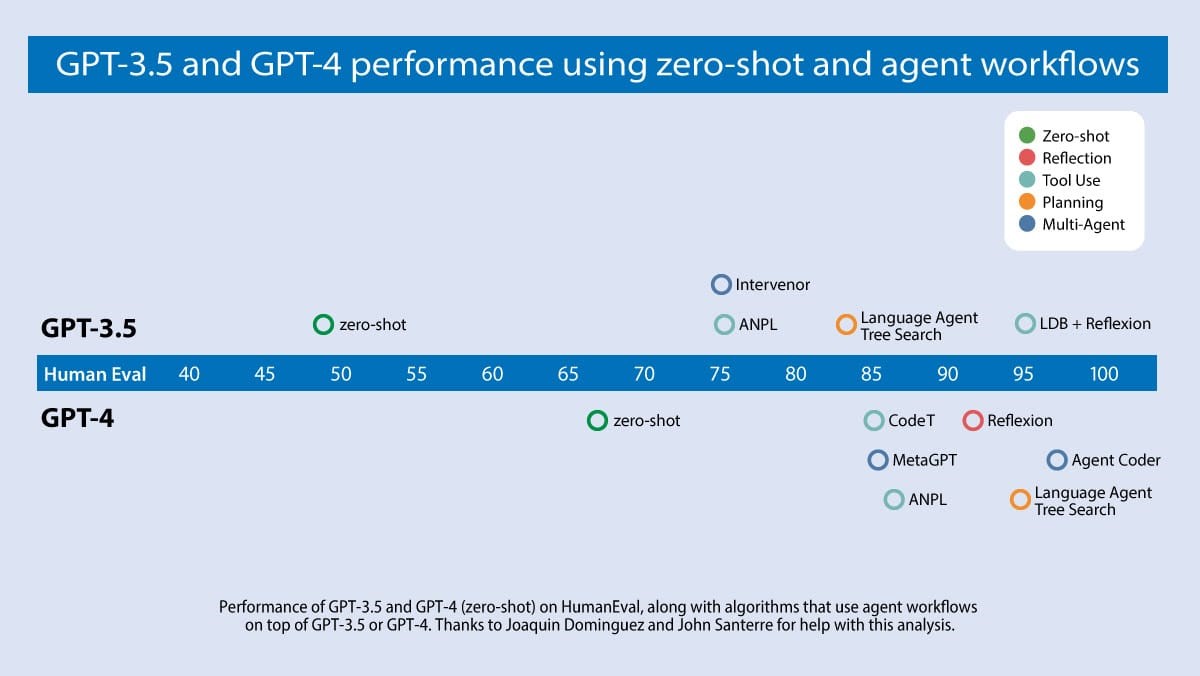

Agentic workflows have been shown to improve the performance of language models substantially. For example, GPT-3.5’s coding accuracy rose from 48.1% with zero-shot prompting to 95.1% with an agent workflow on a coding benchmark.

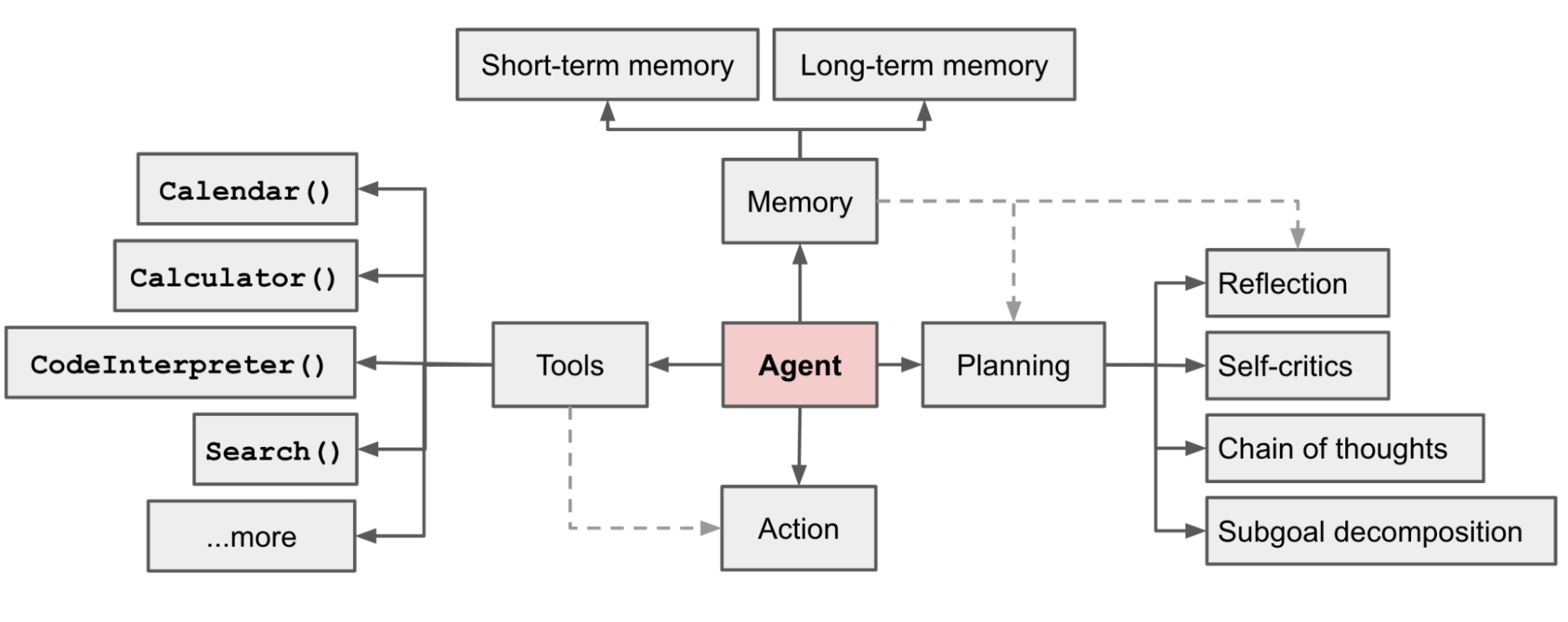

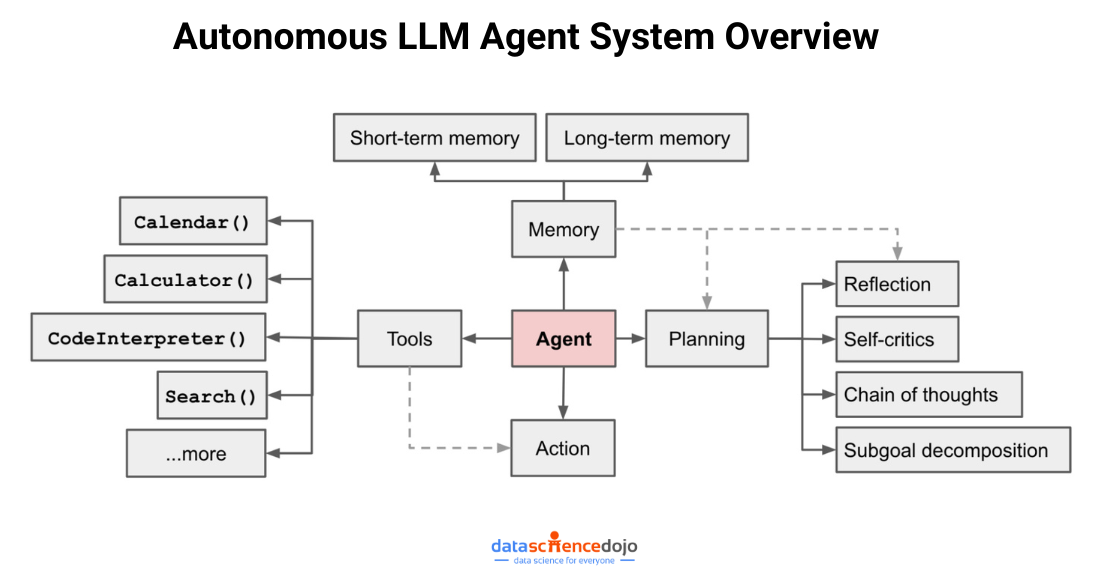

Building Blocks for AI Agents

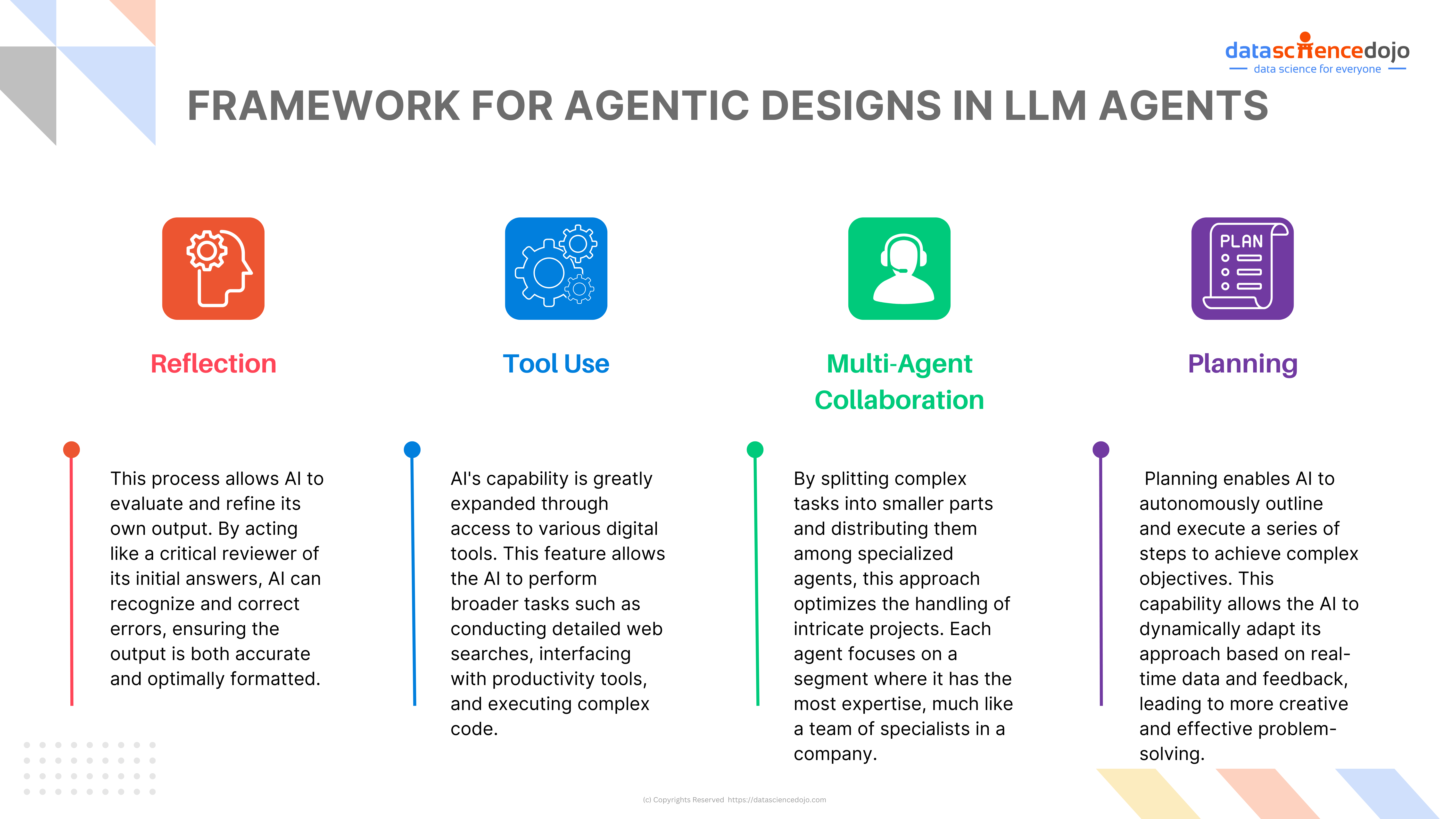

Researchers worldwide are exploring many strategies for building AI agents. To put that research into perspective, here’s a framework for categorizing the design patterns used to build agents.

1. Reflection

Reflection refers to a design pattern where an LLM generates an output and then reflects on its creation to identify improvement areas. This process of self-critique allows the model to automatically provide constructive criticism of its output, much like a human would revise their work after writing a first draft.

Reflection leads to performance gains in AI agents by enabling them to self-criticize and improve through an iterative process. When an LLM generates an initial output, it can be prompted to reflect on that output by checking it for correctness, style, efficiency, and similar criteria.

Reflection in Action

Here’s an example process of how Reflection leads to improved code:

- Initially, an LLM receives a prompt to write code for a specific task, X.

- Once the code is generated, the LLM reviews its work, assessing the code’s accuracy, style, and efficiency, and provides suggestions for improvements.

- The LLM identifies any issues or opportunities for optimization and proposes adjustments based on this evaluation.

- The LLM is prompted to refine the code, this time incorporating the insights gained from its own review.

- This review and revision cycle continues, with the LLM providing ongoing feedback and making iterative enhancements to the code.
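The cycle above can be sketched as a small loop. This is a minimal illustration, not a definitive implementation: `generate`, `critique`, and `revise` stand in for LLM calls, and the `toy_*` functions are hypothetical stubs so the loop can run without a model.

```python
from typing import Callable, List, Tuple


def reflect_and_refine(
    task: str,
    generate: Callable[[str], str],
    critique: Callable[[str, str], List[str]],
    revise: Callable[[str, str, List[str]], str],
    max_rounds: int = 3,
) -> Tuple[str, int]:
    """Draft, critique, and revise until the critique is empty or rounds run out."""
    draft = generate(task)
    rounds = 0
    while rounds < max_rounds:
        issues = critique(task, draft)
        if not issues:  # nothing left to fix, stop early
            break
        draft = revise(task, draft, issues)
        rounds += 1
    return draft, rounds


# --- Hypothetical stand-ins for LLM calls, for illustration only ---
def toy_generate(task: str) -> str:
    return "def add(a, b): return a - b"  # deliberately buggy first draft


def toy_critique(task: str, draft: str) -> List[str]:
    issues = []
    if "a - b" in draft:
        issues.append("uses subtraction instead of addition")
    if '"""' not in draft:
        issues.append("missing docstring")
    return issues


def toy_revise(task: str, draft: str, issues: List[str]) -> str:
    # Address one issue per round so the loop visibly iterates.
    issue = issues[0]
    if "subtraction" in issue:
        return draft.replace("a - b", "a + b")
    if "docstring" in issue:
        return 'def add(a, b):\n    """Return a + b."""\n    return a + b'
    return draft


final_code, rounds_used = reflect_and_refine(
    "write add(a, b)", toy_generate, toy_critique, toy_revise
)
print(final_code)
```

The `max_rounds` cap is a design choice worth keeping in a real system, since a model's critique may never come back empty.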

2. Tool Use

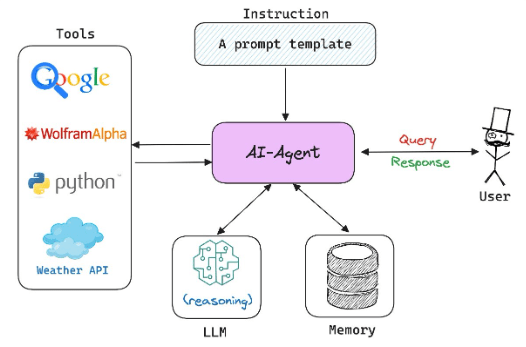

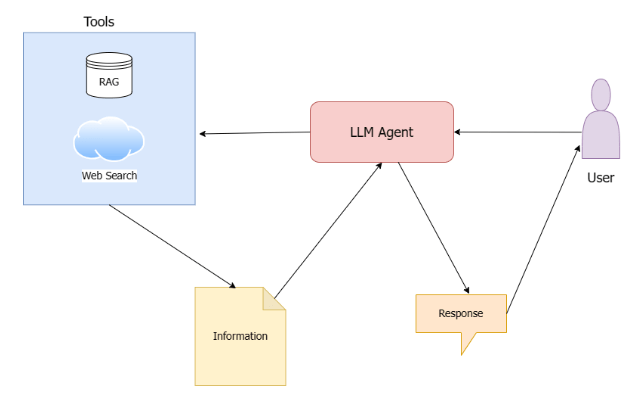

Incorporating tools into the agentic workflow allows the language model to call on them to gather information, take actions, or manipulate data in order to accomplish tasks. This pattern extends the functionality of LLMs beyond generating text-based responses, allowing them to interact with external systems and perform more complex operations.
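One common way to wire this up is for the model to emit a structured request that names a tool and its arguments, which the surrounding program then dispatches. The sketch below assumes a simple JSON request format; the registry, the `tool` decorator, and both example tools are hypothetical and not tied to any particular library.

```python
import json
from typing import Callable, Dict

# Registry mapping tool names to plain Python functions (all hypothetical).
TOOLS: Dict[str, Callable[..., str]] = {}


def tool(fn):
    """Register a function as a tool under its own name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def word_count(text: str) -> str:
    return str(len(text.split()))


@tool
def to_upper(text: str) -> str:
    return text.upper()


def dispatch(tool_call_json: str) -> str:
    """Run the tool named in a JSON request such as
    {"tool": "word_count", "args": {"text": "..."}}."""
    call = json.loads(tool_call_json)
    name = call.get("tool")
    if name not in TOOLS:
        return f"error: unknown tool {name!r}"
    try:
        return TOOLS[name](**call.get("args", {}))
    except TypeError as exc:  # wrong or missing arguments
        return f"error: {exc}"


# In a real agent this JSON would come from the model's output.
result = dispatch('{"tool": "word_count", "args": {"text": "agents call tools"}}')
print(result)
```

Returning errors as strings rather than raising lets the result be fed back to the model, which can then correct its request.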

One can argue that some current consumer-facing products, like ChatGPT, already capitalize on tools such as web search. What we are describing here goes considerably further. Here’s how:

Access to Multiple Tools

The idea is to give AI agents access to a variety of tools that perform a broad range of functions, from searching different sources (e.g., web, Wikipedia, arXiv) to interfacing with productivity tools (e.g., email, calendars).

This will allow LLMs to perform more complex tasks, such as managing communications, scheduling meetings, or conducting in-depth research, all in real time.

Developers can use heuristics to include the most relevant subset of tools in the LLM’s context at each processing step, similar to how retrieval augmented generation (RAG) systems choose subsets of text for contextual relevance.
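As a rough illustration of such a heuristic, the sketch below ranks tools by word overlap between the user’s request and each tool’s description, then keeps the top few. The catalogue, the stopword list, and the scoring rule are all assumptions made for the example; a production system would more likely use embeddings, as RAG systems do.

```python
import re

# Hypothetical tool catalogue: name -> short description.
TOOL_DESCRIPTIONS = {
    "web_search": "search the web for current news and pages",
    "wikipedia": "look up encyclopedia articles and background facts",
    "arxiv": "search research papers and preprints",
    "email": "send and read email messages",
    "calendar": "schedule meetings and check calendar availability",
}

# Small illustrative stopword list so filler words do not count as matches.
STOPWORDS = {"a", "an", "and", "the", "for", "to", "of", "on", "up"}


def tokenize(text):
    """Lowercase the text and return its set of non-stopword words."""
    return set(re.findall(r"[a-z]+", text.lower())) - STOPWORDS


def select_tools(query, descriptions, k=2):
    """Return up to k tool names whose descriptions share the most words with the query."""
    query_words = tokenize(query)
    scored = []
    for name, description in descriptions.items():
        overlap = len(query_words & tokenize(description))
        if overlap > 0:
            scored.append((overlap, name))
    # Highest overlap first; ties broken alphabetically for determinism.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [name for _, name in scored[:k]]


selected = select_tools("schedule a meeting and send an email", TOOL_DESCRIPTIONS)
print(selected)
```

Only the selected tools’ descriptions would then be placed in the model’s context for that step, which keeps the prompt short as the catalogue grows.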

Code Execution

One of the significant challenges with current LLMs is their limited ability to perform accurate computations directly from a trained model. For instance, asking a typical LLM a math-related query like calculating compound interest might not yield the correct result.

This is where giving LLMs access to tools like a Python interpreter becomes invaluable. When an LLM can write and execute Python code, it can hand the arithmetic to the interpreter and return precise answers to complex mathematical queries.

This capability not only enhances the functionality of LLMs in academic and professional settings but also boosts user trust in their ability to handle technical tasks effectively.
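To make the compound interest case concrete, the sketch below executes a snippet of the kind a model might write for “What is 10,000 worth after 10 years at 5% compounded monthly?”. The figures, the `GENERATED_CODE` string, and the `run_generated_code` helper are assumptions for illustration. Note that `exec()` is not a sandbox; real systems should run untrusted code in an isolated process or container.

```python
# Code a model might emit for the question (hypothetical example values).
# It applies the standard formula A = P * (1 + r/n) ** (n * t).
GENERATED_CODE = """
principal = 10_000
annual_rate = 0.05
periods_per_year = 12
years = 10
result = principal * (1 + annual_rate / periods_per_year) ** (periods_per_year * years)
"""


def run_generated_code(source: str) -> float:
    """Execute model-written code in a fresh namespace and return its `result`.

    Illustration only: exec() does not isolate untrusted code.
    """
    namespace = {"__builtins__": {}}  # expose no builtins to the snippet
    exec(source, namespace)
    return float(namespace["result"])


final_amount = run_generated_code(GENERATED_CODE)
print(f"Final amount: {final_amount:.2f}")
```

The model’s job reduces to translating the question into the formula; the interpreter does the arithmetic exactly.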

3. Multi-Agent Collaboration

Handling complex tasks can often be too challenging for a single AI agent, much like it would be for an individual person. This is where multi-agent collaboration becomes crucial. By dividing these complex tasks into smaller, more manageable parts, each AI agent can focus on a specific segment where its expertise can be best utilized.

This approach mirrors how human teams operate, with different specialists taking on different roles within a project. Such collaboration allows for more efficient handling of intricate tasks, ensuring each part is managed by the most suitable agent, thus enhancing overall effectiveness and results.

How can different AI agents perform specialized roles within a single workflow?

In a multi-agent collaboration framework, various specialized agents work together within a single system to efficiently handle complex tasks. Here’s a straightforward breakdown of the process:

- Role Specialization: Each agent has a specific role based on its expertise. For example, a Product Manager agent might create a Product Requirement Document (PRD), while an Architect agent focuses on technical specifications.

- Task-Oriented Dialogue: The agents communicate through task-oriented dialogues, initiated by role-specific prompts, to effectively contribute to the project.

- Memory Stream: A memory stream records all past dialogues, helping agents reference previous interactions for more informed decisions, and maintaining continuity throughout the workflow.

- Self-Reflection and Feedback: Agents review their decisions and actions, using self-reflection and feedback mechanisms to refine their contributions and ensure alignment with the overall goals.

- Self-Improvement: Through active teamwork and learning from past projects, agents continuously improve, enhancing the system’s overall effectiveness.
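The first three elements of this breakdown (role specialization, role-specific prompts, and a shared memory stream) can be sketched in a few lines. Everything here is a simplified assumption: the `Agent` and `Message` classes are hypothetical, and the `product_manager` and `architect` functions are stubs standing in for LLM calls.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Message:
    sender: str
    content: str


@dataclass
class Agent:
    role: str
    role_prompt: str
    respond: Callable[[str, list], str]  # stand-in for an LLM call

    def act(self, memory):
        """Produce a reply from the role prompt and history, then record it."""
        reply = self.respond(self.role_prompt, memory)
        memory.append(Message(self.role, reply))


def product_manager(prompt, memory):
    request = memory[0].content  # the original user request
    return f"PRD: users need to {request}"


def architect(prompt, memory):
    # Look back through the memory stream for the most recent PRD.
    prd = next(m.content for m in reversed(memory) if m.sender == "Product Manager")
    return f"Design for [{prd}]: REST API plus a task table"


def run_workflow(request, agents):
    """Let each agent act in turn on a shared memory stream."""
    memory = [Message("User", request)]
    for agent in agents:
        agent.act(memory)
    return memory


team = [
    Agent("Product Manager", "Write a PRD for the request.", product_manager),
    Agent("Architect", "Turn the PRD into a technical design.", architect),
]
memory_stream = run_workflow("track daily tasks", team)
for message in memory_stream:
    print(f"{message.sender}: {message.content}")
```

Because every agent reads from and appends to the same list, later agents can build on earlier output without being passed it explicitly.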

This framework allows for streamlined and effective management of complex tasks by distributing them among specialized LLM agents, each handling aspects they are best suited for.

Such systems not only manage to optimize the execution of subtasks but also do so cost-effectively, scaling to various levels of complexity and broadening the scope of applications that LLMs can address.

Furthermore, the capacity for planning and tool use within the multi-agent framework enriches the solution space, fostering creativity and improved decision-making akin to a well-orchestrated team of specialists.

4. Planning

Planning is a design pattern that empowers large language models to autonomously devise a sequence of steps to achieve complex objectives.

Rather than relying on a single tool or action, planning allows an agent to dynamically determine the necessary steps to accomplish a task, which might not be pre-determined or decomposable into a set of subtasks in advance.

By decomposing a larger task into smaller, manageable subtasks, planning allows for a more systematic approach to problem-solving, leading to potentially higher-quality and more comprehensive outcomes.
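A plan-then-execute loop might look like the sketch below. It assumes the planner returns an ordered list of steps, each naming an action; `toy_planner` is a hypothetical stub for the LLM, and the three actions are deliberately trivial so the flow of intermediate results is easy to follow.

```python
def toy_planner(goal):
    """Stand-in for an LLM that turns a goal into ordered steps (hypothetical)."""
    if goal == "report average word length":
        return [
            {"action": "split_words", "input": "$goal_text"},
            {"action": "measure_lengths", "input": "$prev"},
            {"action": "average", "input": "$prev"},
        ]
    return []


# Actions the executor knows how to run.
ACTIONS = {
    "split_words": lambda text: text.split(),
    "measure_lengths": lambda words: [len(w) for w in words],
    "average": lambda numbers: sum(numbers) / len(numbers),
}


def execute_plan(plan, goal_text):
    """Run each step in order, feeding the previous result forward."""
    prev = None
    trace = []
    for step in plan:
        value = goal_text if step["input"] == "$goal_text" else prev
        prev = ACTIONS[step["action"]](value)
        trace.append((step["action"], prev))
    return prev, trace


plan = toy_planner("report average word length")
answer, trace = execute_plan(plan, "agents plan before acting")
print(answer)
```

Keeping the trace of intermediate results makes it easier to inspect which step went wrong when the final answer looks off.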

Impact of Planning on Outcome Quality

The impact of Planning on outcome quality is multifaceted:

- Adaptability: It gives AI agents the flexibility to adapt their strategies on the fly, making them capable of handling unexpected changes or errors in the workflow.

- Dynamism: Planning allows agents to dynamically decide on the execution of tasks, which can result in creative and effective solutions to problems that are not immediately obvious.

- Autonomy: It enables AI systems to work with minimal human intervention, enhancing efficiency and reducing the time to resolution.
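Adaptability in particular is easy to illustrate: when a step fails, the agent asks for an alternative instead of giving up. In the sketch below the first strategy is rigged to fail so the fallback is exercised. The fetchers and `toy_replanner` are hypothetical stand-ins, with the replanner playing the part of an LLM choosing the next action.

```python
def fetch_from_cache(key):
    raise RuntimeError(f"{key} not cached")  # simulate an unexpected failure


def fetch_from_source(key):
    return f"fresh value for {key}"


FETCHERS = {"cache": fetch_from_cache, "source": fetch_from_source}


def toy_replanner(failed_action, tried):
    """Stand-in for an LLM proposing the next action after a failure (hypothetical)."""
    for name in FETCHERS:
        if name not in tried:
            return name
    return None  # no untried options remain


def run_with_replanning(key, first_action, max_attempts=3):
    """Try an action; on failure, ask the replanner for another one."""
    action, tried, log = first_action, [], []
    while action is not None and len(tried) < max_attempts:
        tried.append(action)
        try:
            result = FETCHERS[action](key)
            log.append(f"{action}: ok")
            return result, log
        except Exception as exc:
            log.append(f"{action}: failed ({exc})")
            action = toy_replanner(action, tried)
    return None, log


value, log = run_with_replanning("user:42", "cache")
print(value)
print(log)
```

The attempt limit bounds how long the agent keeps trying, which matters once the replanner is a real model that can always suggest something.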

Challenges of Planning

The use of Planning also presents several challenges:

- Predictability: The autonomous nature of Planning can lead to less predictable results, as the sequence of actions determined by the agent may not always align with human expectations.

- Complexity: As tasks become more complex, it becomes harder for the LLM to produce precise plans. This necessitates further optimization of LLMs for task planning so that they can handle a broader range of tasks effectively.

Despite these challenges, the field is rapidly evolving, and improvements in planning abilities are expected to enhance the quality of outcomes further while mitigating the associated challenges.

The Future of Agentic Workflows in LLMs

This strategic approach to developing LLM agents through agentic workflows offers a promising path to not just enhancing their performance but also expanding their applicability across various domains.

The ongoing optimization and integration of these workflows are crucial for achieving the high standards of reliability and ethical responsibility required in advanced AI systems.