The field of artificial intelligence is booming, with constant breakthroughs leading to ever more sophisticated applications. This rapid growth translates directly into job creation, which makes AI jobs a promising career choice in today’s world.

As AI integrates into everything from healthcare to finance, new professions are emerging that demand specialists to develop, manage, and maintain these intelligent systems. The future of AI is bright and brimming with exciting job opportunities for those ready to embrace this transformative technology.

In this blog, we will explore the top 10 AI jobs and careers that are also the highest-paying opportunities for individuals in 2025.

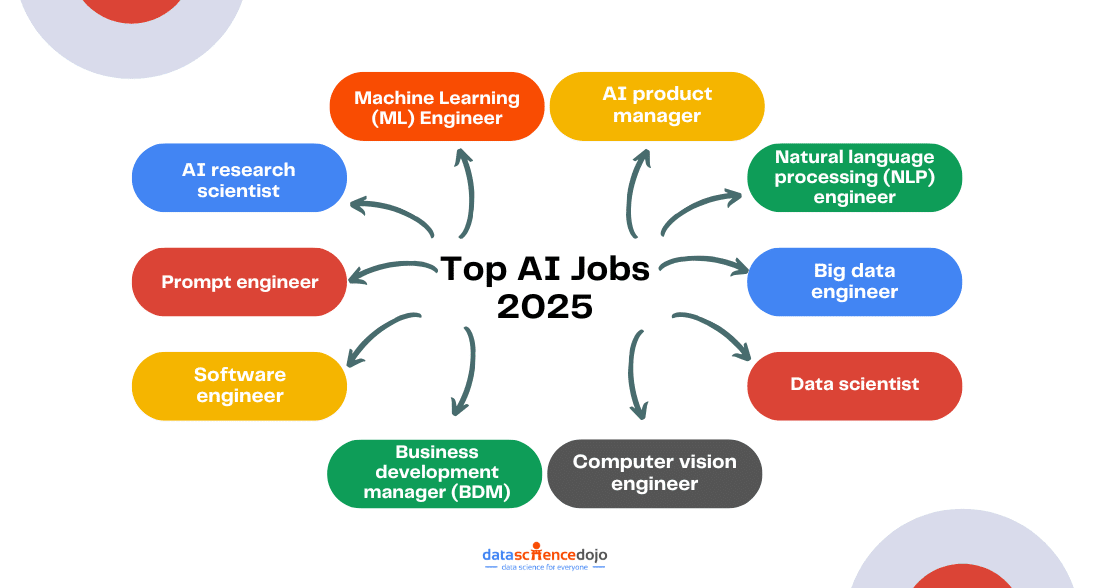

Top 10 Highest-Paying AI Jobs in 2025

Our list will serve as your one-stop guide to the 10 best AI jobs you can seek in 2025.

Let’s explore the leading roles with hefty paychecks within the exciting world of AI.

Machine Learning (ML) Engineer

Potential pay range – US$82,000 to 160,000/yr

Machine learning engineers are the bridge between data science and engineering. They are responsible for building intelligent machines that transform our world. Integrating the knowledge of data science with engineering skills, they can design, build, and deploy machine learning (ML) models.

Hence, their skillset is crucial for transforming raw data into algorithms that can make predictions, recognize patterns, and automate complex tasks. With growing reliance on AI-powered solutions and digital transformation with generative AI, it is a highly valued skill, and demand for it is only expected to grow. ML engineers consistently rank among the highest-paid AI professionals.

AI Product Manager

Potential pay range – US$125,000 to 181,000/yr

AI product managers are the channel of communication between technical teams and business stakeholders. They play a critical role in translating cutting-edge AI technology into real-world solutions. They also translate users’ needs into product roadmaps, ensuring AI features are effective and aligned with the company’s goals.

The versatility of this role demands a technical background combined with a flair for business. Modern businesses operating in a digital world of constantly evolving AI technology rely heavily on AI product managers, which makes this a lucrative role that is central to business growth and success.

Natural Language Processing (NLP) Engineer

Potential pay range – US$164,000 to 267,000/yr

As the name suggests, these professionals specialize in building systems for processing human language, like large language models (LLMs). With tasks like translation, sentiment analysis, and content generation, NLP engineers enable ML models to understand and process human language.

With the rise of voice-activated technology and the increasing need for natural language interactions, it is a highly sought-after skillset in 2025. Chatbots and virtual assistants are some of the common applications developed by NLP engineers for modern businesses.

Learn more about the many applications of NLP to understand the role better

Big Data Engineer

Potential pay range – US$206,000 to 296,000/yr

Big data engineers operate at the backend to build and maintain complex systems that store and process the vast amounts of data that fuel AI applications. They design and implement data pipelines, ensure data security and integrity, and develop tools to analyze massive datasets.

This is an important role for rapidly developing AI models, as robust big data infrastructure is crucial for their effective learning and functionality. As businesses accumulate more data, the demand for big data engineers is bound to grow in 2025.

Data Scientist

Potential pay range – US$118,000 to 206,000/yr

The primary goal of data scientists is to draw valuable insights from data. To do so, they collect, clean, and organize data to prepare it for analysis. They then apply statistical methods and machine learning algorithms to uncover hidden patterns and trends. The final step is to turn these findings into a concise story for their audience.

Hence, the final goal becomes the extraction of meaning from data. Data scientists are the masterminds behind the algorithms that power everything from recommendation engines to fraud detection. They enable businesses to leverage AI to make informed decisions. With the growing AI trend, it is one of the most sought-after AI jobs.

Here’s a guide to help you ace your data science interview as you explore this promising career choice in today’s market.

Computer Vision Engineer

Potential pay range – US$112,000 to 210,000/yr

These engineers specialize in working with and interpreting visual information. They focus on developing algorithms to analyze images and videos, enabling machines to perform tasks like object recognition, facial detection, and scene understanding. Common applications include self-driving cars and medical image analysis.

As AI expands into new areas, the computer vision engineer is one of the positions created by the changing demands of the field. Demand for this role is only expected to grow, especially with the increasing use of visual data in our lives. Computer vision engineers play a crucial role in interpreting this large volume of visual data.

AI Research Scientist

Potential pay range – US$69,000 to 206,000/yr

The role revolves around developing new algorithms and refining existing ones to make AI systems more efficient, accurate, and capable. It requires both technical expertise and creativity to navigate through areas of machine learning, NLP, and other AI fields.

Since an AI research scientist lays the groundwork for developing next-generation AI applications, the role is not only important for the present times but will remain central to the growth of AI. It’s a challenging yet rewarding career path for those passionate about pushing the frontiers of AI and shaping the future of technology.

Business Development Manager (BDM)

Potential pay range – US$36,000 to 149,000/yr

They identify and cultivate new business opportunities for AI technologies by understanding the technical capabilities of AI and the specific needs of potential clients across various industries. They act as strategic storytellers who build narratives that showcase how AI can solve real-world problems, ensuring a positive return on investment.

Among the different AI jobs, they play a crucial role in the growth of AI. Their job is primarily focused on getting businesses to see the potential of AI and invest in its growth, which benefits both those businesses and society as a whole. Given the pace of AI growth, it is a lucrative career path at the forefront of technological innovation.

Software Engineer

Potential pay range – US$66,000 to 168,000/yr

Software engineers have been part of the job market for a long time, designing, developing, testing, and maintaining software applications. However, with the rapid growth of AI in modern businesses, their role has become more complex and more important.

Their ability to bridge the gap between theory and application is crucial for bringing AI products to life. In 2025, this expertise is well-compensated, as software engineers specializing in AI create systems that are scalable, reliable, and user-friendly. As the demand for AI solutions continues to grow, so too will the need for skilled software engineers to build and maintain them.

Prompt Engineer

Potential pay range – US$32,000 to 95,000/yr

Prompt engineering is one of the AI jobs that took shape with the growth and development of AI itself. Acting as the bridge between humans and large language models (LLMs), prompt engineers bring a unique blend of creativity and technical understanding to create clear instructions for these models.

As LLMs are becoming more ingrained in various industries, prompt engineering has become a rapidly evolving AI job and its demand is expected to rise significantly in 2025. It’s a fascinating career path at the forefront of human-AI collaboration.

Interested to know more? Here are the top 5 must-know AI skills and jobs

The Potential and Future of AI Jobs

The world of AI is brimming with exciting career opportunities. From the strategic vision of AI product managers to the groundbreaking research of AI scientists, each role plays a vital part in shaping the future of this transformative technology. Some key factors that are expected to mark the future of AI jobs include:

- a rapid increase in demand

- a growing need for specialization and deeper expertise to tackle new challenges

- human-AI collaboration to unleash the full potential

- increasing focus on upskilling and reskilling to stay relevant and competitive

If you’re looking for a high-paying and intellectually stimulating career path, the AI field offers a wealth of options. This blog has just scratched the surface – consider this your launchpad for further exploration. With the right skills and dedication, you can be a part of the revolution and help unlock the immense potential of AI.