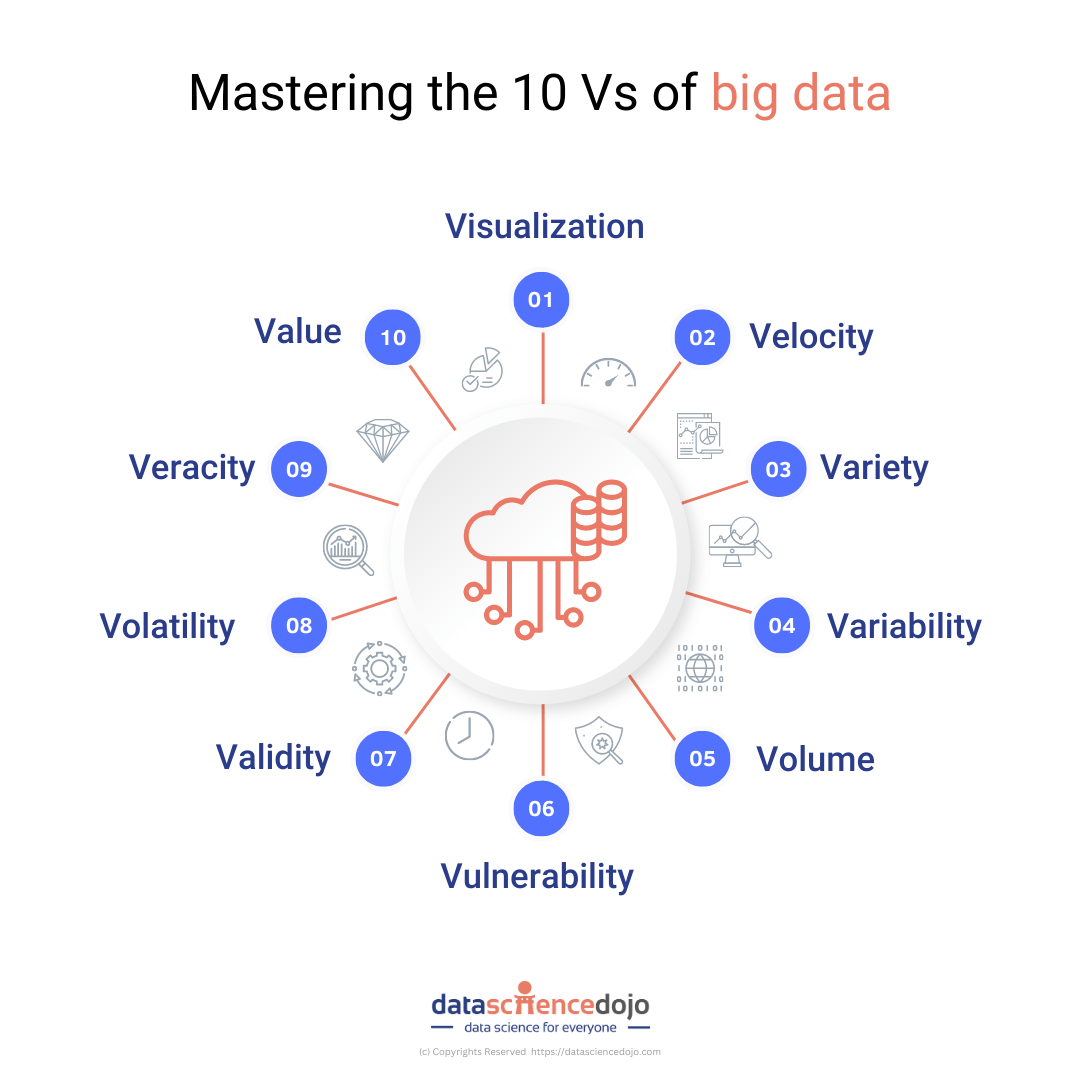

Big data is conventionally understood in terms of its scale. This one-dimensional approach, however, runs the risk of oversimplifying big data. In this blog, we discuss the 10 Vs as metrics to gauge the complexity of big data.

When we think of “big data,” it is easy to imagine a vast, intangible collection of customer information and relevant data required to grow your business. But the term “big data” isn’t only about size; it’s also about the potential to uncover valuable insights by considering a range of other characteristics. In other words, it’s not just about the amount of data we have, but also how we use and analyze it.

Volume

The most obvious feature is volume, which captures the sheer scale of a dataset. Consider, for example, the 40,000 apps added to the app store each year. Similarly, 40,000 searches are made on Google every second.

Big numbers carry the immediate appeal of big data. Whether it is the 2.2 billion active monthly users on Facebook or the 2.2 billion cups of coffee that are consumed in a single day, big numbers capture qualities about large swathes of the population, conveying insights that can feel universal in their scale.

As another example, consider the 294 billion emails being sent every day. In comparison, there are 300 billion stars in the Milky Way. Somehow, the largeness of these numbers in a human context can help us make better sense of otherwise unimaginable quantities like the stars in the Milky Way!

Velocity

In nearly all the examples considered above, velocity of the data was also an important feature. Velocity adds to volume, allowing us to grapple with data as a dynamic quantity. In big data it refers to how quickly data is generated and how fast it moves. It is one of the three Vs of big data, along with volume and variety. Velocity is important for businesses that need their data to be quickly available for making informed decisions.
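To make the link between volume and velocity concrete, the daily email figure quoted in the Volume section can be restated as a rate. The sketch below is plain arithmetic; the variable names are illustrative, and the 294 billion figure is taken from the text as given.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 seconds in a day

# Daily email count quoted in the Volume section (taken as given).
emails_per_day = 294_000_000_000

# Velocity view of the same number: how many emails are sent each second.
emails_per_second = emails_per_day / SECONDS_PER_DAY

print(f"{emails_per_second:,.0f} emails per second")
```

Expressed this way, the same dataset amounts to roughly 3.4 million emails every second, which is the figure a system would have to keep up with in real time.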

Variety

Variety refers to the many types of data that are constantly in circulation, and it is an integral quality of big data. Most of this data is unstructured, including the videos, audio, and phone recordings regularly shared over social media and instant messaging.

Then there is semi-structured data, about 10% of what is in circulation, including emails, web pages, and zipped files. Lastly, structured data, such as financial transactions, is comparatively rare.

Data types are a defining feature of big data as unstructured data needs to be cleaned and structured before it can be used for data analytics. In fact, the availability of clean data is among the top challenges facing data scientists. According to Forbes, most data scientists spend 60% of their time cleaning data.
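As a rough illustration of what "cleaning and structuring" can involve, the sketch below turns a few semi-structured JSON strings into uniform rows. The sample records, the field names (`user`, `amount`), and the `to_row` helper are all hypothetical and chosen only for this example; real pipelines are usually far more involved.

```python
import json

# Hypothetical semi-structured input: inconsistent spacing, types, and fields.
RAW_EVENTS = [
    '{"user": " Alice ", "amount": "12.50"}',
    '{"user": "bob", "amount": 7}',
    '{"user": "Carol"}',  # the amount field is missing entirely
]


def to_row(raw):
    """Parse one JSON string into a row with a fixed, predictable shape."""
    record = json.loads(raw)
    # Normalize the user name: strip whitespace and lowercase it.
    user = str(record.get("user", "")).strip().lower()
    # Coerce the amount to a float, keeping None when it is absent.
    amount = record.get("amount")
    amount = float(amount) if amount is not None else None
    return {"user": user, "amount": amount}


rows = [to_row(raw) for raw in RAW_EVENTS]
for row in rows:
    print(row)
```

Every row now has the same two keys and consistent types, which is the minimum most analytics tools expect before any analysis can begin.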

Variability

Variability is a measure of the inconsistencies in data and is often confused with variety. To understand variability, let us consider an example. You go to a coffee shop every day and purchase the same latte each day. However, it may smell or taste slightly or significantly different each day.

This kind of inconsistency in data is an important feature as it places limits on the reproducibility of data. This is particularly relevant in sentiment analysis, which is much harder for AI models than for humans because it requires an additional level of input: context.

An example of variability in big data can be seen when investigating how much time diverse groups of people spend on their phones each day. Data collected from different samples (high school students, college students, and adult full-time employees) can differ considerably. Another example is a soda shop whose blends are nominally the same but taste different from day to day.
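One simple way to put a number on this kind of inconsistency is to compare the spread of values within each group. The sketch below uses Python's standard `statistics` module; the daily phone-usage hours are invented purely for illustration and are not real survey data.

```python
import statistics

# Hypothetical daily phone usage in hours for three sampled groups.
usage_hours = {
    "high_school": [4.0, 6.0, 5.0],
    "college": [3.0, 5.0, 7.0],
    "full_time_employees": [2.0, 2.5, 3.0],
}

# For each group, compute the mean and the sample standard deviation.
summary = {
    group: {
        "mean": statistics.mean(hours),
        "stdev": statistics.stdev(hours),
    }
    for group, hours in usage_hours.items()
}

for group, stats in summary.items():
    print(f"{group}: mean={stats['mean']:.2f}h, stdev={stats['stdev']:.2f}h")
```

A larger standard deviation means the values within a group are less consistent, so conclusions drawn from that group are harder to reproduce.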

Variability also accounts for the inconsistent speed at which data is downloaded and stored across various systems, creating a unique experience for customers consuming the same data.

Veracity

Veracity refers to the reliability of the data source. Numerous factors can affect how reliable the input from a source is at a particular time and in a particular situation.

Veracity is particularly important for businesses making data-driven decisions, as the reproducibility of patterns relies heavily on the credibility of the initial data inputs.

Validity

Validity pertains to the accuracy of data for its intended use. For example, you may acquire a dataset that is only loosely related to your subject of inquiry, which increases the effort needed to draw meaningful relationships from it.

Volatility

Volatility refers to the time considerations placed on a particular data set. It involves considering if data acquired a year ago would be relevant for analysis for predictive modeling today. This is specific to the analyses being performed. Similarly, volatility also means gauging whether a particular data set is historic or not. Usually, data volatility comes under data governance and is assessed by data engineers.
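A volatility rule can be as simple as an age cutoff. The sketch below assumes a one-year window, echoing the "data acquired a year ago" example; the `is_still_relevant` helper and its `max_age_days` parameter are hypothetical, and the right threshold depends entirely on the analysis being performed.

```python
from datetime import date, timedelta


def is_still_relevant(collected_on, as_of, max_age_days=365):
    """Return True if the data is no older than max_age_days on the as_of date."""
    return (as_of - collected_on) <= timedelta(days=max_age_days)


# Fixed dates keep the example deterministic.
today = date(2023, 12, 31)

recent = is_still_relevant(date(2023, 1, 1), today)    # 364 days old
historic = is_still_relevant(date(2022, 1, 1), today)  # roughly two years old

print(recent, historic)
```

Records that fail the check are not necessarily useless; they may simply be treated as historic data and kept out of predictive models.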

Vulnerability

Big data is often about consumers. We often overlook the potential harm in sharing our shopping data, but the reality is that it can be used to uncover confidential information about an individual. For instance, Target accurately predicted a teenage girl’s pregnancy before her own parents knew it. To avoid such consequences, it’s important to be mindful of the information we share online.

Visualization

With a new data visualization tool being released every month or so, visualizing data is key to insightful results. The traditional x-y plot no longer suffices for the kind of complex detailing that goes into categorizations and patterns across various parameters obtained via big data analytics.

Value

Big data is nothing if it cannot produce meaningful value. Consider, again, the example of Target using a 16-year-old’s shopping habits to predict her pregnancy. While that case violated privacy, in most other cases the same kind of analysis can generate considerable customer value by showing people advertisements for the specific products they need.

Learn about 10 Vs of big data by George Firican

Enable smart decision making with big data visualization

The 10 Vs of big data are volume, velocity, variety, variability, veracity, validity, volatility, vulnerability, visualization, and value. Together, these characteristics help convey the complexity of big data.

Working with big data involves coding, although the depth of coding knowledge required is less than that expected of a programmer. Big Data and Data Science are two concepts that play a crucial role in enabling data-driven decision making. 90% of the world’s data has been created in the last two years, which gives a sense of how much data is being generated daily.

Companies employ data scientists to use data mining and big data to learn more about consumers and their behaviors. Both Data Mining and Big Data Analysis are major elements of data science.

Small Data, on the other hand, is collected in a more controlled manner, whereas Big Data refers to data sets that are too large or complex to be processed by traditional data processing applications.