Artificial intelligence has come a long way, and two of the biggest names in AI today are Google’s Gemini and OpenAI’s GPT-4. These two models represent cutting-edge advancements in natural language processing (NLP), machine learning, and multimodal AI. But what really sets them apart?

If you’ve ever wondered which AI model is better, how they compare in real-world applications, and why this battle between Google and OpenAI matters, you’re in the right place. Let’s break it all down in a simple way.

Understanding Gemini AI and GPT-4:

Before diving into the details, let’s get a clear picture of what Gemini and GPT4 actually are and why they’re making waves in the AI world.

What is Google Gemini?

Google Gemini is Google DeepMind’s latest AI model, designed as a direct response to OpenAI’s GPT 4. Unlike traditional text-based AI models, Gemini was built from the ground up as a multimodal AI, meaning it can seamlessly understand and generate text, images, audio, video, and even code.

Key Features of Gemini:

- Multimodal from the start – It doesn’t just process text; it can analyze images, audio, and even video in a single workflow.

- Advanced reasoning abilities – Gemini is designed to handle complex logic-based tasks better than previous models.

- Optimized for efficiency – Google claims that Gemini is more computationally efficient, meaning faster responses and lower energy consumption.

- Deep integration with Google products – Expect Gemini to be embedded into Google Search, Google Docs, and Android devices.

Google has released multiple versions of Gemini, including Gemini 1, Gemini Pro, and Gemini Ultra, each with varying levels of power and capabilities.

Also explore: Multimodality in LLMs

What is GPT-4?

GPT-4, developed by OpenAI, is one of the most advanced AI models currently in widespread use. It powers ChatGPT Plus, Microsoft’s Copilot (formerly Bing AI), and many enterprise AI applications. Unlike Gemini, GPT-4 was initially a text-based model, though later it received some multimodal capabilities through GPT-4V (Vision).

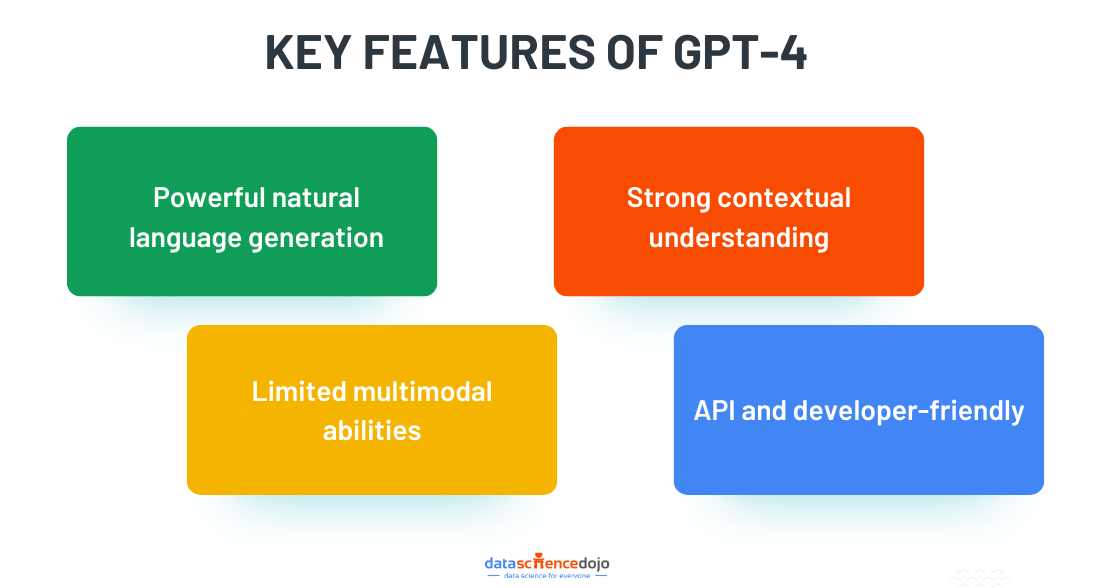

Key Features of GPT-4:

- Powerful natural language generation – GPT-4 produces high-quality, human-like responses across a wide range of topics.

- Strong contextual understanding – It retains long conversations better than previous versions and provides detailed, accurate responses.

- Limited multimodal abilities – GPT-4 can process images but lacks deep native multimodal integration like Gemini.

- API and developer-friendly – OpenAI provides robust API access, allowing businesses to integrate GPT-4 into their applications.

Key Objectives of Each Model

While both Gemini and GPT 4 aim to revolutionize AI interactions, their core objectives differ slightly:

- Google’s Gemini focuses on deep multimodal AI, meaning it was designed from the ground up to handle text, images, audio, and video together. Google also wants to integrate Gemini into its ecosystem, making AI a core part of Search, Android, and Workspace tools like Google Docs.

- OpenAI’s GPT-4 prioritizes high-quality text generation and conversational AI while expanding into multimodal capabilities. OpenAI has also emphasized API accessibility, making GPT-4 a preferred choice for developers building AI-powered applications.

Another interesting read on GPT-4o

Brief History and Development

- GPT-4 was released in March 2023, following the success of GPT-3.5. It was built on OpenAI’s transformer-based deep learning architecture, trained on an extensive dataset, and fine-tuned with human feedback for better accuracy and reduced bias.

- Gemini was launched in December 2023 as Google’s response to GPT-4. It was developed by DeepMind, a division of Google, and represents Google’s first AI model designed to be natively multimodal rather than having multimodal features added later.

Core Technological Differences Between Gemini AI and GPT-4

Both Gemini and GPT-4 are powerful AI models, but they have significant differences in how they’re built, trained, and optimized. Let’s break down the key technological differences between these two AI giants.

Architecture: Differences in Training Data and Structure

One of the most fundamental differences between Gemini and GPT-4 lies in their underlying architecture and training methodology:

- GPT-4 is based on a large-scale transformer model, similar to GPT-3, but with improvements in context retention, response accuracy, and text-based reasoning. It was trained on an extensive dataset, including books, articles, and internet data, but without real-time web access.

- Gemini, on the other hand, was designed natively as a multimodal AI model, meaning it was built from the ground up to process and integrate multiple data types (text, images, audio, video, and code). Google trained Gemini using its state-of-the-art AI infrastructure (TPUs) and leveraged Google’s vast search and real-time web data to enhance its capabilities.

Processing Capabilities: How Gemini and GPT-4 Generate Responses

The way these AI models process information and generate responses is another key differentiator:

- GPT-4 is primarily a text-based model with added multimodal abilities (through GPT-4V). It relies on token-based processing, meaning it generates responses one token at a time while predicting the most likely next word or phrase.

- Gemini, being multimodal from inception, processes and understands multiple data types simultaneously. This gives it a significant advantage when dealing with image recognition, complex problem-solving, and real-time data interpretation.

- Key Takeaway: Gemini’s ability to process different types of inputs at once gives it an edge in tasks that require integrated reasoning across different media formats.

Give it a read too: Claude vs ChatGPT

Model Size and Efficiency

While exact details of these AI models’ size and parameters are not publicly disclosed, Google has emphasized that Gemini is designed to be more efficient than previous models:

- GPT-4 is known to be massive, requiring high computational power and cloud-based resources. Its responses are highly detailed and context-aware, but it can sometimes be slower and more resource-intensive.

- Gemini was optimized for efficiency, meaning it requires fewer resources while maintaining high performance. Google’s Tensor Processing Units (TPUs) allow Gemini to run faster and more efficiently, especially in handling multimodal inputs.

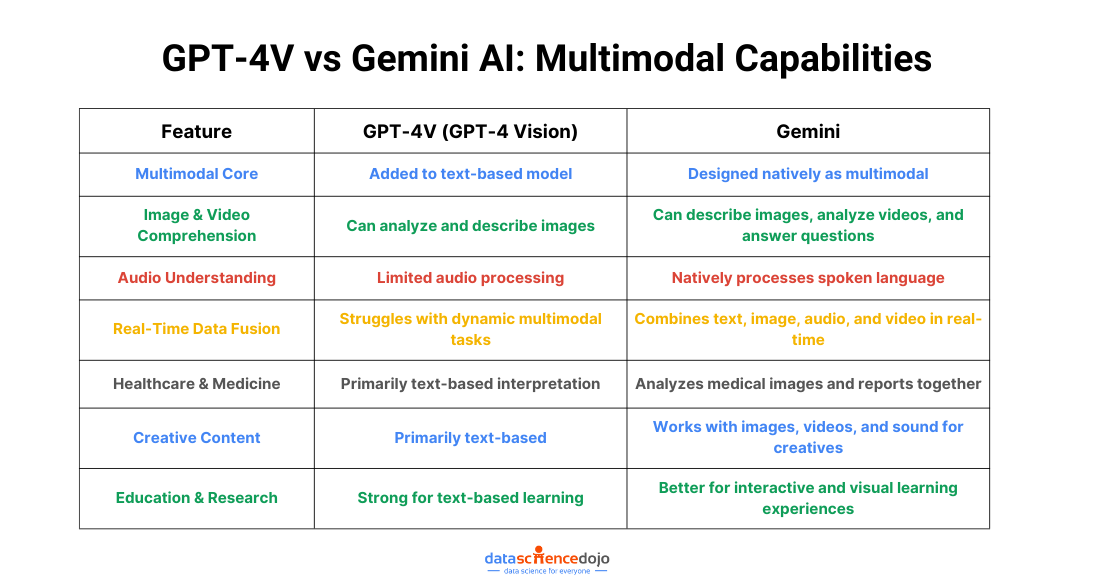

Multimodal Capabilities: Which Model Excels?

One of the biggest game-changers in AI development today is multimodal learning—the ability of an AI model to handle text, images, videos, and more within the same interaction. So, which model does this better?

How Gemini’s Native Multimodal AI Differs from GPT-4’s Approach

- GPT-4V (GPT-4 Vision) introduced some multimodal capabilities, allowing the model to analyze and describe images, but it’s not truly multimodal at its core. Instead, multimodal abilities were added on top of its existing text-based model.

- Gemini was designed natively as a multimodal AI, meaning it can seamlessly integrate text, images, audio, video, and code from the start. This makes it far more flexible in real-world applications, especially in fields like medicine, research, and creative AI development.

Image, Video, and Text Comprehension in Gemini

Gemini’s multimodal processing abilities allow it to:

- Interpret images and videos naturally – It can describe images, analyze video content, and even answer questions about what it sees.

- Understand audio inputs – Unlike GPT 4, Gemini can process spoken language natively, making it ideal for voice-based applications.

- Handle real-time data fusion – Gemini can combine text, image, and audio inputs in a single query, whereas GPT 4 struggles with dynamic, real-time multimodal tasks.

Real-World Applications of Multimodal AI

- Healthcare & Medicine: Gemini can analyze medical images and reports together, whereas GPT 4 primarily relies on text-based interpretation.

- Creative Content: Gemini’s ability to work with images, videos, and sound makes it a more versatile tool for artists, designers, and musicians.

- Education & Research: While GPT-4 is great for text-based learning, Gemini’s multimodal understanding makes it better for interactive and visual learning experiences.

Read more about AI in healthcare

Performance in Real-World Applications

Now that we’ve explored the technological differences between Gemini and GPT 4, let’s see how they perform in real-world applications. Whether you’re a developer, content creator, researcher, or business owner, understanding how these AI models deliver results in practical use cases is essential.

Coding Capabilities: Which AI is Better for Programming?

Both Gemini and GPT 4 can assist with programming, but they have different strengths and weaknesses when it comes to coding tasks:

GPT-4:

- GPT 4 is well-known for code generation, debugging, and code explanations.

- It supports multiple programming languages including Python, JavaScript, C++, and more.

- Its strong contextual understanding allows it to provide detailed explanations and optimize code efficiently.

- ChatGPT Plus users get access to GPT 4, making it widely available for developers.

Gemini:

- Gemini is optimized for complex reasoning tasks, which helps in solving intricate coding problems.

- It is natively multimodal, meaning it can interpret and analyze visual elements in code, such as debugging screenshots.

- Google has hinted that Gemini is more efficient at handling large-scale coding tasks, though real-world performance testing is still ongoing.

Also learn about the evolution of GPT series

Content Creation: Blogging, Storytelling, and Marketing Applications

AI-powered content creation is booming, and both Gemini and GPT 4 offer powerful tools for writers, marketers, and businesses.

GPT-4:

- Excellent at long-form content generation such as blogs, essays, and reports.

- Strong creative writing skills, making it ideal for storytelling and scriptwriting.

- Better at structuring marketing content like email campaigns and SEO-optimized articles.

- Fine-tuned for coherence and readability, reducing unnecessary repetition.

Gemini:

- More contextually aware when integrating images and videos into content.

- Potentially better for real-time trending topics, thanks to its live data access via Google.

- Can generate interactive content that blends text, visuals, and audio.

- Designed to be more energy-efficient, which may lead to faster response times in certain scenarios.

Scientific Research and Data Analysis: Accuracy and Depth

AI is playing a crucial role in scientific discovery, data interpretation, and academic research. Here’s how Gemini and GPT 4 compare in these areas:

GPT-4:

- Can analyze large datasets and provide text-based explanations.

- Good at summarizing complex research papers and extracting key insights.

- Has been widely tested in legal, medical, and academic fields for generating reliable responses.

Gemini:

- Designed for more advanced reasoning, which may help in hypothesis testing and complex problem-solving.

- Google’s access to live web data allows for more up-to-date insights in fast-moving fields like medicine and technology.

- Its multimodal abilities allow it to process visual data (such as graphs, tables, and medical scans) more effectively.

Read about the comparison of GPT 3 and GPT 4

The Future of AI: What’s Next for Gemini and GPT?

As AI technology evolves at a rapid pace, both Google and OpenAI are pushing the boundaries of what their models can do. The competition between Gemini and GPT-4 is just the beginning, and both companies have ambitious roadmaps for the future.

Google’s Roadmap for Gemini AI

Google has big plans for Gemini AI, aiming to make it faster, more powerful, and deeply integrated into everyday tools. Here’s what we know so far:

- Improved Multimodal Capabilities: Google is focused on enhancing Gemini’s ability to process images, video, and audio in more sophisticated ways. Future versions will likely be even better at understanding real-world context.

- Integration with Google Products: Expect Gemini-powered AI assistants to become more prevalent in Google Search, Android, Google Docs, and other Workspace tools.

- Enhanced Reasoning and Problem-Solving: Google aims to improve Gemini’s ability to handle complex tasks, making it more useful for scientific research, medical AI, and high-level business applications.

- Future Versions (Gemini Ultra, Pro, and Nano): Google has already introduced different versions of Gemini (Ultra, Pro, and Nano), with more powerful models expected soon to compete with OpenAI’s next-generation AI.

OpenAI’s Plans for GPT-5 and Future Enhancements

OpenAI is already working on GPT-5, which is expected to be a major leap forward. While official details remain scarce, here’s what experts anticipate:

You might also like: DALL·E, GPT-3, and MuseNet: A Comparison

- Better Long-Form Memory and Context Retention: One of the biggest improvements in GPT-5 could be better memory, allowing it to remember user interactions over extended conversations.

- More Advanced Multimodal Abilities: While GPT 4V introduced some image processing features, GPT-5 is expected to compete more aggressively with Gemini’s multimodal capabilities.

- Improved Efficiency and Cost Reduction: OpenAI is likely working on making GPT-5 faster and more cost-effective, reducing the computational overhead needed for AI processing.

- Stronger Ethical AI and Bias Reduction: OpenAI is continuously working on reducing biases and improving AI alignment, making future models more neutral and responsible.

Which AI Model Should You Choose?

Now that we’ve talked a lot about Gemini AI and GPT 4, the question remains: Which AI model is best for you? The answer depends on your specific needs and use cases.

Best Use Cases for Gemini vs. GPT-4

| Use Case | Best AI Model | Why? |

| Text-based writing & blogging | GPT 4 | GPT 4 provides more structured and coherent text generation. |

| Creative storytelling & scriptwriting | GPT 4 | Known for its strong storytelling and narrative-building abilities. |

| Programming & debugging | GPT-4 (currently) | Has been widely tested in real-world coding applications. |

| Multimodal applications (text, images, video, audio) | Gemini | Built for native multimodal processing, unlike GPT 4, which has limited multimodal capabilities. |

| Real-time information retrieval | Gemini | Access to Google Search allows for more up-to-date answers. |

| Business AI integration | Both | GPT-4 integrates well with Microsoft, while Gemini is built for Google Workspace. |

| Scientific research & data analysis | Gemini (for complex reasoning) | Better at processing visual data and multimodal problem-solving. |

| Security & ethical concerns | TBD | Both models are working on reducing biases, but ethical AI development is ongoing. |

Frequently Asked Questions (FAQs)

- What is the biggest difference between Gemini and GPT 4?

The biggest difference is that Gemini is natively multimodal, meaning it was built from the ground up to process text, images, audio, and video together. GPT-4, on the other hand, is primarily a text-based model with some added multimodal features (via GPT-4V).

- Is Gemini more powerful than GPT 4?

It depends on the use case. Gemini is more powerful in multimodal AI, while GPT-4 remains superior in text-based reasoning and structured writing tasks.

- Can Gemini replace GPT 4?

Not yet. GPT-4 has a stronger presence in business applications, APIs, and structured content generation, while Gemini is still evolving. However, Google’s fast-paced development could challenge GPT-4’s dominance in the future.

- Which AI is better for content creation?

GPT-4 is currently the best choice for blogging, marketing content, and storytelling, thanks to its highly structured text generation. However, if you need AI-generated multimedia content, Gemini may be the better option.

- How do these AI models handle biases and misinformation?

Both models have bias-mitigation techniques, but neither is completely free from bias. GPT 4 relies on human feedback tuning (RLHF), while Gemini pulls real-time data (which can introduce new challenges in misinformation filtering). Google and OpenAI are both working on improving AI ethics and fairness.

Conclusion

In the battle between Google’s Gemini AI and OpenAI’s GPT 4, the defining difference lies in their core capabilities and intended use cases. GPT-4 remains the superior choice for text-heavy applications, excelling in long-form content creation, coding, and structured responses, with strong API support and enterprise integration.

Gemini AI sets itself apart from GPT-4 with its native multimodal capabilities, real-time data access, and deep integration with Google’s ecosystem. Unlike GPT-4, which is primarily text-based, Gemini seamlessly processes text, images, video, and audio, making it more versatile for dynamic applications. Its ability to pull live web data and optimized efficiency on Google’s TPUs give it a significant edge. While GPT 4 excels in structured text generation, Gemini represents the next evolution of AI with a more adaptive, real-world approach.