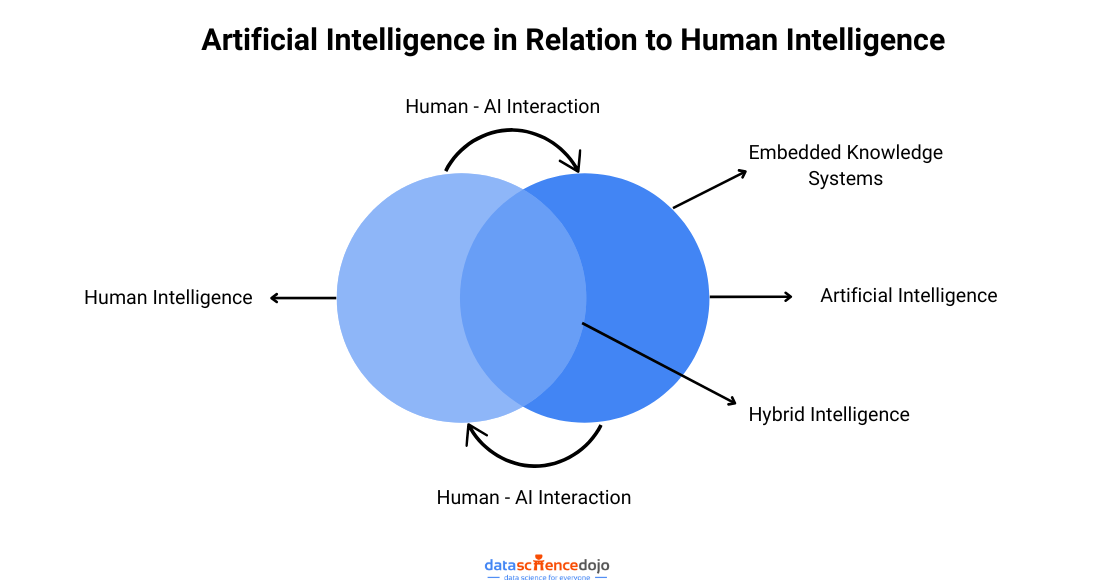

Ever talked to a computer and felt like it really got you? That’s the magic of human-computer interaction powered by Large Language Models (LLMs). These advanced AIs, like GPT-3, can chat, answer questions, and even write stories that sound almost human.

But while it all seems amazing, there’s more to the story. How do these models actually work? And what challenges come with using them in our everyday lives?

In this blog, we’ll dive into how LLMs are changing the way we interact with computers, making conversations smoother and smarter — and we’ll also explore some of the tricky parts that come with this powerful technology.

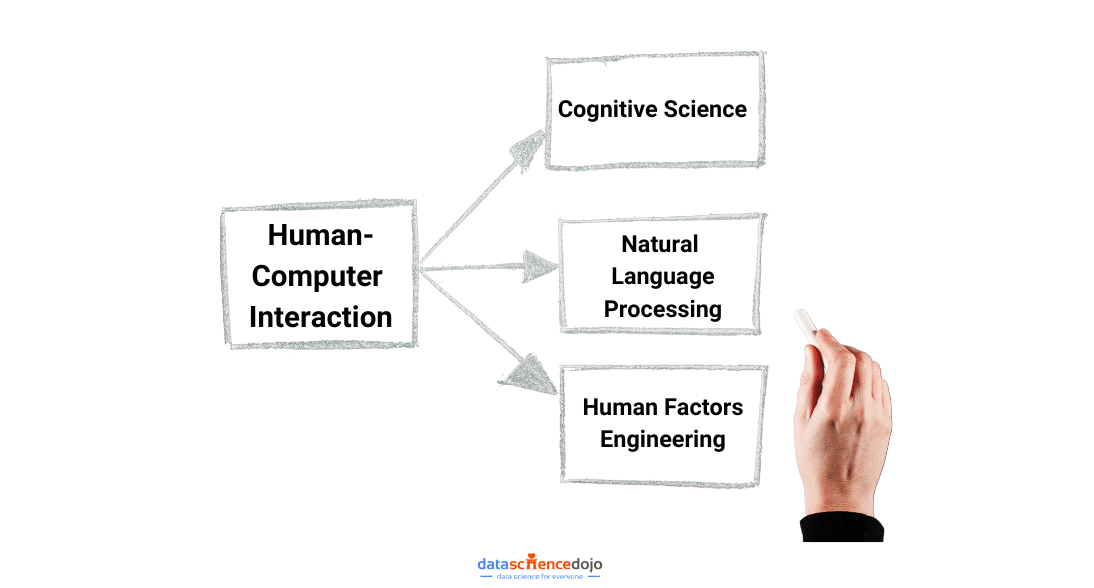

Human-Computer Interaction: How LLMs Master Language at Scale?

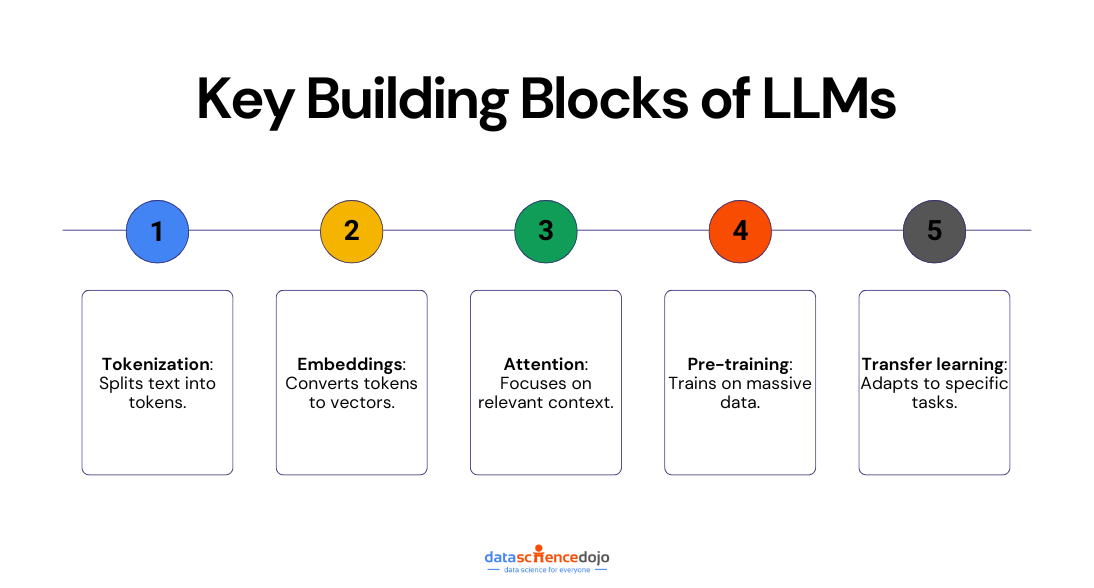

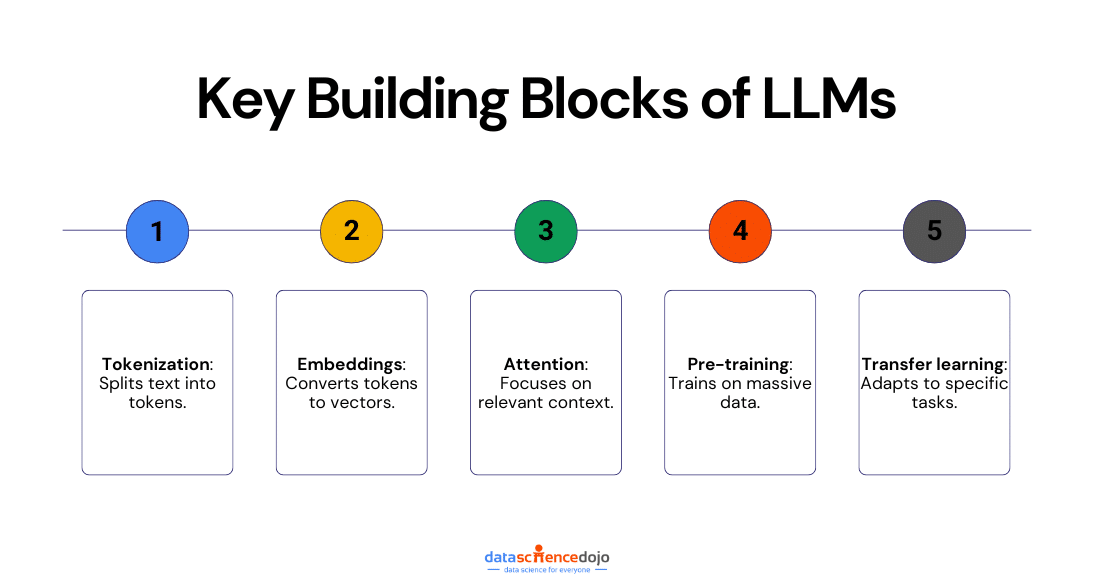

At their core, LLMs are intricate neural networks engineered to comprehend and craft human language on an extraordinary scale. These colossal models ingest vast and diverse datasets, spanning literature, news, and social media dialogues from the internet.

Their primary mission? Predicting the next word or token in a sentence based on the preceding context. Through this predictive prowess, they acquire grammar, syntax, and semantic acumen, enabling them to generate coherent, contextually fitting text.

This training involves repeatedly adjusting the network's parameters, which can number in the billions, so that the model becomes steadily better at spotting patterns and associations within the data.
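To make next-word prediction concrete, here is a deliberately tiny sketch. It is not how an LLM is built: it swaps the neural network for simple word-pair counts (a bigram model), and the function names and sample corpus are made up for illustration. The underlying idea is the same, though: learn from text which word tends to follow the current context.

```python
from collections import Counter, defaultdict
from typing import Dict, Optional


def train_bigram_model(corpus: str) -> Dict[str, Counter]:
    """Count, for each word, which words follow it in the corpus."""
    tokens = corpus.lower().split()
    following: Dict[str, Counter] = defaultdict(Counter)
    for current_word, next_word in zip(tokens, tokens[1:]):
        following[current_word][next_word] += 1
    return following


def predict_next(model: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if the word was never seen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Toy corpus (an assumption for this example, not real training data).
corpus = "the cat sat on the mat . the cat ate the fish ."
model = train_bigram_model(corpus)
print(predict_next(model, "the"))  # prints "cat": it follows "the" twice, more than any other word
```

A real LLM conditions on a much longer context than one word and learns its predictions through parameter updates rather than counting, but the task it is trained on has this same shape.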

Consequently, when prompted with text, these models draw on the patterns learned during training to produce human-like responses, serving diverse applications from language understanding to content creation.

Challenges of Large Language Models

Such power also raises valid concerns that deserve closer scrutiny.

Ethical Concerns Surrounding Large Language Models:

Large Language Models (LLMs) like GPT-3 raise numerous ethical and social concerns that need careful consideration.

These transformative AI systems, while undeniably powerful, have cast a spotlight on a spectrum of concerns that extend beyond their technical capabilities. Here are some of the key concerns:

1. Bias and Fairness:

LLMs are often trained on large datasets that may contain biases present in the text. This can lead to models generating biased or unfair content. Addressing and mitigating bias in LLMs is a critical concern, especially when these models are used in applications that impact people’s lives, such as in hiring processes or legal contexts.

In 2016, Microsoft launched a chatbot called Tay on Twitter. Tay was designed to learn from its interactions with users and become more human-like over time. However, within hours of being launched, Tay was flooded with racist and sexist language. As a result, Tay began to repeat this language, and Microsoft was forced to take it offline.

2. Misinformation and Disinformation:

One of the gravest concerns surrounding the deployment of Large Language Models (LLMs) is their capacity to produce highly persuasive fake news articles, disinformation, and propaganda.

These AI systems possess the capability to fabricate text that closely mirrors the style, tone, and formatting of legitimate news reports, official statements, or credible sources. This issue was brought forward in this research.

3. Dependency and Deskilling:

Excessive reliance on Large Language Models (LLMs) for various tasks presents multifaceted concerns, including the erosion of critical human skills. Overdependence on AI-generated content may diminish individuals’ capacity to perform tasks independently and reduce their adaptability in the face of new challenges.

In scenarios where LLMs are employed as decision-making aids, there’s a risk that individuals may become overly dependent on AI recommendations. This can impair their problem-solving abilities, as they may opt for AI-generated solutions without fully understanding the underlying rationale or engaging in critical analysis.

4. Privacy and Security Threats:

Large Language Models (LLMs) pose significant privacy and security threats due to their capacity to inadvertently leak sensitive information, profile individuals, and re-identify anonymized data. They can be exploited for data manipulation, social engineering, and impersonation, leading to privacy breaches, cyberattacks, and the spread of false information.

LLMs enable the generation of malicious content, automation of cyberattacks, and obfuscation of malicious code, elevating cybersecurity risks. Addressing these threats requires a combination of data protection measures, cybersecurity protocols, user education, and responsible AI development practices to ensure the responsible and secure use of LLMs.

5. Lack of Accountability:

The lack of accountability in the context of Large Language Models (LLMs) arises from the inherent challenge of determining responsibility for the content they generate. This issue carries significant implications, particularly within legal and ethical domains.

When AI-generated content is involved in legal disputes, it becomes difficult to assign liability or establish an accountable party, which can complicate legal proceedings and hinder the pursuit of justice.

Moreover, in ethical contexts, the absence of clear accountability mechanisms raises concerns about the responsible use of AI, potentially enabling malicious or unethical actions without clear repercussions.

Thus, addressing this accountability gap is essential to ensure transparency, fairness, and ethical standards in the development and deployment of LLMs.

6. Filter Bubbles and Echo Chambers:

Large Language Models (LLMs) can contribute to filter bubbles and echo chambers by generating content that aligns with users’ existing beliefs, limiting exposure to diverse viewpoints.

This can hinder healthy public discourse by isolating individuals within their preferred information bubbles and reducing engagement with opposing perspectives, posing challenges to shared understanding and constructive debate in society.

Navigating the Solutions: Mitigating Flaws in Large Language Models

As we delve deeper into the world of AI and language technology, it’s crucial to confront the challenges posed by Large Language Models (LLMs). In this section, we’ll explore innovative solutions and practical approaches to address the flaws we discussed.

Our goal is to harness the potential of LLMs while safeguarding against their negative impacts. Let’s dive into these solutions for responsible and impactful use.

1. Set Clear Ethical Guidelines:

Establish comprehensive and ongoing bias audits of LLMs during development. This involves reviewing training data for biases, diversifying training datasets, and implementing algorithms that reduce biased outputs. Include diverse perspectives in AI ethics and development teams and promote transparency in the fine-tuning process.

Guardrails AI can enforce policies designed to mitigate bias in LLMs by establishing predefined fairness thresholds. For example, it can restrict the model from generating content that includes discriminatory language or perpetuates stereotypes. It can also encourage the use of inclusive and neutral language.

Guardrails serve as a proactive layer of oversight and control, enabling real-time intervention and promoting responsible, unbiased behavior in LLMs.
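As a rough illustration of what such a policy check might look like, here is a minimal sketch of an output filter that sits between the model and the user. It does not use the Guardrails AI library or reproduce its API. The names `check_output` and `guarded_generate` and the placeholder term list are assumptions made for this example, and a production system would more likely rely on a trained classifier than a word list.

```python
import re
from dataclasses import dataclass, field
from typing import Callable, List, Sequence

# Hypothetical placeholder terms; a real policy would use a curated lexicon or a classifier.
BLOCKED_TERMS = ("blockedterm1", "blockedterm2")


@dataclass
class GuardrailResult:
    text: str
    allowed: bool
    violations: List[str] = field(default_factory=list)


def check_output(text: str, blocked_terms: Sequence[str] = BLOCKED_TERMS) -> GuardrailResult:
    """Flag any blocked term that appears as a whole word (case-insensitive)."""
    violations = [
        term for term in blocked_terms
        if re.search(rf"\b{re.escape(term)}\b", text, flags=re.IGNORECASE)
    ]
    return GuardrailResult(text=text, allowed=not violations, violations=violations)


def guarded_generate(generate: Callable[[str], str], prompt: str) -> str:
    """Call the model, then withhold the response if the policy check fails."""
    result = check_output(generate(prompt))
    if result.allowed:
        return result.text
    return "[response withheld: policy violation]"
```

Here `generate` stands in for whatever function calls the model. Keeping the check outside the model means the policy can be updated without retraining anything.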

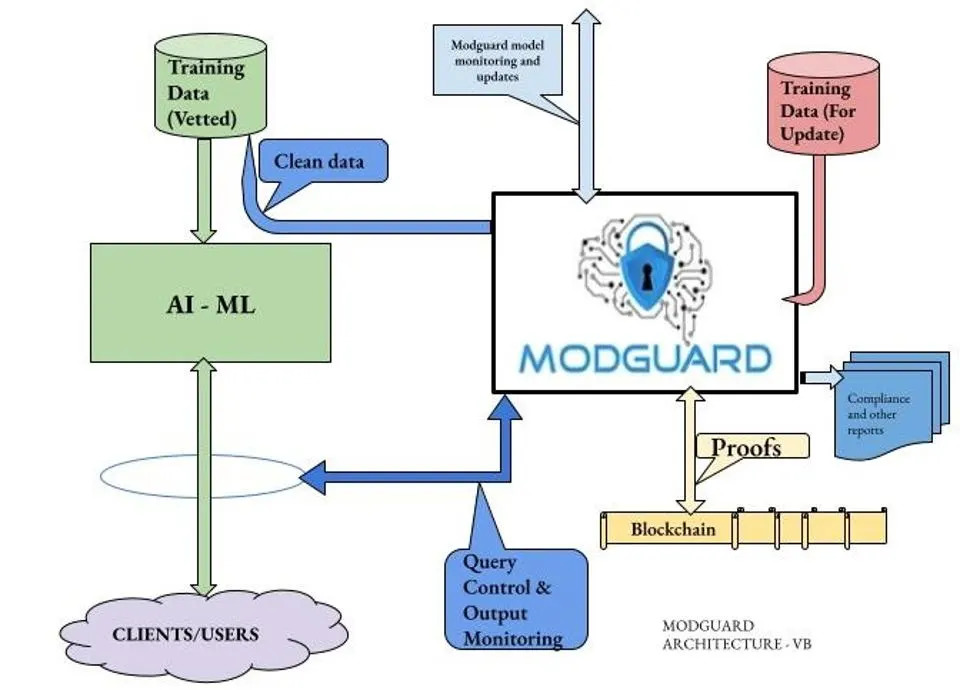

The architecture of an AI-based guardrail system

2. Prevent Misinformation Spread:

Develop and promote robust fact-checking tools and platforms to counter misinformation. Encourage responsible content generation practices by users and platforms. Collaborate with organizations that specialize in identifying and addressing misinformation.

Enhance media literacy and critical thinking education to help individuals identify and evaluate credible sources.

Additionally, Guardrails can combat misinformation in Large Language Models (LLMs) by implementing real-time fact-checking algorithms that flag potentially false or misleading information, restricting the dissemination of such content without additional verification.

These guardrails work in tandem with the LLM, allowing for the immediate detection and prevention of misinformation, thereby enhancing the model’s trustworthiness and reliability in generating accurate information.

3. Promote Human-AI Collaboration:

To address dependency and deskilling caused by over-reliance on AI systems, it’s essential to promote human-AI collaboration that augments human abilities rather than replacing them. This means positioning AI, including LLMs, as a supportive tool that empowers users to be more productive, creative, and efficient, rather than making them passive recipients of AI-generated outputs.

Organizations should invest in lifelong learning and reskilling programs to help individuals adapt to the rapid advancements in AI. These programs can equip users with the skills to critically engage with AI tools, ensuring they retain essential decision-making and problem-solving capabilities.

Additionally, fostering a culture of responsible AI use is crucial. Users should be encouraged to view AI as an enhancement tool—a partner in their tasks—rather than a complete solution. This mindset shift can help prevent skill degradation and maintain human agency in decision-making processes.

4. Strengthening Data Privacy & AI Security:

Strengthen data anonymization techniques to protect sensitive information. Implement robust cybersecurity measures to safeguard against AI-generated threats. Develop and adhere to ethical AI development standards so that privacy and security remain paramount considerations.

Moreover, Guardrails can enhance privacy and security in Large Language Models (LLMs) by enforcing strict data anonymization techniques during model operation, implementing robust cybersecurity measures to safeguard against AI-generated threats, and educating users on recognizing and handling AI-generated content that may pose security risks.

These guardrails provide continuous monitoring and protection, ensuring that LLMs prioritize data privacy and security in their interactions, contributing to a safer and more secure AI ecosystem.
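One simple form of anonymization during model operation is to redact obvious personal identifiers before text reaches the model or gets logged. The sketch below assumes only two identifier types (email addresses and US-style phone numbers) and uses simplified regular expressions. The function name `redact_pii` is made up for this example, and the patterns will miss many real-world formats.

```python
import re

# Simplified, illustrative patterns; they will not catch every real-world format.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_PATTERN = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")


def redact_pii(text: str) -> str:
    """Replace email addresses and US-style phone numbers with placeholders."""
    text = EMAIL_PATTERN.sub("[EMAIL]", text)
    text = PHONE_PATTERN.sub("[PHONE]", text)
    return text


prompt = "Contact Jane at jane.doe@example.com or 555-123-4567."
print(redact_pii(prompt))  # prints "Contact Jane at [EMAIL] or [PHONE]."
```

Note that the name "Jane" passes through untouched. Catching names, addresses, and other free-form identifiers generally needs named-entity recognition rather than pattern matching.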

5. Enforcing AI Accountability:

Establish clear legal frameworks for AI accountability, addressing issues of responsibility and liability. Develop digital signatures and metadata for AI-generated content to trace sources.

Promote transparency in AI development by documenting processes and decisions. Encourage industry-wide standards for accountability in AI use. Guardrails can address the lack of accountability in Large Language Models (LLMs) by enforcing transparency through audit trails that record model decisions and actions, thereby holding AI accountable for its outputs.
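To give a sense of how signed metadata and audit trails could fit together, here is a minimal sketch using Python's standard `hmac` and `hashlib` modules. The record fields, the function names, and the hard-coded key are all assumptions for illustration. A real deployment would load the key from a secrets manager and might prefer public-key signatures so that third parties can verify records.

```python
import hashlib
import hmac
import json
from datetime import datetime, timezone

# Hypothetical key for illustration only; never hard-code a real secret.
SECRET_KEY = b"replace-with-a-real-secret"


def sign_record(record: dict, key: bytes = SECRET_KEY) -> str:
    """Compute an HMAC-SHA256 signature over a canonical JSON encoding of the record."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def make_audit_record(model_id: str, prompt: str, output: str) -> dict:
    """Build a signed audit entry linking an output to the model that produced it."""
    record = {
        "model_id": model_id,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        # Store hashes rather than raw text so the log itself does not leak content.
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "output_sha256": hashlib.sha256(output.encode("utf-8")).hexdigest(),
    }
    record["signature"] = sign_record(record)
    return record


def verify_audit_record(record: dict, key: bytes = SECRET_KEY) -> bool:
    """Recompute the signature over every field except the signature itself."""
    unsigned = {k: v for k, v in record.items() if k != "signature"}
    return hmac.compare_digest(record.get("signature", ""), sign_record(unsigned, key))
```

If any field of a stored record is altered after the fact, `verify_audit_record` returns `False`, which is what makes the trail useful as evidence of what a model produced and when.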

6. Encourage Interdisciplinary Collaboration:

Promote diverse content recommendation algorithms that expose users to a variety of perspectives. Encourage cross-platform information sharing to break down echo chambers. Invest in educational initiatives that expose individuals to diverse viewpoints and promote critical thinking to combat the spread of filter bubbles and echo chambers.
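One very simple way a recommender could expose users to a variety of perspectives is to interleave results across viewpoints instead of showing only the top-scoring cluster. The sketch below assumes each item already carries a viewpoint label, which in practice is the hard part. The function name `diversify` and the sample data are made up for this example.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, List, Tuple


def diversify(ranked_items: List[Tuple[str, str]], limit: int) -> List[str]:
    """Interleave items across viewpoint labels, preserving rank within each label.

    ranked_items: (title, viewpoint_label) pairs, best first.
    """
    queues: Dict[str, Deque[str]] = defaultdict(deque)
    for title, label in ranked_items:
        queues[label].append(title)

    selected: List[str] = []
    # Take one item per label in turn until the limit is reached or nothing is left.
    while len(selected) < limit and any(queues.values()):
        for label in list(queues):
            if queues[label] and len(selected) < limit:
                selected.append(queues[label].popleft())
    return selected


# Hypothetical ranked feed: three items from viewpoint "a" outrank everything else.
items = [("A1", "a"), ("A2", "a"), ("A3", "a"), ("B1", "b"), ("C1", "c")]
print(diversify(items, 3))  # prints ['A1', 'B1', 'C1']
```

Without the interleaving step, the top three slots would all go to viewpoint "a". The round-robin trades some relevance for breadth, which is the balance any diversity-aware ranking has to strike.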

In a Nutshell

The path forward requires vigilance, collaboration, and an unwavering commitment to harness the power of LLMs while mitigating their pitfalls.

By championing fairness, transparency, and responsible AI use, we can unlock a future where these linguistic giants elevate society, enabling us to navigate the evolving digital landscape with wisdom and foresight. The use of Guardrails for AI is paramount in AI applications, safeguarding against misuse and unintended consequences.

The journey continues, and it’s one we embark upon with the collective goal of shaping a better, more equitable, and ethically sound AI-powered world.