Mastering large language models (LLMs) is a valuable skill for AI practitioners, developers, and data scientists working in the rapidly evolving field of artificial intelligence. Whether you’re fine-tuning models, building intelligent applications, or refining your prompt engineering, knowing how to use LLMs effectively can set you apart.

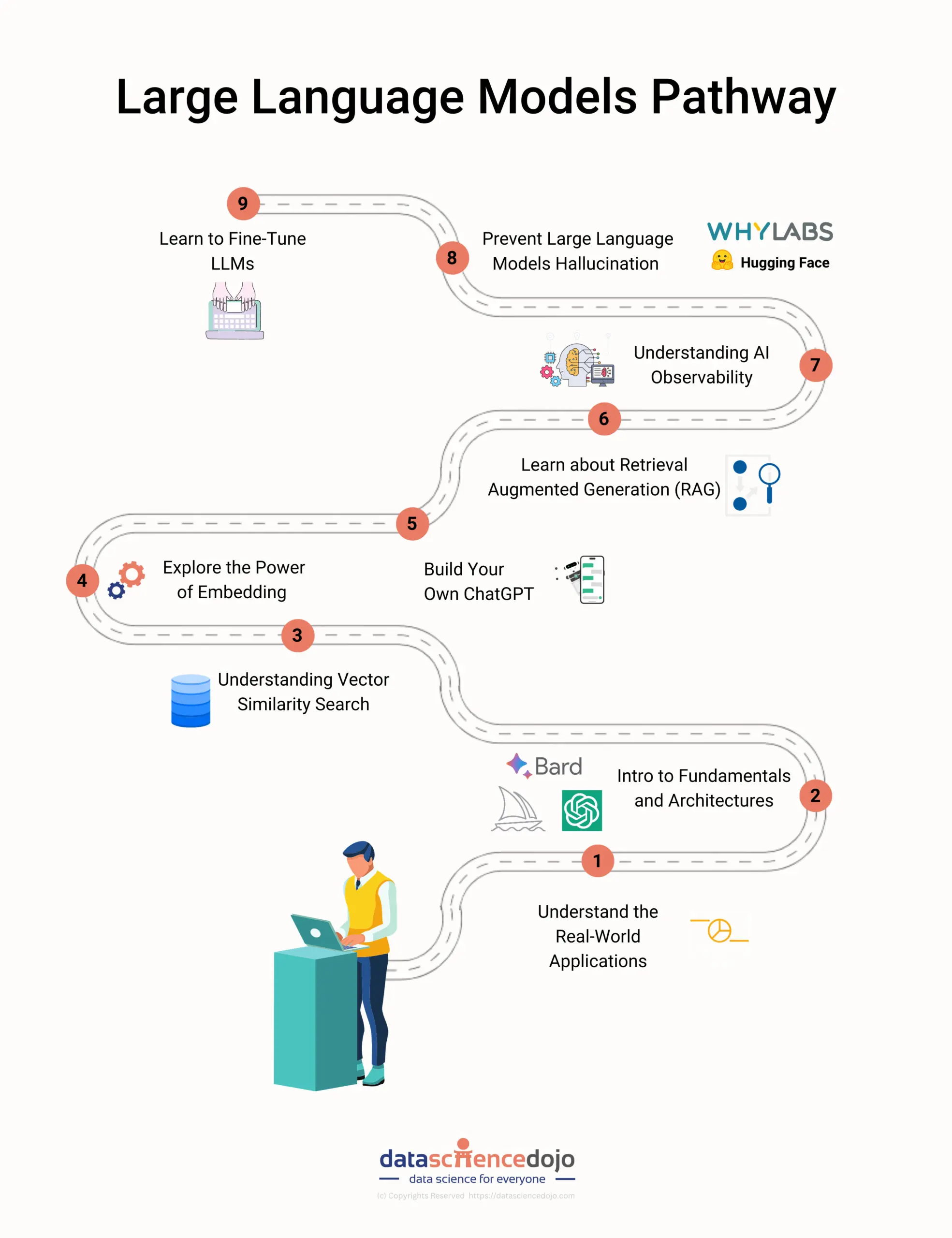

In this blog, we’ll walk you through the key steps to becoming an LLM expert, from understanding foundational concepts to implementing advanced techniques. This structured roadmap is meant to be useful whether you’re just starting out or refining existing expertise.

Want to build a custom LLM application? Check out our in-person Large Language Model Bootcamp.

Becoming an LLM expert requires more than theoretical knowledge; it demands a working understanding of model architectures, training methodologies, and best practices. To help you navigate this journey, we’ve outlined a 7-step approach to mastering LLMs:

Step 1: Understand LLM Basics

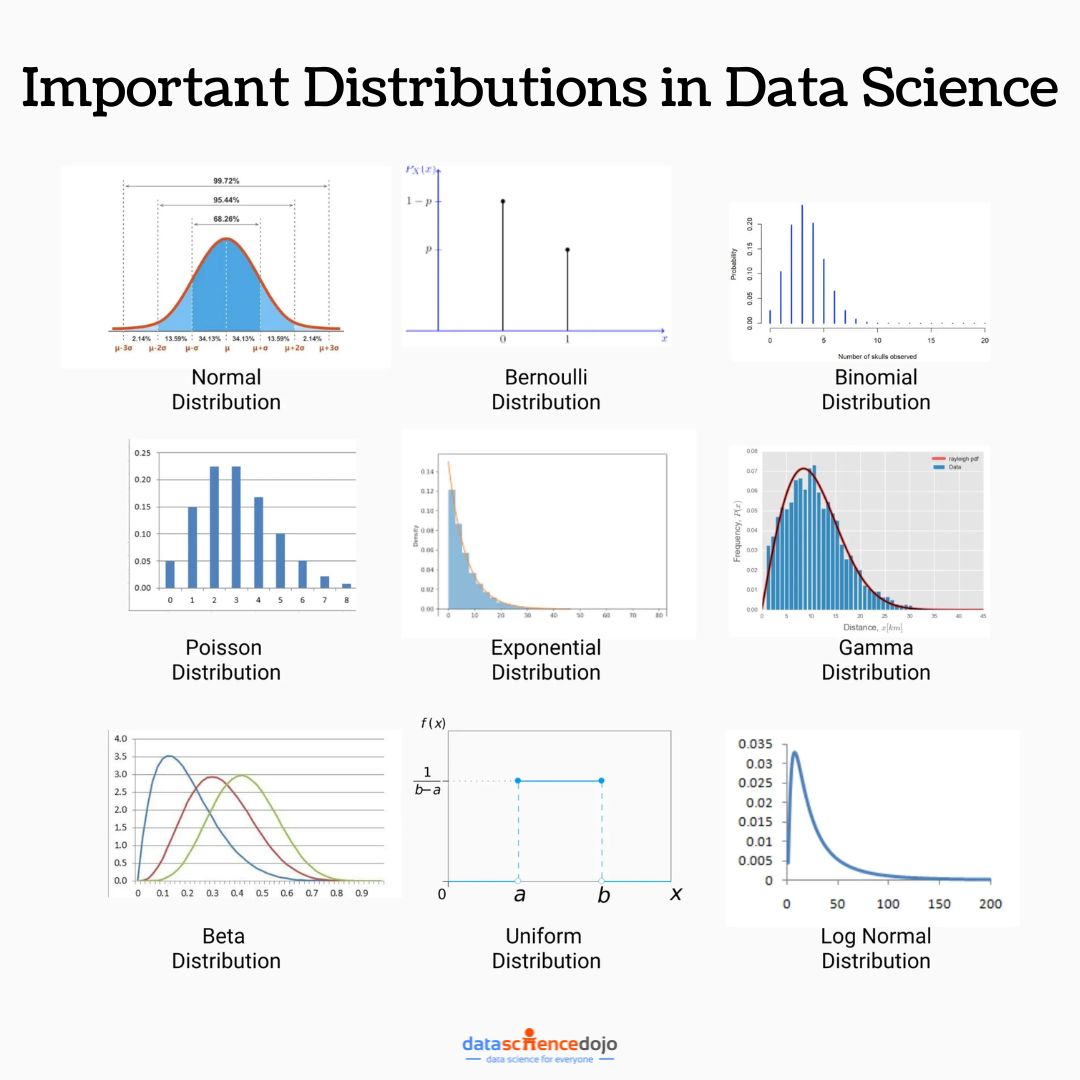

Before diving into the complexities of Large Language models, it’s crucial to establish a solid foundation in the fundamental concepts. This includes understanding the following:

- Natural Language Processing (NLP): NLP is the field of computer science that deals with the interaction between computers and human language. It encompasses tasks like machine translation, text summarization, and sentiment analysis.

Read more about attention mechanisms in natural language processing

- Deep Learning: LLMs are powered by deep learning, a subfield of machine learning that utilizes artificial neural networks to learn from data. Familiarize yourself with the concepts of neural networks, such as neurons, layers, and activation functions.

- Transformer: The transformer architecture is a cornerstone of modern LLMs. Understand the components of the transformer architecture, including self-attention, encoder-decoder architecture, and positional encoding.
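To make self-attention more concrete, here is a minimal sketch of scaled dot-product attention in plain Python. It is an illustration under simplifying assumptions, not a production implementation: the function names and the toy input are made up for this example, and a real transformer would first compute queries, keys, and values through learned linear projections and would use a tensor library.

```python
import math


def softmax(scores):
    """Turn a list of raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        # The output is the attention-weighted average of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
    return outputs


# Toy input (assumed for illustration): three 2-dimensional token vectors
# used directly as queries, keys, and values.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
attended = self_attention(tokens, tokens, tokens)
```

Each output row is a blend of all the value vectors, weighted by how strongly that token's query matches each key.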

Step 2: Explore LLM Architectures

Large language models come in various architectures, each with its own strengths and limitations. Explore different LLM architectures, such as:

- BERT (Bidirectional Encoder Representations from Transformers): BERT is a widely used LLM that excels in natural language understanding tasks, such as question answering and sentiment analysis.

- GPT (Generative Pre-trained Transformer): GPT is known for its ability to generate fluent, human-like text, making it suitable for tasks like creative writing and chatbots.

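- XLNet (Generalized Autoregressive Pretraining for Language Understanding): XLNet is an autoregressive model that uses permutation language modeling to capture bidirectional context. It was designed to address some of BERT’s limitations, such as the mismatch introduced by the artificial [MASK] token, which appears during pre-training but not during fine-tuning.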
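One concrete way to see the difference between an encoder such as BERT and a decoder such as GPT is the attention mask each one uses. The sketch below builds both masks as nested lists, where 1 means "may attend" and 0 means "blocked". The function names are hypothetical and chosen only for this illustration.

```python
def bidirectional_mask(n):
    """Every position may attend to every other position (BERT-style encoder)."""
    return [[1] * n for _ in range(n)]


def causal_mask(n):
    """Position i may attend only to positions 0..i (GPT-style decoder)."""
    return [[1 if j <= i else 0 for j in range(n)] for i in range(n)]


# Print the causal mask for a 4-token sequence, one row per query position.
for row in causal_mask(4):
    print(row)
```

The lower-triangular shape of the causal mask is what lets a decoder generate text left to right without looking at future tokens.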

Step 3: Pre-Training LLMs

Pre-training is a crucial step in the development of LLMs. It involves training the LLM on a massive dataset of text and code to learn general language patterns and representations. Explore different pre-training techniques, such as:

- Masked Language Modeling (MLM): In MLM, random words are masked in the input text, and the LLM is tasked with predicting the missing words.

- Next Sentence Prediction (NSP): In NSP, the LLM is given two sentences and asked to determine whether they are consecutive sentences from a text or not.

- Contrastive Language-Image Pre-training (CLIP): CLIP is a multimodal technique rather than a text-only one. It trains a text encoder and an image encoder together so that text descriptions are matched with their corresponding images.
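As an illustration of masked language modeling, the sketch below prepares one training example by hiding a random subset of tokens and recording the originals as prediction targets. It is deliberately simplified: it works on whitespace-split words rather than subword tokens, and it always substitutes a mask token, whereas BERT's original recipe selects about 15% of tokens and replaces some of them with random or unchanged tokens instead. The names `mask_tokens` and `MASK_TOKEN` are assumptions for this example.

```python
import random

MASK_TOKEN = "[MASK]"


def mask_tokens(tokens, mask_prob=0.15, seed=None):
    """Return (masked_tokens, labels) for one MLM training example.

    labels[i] holds the original token where a mask was applied and
    None elsewhere, so the loss is computed only at masked positions.
    """
    rng = random.Random(seed)
    masked = list(tokens)
    labels = [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if rng.random() < mask_prob:
            masked[i] = MASK_TOKEN
            labels[i] = tok
    return masked, labels


sentence = "the cat sat on the mat".split()
masked, labels = mask_tokens(sentence, mask_prob=0.5, seed=0)
print(masked)
print(labels)
```

During pre-training, the model sees `masked` as input and is scored on how well it recovers the non-`None` entries of `labels`.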

Step 4: Fine-Tuning LLMs

Fine-tuning involves adapting a pre-trained LLM to a specific task or domain. This is done by training the LLM on a smaller dataset of task-specific data. Explore different fine-tuning techniques, such as:

Learn more about fine-tuning Large Language models

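- Task-specific loss functions: Define loss functions that align with the specific task, such as cross-entropy loss for classification or token-level cross-entropy for translation. Metrics like accuracy and BLEU score are not differentiable, so they are used to evaluate the fine-tuned model rather than to train it.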

- Data augmentation: Augment the task-specific dataset to improve the LLM’s generalization ability.

- Early stopping: Implement early stopping to prevent overfitting and optimize the LLM’s performance.
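Early stopping can be sketched in a few lines. The example below assumes you already have one validation loss per epoch (here a hard-coded, made-up list) and stops once the loss has failed to improve for `patience` consecutive epochs. The function name and the sample numbers are illustrative only; in a real fine-tuning run the losses would come from evaluating on a held-out set after each epoch.

```python
def early_stopping_epoch(val_losses, patience=2):
    """Return (best_epoch, best_loss), stopping once validation loss has
    not improved for `patience` consecutive epochs."""
    best_loss = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            # New best: remember it and reset the patience counter.
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # stop training; later epochs are never run
    return best_epoch, best_loss


# Hypothetical validation losses, one per epoch.
history = [0.90, 0.70, 0.65, 0.66, 0.68, 0.60]
print(early_stopping_epoch(history))  # (2, 0.65)
```

With `patience=2`, training stops after epoch 4 and the checkpoint from epoch 2 is kept. The lower loss at epoch 5 is never reached, which shows the trade-off involved in choosing a patience value.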

The talk below can help you get started with fine-tuning GPT-3.5 Turbo: https://www.youtube.com/watch?v=acRsajB6d4w

Step 5: Alignment and Post-Training

Alignment and post-training help ensure that large language models behave in line with human values and ethical considerations. This includes:

- Bias mitigation: Identify and mitigate biases in the LLM’s training data and outputs.

- Fairness evaluation: Evaluate the fairness of the LLM’s decisions and identify potential discriminatory patterns.

- Explainability: Develop methods to explain the LLM’s reasoning and decision-making processes.
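As one possible starting point for fairness evaluation, the sketch below compares how often a model produces a positive outcome for different groups, a measure often called the demographic parity gap. This is only one of many fairness criteria, and the group labels, predictions, and function names are invented for illustration.

```python
from collections import defaultdict


def positive_rate_by_group(predictions, groups):
    """Fraction of positive (1) predictions within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(predictions, groups):
    """Largest difference in positive rate between any two groups."""
    rates = positive_rate_by_group(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical binary model decisions and the group each example belongs to.
preds = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
print(positive_rate_by_group(preds, groups))
print(demographic_parity_gap(preds, groups))
```

A large gap does not prove discrimination on its own, but it flags a pattern worth investigating in the training data and outputs.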

Step 6: Evaluating LLMs

Evaluating LLMs is crucial to assess their performance and identify areas for improvement. Explore different evaluation metrics, such as:

- Accuracy: Measure the proportion of correct predictions for classification tasks.

- Fluency: Assess the naturalness and coherence of the LLM’s generated text.

- Relevance: Evaluate the relevance of the LLM’s outputs to the given prompts or questions.
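The three metrics above can each be approximated with a small function. The sketch below is a rough illustration, not a standard benchmark suite: accuracy is computed directly, perplexity is shown as a common proxy for fluency (assuming you can obtain the probability the model assigned to each token), and token-overlap F1 against a reference answer stands in as a crude proxy for relevance. All function names and inputs are assumptions made for this example.

```python
import math
from collections import Counter


def accuracy(predictions, labels):
    """Proportion of predictions that match the labels."""
    if not labels:
        raise ValueError("labels must not be empty")
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


def perplexity(token_probs):
    """Exponential of the average negative log-probability per token.

    Lower values mean the model found the text more predictable.
    """
    avg_neg_log = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log)


def token_f1(candidate, reference):
    """Word-overlap F1 between a generated answer and a reference answer."""
    cand = candidate.lower().split()
    ref = reference.lower().split()
    overlap = sum((Counter(cand) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(cand)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


print(accuracy([1, 0, 1, 1], [1, 1, 1, 0]))
print(perplexity([0.5, 0.5]))
print(token_f1("the cat", "the cat sat"))
```

Automatic proxies like these are cheap to run, but fluency and relevance in particular usually also need human review or a stronger judge.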

Read more about: Evaluating large language models

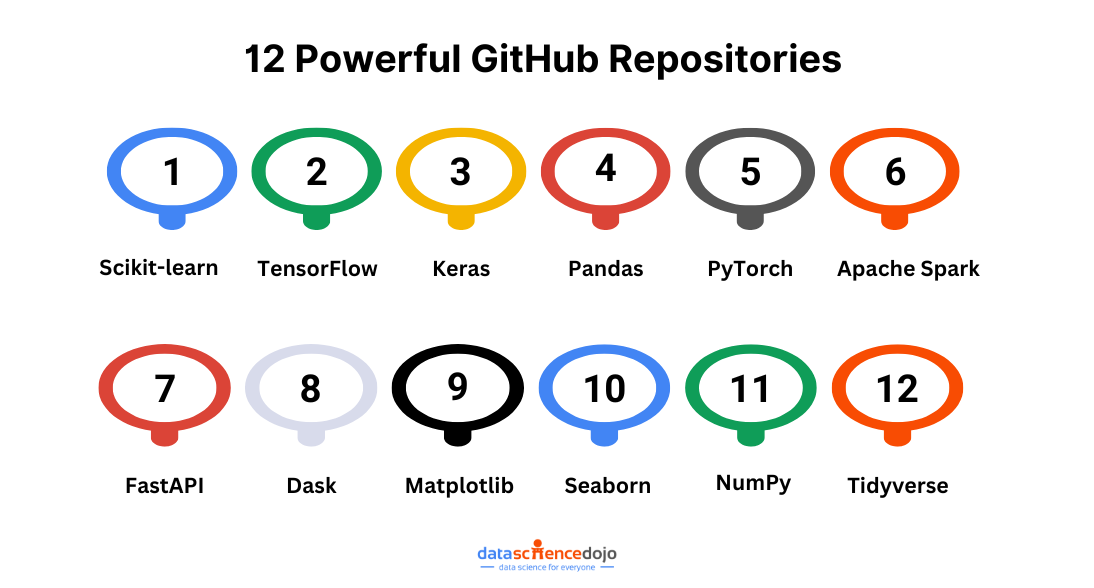

Step 7: Build LLM Apps

With a strong understanding of Large Language models, you can start building applications that leverage their capabilities. Explore different application scenarios, such as:

- Chatbots: Develop chatbots that can engage in natural conversations with users.

- Content creation: Utilize LLMs to generate creative content, such as poems, scripts, or musical pieces.

- Machine translation: Build machine translation systems that can accurately translate languages.
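For the chatbot scenario, the core of most applications is a loop that keeps the conversation history and passes it to the model on every turn. The sketch below shows that structure with a stand-in model function. `echo_model` and `ChatSession` are hypothetical names for this example, and the role-tagged message format is an assumption modeled on common chat interfaces; in a real app you would replace `echo_model` with a call to your chosen LLM.

```python
def echo_model(messages):
    """Hypothetical stand-in for a real LLM call; it just echoes the user."""
    last_user = messages[-1]["content"]
    return f"You said: {last_user}"


class ChatSession:
    """Keeps the running conversation so each turn sees the full history."""

    def __init__(self, generate, system_prompt="You are a helpful assistant."):
        self.generate = generate
        self.messages = [{"role": "system", "content": system_prompt}]

    def send(self, user_text):
        # Record the user turn, ask the model, then record its reply.
        self.messages.append({"role": "user", "content": user_text})
        reply = self.generate(self.messages)
        self.messages.append({"role": "assistant", "content": reply})
        return reply


session = ChatSession(echo_model)
print(session.send("Hello"))  # You said: Hello
```

Passing the model in as a function keeps the session logic independent of any particular provider, which also makes it easy to test.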

Start Learning to Become an LLM Master

Mastering large language models is an ongoing journey that requires continuous learning and exploration. By following these seven steps, you can gain a comprehensive understanding of LLMs, their underlying principles, and the techniques involved in their development and application.

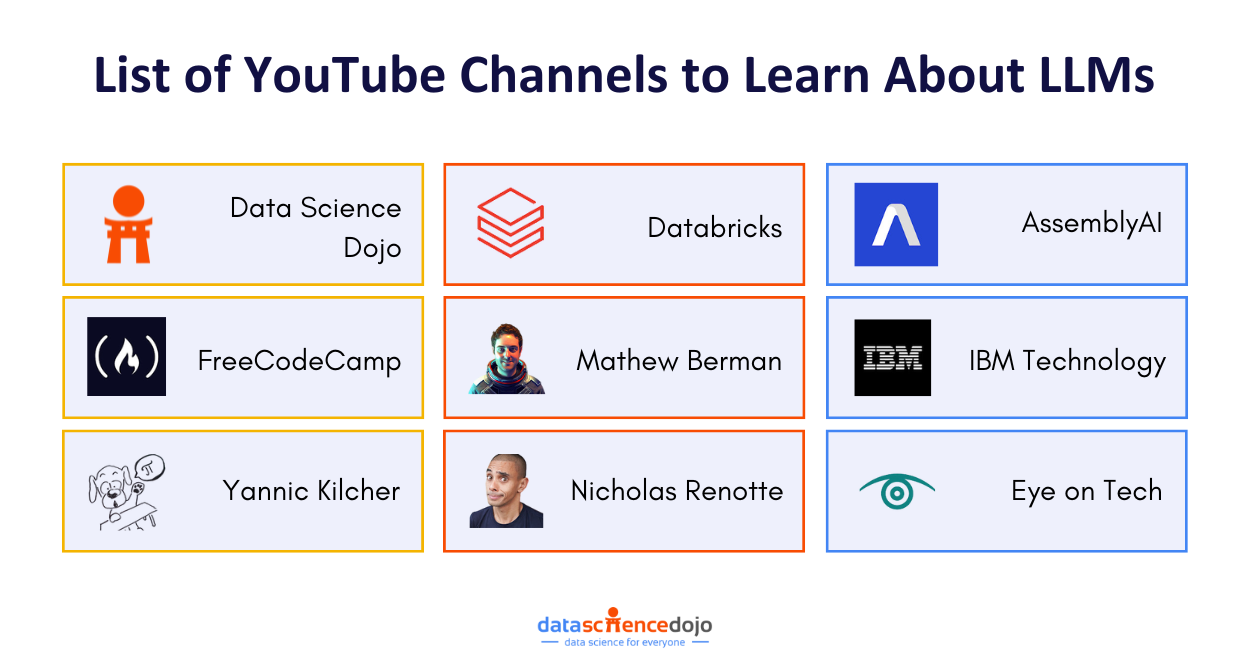

As Large Language models continue to evolve, stay informed about the latest advancements and contribute to the responsible and ethical development of these powerful tools. Here’s a list of YouTube channels that can help you stay updated in the world of large language models.
