In today’s digital landscape, the ability to leverage data effectively has become a key factor for success in businesses across various industries. As a result, companies are increasingly investing in data science teams to help them extract valuable insights from their data and develop sophisticated analytical models.

Empowering data science teams can lead to better-informed decision-making, improved operational efficiencies, and ultimately, a competitive advantage in the marketplace.

Empowering Data Science Teams for Maximum Impact

To upskill their data science teams, businesses need to invest in training and development. Data science is a complex, multidisciplinary field that requires specialized skills such as data engineering, machine learning, and statistical analysis. Businesses should therefore give their data science teams access to current tools, technologies, and training resources so that they can build their skills and keep up with industry trends.

Another way to empower data science teams is to give them autonomy and ownership over their work. This means letting them experiment and explore different solutions without micromanagement, and letting them choose the tools and methodologies that work best for them. This approach can lead to greater innovation, creativity, and productivity, as well as improved job satisfaction and engagement.

Why Investing in Your Data Science Team Is Critical in Today’s Data-Driven World

There is no shortage of material on why empowering data science teams is essential. Here is a condensed version of the five major reasons:

- Improved Decision Making: Data science teams help businesses make more informed and accurate decisions based on data analysis, leading to better outcomes.

- Competitive Advantage: Companies that effectively leverage data science have a competitive advantage over those that do not, as they can make more data-driven decisions and respond quickly to changing market conditions.

- Innovation: Data science teams are key drivers of innovation in organizations, as they can help identify new opportunities and develop creative solutions to complex business challenges.

- Cost Savings: Data science teams can help identify areas of inefficiency or waste within an organization, leading to cost savings and increased profitability.

- Talent Attraction and Retention: Empowering teams can also help attract and retain top talent, as data scientists are in high demand and are drawn to companies that prioritize data-driven decision-making.

Empowering Your Business with Data Science Dojo

Data Science Dojo is a company that offers data science training and consulting services to businesses. By partnering with Data Science Dojo, businesses can unlock the full potential of their data and empower their data experts.

Data Science Dojo provides a range of data science training programs designed to meet businesses’ specific needs, from beginner-level training to advanced machine learning workshops. The training is delivered by experienced data scientists with a wealth of real-world experience in solving complex business problems using data science.

Investing in data science training helps businesses make more informed decisions, which can lead to increased efficiency, reduced costs, and improved customer satisfaction.

Data science can also be used to identify new revenue streams and gain a competitive edge in the market. With the help of Data Science Dojo, businesses can build a data-driven culture that empowers their data science teams and drives innovation.

Transforming Data Science Teams: The Power of Saturn Cloud

Saturn Cloud is closely tied to the goal of empowering data science teams: it is a platform designed to enhance collaboration, streamline workflows, and provide the infrastructure needed for efficient machine learning development. By using Saturn Cloud, businesses can optimize their data science processes and innovate with greater ease and flexibility.

What is Saturn Cloud?

Saturn Cloud is a cloud-based platform that offers data science teams a scalable, efficient, and flexible environment for developing, testing, and deploying machine learning models. By integrating with existing tools and frameworks, Saturn Cloud enables seamless transitions for businesses moving their data science workflows to the cloud. It provides robust computational resources, ensuring that teams can work without constraints while maintaining security and compliance.

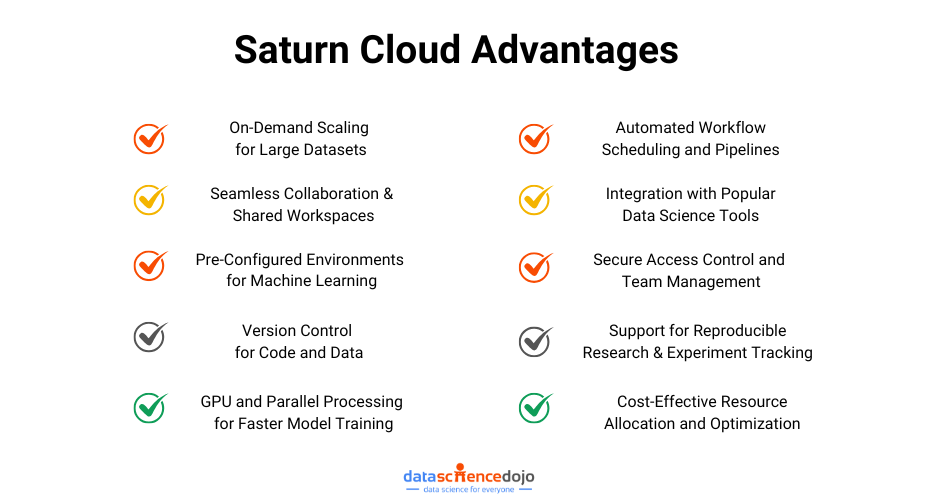

Benefits of Using Saturn Cloud for Data Science Teams

1. Harnessing the Power of the Cloud

Saturn Cloud eliminates the need for expensive on-premises infrastructure by offering a cloud-based alternative that allows businesses to scale their computing resources effortlessly. This cost-effective approach helps organizations manage their budgets while ensuring optimal performance, security, and compliance with regulatory standards.

2. Making Data Science in the Cloud Easy

Saturn Cloud simplifies cloud-based data science by providing tools such as JupyterLab notebooks, machine learning libraries, and pre-configured frameworks. Data scientists can continue using familiar tools without needing extensive retraining, reducing onboarding time and enhancing productivity. The platform also supports multi-language compatibility, making it accessible for teams with diverse technical expertise.

3. Improving Collaboration and Productivity

One of Saturn Cloud’s standout features is its collaborative workspace, which facilitates seamless teamwork. Team members can share resources, collaborate on code, and exchange insights in real-time. Additionally, built-in version control ensures that changes to code and datasets are tracked, allowing for easy rollback when necessary. These capabilities enhance efficiency, reduce development time, and accelerate the deployment of new data-driven solutions.

In a Nutshell

Data science is a critical driver of innovation, providing businesses with the insights needed to make informed decisions and maintain a competitive edge. To maximize the potential of their data science teams, organizations must invest in the right tools and platforms. Saturn Cloud empowers data science teams by offering a scalable, collaborative, and user-friendly environment, enabling businesses to unlock valuable data-driven insights and drive forward-thinking strategies. By leveraging Saturn Cloud, organizations can streamline their workflows, enhance productivity, and ultimately transform their approach to data science.