Machine learning algorithms require the use of various parameters that govern the learning process. These parameters are called hyperparameters, and their optimal values are often unknown a priori. Hyperparameter tuning is the process of selecting the best values of these parameters to improve the performance of a model. In this article, we will explore the basics of hyperparameter tuning and the popular strategies used to accomplish it.

Understanding Hyperparameters

In machine learning, a model has two types of parameters: Hyperparameters and learned parameters. The learned parameters are updated during the training process, while the hyperparameters are set before the training begins.

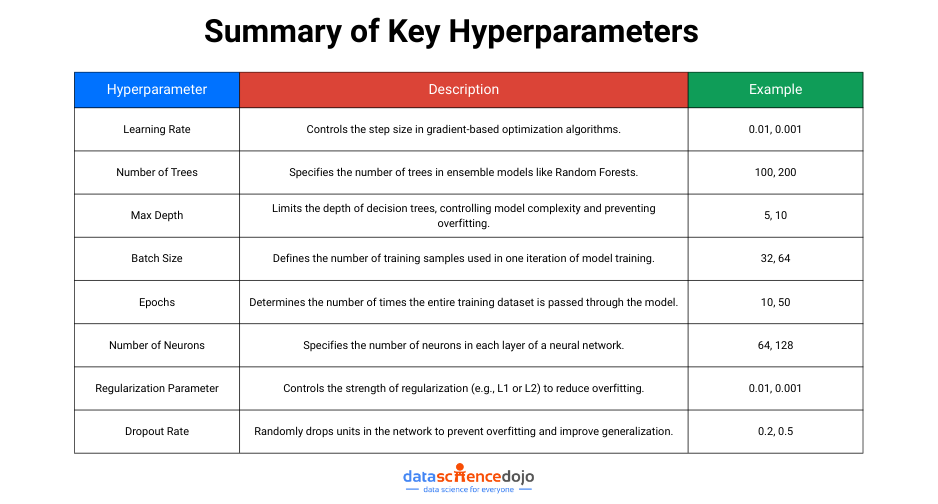

Hyperparameters control the model’s behavior, and their values are usually set based on domain knowledge or heuristics. Examples of hyperparameters include learning rate, regularization coefficient, batch size, and the number of hidden layers.

Why Is Hyperparameter Tuning Important?

Hyperparameter tuning plays a critical role in the success of machine learning models. Hyperparameters are configuration settings used to control the training process—such as learning rate, number of trees in a random forest, or the number of hidden layers in a neural network. Unlike model parameters, which are learned from data, hyperparameters must be set before the learning process begins.

Choosing the right hyperparameter values can significantly enhance model performance. Poorly selected hyperparameters may lead to underfitting, where the model fails to capture patterns in the data, or overfitting, where it memorizes the training data but performs poorly on unseen data. Both cases result in suboptimal model accuracy and reliability.

On the other hand, carefully tuned hyperparameters help strike a balance between bias and variance, enabling the model to generalize well to new data. This translates to more accurate predictions, better decision-making, and higher trust in the model’s output—especially important in critical applications like healthcare, finance, and autonomous systems.

In essence, hyperparameter tuning is not just a technical step; it is a strategic process that can unlock the full potential of your machine learning models and elevate the overall effectiveness of your data science projects.

Strategies for Hyperparameter Tuning

There are different strategies used for hyperparameter tuning, and some of the most popular ones are grid search and randomized search.

Grid search: This strategy evaluates a range of hyperparameter values by exhaustively searching through all possible combinations of parameter values in a grid. The best combination is selected based on the model’s performance metrics.

Randomized Search: This strategy evaluates a random set of hyperparameter values within a given range. This approach can be faster than grid search and can still produce good results.

H3: general hyperparameter tuning strategy

To effectively tune hyperparameters, it is crucial to follow a general strategy. According to, a general hyperparameter tuning strategy consists of three phases:

- Preprocessing and feature engineering

- Initial modeling and hyperparameter selection

- Refining hyperparameters

Preprocessing and Feature Engineering

This foundational phase focuses on preparing the data for modeling. Key steps include data cleaning (handling missing values, removing duplicates), data normalization (scaling features to a common range), and feature engineering (creating or selecting relevant features). Some preprocessing techniques themselves involve hyperparameters—for example, determining the number of features to select using methods like recursive feature elimination (RFE) or setting thresholds for variance in feature selection. Making the right choices here can improve model efficiency and predictive power.

Initial Modeling and Hyperparameter Selection

Once the data is prepped, the next step is to choose the appropriate machine learning model and define the initial set of hyperparameters to explore. This includes selecting the model architecture (e.g., decision tree, random forest, neural network) and key model-specific settings like the learning rate, number of estimators, or number of layers and neurons. A wide but reasonable range of hyperparameter values is selected at this stage to ensure that the tuning process has enough flexibility to discover optimal configurations.

Refining Hyperparameters

In the final phase, hyperparameters are fine-tuned based on model performance. This involves iterative testing of different value combinations using techniques like GridSearchCV, RandomizedSearchCV, or more advanced methods like Bayesian optimization and Hyperopt. The goal is to identify the set of hyperparameters that yield the best cross-validation score, balancing model accuracy with generalization. Fine-tuning often results in substantial performance gains, especially when initial settings are far from ideal.

Most Common Questions Asked About Hyperparameters

Q: Can hyperparameters be learned during training?

A: No, hyperparameters are set before the training begins and are not updated during the training process.

Q: Why is it necessary to set the hyperparameters?

A: Hyperparameters control the learning process of a model, and their values can significantly affect its performance. Setting the hyperparameters helps to improve the model’s accuracy and prevent overfitting.

Methods for Hyperparameter Tuning in Machine Learning

Hyperparameter tuning is an essential step in machine learning to fine-tune models and improve their performance. Several methods are used to tune hyperparameters, including grid search, random search, and bayesian optimization. Here’s a brief overview of each method:

Ready to take your machine learning skills to the next level? Click on the video to learn more about building robust models.

1. Grid Search

Grid search is a commonly used method for hyperparameter tuning. In this method, a predefined set of hyperparameters is defined, and each combination of hyperparameters is tried to find the best set of values.

Grid search is suitable for small and quick searches of hyperparameter values that are known to perform well generally. However, it may not be an efficient method when the search space is large.

2. Random Search

Unlike grid search, in a random search, only a part of the parameter values are tried out. In this method, the parameter values are sampled from a given list or specified distribution, and the number of parameter settings that are sampled is given by n_iter.

Random search is appropriate for discovering new hyperparameter values or new combinations of hyperparameters, often resulting in better performance, although it may take more time to complete.

3. Bayesian Optimization

Bayesian optimization is a method for hyperparameter tuning that aims to find the best set of hyperparameters by building a probabilistic model of the objective function and then searching for the optimal values. This method is suitable when the search space is large and complex.

Bayesian optimization is based on the principle of Bayes’s theorem, which allows the algorithm to update its belief about the objective function as it evaluates more hyperparameters. This method can converge quickly and may result in better performance than grid search and random search.

Choosing the Right Method for Hyperparameter Tuning

In conclusion, hyperparameter tuning is essential in machine learning, and several methods can be used to fine-tune models. Grid search is a simple and efficient method for small search spaces, while the random search can be used for discovering new hyperparameter values.

Bayesian optimization is a powerful method for complex and large search spaces that can result in better performance by building a probabilistic model of the objective function. It’s choosing the right method based on the problem at hand is essential.