In this blog post, we discuss the value that programming languages add for data analysts.

Data analysts have one simple goal: to provide organizations with insights that inform better business decisions. To do this, the analytical process has to succeed. Unfortunately, as many data analysts would agree, running into bugs of one kind or another is part of analyzing data.

However, these bugs don’t have to be numerous if preventive measures are taken at every step. This is where programming languages prove valuable: they help data analysts prevent and solve a number of data problems. These languages have several bug-preventing attributes that make this possible. Here are some of them.

Type safety/strong typing

When a program uses values in a way that is inconsistent with the data types of its variables, methods, and constants, it behaves undesirably. In other words, type errors occur. For instance, a type error can occur when a programmer treats a string as an integer or vice versa.

Type safety is an attribute of programming languages that discourages type errors in a program. In many type-safe languages, programmers declare the type of each variable, although some languages infer the type from the assigned value instead. If you think of a variable as a box, the programmer gives the box a name and states what kind of data is meant to go in it. The language then only lets values be interpreted according to the rules of that data type, which prevents confusion about what a variable holds.
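To make the string-versus-integer case concrete, here is a minimal Python sketch. The variable names and the scenario (a count that arrives as text, for example from a CSV file) are assumptions made for illustration. It shows a strongly typed language refusing to mix a string and an integer instead of silently guessing what was meant.

```python
# Hypothetical values: one count arrives as text, the other is a real integer.
units_sold_online = "3"
units_sold_in_store = 4

type_error_caught = False
try:
    # Python refuses to add a str and an int rather than guessing the intent.
    total = units_sold_online + units_sold_in_store
except TypeError:
    type_error_caught = True

# The fix is an explicit conversion, which makes the intended type visible.
total = int(units_sold_online) + units_sold_in_store
print(type_error_caught, total)
```

The explicit `int(...)` call is the point of the example: the conversion is written down in the code rather than left to the language to decide.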

Immutability

If an object is immutable, then its value or state can’t be changed. Immutability in programming languages allows developers to use variables that can’t be mutated once created. Instead of changing an existing value, a program creates a new one. How does this prevent problems? Unlike mutable objects, immutable objects are inherently thread-safe. In a multithreaded application, a thread acting on an immutable object doesn’t have to worry about the other threads.

The reason is that the thread knows no other thread can modify the object. In data analysis, the immutable approach ensures that the original data set is not modified. If a bug is identified in the code, the untouched original data helps find a solution faster. In addition, immutability is valuable for creating safer data backups: immutable data storage protects data from corruption, deletion, and tampering.
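As a rough illustration of keeping the original data set untouched, the sketch below uses Python’s standard `dataclasses` module. The `Sale` record, its fields, and the sample values are hypothetical. It shows one way to get immutable records in Python, not the only one.

```python
from dataclasses import dataclass, replace, FrozenInstanceError

@dataclass(frozen=True)  # frozen=True makes instances read-only
class Sale:
    region: str
    amount: int

# The original data set: a tuple (itself immutable) of frozen records.
original = (Sale("north", 120), Sale("south", 80))

mutation_blocked = False
try:
    original[0].amount = 0  # attempt to overwrite the source data
except FrozenInstanceError:
    mutation_blocked = True

# Transformations build new records and leave the originals as they were.
doubled = tuple(replace(sale, amount=sale.amount * 2) for sale in original)
print(mutation_blocked, original[0].amount, doubled[0].amount)
```

If a bug turns up in the transformation, `original` is still available exactly as it was loaded, so the step can simply be rerun.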

Expressiveness

Expressiveness in a programming language can be defined as the extent of ideas that can be communicated and represented in that language. If a language allows users to communicate their intent easily and detect errors early, that language can be termed expressive. Expressive programming languages allow programmers to write shorter code.
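A small Python sketch can show what shorter code looks like in practice. The list of amounts is made up for illustration. Both versions keep only the positive values, but the second states the intent in a single line.

```python
# Hypothetical sample data: some amounts are zero or negative.
amounts = [120, -5, 80, 0, 45]

# Verbose version: the intent is spread across four lines.
positive_loop = []
for amount in amounts:
    if amount > 0:
        positive_loop.append(amount)

# Expressive version: a list comprehension says the same thing in one line.
positive = [amount for amount in amounts if amount > 0]
print(positive_loop == positive)
```

With fewer lines there are fewer places for a mistake, such as appending to the wrong list, to hide.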

Shorter code also has less incidental complexity, or boilerplate, which makes it easier to identify errors. On the subject of expressiveness, it is worth knowing that the keywords of most popular programming languages are based on English.

When working with multilingual websites, it may be necessary to translate the content into English before analyzing it. However, there is a risk of distortion or loss of meaning when applying analysis techniques to translated data. Working with professional translation companies reduces these risks.

In addition, working in a language that analysts understand makes it easier for them to spot errors.

Static and dynamic typing

Both static and dynamic typing are used for error detection. They allow programmers to catch bugs and fix them before they cause havoc. In static typing, type checking happens at compile time.

If the code contains an error such as invalid type arguments, missing functions, or a mismatch between the declared type of a variable and the value assigned to it, static typing catches it before the program runs. This means that this class of type error never reaches running code, although other kinds of bugs, such as logic errors, can still get through.

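On the other hand, in dynamic typing, type checking occurs at runtime. A type error is only reported when the faulty line actually executes, but the program stops with an error at that point instead of continuing with bad data, which gives the programmer a chance to correct the code before the worst happens.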
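The difference in timing can be sketched in Python, which is dynamically typed. The `describe` function and its deliberate bug are hypothetical. The faulty line causes no trouble until the branch containing it runs. A separate static checker such as mypy would be expected to flag the same line from the type hints, without running the program.

```python
def describe(row_count: int, verbose: bool = False) -> str:
    if verbose:
        # Deliberate bug: concatenating a str and an int.
        return "rows loaded: " + row_count
    return f"rows: {row_count}"

# The common path works, so the bug goes unnoticed at first.
short_label = describe(3)

# The error only appears at runtime, once the faulty branch executes.
runtime_error_seen = False
try:
    describe(3, verbose=True)
except TypeError:
    runtime_error_seen = True
print(short_label, runtime_error_seen)
```

This is why rarely used code paths in dynamically typed programs benefit from tests or an optional static check.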

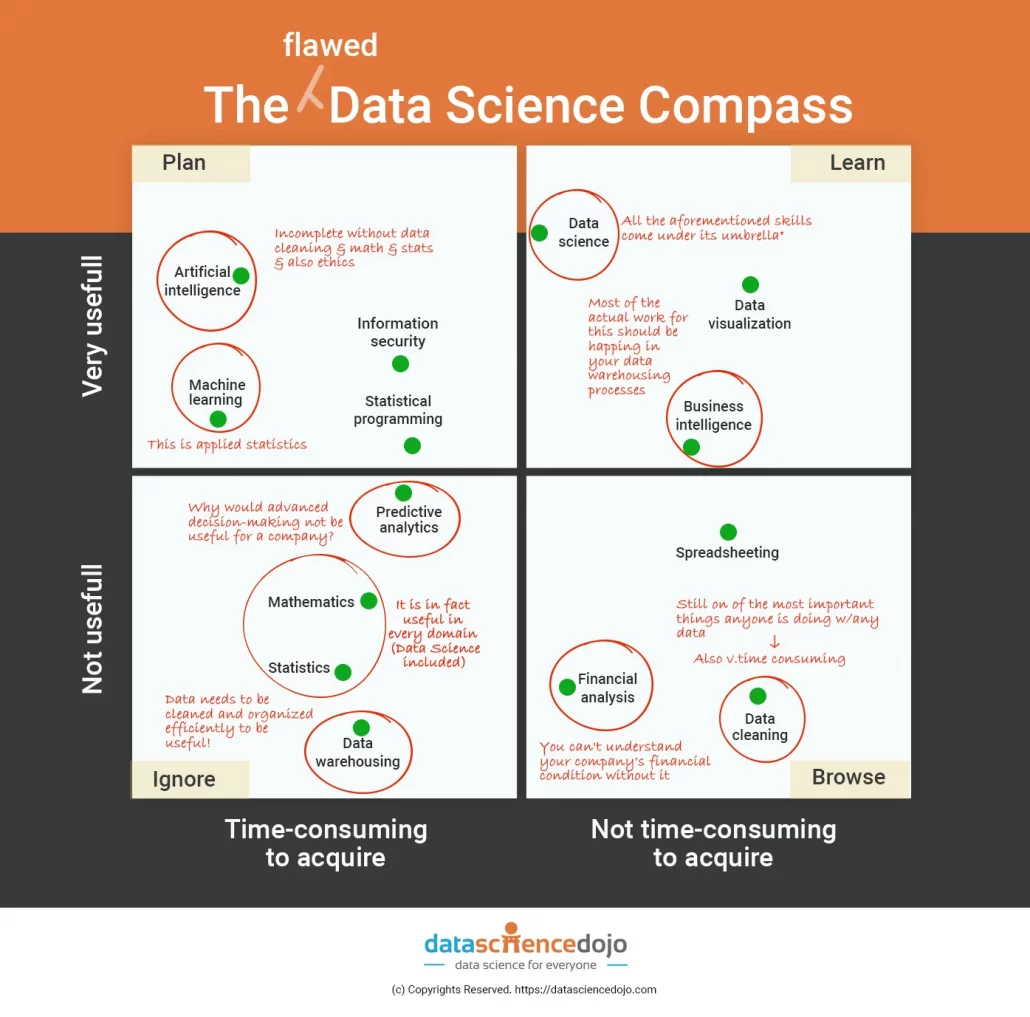

Programming learning – Data analysts

Programming languages are among the tools that data analysts need in their line of work. Used well, they are a programmer’s defense against many types of bugs, because they come with characteristics that reduce the chances of writing error-prone code. These attributes include those listed above and are available to varying degrees in languages such as Java, Python, and Scala, which are well suited to data analysis.