While language models in generative AI focus on textual data, vision language models (VLMs) bridge the gap between textual and visual data. Before we explore Moondream 2, let’s understand VLMs better.

Understanding vision language models

VLMs combine computer vision (CV) and natural language processing (NLP), enabling them to understand and connect visual information with textual data.

Key capabilities of VLMs include image captioning, visual question answering, and image retrieval. They learn these tasks by training on datasets that pair images with their corresponding textual descriptions. Several large vision language models are available, including GPT-4V, LLaVA, and BLIP-2.

However, these large models require heavy computational resources to produce good results, and they are slow at inference. Small VLMs address this problem by striking a balance between efficiency and performance.

In this blog, we will look deeper into Moondream 2, a small vision language model.

What is Moondream 2?

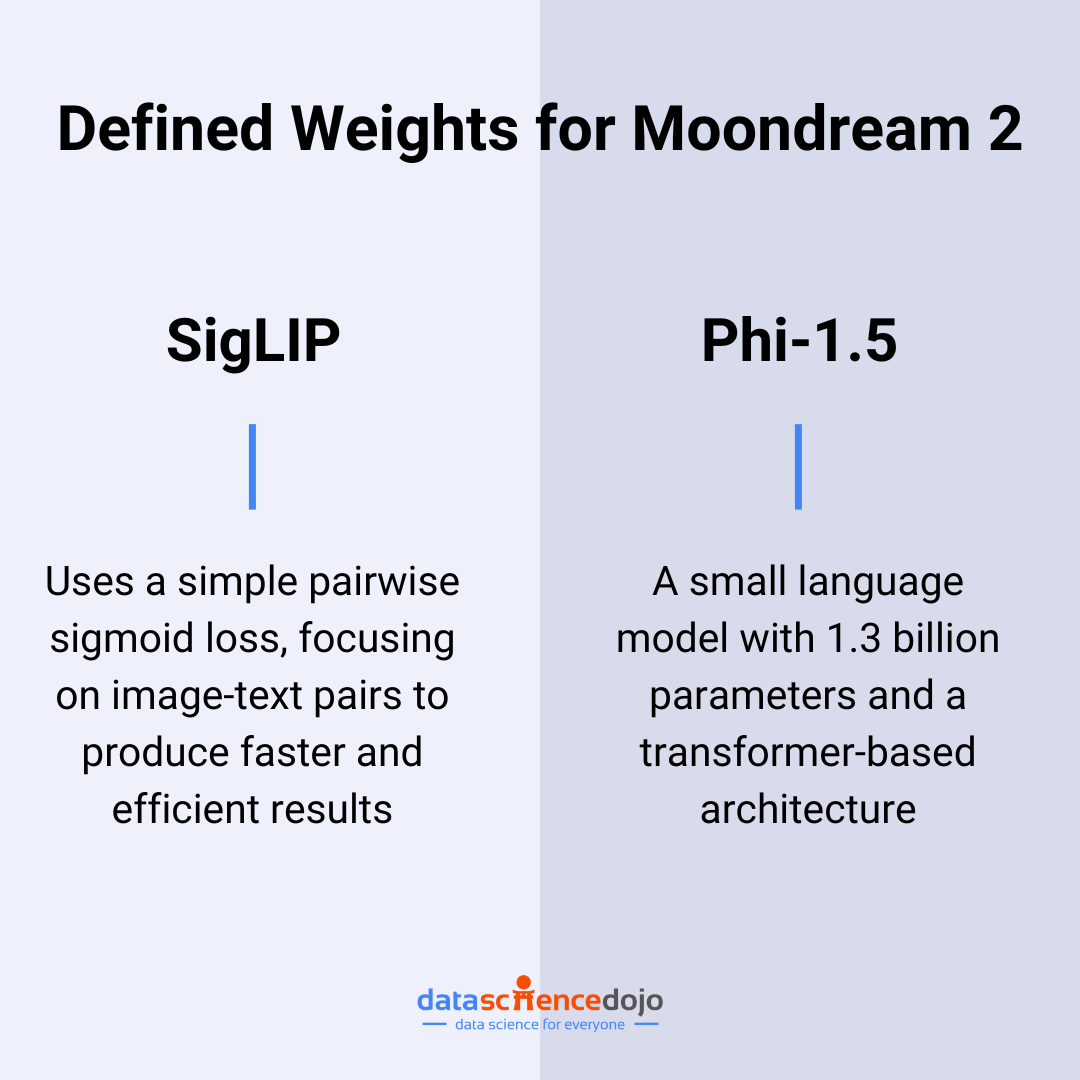

Moondream 2 is an open-source vision language model. With only 1.86 billion parameters, it is a tiny VLM initialized with weights from SigLIP and Phi-1.5. It is designed to run on devices with limited computational resources.

Let’s take a closer look at the two models that supply the weights for Moondream 2.
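To get a rough sense of what 1.86 billion parameters means for a small device, the sketch below estimates the memory needed just to hold the weights at a few common numeric precisions. The bytes-per-parameter values are standard, but the function name and the choice of precisions are illustrative assumptions, and the estimate ignores activations and runtime overhead, so real memory use will be higher.

```python
# Rough estimate of weight memory for a model with a given parameter count.
# Assumption: every parameter is stored at the same precision; activations
# and runtime overhead are NOT included.

BYTES_PER_PARAM = {"float32": 4.0, "float16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params, precision):
    """Return weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


MOONDREAM2_PARAMS = 1.86e9  # parameter count quoted in this post

if __name__ == "__main__":
    for precision in BYTES_PER_PARAM:
        size = weight_memory_gb(MOONDREAM2_PARAMS, precision)
        print(f"{precision}: {size:.2f} GB")
```

At 16-bit precision, this works out to roughly 3.7 GB for the weights alone, which is why a model of this size is a realistic fit for laptops and other modest hardware.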

SigLIP (Sigmoid Loss for Language Image Pre-Training)

SigLIP is a newer and simpler method for language-image pre-training. The model learns from images and their captions by scoring each image-text pair on its own, which makes training faster and more effective, especially with large amounts of data. It is similar to a CLIP (Contrastive Language–Image Pre-training) model.

However, SigLIP replaces the softmax loss used in CLIP with a simple pairwise sigmoid loss. The sigmoid loss treats each image-text pair as an independent match-or-no-match decision, so it does not need a global view of all pairwise similarities within a batch for normalization. As a result, the process becomes faster and more efficient.
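The difference between the two losses is easier to see in code. The following is a minimal pure-Python sketch, not the actual SigLIP or CLIP training code: the function names are hypothetical, the embeddings are toy lists of floats, and the default `temperature` and `bias` values are assumptions chosen for illustration. Note how the softmax version must normalize over a whole row of the similarity matrix, while the sigmoid version scores every pair independently.

```python
import math


def l2_normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def log_sigmoid(x):
    # Numerically stable log(sigmoid(x)).
    return -math.log1p(math.exp(-x)) if x >= 0 else x - math.log1p(math.exp(x))


def pairwise_sigmoid_loss(image_embs, text_embs, temperature=10.0, bias=-10.0):
    """Sigmoid loss: each (image, text) pair is its own binary problem."""
    images = [l2_normalize(v) for v in image_embs]
    texts = [l2_normalize(v) for v in text_embs]
    total = 0.0
    for i, img in enumerate(images):
        for j, txt in enumerate(texts):
            label = 1.0 if i == j else -1.0  # +1 for matching pairs, -1 otherwise
            logit = temperature * dot(img, txt) + bias
            total += -log_sigmoid(label * logit)
    return total / len(images)


def softmax_contrastive_loss(image_embs, text_embs, temperature=10.0):
    """CLIP-style loss: each row/column is normalized over the whole batch."""
    images = [l2_normalize(v) for v in image_embs]
    texts = [l2_normalize(v) for v in text_embs]
    logits = [[temperature * dot(img, txt) for txt in texts] for img in images]

    def diag_log_softmax_sum(rows):
        total = 0.0
        for i, row in enumerate(rows):
            m = max(row)
            log_sum_exp = m + math.log(sum(math.exp(x - m) for x in row))
            total += row[i] - log_sum_exp  # needs every entry in the row
        return total

    image_to_text = diag_log_softmax_sum(logits)
    text_to_image = diag_log_softmax_sum([list(col) for col in zip(*logits)])
    return -(image_to_text + text_to_image) / (2 * len(images))


if __name__ == "__main__":
    images = [[1.0, 0.0], [0.0, 1.0]]
    matched_texts = [[1.0, 0.0], [0.0, 1.0]]
    swapped_texts = [[0.0, 1.0], [1.0, 0.0]]
    print("sigmoid, matched:", pairwise_sigmoid_loss(images, matched_texts))
    print("sigmoid, swapped:", pairwise_sigmoid_loss(images, swapped_texts))
    print("softmax, matched:", softmax_contrastive_loss(images, matched_texts))
```

With correctly matched captions, both losses are small, and swapping the captions drives them up. Because the sigmoid loss is a plain sum over pairs, it can be computed in chunks without first gathering every similarity in the batch.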

Phi-1.5

Phi-1.5 is a small language model with 1.3 billion parameters and a transformer-based architecture. Developed by Microsoft, the model was trained on 30 billion tokens, including data from its predecessor, Phi-1, and 20 billion tokens generated synthetically by another AI model, GPT-3.5.

With its unique training method, Phi-1.5 has picked up many of the skills expected of a language model, such as common-sense reasoning, following complex instructions, comprehending language, and retaining information. On some benchmarks, it even outperforms its larger counterparts.

A closer look into Moondream 2

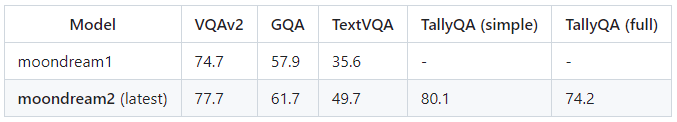

Moondream 2 is the updated version of the model previously known as Moondream 1. Below is a quick comparison of the two models, highlighting the basic parameters of improvement.

Like typical vision language models, Moondream 2 interprets visual data and provides textual responses based on it. Some demos included in its repository highlight its capabilities. Let’s look at these demos below:

Demo 1

Moondream 2 was provided with the following image:

Based on this image, the VLM was asked some relevant questions.

Question: What is the girl doing?

Answer: The girl is sitting at a table and eating a large hamburger.

Question: What color is the girl’s hair?

Answer: The girl’s hair is white.

The interaction with Moondream 2 shows that the VLM can understand basic image properties, like the girl’s hair color. It can also infer what the subjects of an image are doing from their position at a given moment.

Demo 2

The VLM was provided with another image.

The demo focused on asking the following:

Question: What is this?

Answer: The image features a computer server rack, which is a large metal structure designed to hold and organize multiple computer components, such as motherboards, cooling systems, and other peripherals. The rack is filled with various computer parts, including multiple computer chips, wires, and other electronic components. The rack is placed on a carpeted floor, and there is a couch in the background, suggesting that the setup is likely in a living or working space.

Question: What is behind the stand?

Answer: There is a brick wall behind the stand.

The above demo highlights the ability of Moondream 2 to explore and interpret complex visual inputs in great detail. The VLM provides in-depth textual information from the visual data. It also demonstrates spatial understanding of the image components.

Hence, Moondream 2 is a promising addition to the world of vision language models, with refined capabilities to interpret visual data and provide in-depth textual output. Now that we understand the strengths of the VLM, it is time to explore its drawbacks and weaknesses.

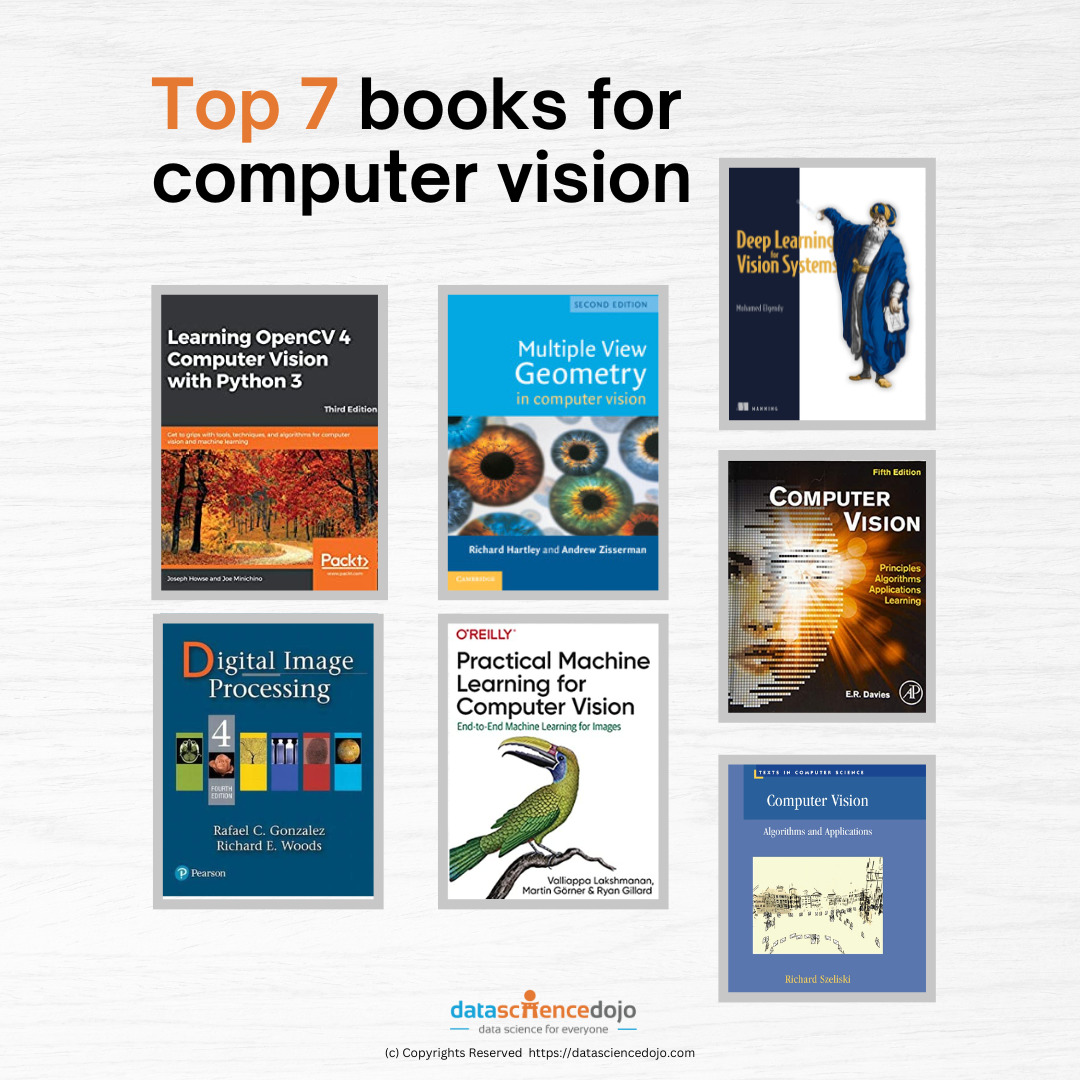

Limitations of Moondream 2

Before you explore the world of Moondream 2, you must understand its limitations when dealing with visual and textual data.

Generating inaccurate statements

It is important to understand that Moondream 2 may generate inaccurate statements, especially for complex topics or situations requiring real-world understanding. The model might also struggle to grasp subtle details or hidden meanings within instructions.

Presenting unconscious bias

Like any other VLM, Moondream 2 is a product of the data it is trained on. Thus, it can reflect the biases of the world, perpetuating stereotypes or discriminatory views.

As a user, it’s crucial to be aware of this potential bias and to approach the model’s outputs with a critical eye. Don’t blindly accept everything it generates; use your own judgment and fact-check when necessary.

Mirroring prompts

VLMs will reflect the prompts provided to them. Hence, if a user prompts the model to generate offensive or inappropriate content, the model may comply. It’s important to be mindful of the prompts and avoid asking the model to create anything harmful or hurtful.

In conclusion…

To sum it up, Moondream 2 is a promising step in the development of vision language models. Thanks to its key components and compact size, the model is efficient and fast. However, like any language model in use today, Moondream 2 relies on its users to prompt it responsibly and to check that its output is useful.

If you are ready to experiment with Moondream 2 now, install the necessary files and start right away! Here’s a look at what the VLM’s user interface looks like.