The world of large language models (LLMs) is evolving at breakneck speed. With each new release, the bar for performance, efficiency, and accessibility is raised. Enter Deep Seek v3.1, the latest open-source release making waves across the data science and AI communities.

Whether you’re a developer, researcher, or enterprise leader, understanding Deep Seek v3.1 is crucial for staying ahead in the rapidly changing landscape of artificial intelligence. In this guide, we’ll break down what makes Deep Seek v3.1 unique, how it compares to other LLMs, and how you can leverage its capabilities for your projects.


What is Deep Seek v3.1?

Deep Seek v3.1 is an advanced, open-source large language model developed by DeepSeek AI. Building on the success of previous versions, v3.1 introduces significant improvements in reasoning, context handling, multilingual support, and agentic AI capabilities.

Key Features at a Glance

-

Hybrid Inference Modes:

Supports both “Think” (reasoning) and “Non-Think” (fast response) modes for flexible deployment.

-

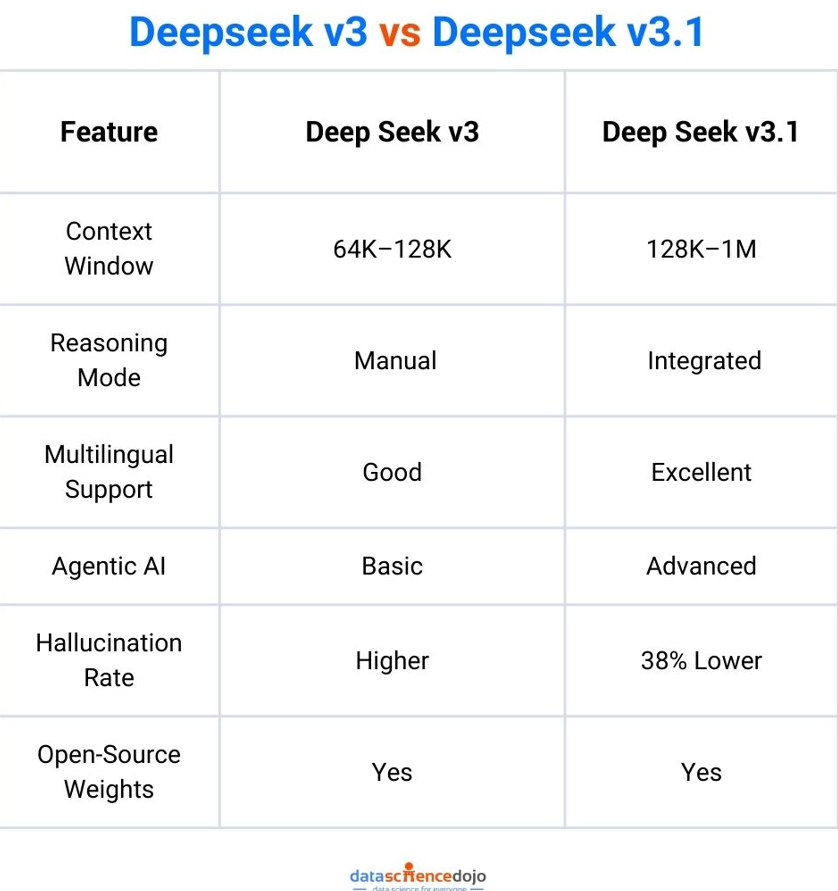

Expanded Context Window:

Processes up to 128K tokens (with enterprise versions supporting up to 1 million tokens), enabling analysis of entire codebases, research papers, or lengthy legal documents.

-

Enhanced Reasoning:

Up to 43% improvement in multi-step reasoning over previous models.

-

Superior Multilingual Support:

Over 100 languages, including low-resource and Asian languages.

-

Reduced Hallucinations:

38% fewer hallucinations compared to earlier versions.

-

Open-Source Weights:

Available for research and commercial use via Hugging Face.

-

Agentic AI Skills:

Improved tool use, multi-step agent tasks, and API integration for building autonomous AI agents.

Catch up on the evolution of LLMs and their applications in our comprehensive LLM guide.

Deep Dive: Technical Architecture of Deep Seek v3.1

Model Structure

- Parameters: 671B total, 37B activated per token (Mixture-of-Experts architecture)

- Training Data: 840B tokens of continued pretraining on top of the V3 base model, with extended long-context training phases

- Tokenizer: Updated for efficiency and multilingual support

- Context Window: 128K tokens (with enterprise options up to 1M tokens)

- Hybrid Modes: Switch between “Think” (deep reasoning) and “Non-Think” (fast inference) via API or UI toggle
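The gap between 671B total and 37B activated parameters comes from Mixture-of-Experts routing: a router scores the available experts for each token and only the best few are run. Below is a toy sketch of top-k routing in plain Python. The expert count, the `top_k` value, and the router scores are illustrative assumptions, not DeepSeek's actual router configuration.

```python
import math

def softmax(scores):
    """Convert raw router scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_token(router_scores, top_k):
    """Pick the top_k experts for one token and renormalize their weights.

    Returns (expert_index, weight) pairs. Experts that are not chosen
    are skipped entirely, which is why only a fraction of the model's
    parameters is active for any single token.
    """
    probs = softmax(router_scores)
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    chosen = ranked[:top_k]
    norm = sum(probs[i] for i in chosen)
    return [(i, probs[i] / norm) for i in chosen]

# Toy example: 8 hypothetical experts, 2 active per token.
scores = [0.1, 2.0, -1.0, 0.5, 1.5, 0.0, -0.5, 0.3]
selected = route_token(scores, top_k=2)

# Share of parameters active per token, using the figures quoted above.
active_fraction = 37 / 671
```

With the quoted figures, roughly 5.5% of the parameters take part in processing any given token, which is where the inference savings come from.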

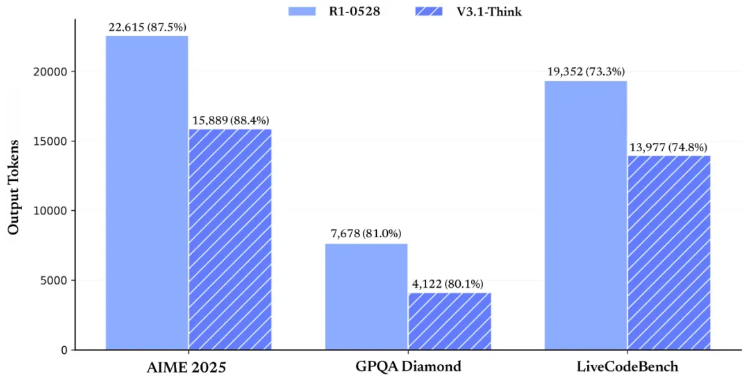

Hybrid Inference: Think vs. Non-Think

- Think Mode: Activates advanced reasoning, multi-step planning, and agentic workflows. Ideal for complex tasks like code generation, research, and scientific analysis.

- Non-Think Mode: Prioritizes speed for straightforward Q&A, chatbots, and real-time applications.
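As a rough illustration of switching modes through the API, the sketch below builds an OpenAI-style chat payload and picks a model name per mode. The identifiers `deepseek-reasoner` and `deepseek-chat` are assumptions based on DeepSeek's public API naming and should be checked against the current documentation; `build_chat_request` is a hypothetical helper, not part of any SDK.

```python
# Assumed model identifiers; verify against the current DeepSeek API docs.
MODEL_BY_MODE = {
    "think": "deepseek-reasoner",  # slower, multi-step reasoning
    "non_think": "deepseek-chat",  # faster, direct answers
}

def build_chat_request(prompt, needs_reasoning):
    """Build an OpenAI-style chat payload for the chosen inference mode."""
    mode = "think" if needs_reasoning else "non_think"
    return {
        "model": MODEL_BY_MODE[mode],
        "messages": [{"role": "user", "content": prompt}],
    }

# A simple lookup goes to the fast mode; a planning task goes to Think mode.
quick = build_chat_request("What is the capital of France?", needs_reasoning=False)
deep = build_chat_request("Plan a refactor of this module step by step.", needs_reasoning=True)
```

Keeping the mode decision in one small function makes it easy to route only the hard requests to the slower mode.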

Agentic AI & Tool Use

Deep Seek v3.1 is designed for the agent era, supporting:

- Strict Function Calling: For safe, reliable API integration

- Tool Use: Enhanced post-training for multi-step agent tasks

- Code & Search Agents: Outperforms previous models on SWE/Terminal-Bench and complex search tasks
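To make function calling more concrete, here is a minimal sketch of a tool definition in the widely used OpenAI-style `tools` format, plus a small check on the arguments a model might return. The `get_weather` tool and the `validate_arguments` helper are hypothetical, and the exact fields DeepSeek expects for its strict mode are not shown here; consult the API documentation for those.

```python
import json

# Hypothetical tool definition in the OpenAI-style "tools" format.
GET_WEATHER_TOOL = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Look up the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {
                "city": {"type": "string"},
                "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
            },
            "required": ["city"],
            "additionalProperties": False,
        },
    },
}

def validate_arguments(tool, raw_arguments):
    """Parse the model's JSON arguments and check them against the schema.

    Covers only required keys, unknown keys, string types, and enums;
    a real deployment would likely use a full JSON Schema validator.
    """
    schema = tool["function"]["parameters"]
    args = json.loads(raw_arguments)
    missing = [k for k in schema["required"] if k not in args]
    if missing:
        raise ValueError(f"missing required arguments: {missing}")
    for key, value in args.items():
        spec = schema["properties"].get(key)
        if spec is None:
            raise ValueError(f"unexpected argument: {key}")
        if spec["type"] == "string" and not isinstance(value, str):
            raise ValueError(f"{key} must be a string")
        if "enum" in spec and value not in spec["enum"]:
            raise ValueError(f"{key} must be one of {spec['enum']}")
    return args

# Arguments as they might arrive from a model's tool call.
args = validate_arguments(GET_WEATHER_TOOL, '{"city": "Paris", "unit": "celsius"}')
```

Validating arguments before executing a tool is a sensible safeguard even when the model is expected to follow the schema.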

Explore how agentic AI is transforming workflows in our Agentic AI Bootcamp.

Benchmarks & Performance: How Does Deep Seek v3.1 Stack Up?

Benchmark Results

Real-World Performance

- Code Generation: Outperforms many closed-source models in code benchmarks and agentic tasks.

- Multilingual Tasks: Near-native proficiency in 100+ languages.

- Long-Context Reasoning: Handles entire codebases, research papers, and legal documents without losing context.
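Before sending a large document, it helps to check whether it is likely to fit in the 128K-token window. The sketch below uses a rough four-characters-per-token heuristic, which is an assumption and not the model's real tokenizer; the function names and the output budget are likewise illustrative.

```python
CONTEXT_WINDOW_TOKENS = 128_000  # figure quoted in this article
CHARS_PER_TOKEN = 4              # rough heuristic, not the real tokenizer

def estimate_tokens(text):
    """Approximate the token count from character length."""
    return max(1, len(text) // CHARS_PER_TOKEN)

def fits_in_context(text, reserved_for_output=4_000):
    """Check whether the text plus an output budget fits in the window."""
    return estimate_tokens(text) + reserved_for_output <= CONTEXT_WINDOW_TOKENS

def split_into_chunks(text, max_tokens):
    """Split text into pieces that each stay within max_tokens (estimated)."""
    max_chars = max_tokens * CHARS_PER_TOKEN
    return [text[i:i + max_chars] for i in range(0, len(text), max_chars)]

document = "x" * 1_000_000  # stand-in for a large codebase dump
ok = fits_in_context(document)
chunks = split_into_chunks(document, max_tokens=100_000)
```

For accurate counts, replace `estimate_tokens` with the tokenizer shipped alongside the model weights.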

Learn more about LLM benchmarks and evaluation in our LLM Benchmarks Guide.

What’s New in Deep Seek v3.1 vs. Previous Versions?

Use Cases: Where Deep Seek v3.1 Shines

1. Software Development

- Advanced Code Generation: Write, debug, and refactor code in multiple languages.

- Agentic Coding Assistants: Build autonomous agents for code review, documentation, and testing.

2. Scientific Research

- Long-Context Analysis: Summarize and interpret entire research papers or datasets.

- Cross-Domain Reasoning: Combine text and code understanding for complex scientific workflows.

3. Business Intelligence

- Automated Reporting: Generate insights from large, multilingual datasets.

- Data Analysis: Perform complex queries and generate actionable business recommendations.

4. Education & Tutoring

- Personalized Learning: Multilingual tutoring with step-by-step explanations.

- Content Generation: Create high-quality, culturally sensitive educational materials.

5. Enterprise AI

- API Integration: Seamlessly connect Deep Seek v3.1 to internal tools and workflows.

- Agentic Automation: Deploy AI agents for customer support, knowledge management, and more.

See how DeepSeek is making high-powered LLMs accessible on budget hardware in our in-depth analysis.

Open-Source Commitment & Community Impact

Deep Seek v3.1 is not just a technical marvel—it’s a statement for open, accessible AI. By releasing both the full and smaller (7B parameter) versions as open source, DeepSeek AI empowers researchers, startups, and enterprises to innovate without the constraints of closed ecosystems.

- Download & Deploy: Hugging Face Model Card

- Community Integrations: Supported by major platforms and frameworks

- Collaborative Development: Contributions and feedback welcomed via GitHub and community forums

Explore the rise of open-source LLMs and their enterprise benefits in our open-source LLMs guide.

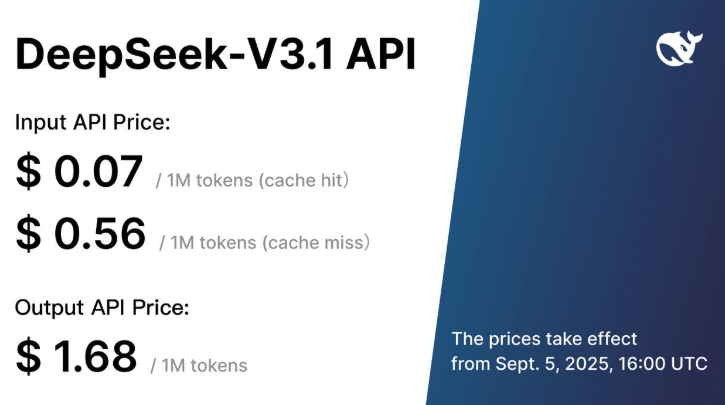

Pricing & API Access

- API Pricing: Competitive, with discounts for off-peak usage

- API Modes: Switch between Think/Non-Think for cost and performance optimization

- Enterprise Support: Custom deployments and support available

Getting Started with Deep Seek v3.1

- Try Online: Use DeepSeek’s web interface for instant access (DeepSeek Chat)

- Download the Model: Deploy locally or on your preferred cloud (Hugging Face)

- Integrate via API: Connect to your applications using the documented API endpoints

- Join the Community: Contribute, ask questions, and share use cases on GitHub and forums
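For the API route, a first integration can be as small as the following sketch, which uses only the Python standard library. The endpoint URL, the `deepseek-chat` model name, and the `DEEPSEEK_API_KEY` environment variable are assumptions for illustration; confirm the endpoint and model names in DeepSeek's API documentation before relying on them.

```python
import json
import os
import urllib.request

# Assumed endpoint and model name; confirm both in DeepSeek's API docs.
API_URL = "https://api.deepseek.com/chat/completions"

def build_request(prompt, api_key, model="deepseek-chat"):
    """Create (but do not send) an HTTP request for a chat completion."""
    body = json.dumps({
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )

def send(request):
    """Send the request and return the decoded JSON response."""
    with urllib.request.urlopen(request, timeout=60) as response:
        return json.load(response)

# DEEPSEEK_API_KEY is a hypothetical environment variable name.
request = build_request("Hello!", os.environ.get("DEEPSEEK_API_KEY", "sk-placeholder"))
```

Calling `send(request)` performs the network call. Building the request separately keeps the payload easy to inspect and test without spending API credits.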

Ready to build custom LLM applications? Check out our LLM Bootcamp.

Challenges & Considerations

- Data Privacy: As with any LLM, ensure sensitive data is handled securely, especially when using cloud APIs.

- Bias & Hallucinations: While Deep Seek v3.1 reduces hallucinations, always validate outputs for critical applications.

- Hardware Requirements: Running the full model locally requires significant compute resources; consider using smaller versions or cloud APIs for lighter workloads.
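A back-of-the-envelope calculation shows why local deployment is demanding. The sketch below estimates the memory needed for the weights alone from the 671B parameter count quoted earlier. The bytes-per-parameter values are standard for each numeric format, while the 80 GB GPU size is an illustrative assumption; KV cache and activations add further overhead that is not modeled here.

```python
TOTAL_PARAMS = 671e9  # total parameter count quoted in this article

BYTES_PER_PARAM = {"fp8": 1, "bf16": 2, "fp32": 4}

def weight_memory_gb(num_params, dtype):
    """Memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9

def min_gpus(num_params, dtype, gpu_memory_gb):
    """Lower bound on GPU count, ignoring KV cache and activations."""
    needed = weight_memory_gb(num_params, dtype)
    return int(-(-needed // gpu_memory_gb))  # ceiling division

fp8_gb = weight_memory_gb(TOTAL_PARAMS, "fp8")
bf16_gb = weight_memory_gb(TOTAL_PARAMS, "bf16")
gpus_80gb = min_gpus(TOTAL_PARAMS, "fp8", gpu_memory_gb=80)
```

Note that although only 37B parameters are active per token, all experts must still be held in memory, so the estimate uses the total count.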

Learn about LLM evaluation, risks, and best practices in our LLM evaluation guide.

Frequently Asked Questions (FAQ)

Q1: How does Deep Seek v3.1 compare to GPT-4 or Llama 3?

A: Deep Seek v3.1 matches or exceeds many closed-source models in reasoning, context handling, and multilingual support, while remaining fully open-source and highly customizable.

Q2: Can I fine-tune Deep Seek v3.1 on my own data?

A: Yes. The open-source weights and documentation let you fine-tune for domain-specific tasks, though fine-tuning the full model requires substantial multi-GPU infrastructure.

Q3: What are the hardware requirements for running Deep Seek v3.1 locally?

A: The full model requires multiple high-end GPUs (A100-class or similar), but smaller versions are available for less resource-intensive deployments.

Q4: Is Deep Seek v3.1 suitable for enterprise applications?

A: Absolutely. With robust API support, agentic AI capabilities, and strong benchmarks, it’s ideal for enterprise-scale AI solutions.

Conclusion: The Future of Open-Source LLMs Starts Here

Deep Seek v3.1 is more than just another large language model—it’s a leap forward in open, accessible, and agentic AI. With its hybrid inference modes, massive context window, advanced reasoning, and multilingual prowess, it’s poised to power the next generation of AI applications across industries.

Whether you’re building autonomous agents, analyzing massive datasets, or creating multilingual content, Deep Seek v3.1 offers the flexibility, performance, and openness you need.

Ready to get started?

- Download Deep Seek v3.1

- Try the online demo

- Join Data Science Dojo’s LLM Bootcamp to master LLMs and agentic AI