Generative AI represents a significant leap forward in the field of artificial intelligence. Unlike traditional AI, which is typically built to map specific inputs to a fixed set of outputs, generative AI can create new content that is often difficult to distinguish from content produced by humans.

It uses machine learning models trained on vast amounts of data to generate a diverse array of outputs, ranging from text to images and beyond. However, as the capabilities and reach of AI have grown, so has the need to handle it responsibly.

In this blog, we will explore how AI can be handled responsibly so that its outputs stay within established ethical and legal standards, answering the question ‘What is responsible AI?’ in detail.

Before we explore the main principles of responsible AI, let’s understand the concept.

What is Responsible AI?

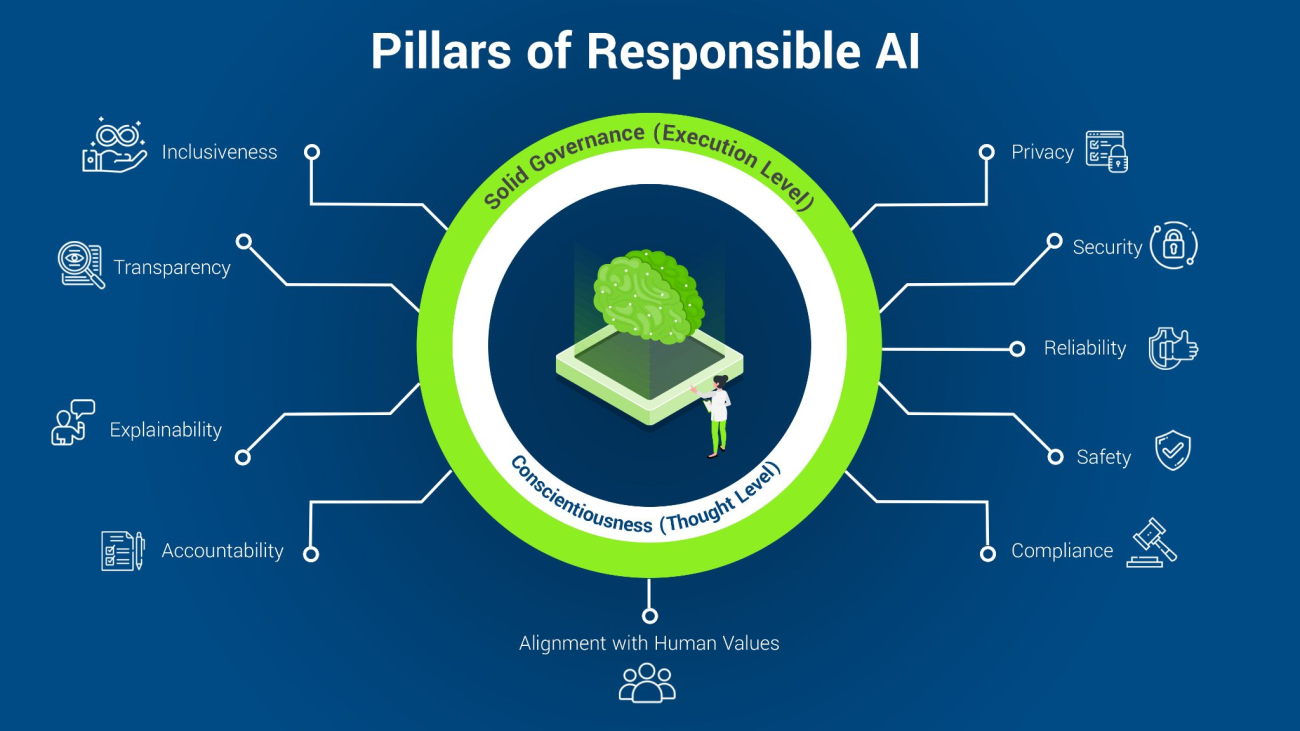

Responsible AI is a multifaceted approach to the development, deployment, and use of Artificial Intelligence (AI) systems. It ensures that our interaction with AI remains within ethical and legal standards while remaining transparent and aligning with societal values.

Responsible AI refers to all principles and practices that aim to ensure AI systems are fair, understandable, secure, and robust. The principles of responsible AI also allow the use of generative AI within our society to be governed effectively at all levels.

The Importance of Responsibility in AI Development

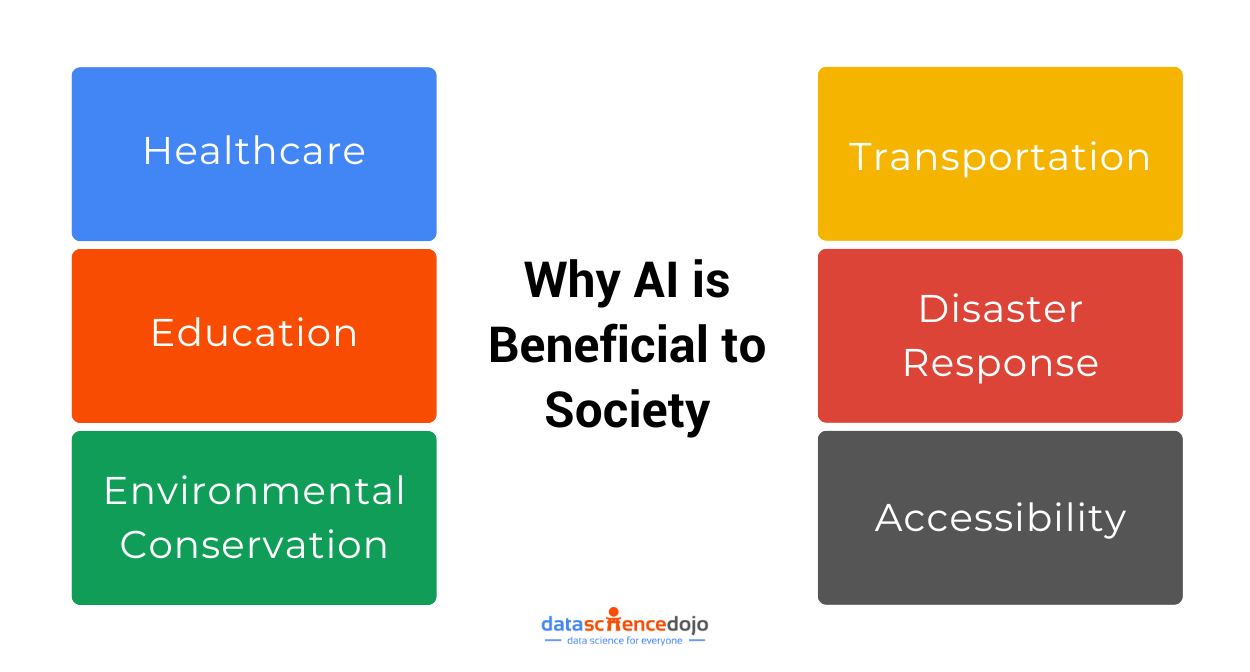

With great power comes great responsibility, a sentiment that holds particularly true in the realm of AI development. As generative AI technologies grow more sophisticated, they raise new ethical concerns and gain the potential to significantly impact society.

It’s crucial for those involved in AI creation — from data scientists to developers — to adopt a responsible approach that carefully evaluates and mitigates any associated risks. To dive deeper into Generative AI’s impact on society and its ethical, social, and legal implications, tune in to our podcast now!

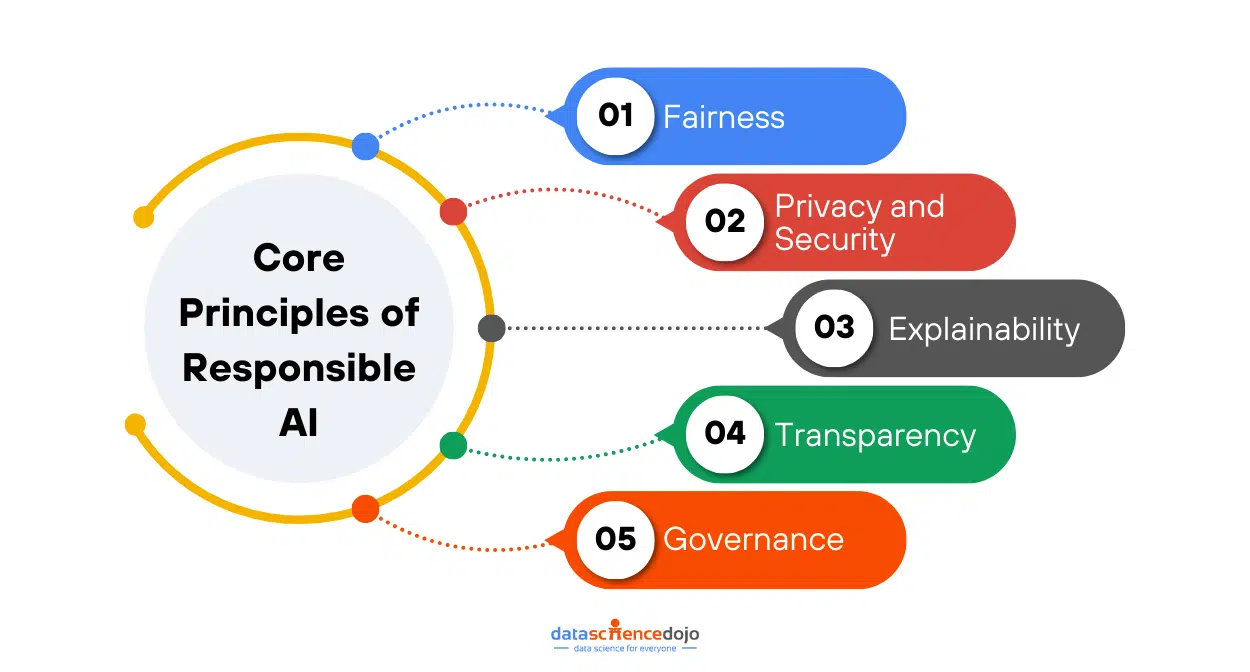

Core Principles of Responsible AI

Let’s delve into the core responsible AI principles:

Fairness

This principle is concerned with how an AI system impacts different groups of users, such as by gender, ethnicity, or other demographics. The goal is to ensure that AI systems do not create or reinforce unfair biases and that they treat all user groups equitably.
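One common way to check this in practice is to compare how often each group receives a favourable outcome. The snippet below is a minimal sketch of that idea, sometimes called a demographic parity check. The group labels and decisions are made-up illustrative data, and the function names are our own rather than part of any library.

```python
from collections import defaultdict

def selection_rates(records):
    """Return the fraction of favourable outcomes per group.

    `records` is an iterable of (group, outcome) pairs, where outcome is 1
    for a favourable decision and 0 otherwise.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        positives[group] += outcome
    return {group: positives[group] / totals[group] for group in totals}

def demographic_parity_gap(records):
    """Largest difference in selection rate between any two groups."""
    rates = selection_rates(records)
    return max(rates.values()) - min(rates.values())

# Hypothetical decisions: (group label, 1 = approved, 0 = rejected)
decisions = [
    ("group_a", 1), ("group_a", 1), ("group_a", 0), ("group_a", 1),
    ("group_b", 1), ("group_b", 0), ("group_b", 0), ("group_b", 0),
]

rates = selection_rates(decisions)
gap = demographic_parity_gap(decisions)
print(rates, gap)
```

A gap close to zero suggests the groups are treated similarly on this one metric. A large gap is a signal to investigate further, not proof of unfairness on its own.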

Privacy and Security

AI systems must protect sensitive data from unauthorized access, theft, and exposure. Ensuring privacy and security is essential to maintain user trust and to comply with legal and ethical standards concerning data protection.
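As a small illustration, one practical safeguard is to redact obvious personal identifiers before text is logged or sent to a model. The sketch below uses simple regular expressions for emails and phone numbers. These patterns are simplified assumptions for demonstration and will not catch every real-world format.

```python
import re

# Simplified patterns for illustration only; real PII detection needs more care.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")

def redact(text):
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    return text

sample = "Contact jane.doe@example.com or call +1 (555) 010-9999 for help."
print(redact(sample))
```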

Explainability

This entails implementing mechanisms to understand and evaluate the outputs of an AI system. It’s about making the decision-making process of AI models transparent and understandable to humans, which is crucial for trust and accountability, especially in high-stakes domains such as finance, law, and healthcare.
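For simple models, an explanation can be as direct as showing how much each input contributed to the result. The sketch below assumes a hypothetical linear scoring model, where the score is a weighted sum of features, and ranks the features by the size of their contribution. The weights and feature names are invented for illustration. More complex models need dedicated explanation techniques.

```python
def explain_score(weights, features):
    """Return the score of a linear model and its per-feature contributions.

    Contributions are sorted so the most influential feature comes first.
    """
    contributions = {name: weights[name] * features[name] for name in weights}
    score = sum(contributions.values())
    ranked = sorted(contributions.items(),
                    key=lambda item: abs(item[1]), reverse=True)
    return score, ranked

# Hypothetical credit-style model and applicant
weights = {"income": 0.5, "debt": -1.0, "years_employed": 0.25}
applicant = {"income": 4.0, "debt": 1.5, "years_employed": 2.0}

score, ranked = explain_score(weights, applicant)
for name, value in ranked:
    print(f"{name}: {value:+.2f}")
print("score:", score)
```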

Transparency

This principle is about communicating information about an AI system so that stakeholders can make informed choices about their use of the system. Transparency involves disclosing how the AI system works, the data it uses, and its limitations, which is fundamental for gaining user trust and consent.

Governance

Governance refers to the processes within an organization to define, implement, and enforce responsible AI practices. This includes establishing clear policies, procedures, and accountability mechanisms to govern the development and use of AI systems.

These principles are integral to the development and deployment of AI systems that are ethical, fair, and respectful of user rights and societal norms.

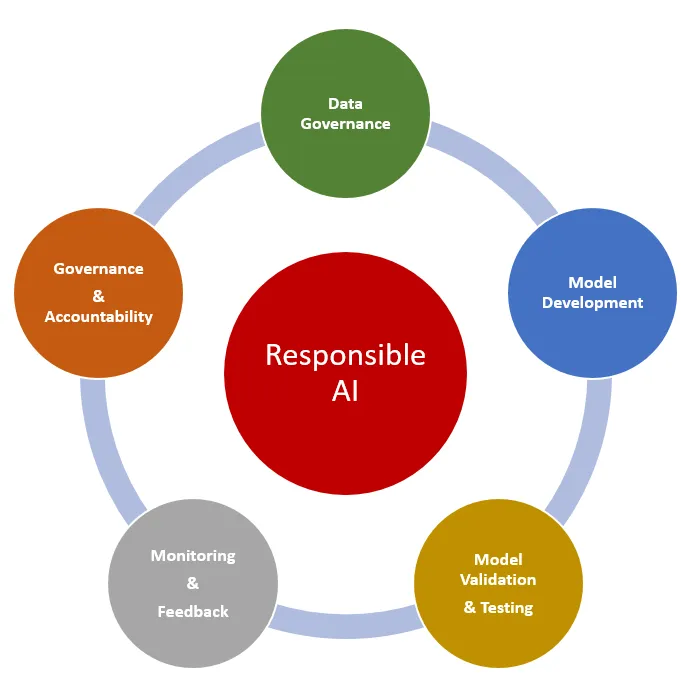

How to build Responsible AI?

Here’s a step-by-step guide to building trustworthy AI systems.

Identify potential harms

This step is about recognizing and understanding the various risks and negative impacts that generative AI applications could potentially cause. It’s a proactive measure to consider what could go wrong and how these risks could affect users and society at large.

This includes issues of privacy invasion, amplification of biases, unfair treatment of certain user groups, and other ethical concerns.

Measure the presence of these harms

Once potential harms have been identified, the next step is to measure and evaluate how and to what extent these issues are manifested in the AI system’s outputs.

This involves rigorous testing and analysis to detect any harmful patterns or outcomes produced by the AI. It is an essential process to quantify the identified risks and understand their severity.
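To make this concrete, a basic measurement loop runs the system on a set of test prompts and counts how often each type of harm appears in the outputs. The sketch below assumes a very simple keyword-based detector. The harm categories, trigger phrases, and sample outputs are hypothetical, and a real evaluation would typically rely on trained classifiers or human review.

```python
from collections import Counter

# Hypothetical harm categories and trigger phrases, for illustration only.
HARM_PATTERNS = {
    "privacy": ["home address", "social security number"],
    "stereotype": ["women can't", "men can't"],
}

def flag_harms(output):
    """Return the set of harm categories whose trigger phrases appear."""
    lowered = output.lower()
    return {category for category, phrases in HARM_PATTERNS.items()
            if any(phrase in lowered for phrase in phrases)}

def harm_rates(outputs):
    """Fraction of outputs flagged for each harm category."""
    if not outputs:
        return {category: 0.0 for category in HARM_PATTERNS}
    counts = Counter()
    for output in outputs:
        counts.update(flag_harms(output))
    return {category: counts[category] / len(outputs)
            for category in HARM_PATTERNS}

# Stand-ins for outputs collected from the AI system under test
test_outputs = [
    "Here is a summary of the article.",
    "Her home address is 12 Example Street.",
    "Women can't be engineers.",
    "The capital of France is Paris.",
]

report = harm_rates(test_outputs)
print(report)
```

Tracking these rates across model versions gives a rough baseline for judging whether later mitigation work is helping.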

Mitigate the harms

After measuring the presence of potential harms, it’s crucial to actively work on strategies and solutions to reduce their impact and presence. This might involve adjusting the training data, reconfiguring the AI model, implementing additional filters, or any other measures that can help minimize the negative outcomes.

Moreover, clear communication with users about the risks and the steps taken to mitigate them is an important aspect of this step, ensuring transparency and maintaining trust.
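An additional filter is often the quickest mitigation to put in place. The sketch below wraps a text generator so that any reply containing a blocked term is replaced with a safe fallback message. The blocklist, the fallback text, and the `fake_model` function are all placeholders we made up. A production system would call a real model and use a more robust safety check.

```python
BLOCKED_TERMS = {"password", "credit card number"}  # hypothetical blocklist
FALLBACK = "I can't help with that request."

def guarded(generate):
    """Wrap a text generator so blocked replies are replaced by a fallback."""
    def wrapper(prompt):
        reply = generate(prompt)
        if any(term in reply.lower() for term in BLOCKED_TERMS):
            return FALLBACK
        return reply
    return wrapper

def fake_model(prompt):
    """Stand-in for a real model call; returns canned replies."""
    canned = {
        "hi": "Hello! How can I help?",
        "leak": "The admin password is hunter2.",
    }
    return canned.get(prompt, "I'm not sure.")

safe_model = guarded(fake_model)
print(safe_model("hi"))
print(safe_model("leak"))
```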

Operate the solution responsibly

The final step emphasizes the need to operate and maintain the AI solution in a responsible manner. This includes having a well-defined plan for deployment that considers all aspects of responsible usage.

It also involves ongoing monitoring, maintenance, and updates to the AI system to ensure it continues to operate within the ethical guidelines laid out. This step is about the continuous responsibility of managing the AI solution throughout its lifecycle.
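Ongoing monitoring can start small. The sketch below keeps a rolling window of recent responses and raises an alert when the share of flagged responses exceeds a threshold. The class name, window size, and threshold are illustrative assumptions and should be tuned to the application.

```python
from collections import deque

class HarmRateMonitor:
    """Track the share of flagged responses over a rolling window."""

    def __init__(self, window=100, threshold=0.05):
        self.events = deque(maxlen=window)  # oldest events drop off automatically
        self.threshold = threshold

    def record(self, flagged):
        self.events.append(bool(flagged))

    @property
    def rate(self):
        return sum(self.events) / len(self.events) if self.events else 0.0

    def should_alert(self):
        # Only alert once the window is full, to avoid noisy early readings.
        window_full = len(self.events) == self.events.maxlen
        return window_full and self.rate > self.threshold

monitor = HarmRateMonitor(window=4, threshold=0.25)
for flagged in [False, False, True, False, True]:
    monitor.record(flagged)
    print(monitor.rate, monitor.should_alert())
```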

Let’s take a practical example to further understand how we can build trustworthy and responsible AI models.

Case study: Building a responsible AI chatbot

Designing AI chatbots requires careful thought not only about their functional capabilities but also their interaction style and the underlying ethical implications. When deciding on the personality of the AI, we must consider whether we want an AI that always agrees or one that challenges users to encourage deeper thinking or problem-solving.

How do we balance representing diverse perspectives without reinforcing biases?

The balance between representing diverse perspectives and avoiding the reinforcement of biases is a critical consideration. AI chatbots are often trained on historical data, which can reflect societal biases.

For instance, if you ask an AI to generate an image of a doctor or a nurse, the resulting images may reflect gender or racial stereotypes due to biases in the training data.

Beyond bias, the chatbot should not be overly intrusive and should serve as an assistive or embedded feature rather than the central focus of the product. It’s important to create an AI that supports the user contextually, based on the situation, rather than dominating the interaction.

The design process should also involve thinking critically about how the AI maintains a high level of integrity, which includes acknowledging its limitations as a system without consciousness or general intelligence. The AI can be designed to sound confident, but not to the extent that it presents false or misleading answers as fact.
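One simple way to express this in code is to let the chatbot decline to answer when it is not confident enough. The sketch below assumes each candidate answer comes with a confidence score between 0 and 1, which in practice would have to come from the model or a separate verifier. The function name, threshold, and example data are hypothetical.

```python
UNSURE_REPLY = "I'm not sure about that."

def answer_or_abstain(candidates, min_confidence=0.7):
    """Return the most confident answer, or abstain if none is confident enough.

    `candidates` is a list of (answer, confidence) pairs.
    """
    if not candidates:
        return UNSURE_REPLY
    answer, confidence = max(candidates, key=lambda pair: pair[1])
    if confidence < min_confidence:
        return UNSURE_REPLY
    return answer

print(answer_or_abstain([("Paris", 0.95), ("Lyon", 0.03)]))
print(answer_or_abstain([("1912", 0.40), ("1913", 0.35)]))
```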

Additionally, the design of AI chatbots should allow users to experience natural and meaningful interactions. This can include allowing the users to choose the personality of the AI, which can make the interaction more relatable and engaging.

By following these steps, developers and organizations can strive to build AI systems that are ethical, fair, and trustworthy, thus fostering greater acceptance and more responsible utilization of AI technology.

Interested in learning how to implement AI guardrails in RAG-based solutions? Tune in to our podcast with the CEO of LlamaIndex now.