Did you know that neural networks are behind the technologies you use daily, from voice assistants to facial recognition? These powerful computational models are loosely inspired by the brain’s neural pathways, allowing machines to recognize patterns and learn from data.

As the backbone of modern AI, neural networks tackle complex problems that traditional algorithms struggle with, enhancing applications like medical diagnostics and financial forecasting. This beginner’s guide explains neural networks in simple terms, exploring their types, applications, and transformative impact on technology.

Let’s break down this fascinating concept into digestible pieces, using real-world examples and simple language.

What is a Neural Network?

Imagine a neural network as a mini-brain in your computer. It’s a collection of algorithms designed to recognize patterns, much like how our brain identifies patterns and learns from experiences.

For instance, when you show it numerous labeled pictures of cats and dogs, it gradually learns to distinguish between the two, much like a child learning to tell animals apart.

Structure of Neural Networks

Think of it as a layered cake. Each layer consists of nodes, loosely similar to neurons in the brain. The layers are connected in sequence, with each layer responsible for a specific part of the task and passing its results on to the next.

For example, in facial recognition software, one layer might focus on identifying edges, another on recognizing shapes, and so on, until the final layer determines the face’s identity.

How do Neural Networks learn?

Learning happens through a process called training. During training, the network adjusts its internal settings (its weights and biases) based on the data it receives. Consider a weather prediction model: by feeding it historical weather data, we allow it to learn patterns it can use to predict future weather.

Backpropagation and gradient descent

These are two key mechanisms in learning. Backpropagation is like a feedback system: after each prediction, it works backward through the network to measure how much each connection contributed to the error, so the network can learn from its mistakes. Gradient descent then uses that information to nudge each connection in the direction that reduces the error. It’s akin to walking downhill to find the lowest point in a valley, the point where the network’s predictions are most accurate.
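To make the valley analogy concrete, here is a minimal Python sketch of gradient descent on a made-up, one-parameter loss function, `(w - 3)^2`, whose lowest point is at `w = 3`. The function names, learning rate, and step count are illustrative assumptions. In a real network the loss depends on many weights at once, and backpropagation supplies the gradient instead of the hand-derived formula used here.

```python
def loss(w):
    # A made-up "valley": the loss is smallest at w = 3
    return (w - 3) ** 2


def loss_gradient(w):
    # Slope of the valley at w (derivative of (w - 3)^2)
    return 2 * (w - 3)


def gradient_descent(start, learning_rate=0.1, steps=100):
    w = start
    for _ in range(steps):
        # Step downhill: move against the slope, scaled by the learning rate
        w -= learning_rate * loss_gradient(w)
    return w


w_final = gradient_descent(start=10.0)
print(f"w = {w_final:.4f}, loss = {loss(w_final):.6f}")  # prints: w = 3.0000, loss = 0.000000
```

Each step moves `w` against the slope, so it settles near the bottom of the valley; a learning rate that is too large would overshoot the bottom instead of settling into it.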

Practical application: Recognizing handwritten digits

A classic example is teaching a neural network to recognize handwritten numbers. By showing it thousands of handwritten digits, it learns the unique features of each number and can eventually identify them with high accuracy.
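The text does not say how the network reports which digit it sees. One common setup, assumed here, is an output layer with ten neurons (one per digit from 0 to 9) whose raw scores are turned into probabilities by a softmax function; the predicted digit is the one with the highest probability. The scores below are invented for illustration, not produced by a trained model.

```python
import math


def softmax(scores):
    # Subtract the largest score first for numerical stability
    largest = max(scores)
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical raw output-layer scores for one image, one per digit 0..9
# (invented numbers, not from a trained model)
scores = [0.1, 0.3, 0.2, 4.0, 0.0, 1.5, 0.2, 0.1, 0.9, 0.4]

probabilities = softmax(scores)
predicted_digit = max(range(10), key=lambda digit: probabilities[digit])
print(predicted_digit, round(probabilities[predicted_digit], 3))
```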

Architecture of Neural Networks

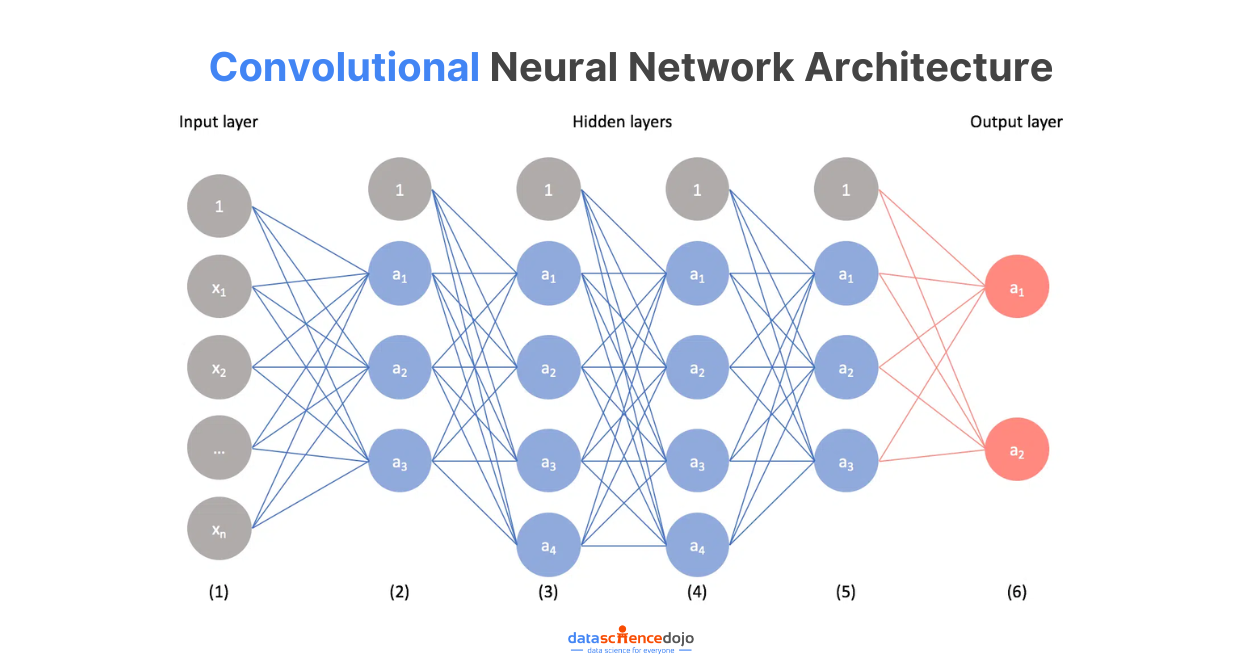

Neural networks are loosely inspired by the structure and function of the human brain, using a system of interconnected nodes or “neurons” to process and interpret data. Here’s a breakdown of their architecture:

Basic Structure

A typical neural network consists of an input layer, one or more hidden layers, and an output layer.

- Input layer: This is where the network receives its input data.

- Hidden layers: These layers, located between the input and output layers, perform most of the computational work. Each layer consists of neurons that apply specific transformations to the data.

- Output layer: This layer produces the final output of the network.
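The flow from input layer to hidden layer to output layer can be sketched in plain Python. This is a minimal illustration rather than a library API: the `dense_layer` helper, the layer sizes (2 inputs, 3 hidden neurons, 1 output), the choice of ReLU and sigmoid activations, and all weight values are hypothetical. In practice the weights are learned during training rather than picked by hand.

```python
import math


def relu(z):
    return max(0.0, z)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def dense_layer(inputs, weights, biases, activation):
    # Each row of `weights` holds the incoming weights of one neuron
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(activation(z))
    return outputs


# Hypothetical, hand-picked parameters: 2 inputs -> 3 hidden neurons -> 1 output
hidden_weights = [[0.5, -0.2], [0.3, 0.8], [-0.6, 0.1]]
hidden_biases = [0.0, 0.1, 0.2]
output_weights = [[1.0, -1.0, 0.5]]
output_biases = [0.0]

x = [1.0, 2.0]                                                 # input layer
h = dense_layer(x, hidden_weights, hidden_biases, relu)        # hidden layer
y = dense_layer(h, output_weights, output_biases, sigmoid)     # output layer
print(h, y)
```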

Neurons

Neurons are the fundamental units of a neural network. Neurons in one layer are connected to neurons in the next layer and transmit signals to them. Each neuron typically applies a mathematical function to its inputs, which determines its activation, or output.

Weights and Biases: Each connection between neurons has a weight, and each neuron has a bias. These values are adjusted during the training process to optimize the network’s performance.

Activation Functions: These functions turn the weighted sum of a neuron’s inputs, plus its bias, into the neuron’s output, determining whether and how strongly it activates. Common activation functions include sigmoid, tanh, and ReLU (Rectified Linear Unit).
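Here is a minimal Python sketch of the three activation functions named above, applied to the weighted sum of a single neuron. The input values, weights, and bias are made up for illustration.

```python
import math


def sigmoid(z):
    # Squashes any input into the range (0, 1)
    return 1.0 / (1.0 + math.exp(-z))


def tanh(z):
    # Squashes any input into the range (-1, 1)
    return math.tanh(z)


def relu(z):
    # Passes positive values through unchanged and turns negatives into 0
    return max(0.0, z)


# Hypothetical neuron: weighted sum of its inputs plus a bias
inputs = [0.5, -1.0, 2.0]
weights = [0.4, 0.3, -0.1]
bias = 0.05
z = sum(w * x for w, x in zip(weights, inputs)) + bias

for name, fn in [("sigmoid", sigmoid), ("tanh", tanh), ("relu", relu)]:
    print(name, round(fn(z), 4))
```

Sigmoid maps the sum into (0, 1), tanh into (-1, 1), and ReLU simply zeroes out negative sums, which makes it cheap to compute and a common choice for hidden layers.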

Learning Process: Training relies on backpropagation, which compares the network’s output with the expected result and works backward through the layers to calculate how much each weight and bias contributed to the error. An optimization algorithm such as gradient descent then uses these calculations to adjust the weights and biases so that the error, or loss function, is minimized.
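The loop below is a minimal sketch of this process for a single sigmoid neuron learning the logical OR of two inputs. Several choices are assumptions for illustration: the toy dataset, binary cross-entropy as the loss (for which the chain rule reduces the error signal at the neuron to `prediction - target`), the learning rate, and the number of epochs. In a multi-layer network, backpropagation carries this same chain-rule calculation backward through every layer.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Toy dataset (hypothetical): the logical OR of two inputs
data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]


def predict(weights, bias, x):
    # Forward pass: weighted sum plus bias, then the activation function
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return sigmoid(z)


def train(data, learning_rate=0.5, epochs=2000):
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for x, target in data:
            prediction = predict(weights, bias, x)
            # Backward pass (chain rule): with sigmoid + binary cross-entropy,
            # dLoss/dz simplifies to (prediction - target), and dz/dw_i = x_i
            error = prediction - target
            # Gradient descent: step each parameter against its gradient
            weights = [w - learning_rate * error * xi for w, xi in zip(weights, x)]
            bias -= learning_rate * error
    return weights, bias


weights, bias = train(data)
for x, target in data:
    print(x, target, round(predict(weights, bias, x), 3))
```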

Types of Neural Networks

There are various types of neural network architectures, each suited for different tasks. For example, Convolutional Neural Networks (CNNs) are used for image processing, while Recurrent Neural Networks (RNNs) are effective for sequential data like speech or text.

Convolutional Neural Networks (CNNs)

Among these architectures, CNNs stand out as particularly well suited to image processing tasks.

These networks excel in analyzing visual data because they apply convolutional operations across grid-like data structures, making them highly effective in recognizing patterns and features within images.
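The core convolution operation can be sketched in a few lines of Python. This example slides a hand-picked 3×3 vertical-edge kernel over a tiny made-up image whose left half is dark and right half bright. In a real CNN the kernel values are learned during training, and optimized libraries do this work. Like most deep-learning frameworks, the sketch does not flip the kernel, so strictly speaking it computes a cross-correlation.

```python
def convolve2d(image, kernel):
    # "Valid" convolution: slide the kernel over every position where it fully fits
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Multiply the kernel with the image patch under it and sum
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output


# Made-up 4x6 grayscale "image": dark on the left, bright on the right
image = [
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
]
# Hand-picked vertical-edge detector (a CNN would learn these values)
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

for row in convolve2d(image, kernel):
    print(row)  # prints [0, 27, 27, 0] for each of the 2 output rows
```

The response is nonzero only where the sliding window straddles the boundary between dark and bright pixels, which is the kind of edge feature an early CNN layer might detect.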

This capability is crucial for applications such as facial recognition, medical imaging, and autonomous vehicles where visual data interpretation is paramount.

Recurrent Neural Networks (RNNs)

On the other hand, Recurrent Neural Networks (RNNs) are tailored to manage sequential data, such as speech or text. RNNs are designed with feedback loops that allow them to maintain a memory of previous inputs, which is essential for processing sequences where the context of prior data influences the interpretation of subsequent data.
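A minimal Python sketch of this feedback loop: a single-unit RNN with scalar weights, where the hidden state computed at each step is fed back in at the next step. The weight values and the tanh activation are illustrative assumptions; real RNNs use weight matrices and vector-valued hidden states.

```python
import math


def rnn_forward(sequence, w_input, w_hidden, bias):
    # The hidden state acts as the network's memory of earlier inputs
    hidden = 0.0
    states = []
    for x in sequence:
        # The same weights are reused at every step; w_hidden * hidden is the feedback loop
        hidden = math.tanh(w_input * x + w_hidden * hidden + bias)
        states.append(hidden)
    return states


# Hypothetical parameters, chosen by hand for illustration
params = dict(w_input=1.0, w_hidden=0.5, bias=0.0)

with_pulse = rnn_forward([1.0, 0.0, 0.0], **params)
silent = rnn_forward([0.0, 0.0, 0.0], **params)
print(with_pulse)
print(silent)
```

In the first sequence only the first input is nonzero, yet every later hidden state stays positive and fades gradually: the network still carries a trace of that earlier input. The all-zero sequence leaves the hidden state at zero throughout.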

This makes RNNs particularly useful in applications like natural language processing, where understanding the sequence and context of words is critical for tasks such as language translation, sentiment analysis, and voice recognition.

In these scenarios, RNNs can effectively model temporal dynamics and dependencies, providing a more nuanced understanding of sequential data compared to other neural network architectures.

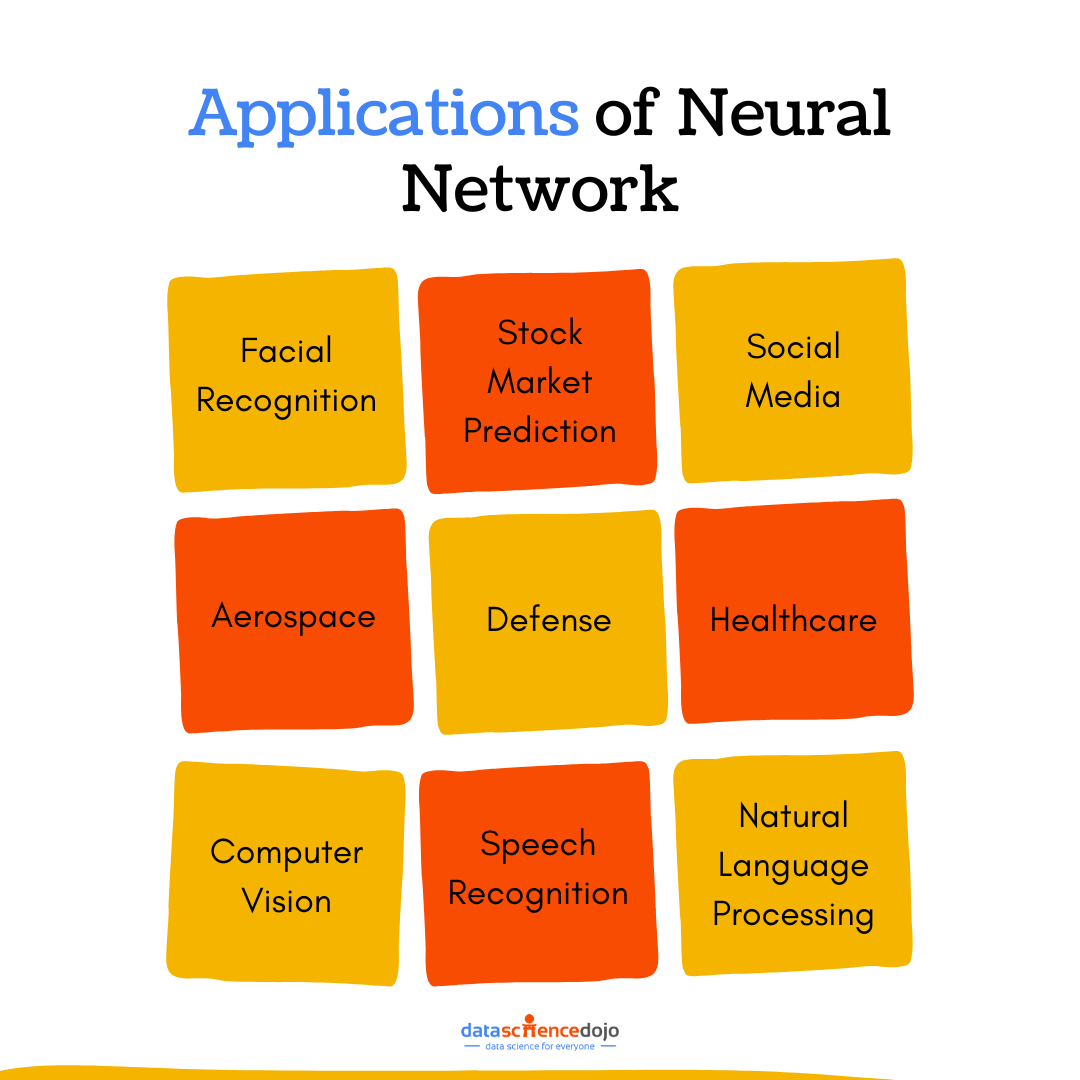

Applications of Neural Networks

Neural networks have become integral to many industries, enhancing capabilities, driving innovation, and changing how tasks are performed and decisions are made. Here are some key real-world applications:

Facial recognition: Neural networks are at the core of facial recognition technologies, which are widely used in security systems to identify individuals and grant access. They power smartphone unlocking features, ensuring secure yet convenient access for users. Moreover, social media platforms utilize these networks for tagging photos and streamlining user interaction by automatically recognizing faces and suggesting tags.

Stock market prediction: In the financial sector, neural networks can analyze historical stock market data to identify trends and patterns that may indicate future market behavior. This capability can help investors and financial analysts make more informed decisions, potentially increasing returns and reducing risks.

Social media: Social media platforms leverage neural networks to analyze user data, delivering personalized content and targeted advertisements. By understanding user behavior and preferences, these networks enhance user engagement and satisfaction through tailored experiences.

Aerospace: In aerospace, neural networks contribute to flight path optimization, ensuring efficient and safe travel routes. They are also employed in predictive maintenance, identifying potential issues in aircraft before they occur, thus reducing downtime and enhancing safety. Additionally, these networks simulate aerodynamic properties to improve aircraft design and performance.

Defense: Defense applications of neural networks include surveillance, where they help detect and monitor potential threats. They are also pivotal in developing autonomous weapons systems and enhancing threat detection capabilities, ensuring national security and defense readiness.

Healthcare: Neural networks revolutionize healthcare by assisting in medical diagnosis and drug discovery. They analyze complex medical data, enabling the development of personalized medicine tailored to individual patient needs. This approach improves treatment outcomes and patient care.

Computer vision: In computer vision, neural networks are fundamental for tasks such as image classification, object detection, and scene understanding. These capabilities are crucial in various applications, from autonomous vehicles to advanced security systems.

Speech recognition: Neural networks enhance speech recognition technologies, powering voice-activated assistants like Siri and Alexa. They also improve transcription services and facilitate language translation, making communication more accessible across language barriers.

Natural language processing (NLP): In NLP, neural networks play a key role in understanding, interpreting, and generating human language. Applications include chatbots that provide customer support and text analysis tools that extract insights from large volumes of data.

These applications demonstrate the versatility and power of neural networks in handling complex tasks across many domains. As these technologies continue to evolve, their impact is expected to expand further, and embracing them can provide a competitive edge that fosters growth and development.

Conclusion

In summary, neural networks process input data through a series of layers and neurons, using weights, biases, and activation functions to learn and make predictions or classifications. Their architecture can vary greatly depending on the specific application.

They are a powerful tool in AI, capable of learning and adapting in ways loosely inspired by the human brain. From voice assistants to medical diagnosis, they are reshaping how we interact with technology, making our world smarter and more connected.