Applications powered by large language models (LLMs) are revolutionizing the way businesses operate, from automating customer service to enhancing data analysis. In today’s fast-paced technological landscape, staying ahead means leveraging these powerful tools to their full potential.

For instance, a global e-commerce company that wants to provide customer support around the clock can use LangChain to build an intelligent chatbot that draws on both the business’s internal knowledge base and external data sources.

As a result, the enterprise can build a chatbot capable of understanding and responding to customer inquiries with context-aware, accurate information, significantly reducing response times and enhancing customer satisfaction.

LangChain stands out by simplifying the development and deployment of LLM-powered applications, making it easier for businesses to integrate advanced AI capabilities into their processes.

In this blog, we will explore what LangChain is, along with its key features, benefits, and practical use cases. We will also delve into related tools like LlamaIndex, LangGraph, and LangSmith to provide a comprehensive understanding of this powerful framework.

What is LangChain?

LangChain is an innovative open-source framework crafted for developing powerful applications using LLMs. These advanced AI systems, trained on massive datasets, can produce human-like text with remarkable accuracy.

LangChain makes it easier to create LLM-driven applications by providing a toolkit that simplifies the integration of these models and extends what they can do.

LangChain was launched by Harrison Chase and Ankush Gola in October 2022. It has gained popularity among developers and AI enthusiasts for its robust features and ease of use.

Its initial goal was to link LLMs with external data sources, enabling the development of context-aware, reasoning applications. Over time, LangChain has advanced into a useful toolkit for building LLM-powered applications.

By integrating LLMs with real-time data and external knowledge bases, LangChain empowers businesses to create more sophisticated and responsive AI applications, driving innovation and improving service delivery across various sectors.

What are the Features of LangChain?

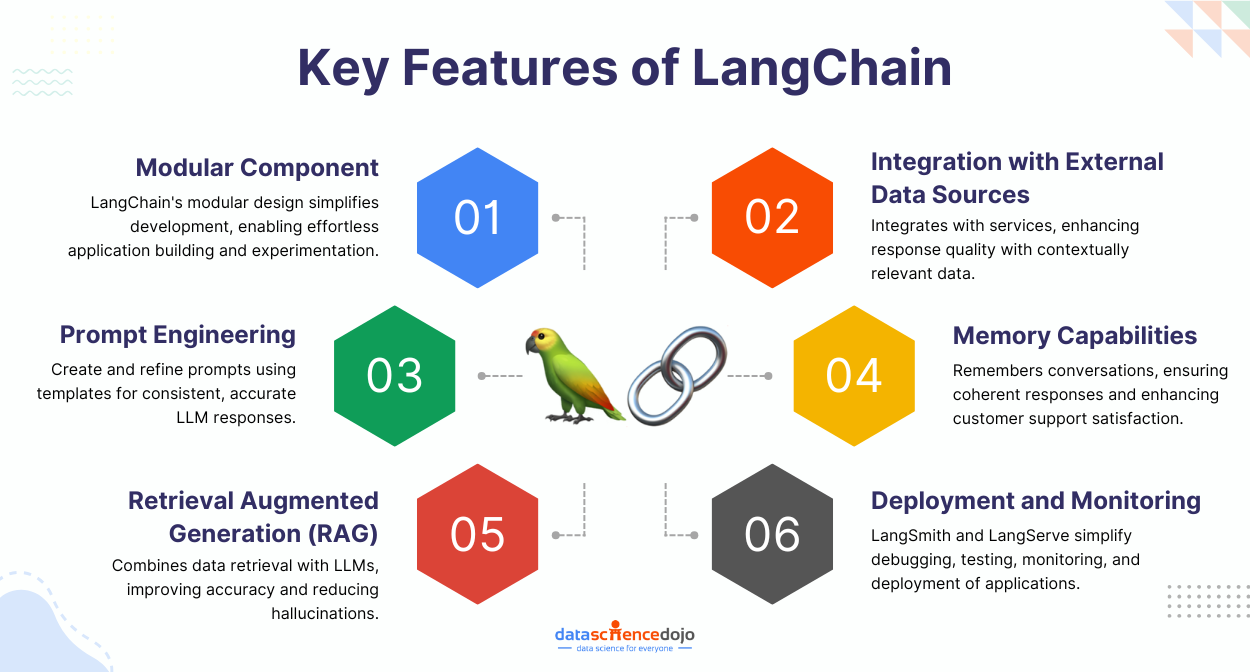

LangChain is revolutionizing the development of AI applications with its comprehensive suite of features. From modular components that simplify complex tasks to advanced prompt engineering and seamless integration with external data sources, LangChain offers everything developers need to build powerful, intelligent applications.

1. Modular Components

LangChain stands out with its modular design, making it easier for developers to build applications.

Imagine having a box of LEGO bricks, each representing a different function or tool. With LangChain, these bricks are modular components, allowing you to snap them together to create sophisticated applications without needing to write everything from scratch.

For example, if you’re building a chatbot, you can combine modules for natural language processing (NLP), data retrieval, and user interaction. This modularity ensures that you can easily add, remove, or swap out components as your application’s needs change.

Ease of Experimentation

This modular design makes development a flexible process. The LangChain framework is designed to make experimentation and prototyping easy.

For instance, if you’re uncertain which language model will give you the best results, LangChain allows you to quickly swap between different models without rewriting your entire codebase. This ease of experimentation is useful in AI development where rapid iteration and testing are crucial.
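The swap described above can be pictured with a small plain-Python sketch. It does not use LangChain's API: `EchoModel`, `ShoutModel`, and `answer` are hypothetical names standing in for real model wrappers, and the only assumption is that every model exposes the same `invoke` method.

```python
from typing import Protocol


class TextModel(Protocol):
    """Anything with an invoke(prompt) -> str method counts as a model here."""

    def invoke(self, prompt: str) -> str: ...


class EchoModel:
    """Hypothetical stand-in for one LLM provider."""

    def invoke(self, prompt: str) -> str:
        return f"echo: {prompt}"


class ShoutModel:
    """Hypothetical stand-in for a second provider."""

    def invoke(self, prompt: str) -> str:
        return prompt.upper()


def answer(model: TextModel, question: str) -> str:
    # Application code depends only on the shared interface,
    # so the model can be swapped without touching this function.
    return model.invoke(f"Question: {question}")


if __name__ == "__main__":
    for model in (EchoModel(), ShoutModel()):
        print(answer(model, "What is LangChain?"))
```

Because `answer` never names a concrete model class, trying a different model is a one-line change at the call site.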

Thus, by breaking down complex tasks into smaller, manageable components and offering an environment conducive to experimentation, LangChain empowers developers to create innovative, high-quality applications efficiently.

2. Integration with External Data Sources

LangChain excels in integrating with external data sources, creating context-aware applications that are both intelligent and responsive. Let’s dive into how this works and why it’s beneficial.

Data Access

The framework is designed to support extensive data access from external sources. Whether you’re dealing with file storage services like Dropbox, Google Drive, and Microsoft OneDrive, or fetching information from web content such as YouTube and PubMed, LangChain has you covered.

It also connects with collaboration tools like Airtable, Trello, Figma, and Notion, as well as data stores and tools such as MongoDB, Microsoft databases, and Pandas DataFrames. Once you configure the necessary connections, LangChain handles loading the data so that the model can draw on it when responding.

Rich Context-Aware Responses

Data access is not the only focal point. The goal is also to improve response quality by using the context that external sources provide. When your application can tap into a wealth of external data, it can provide answers that are not only accurate but also contextually relevant.

By enabling rich and context-aware responses, LangChain ensures that applications are informative, highly relevant, and useful to their users. This capability transforms simple data retrieval tasks into powerful, intelligent interactions, making LangChain an invaluable tool for developers across various industries.

For instance, a healthcare application could integrate patient data from a secure database with the latest medical research. When a doctor inquires about treatment options, the application provides suggestions based on the patient’s history and the most recent studies, ensuring that the doctor has the best possible information.

3. Prompt Engineering

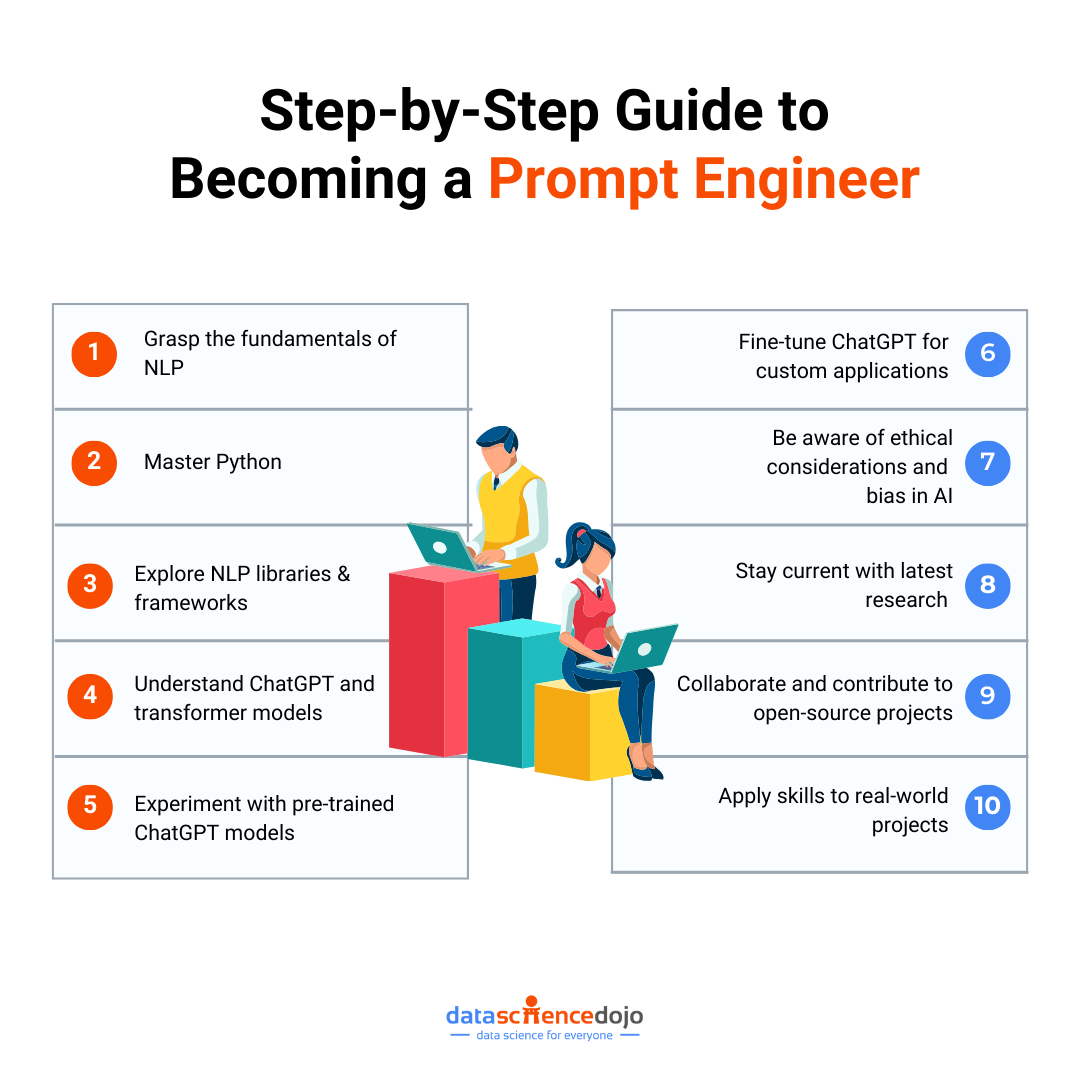

Prompt engineering is one of the coolest aspects of working with LangChain. It’s all about crafting the right instructions to get the best possible responses from LLMs. Let’s unpack this with two key elements: advanced prompt engineering and the use of prompt templates.

Advanced Prompt Engineering

LangChain takes prompt engineering to the next level by providing robust support for creating and refining prompts. It helps you fine-tune the questions or commands you give to your LLMs to get the most accurate and relevant responses, ensuring your prompts are clear, concise, and tailored to the specific task at hand.

For example, if you’re developing a customer service chatbot, you can create prompts that guide the LLM to provide helpful and empathetic responses. You might start with a simple prompt like, “How can I assist you today?” and then refine it to be more specific based on the types of queries your customers commonly have.

LangChain makes it easy to continuously tweak and improve these prompts until they are just right.

Prompt Templates

Prompt templates are pre-built structures that you can use to consistently format your prompts. Instead of crafting each prompt from scratch, you can use a template that includes all the necessary elements and just fill in the blanks.

For instance, if you frequently need your LLM to generate fun facts about different animals, you could create a prompt template like, “Tell me an {adjective} fact about {animal}.”

When you want to use it, you simply plug in the specifics: “Tell me an interesting fact about zebras.” This ensures that your prompts are always well-structured and ready to go, without the hassle of constant rewriting.
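The fill-in-the-blanks idea can be sketched with Python's standard library alone. LangChain ships its own `PromptTemplate` class for this purpose; the sketch below deliberately avoids it, and the helper names `template_variables` and `render` are hypothetical.

```python
from string import Formatter

TEMPLATE = "Tell me an {adjective} fact about {animal}."


def template_variables(template: str) -> list[str]:
    """Return the placeholder names a template expects, in order."""
    return [name for _, name, _, _ in Formatter().parse(template) if name]


def render(template: str, **values: str) -> str:
    """Fill in the blanks, failing loudly if a value is missing."""
    missing = [v for v in template_variables(template) if v not in values]
    if missing:
        raise KeyError(f"missing template values: {missing}")
    return template.format(**values)


if __name__ == "__main__":
    print(template_variables(TEMPLATE))
    print(render(TEMPLATE, adjective="interesting", animal="zebras"))
```

Checking for missing values before formatting is a design choice in this sketch: it surfaces a forgotten placeholder as a clear error instead of a half-filled prompt.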

These templates are especially handy because they can be shared and reused across different projects, making your workflow much more efficient. LangChain’s prompt templates also integrate smoothly with other components, allowing you to build complex applications with ease.

Whether you’re a seasoned developer or just starting out, these tools make it easier to harness the full power of LLMs.

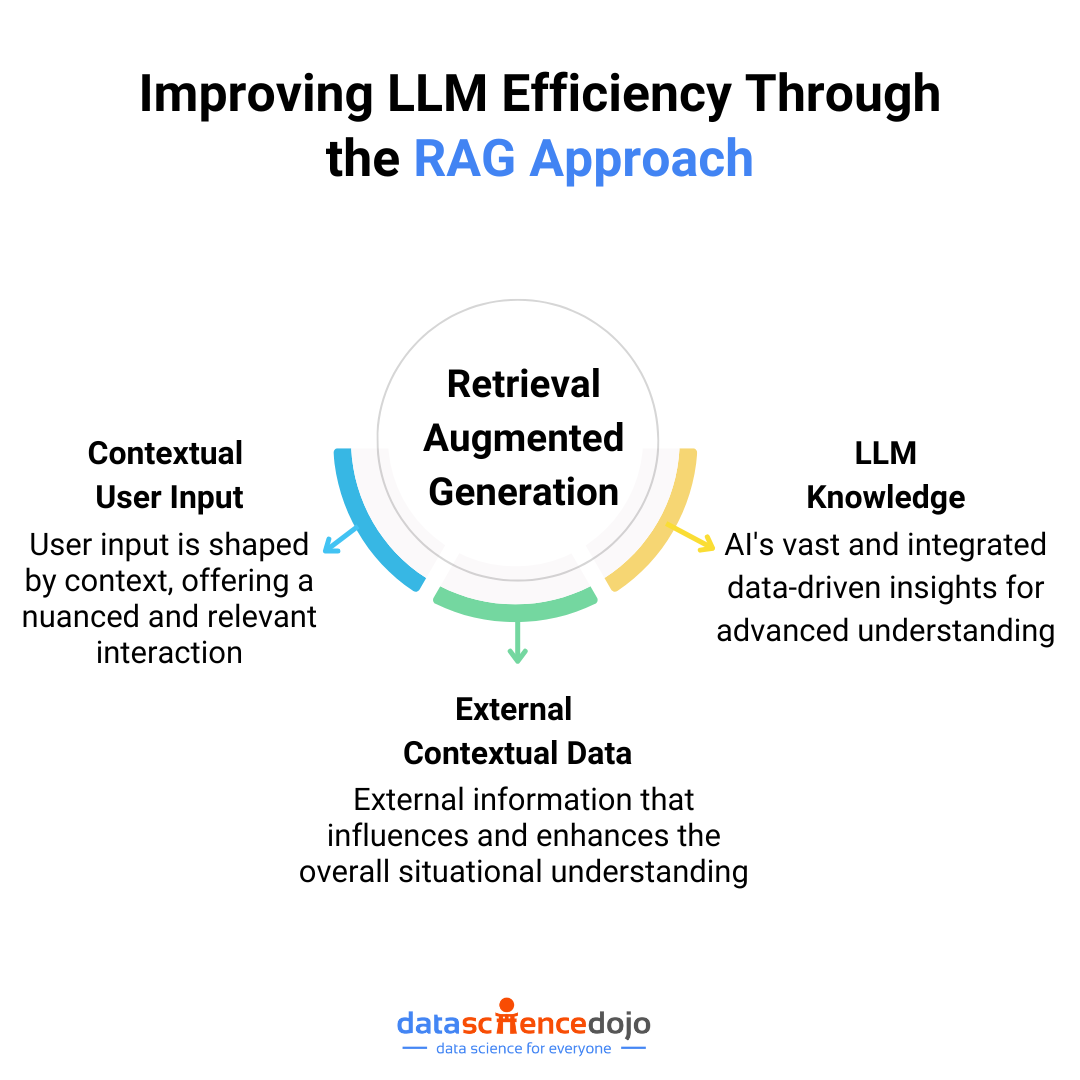

4. Retrieval Augmented Generation (RAG)

RAG combines the power of retrieving relevant information from external sources with the generative capabilities of large language models (LLMs). Let’s explore why this is so important and how LangChain makes it all possible.

RAG Workflows

RAG is a technique that helps LLMs fetch relevant information from external databases or documents to ground their responses in reality. This reduces the chances of “hallucinations” – those moments when the AI just makes things up – and improves the overall accuracy of its responses.

Imagine you’re using an AI assistant to get the latest financial market analysis. Without RAG, the AI might rely solely on outdated training data, potentially giving you incorrect or irrelevant information. But with RAG, the AI can pull in the most recent market reports and data, ensuring that its analysis is accurate and up-to-date.

Implementation

LangChain supports the implementation of RAG workflows in the following ways:

- integrating various document sources, databases, and APIs to retrieve the latest information

- querying the external data sources with advanced search algorithms

- processing the retrieved information and incorporating it into the LLM’s generative process

Hence, when you ask the AI a question, it doesn’t just rely on what it already “knows” but also brings in fresh, relevant data to inform its response. It transforms simple AI responses into well-informed, trustworthy interactions, enhancing the overall user experience.
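The retrieve-then-generate flow can be illustrated with a toy sketch in plain Python. It is not LangChain code: simple word overlap stands in for the search step (real systems typically use embeddings and a vector store), the sample documents are made up for illustration, and no model is actually called. The sketch only shows how retrieved passages end up in the prompt.

```python
import re

# Made-up sample documents, for illustration only.
DOCUMENTS = [
    "Q3 market report: technology stocks rose four percent.",
    "Company handbook: vacation requests go through the HR portal.",
    "Q3 market report: energy stocks fell two percent.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase a string and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Rank documents by how many words they share with the query."""
    query_words = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query: str, documents: list[str]) -> str:
    """Place the retrieved passages ahead of the question."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    question = "How did technology stocks perform in the Q3 market report?"
    print(build_prompt(question, DOCUMENTS))
```

The prompt that results contains the two market-report passages and leaves out the unrelated handbook entry, which is the grounding effect RAG aims for.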

5. Memory Capabilities

LangChain provides memory components that let an application remember previous conversations. This is crucial for maintaining context and keeping responses relevant and coherent across multiple interactions. The conversation history is retained either by recalling recent exchanges or by summarizing past interactions.

This makes interactions with AI more natural and engaging, which is particularly useful for customer support chatbots.
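As a rough illustration of the "recall recent exchanges" strategy, here is a minimal plain-Python sketch. `WindowMemory` is a hypothetical class, not a LangChain component, and it covers only the sliding-window approach, not summarization.

```python
from collections import deque


class WindowMemory:
    """Keep only the most recent exchanges of a conversation."""

    def __init__(self, max_exchanges: int = 2) -> None:
        # A bounded deque silently drops the oldest exchange when full.
        self._exchanges: deque = deque(maxlen=max_exchanges)

    def save(self, user: str, assistant: str) -> None:
        """Record one user message and the assistant's reply."""
        self._exchanges.append((user, assistant))

    def as_context(self) -> str:
        """Render the remembered exchanges as text to prepend to a prompt."""
        lines = []
        for user, assistant in self._exchanges:
            lines.append(f"User: {user}")
            lines.append(f"Assistant: {assistant}")
        return "\n".join(lines)


if __name__ == "__main__":
    memory = WindowMemory(max_exchanges=2)
    memory.save("Hi", "Hello!")
    memory.save("My order is late", "Sorry, let me check.")
    memory.save("Can you refund it?", "Yes, I can start a refund.")
    print(memory.as_context())
```

Bounding the window keeps the prompt short at the cost of forgetting older turns, which is the trade-off that summarizing past interactions is meant to soften.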

6. Deployment and Monitoring

Together with LangSmith and LangServe, the LangChain framework also helps you deploy and monitor AI applications.

LangSmith is essential for debugging, testing, and monitoring LangChain applications through a unified platform for inspecting chains, tracking performance, and continuously optimizing applications. It allows you to catch issues early and ensure smooth operation.

Meanwhile, LangServe simplifies deployment by turning any LangChain application into a REST API, facilitating integration with other systems and platforms and ensuring accessibility and scalability.

Collectively, these features make LangChain a useful tool to build and develop AI applications using LLMs.

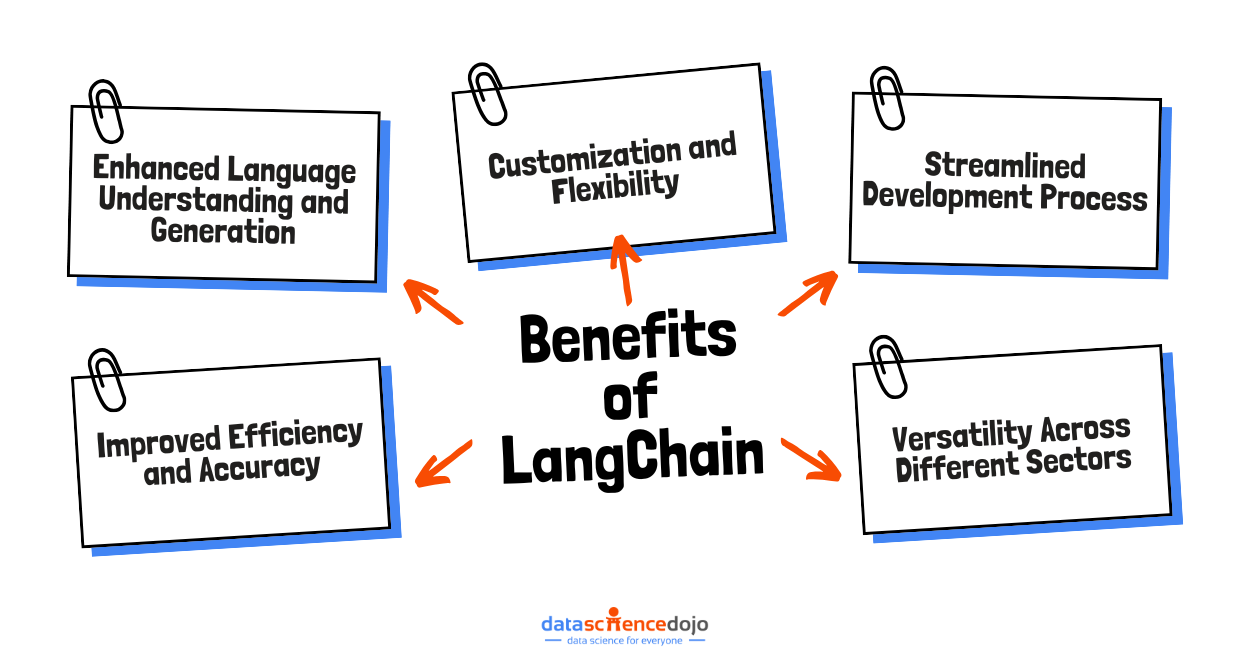

Benefits of Using LangChain

LangChain offers a multitude of benefits that make it an invaluable tool for developers working with large language models (LLMs). Let’s dive into some of these key advantages and understand how they can transform your AI projects.

Enhanced Language Understanding and Generation

LangChain enhances language understanding and generation by integrating various models, allowing developers to leverage the strengths of each. It leads to improved language processing, resulting in applications that can comprehend and generate human-like language in a natural and meaningful manner.

Customization and Flexibility

LangChain’s modular structure allows developers to mix and match building blocks to create tailored solutions for a wide range of applications.

Whether developing a simple FAQ bot or a complex system integrating multiple data sources, LangChain’s components can be easily added, removed, or replaced, ensuring the application can evolve over time without requiring a complete overhaul, thus saving time and resources.

Streamlined Development Process

LangChain streamlines the development process by simplifying the chaining of various components, offering pre-built modules for common tasks like data retrieval, natural language processing, and user interaction.

This reduces the complexity of building AI applications from scratch, allowing developers to focus on higher-level design and logic. This chaining construct not only accelerates development but also makes the codebase more manageable and less prone to errors.
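The chaining construct can be sketched as simple function composition. This is a plain-Python illustration rather than LangChain's own chaining syntax; `chain`, `clean`, `to_prompt`, and `fake_model` are hypothetical names, and `fake_model` only imitates a model call.

```python
from functools import reduce
from typing import Callable

Step = Callable[[str], str]


def chain(*steps: Step) -> Step:
    """Compose steps left to right: the output of one feeds the next."""

    def run(value: str) -> str:
        return reduce(lambda acc, step: step(acc), steps, value)

    return run


def clean(text: str) -> str:
    """Collapse repeated whitespace and trim the ends."""
    return " ".join(text.split())


def to_prompt(question: str) -> str:
    """Wrap the cleaned question in a short instruction."""
    return f"Answer briefly: {question}"


def fake_model(prompt: str) -> str:
    # Hypothetical stand-in for an LLM call.
    return f"[model reply to '{prompt}']"


pipeline = chain(clean, to_prompt, fake_model)

if __name__ == "__main__":
    print(pipeline("  What   is LangChain? "))
```

Each step stays small and testable on its own, and reordering or replacing a step only changes the arguments passed to `chain`.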

Improved Efficiency and Accuracy

The framework enhances efficiency and accuracy in language tasks by combining multiple components, such as using a retrieval module to fetch relevant data and a language model to generate responses based on that data. Moreover, the ability to fine-tune each component further boosts overall performance, making LangChain-powered applications highly efficient and reliable.

Versatility Across Sectors

LangChain is a versatile framework that can be used across different fields like content creation, customer service, and data analytics. It can generate high-quality content and social media posts, power intelligent chatbots, and assist in extracting insights from large datasets to predict trends. Thus, it can meet diverse business needs and drive innovation across industries.

These benefits make LangChain a powerful tool for developing advanced AI applications. Whether you are a developer, a product manager, or a business leader, leveraging LangChain can significantly elevate your AI projects and help you achieve your goals more effectively.

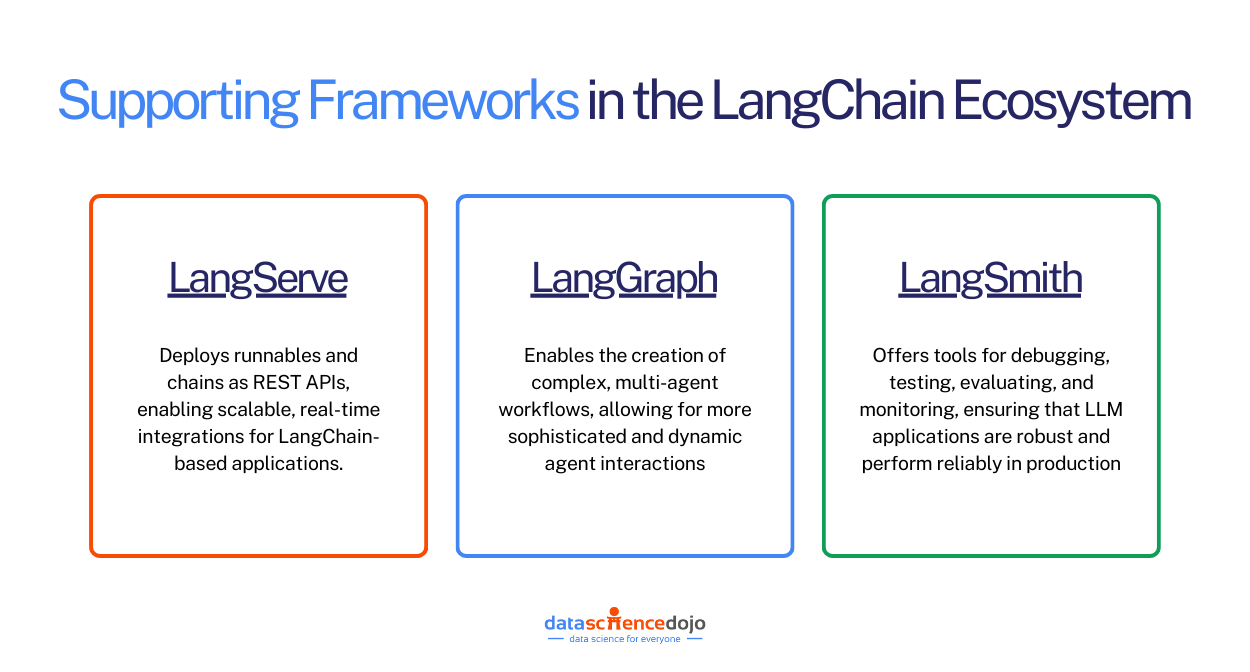

Supporting Frameworks in the LangChain Ecosystem

Several companion tools extend LangChain and help you get the most out of it. Among these are LangGraph, LangSmith, and LangServe, each one offering unique functionalities. Here’s a quick overview of their place in the LangChain ecosystem.

LangServe: Deploys runnables and chains as REST APIs, enabling scalable, real-time integrations for LangChain-based applications.

LangGraph: Extends LangChain by enabling the creation of complex, multi-agent workflows, allowing for more sophisticated and dynamic agent interactions.

LangSmith: Complements LangChain by offering tools for debugging, testing, evaluating, and monitoring, ensuring that LLM applications are robust and perform reliably in production.

Now let’s explore each tool and its characteristics.

LangServe

LangServe is a component of the LangChain ecosystem designed to convert LangChain runnables and chains into REST APIs. This makes applications easy to deploy and access for real-time interactions and integrations.

By handling the deployment aspect, LangServe allows developers to focus on optimizing their applications without worrying about the complexities of making them production-ready.

This integration capability is particularly beneficial for creating robust, real-time AI solutions that can be easily incorporated into existing infrastructures, enhancing the overall utility and reach of LangChain-based applications.

LangGraph

LangGraph is a framework within the LangChain ecosystem that lets workflows revisit previous steps and adapt based on new information, which helps in designing complex multi-agent systems. By allowing developers to use cyclical graphs, it brings a level of sophistication and adaptability that’s hard to achieve with strictly linear chains.

LangGraph offers built-in state persistence and real-time streaming, allowing developers to capture and inspect the state of an agent at any specific point, facilitating debugging and ensuring traceability. It enables human intervention in agent workflows for the approval, modification, or rerouting of actions planned by agents.
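To make the idea of a cyclical workflow with inspectable state more concrete, here is a toy sketch in plain Python. It does not use LangGraph's API: the node functions, the `"END"` marker, and `run_graph` are all hypothetical, and the three-attempt stopping rule is an arbitrary assumption chosen for the example.

```python
from typing import Callable

State = dict
Node = Callable[[State], str]  # a node updates the state and names the next node


def draft(state: State) -> str:
    """Produce a new draft and hand it to the reviewer."""
    state["attempts"] += 1
    state["text"] = f"draft v{state['attempts']}"
    return "review"


def review(state: State) -> str:
    # Loop back to the drafting step until the third attempt is reached.
    return "END" if state["attempts"] >= 3 else "draft"


def run_graph(nodes: dict[str, Node], start: str, state: State) -> list[str]:
    """Walk the graph until a node returns "END"; return the visited nodes."""
    visited = []
    current = start
    while current != "END":
        visited.append(current)
        current = nodes[current](state)
    return visited


if __name__ == "__main__":
    state = {"attempts": 0, "text": ""}
    path = run_graph({"draft": draft, "review": review}, "draft", state)
    print(path)
    print(state)
```

The returned path shows the workflow cycling between the two nodes, and the shared `state` dictionary can be inspected after any step, which mirrors the traceability described above in a very simplified form.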

LangGraph’s advanced features make it ideal for building sophisticated AI workflows where multiple agents need to collaborate dynamically, like in customer service bots, research assistants, and content creation pipelines.

LangSmith

LangSmith is a developer platform that integrates with LangChain to create a unified development environment, simplifying the management and optimization of your LLM applications. It offers everything you need to debug, test, evaluate, and monitor your AI applications, ensuring they run smoothly in production.

LangSmith is particularly beneficial for teams looking to enhance the accuracy, performance, and reliability of their AI applications by providing a structured approach to development and deployment.

For a quick review, below is a table summarizing the unique features of each component and other characteristics.

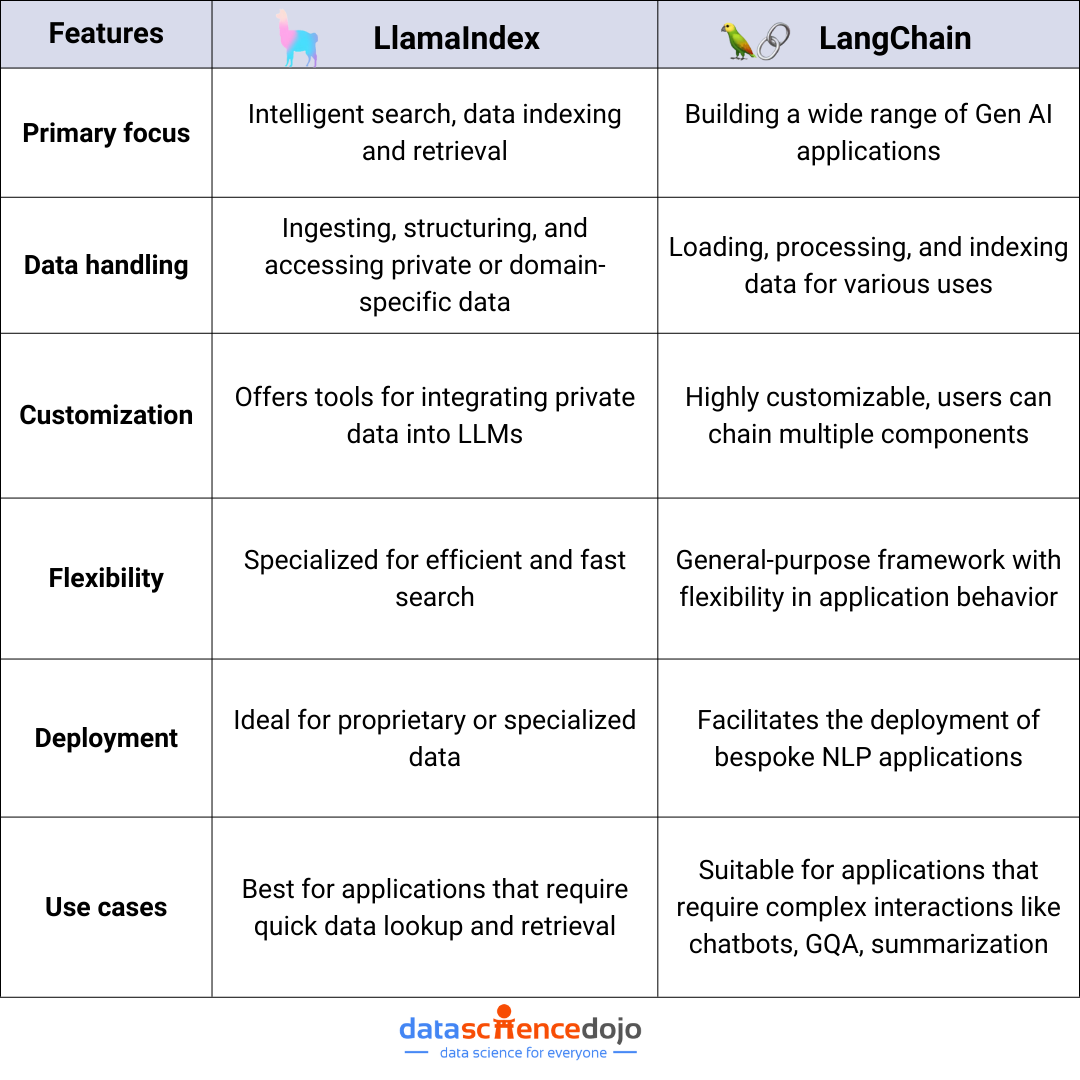

Addressing the LlamaIndex vs LangChain Debate

LlamaIndex and LangChain are two important frameworks for building AI applications. Let’s compare the two tools across key aspects to understand their unique strengths and applications.

Focused Approach vs. Flexibility

LlamaIndex is designed for search and retrieval applications. Its simplified interface allows straightforward interactions with LLMs for efficient document retrieval. LlamaIndex excels in handling large datasets with high accuracy and speed, making it ideal for tasks like semantic search and summarization.

LangChain, on the other hand, offers a comprehensive and modular framework for building diverse LLM-powered applications. Its flexible and extensible structure supports a variety of data sources and services. LangChain includes tools like Model I/O, retrieval systems, chains, and memory systems for granular control over LLM integration. This makes LangChain particularly suitable for constructing more complex, context-aware applications.

Use Cases and Integrations

LlamaIndex is suitable for use cases that require efficient data indexing and retrieval. Its engines connect multiple data sources with LLMs, enhancing data interaction and accessibility. It also supports data agents that manage both “read” and “write” operations, automate data management tasks, and integrate with various external service APIs.

LangChain, by contrast, excels in extensive customization and multimodal integration. It supports a wide range of data connectors for effortless data ingestion and offers tools for building sophisticated applications like context-aware query engines. Its flexibility supports the creation of intricate workflows and optimized performance for specific needs, making it a versatile choice for various LLM applications.

Performance and Optimization

LlamaIndex is optimized for high throughput and fast processing, ensuring quick and accurate search results. Its design focuses on maximizing efficiency in data indexing and retrieval, making it a robust choice for applications with significant data processing demands.

Meanwhile, with features like chains, agents, and RAG, LangChain allows developers to fine-tune components and optimize performance for specific tasks. This ensures that applications built with LangChain can efficiently handle complex queries and provide customized results.

Hence, the choice between these two frameworks is dependent on your specific project needs. While LlamaIndex is the go-to framework for applications that require efficient data indexing and retrieval, LangChain stands out for its flexibility and ability to build complex, context-aware applications with extensive customization options.

Both frameworks offer unique strengths, and understanding these can help developers align their needs with the right tool, leading to the construction of more efficient, powerful, and accurate LLM-powered applications.

Real-World Examples and Case Studies

Let’s look at some examples and use cases of LangChain in today’s digital world.

Customer Service

Advanced chatbots and virtual assistants can manage everything from basic FAQs to complex problem-solving. By integrating LangChain with LLMs like OpenAI’s GPT-4, businesses can develop chatbots that maintain context, offering personalized and accurate responses.

This improves customer experience and reduces the workload on human representatives. With AI handling routine inquiries, human agents can focus on complex issues that require a personal touch, enhancing efficiency and satisfaction in customer service operations.

Healthcare

In healthcare, LangChain-based applications can automate repetitive administrative tasks like scheduling appointments, managing medical records, and processing insurance claims. This automation streamlines operations and helps healthcare providers deliver timely and accurate services to patients.

Several companies have successfully implemented LangChain to enhance their operations and achieve remarkable results. Some notable examples include:

Retool

The company leveraged LangSmith to improve the accuracy and performance of its fine-tuned models. As a result, Retool delivered a better product and introduced new AI features to their users much faster than traditional methods would have allowed. It highlights that LangChain’s suite of tools can speed up the development process while ensuring high-quality outcomes.

Elastic AI Assistant

Elastic used both LangChain and LangSmith to accelerate development and enhance the quality of its AI-powered products. The integration allowed the Elastic AI Assistant to manage complex workflows and deliver a superior product experience to customers, highlighting the impact LangChain can have in real-world applications by streamlining operations and optimizing performance.

Hence, by providing a structured approach to development and deployment, LangChain ensures that companies can build, run, and manage sophisticated AI applications, leading to improved operational efficiency and customer satisfaction.

Frequently Asked Questions (FAQs)

Q1: How does LangChain help in developing AI applications?

LangChain provides a set of tools and components that help integrate LLMs with other data sources and computation tools, making it easier to build sophisticated AI applications like chatbots, content generators, and data retrieval systems.

Q2: Can LangChain be used with different LLMs and tools?

Absolutely! LangChain is designed to be model-agnostic as it can work with various LLMs such as OpenAI’s GPT models, Google’s Flan-T5, and others. It also integrates with a wide range of tools and services, including vector databases, APIs, and external data sources.

Q3: How can I get started with LangChain?

Getting started with LangChain is easy. You can install it via pip or conda and access comprehensive documentation, tutorials, and examples on its official GitHub page. Whether you’re a beginner or an advanced developer, LangChain provides all the resources you need to build your first LLM-powered application.

Q4: Where can I find more resources and community support for LangChain?

You can find more resources, including detailed documentation, how-to guides, and community support, on the LangChain GitHub page and official website. Joining the LangChain Discord community is also a great way to connect with other developers, share ideas, and get help with your projects.

Feel free to explore LangChain and start building your own LLM-powered applications today! The possibilities are endless, and the community is here to support you every step of the way.

To start your learning journey, join our LLM bootcamp today for a deeper dive into LangChain and LLM applications!