Data professionals are continually adopting AI tools and technologies to extend their capabilities and drive innovation. Integrating AI into data science has transformed how information is analyzed, interpreted, and used.

Education in this field should incorporate practical exercises and projects on low-code machine learning (LLML) platforms that build the essential skills data scientists need to tackle real-world challenges.

Through hands-on experience, students gain a deeper understanding of how to use these platforms effectively for tasks such as data preprocessing, model selection, and hyperparameter tuning.

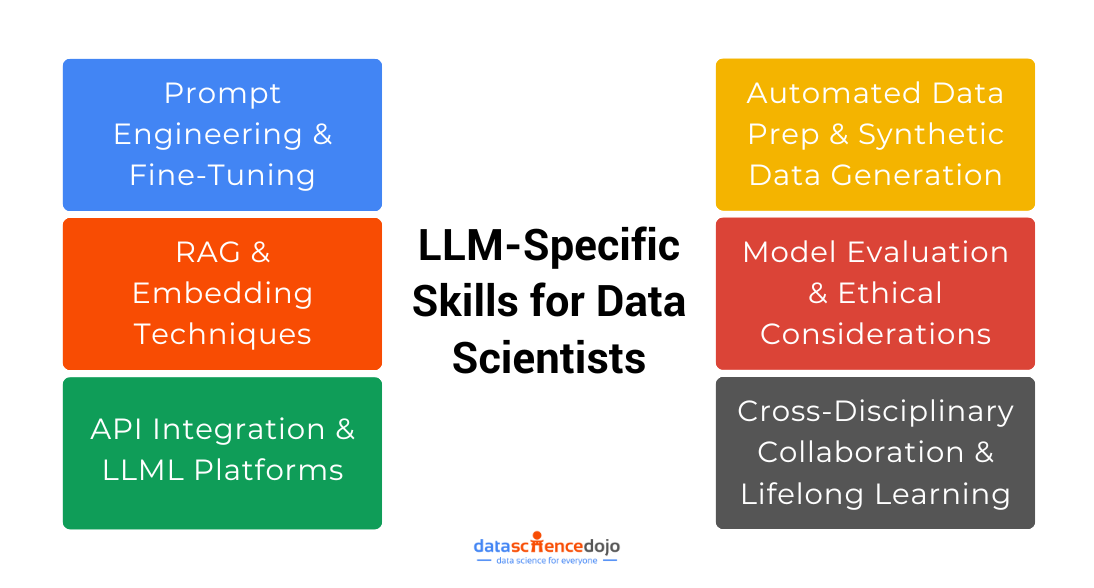

LLM-Specific Skills for Data Scientists

As large language models (LLMs) continue to transform data science workflows, data scientists must now build a specialized skill set that goes beyond traditional analytics. Here are key skills for data science professionals to thrive in an LLM-driven landscape:

- Prompt Engineering and Fine-Tuning:

Mastering prompt engineering is key when working with LLMs. This involves crafting clear, structured prompts that guide models toward relevant outputs. Knowing how to fine-tune LLMs, whether through supervised fine-tuning or parameter-efficient techniques such as low-rank adaptation (LoRA), also ensures that models perform well on domain-specific tasks.

- Retrieval Augmented Generation (RAG) and Embedding Techniques:

A deep understanding of how to integrate retrieval mechanisms into LLM workflows is increasingly important. Data scientists should be skilled in building embedding-based search systems that fetch contextually relevant passages from large collections of documents and pass them to the model, grounding its responses and improving their accuracy.

- API Integration and LLML Platforms:

Because many LLMs are accessed through APIs or low-code machine learning (LLML) platforms, proficiency in integrating these services into data pipelines is essential. This includes familiarity with frameworks such as LangChain and LlamaIndex, along with other orchestration tools that manage the interplay between LLMs, data sources, and the rest of the data science stack.

- Automated Data Preprocessing and Synthetic Data Generation:

LLMs can streamline tasks such as data cleaning, preprocessing, and even the generation of synthetic datasets. Data scientists should know how to harness these capabilities to prepare high-quality input for models, reducing manual labor and accelerating the analytical process.

- Model Evaluation and Ethical Considerations:

Evaluating LLM outputs requires a nuanced understanding of both performance metrics (such as perplexity and cross-entropy) and failure modes such as bias and hallucination. Being adept at interpreting results, validating outputs, and applying best practices for ethical AI use helps ensure that your models are reliable and responsible.

- Interdisciplinary Collaboration and Continuous Learning:

LLMs are rapidly evolving, so staying current means actively engaging with new research, participating in bootcamps, and collaborating with experts from diverse fields. This continuous learning approach enables data scientists to adapt LLM tools to specific industry challenges and foster innovation across projects.
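The retrieval step behind RAG, combined with structured prompting, can be sketched in a few lines of Python. This is a minimal, illustrative sketch under stated assumptions: `embed` is a toy bag-of-words counter standing in for a learned embedding model, and the function names and sample documents are hypothetical. It ranks documents by cosine similarity to the query, then assembles a prompt that asks the model to answer only from the retrieved context.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': a bag-of-words term-count vector.
    Assumption: a real RAG system would call a learned embedding model here."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: list, k: int = 2) -> list:
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, context: list) -> str:
    """Assemble a structured prompt that grounds the model in retrieved context."""
    context_block = "\n".join(f"- {c}" for c in context)
    return (
        "Answer the question using only the context below. "
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{context_block}\n\n"
        f"Question: {query}\nAnswer:"
    )


# Hypothetical knowledge base, for illustration only.
docs = [
    "Refunds are processed within 5 business days.",
    "Our warehouse ships orders Monday through Friday.",
    "Premium members get free shipping on all orders.",
]
question = "How long do refunds take?"
print(build_prompt(question, retrieve(question, docs, k=1)))
```

In a production pipeline you would swap `embed` for an embedding model and the linear scan for a vector index; the ranking and prompt-assembly steps keep the same shape.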

6 Ways Data Scientists Are Leveraging AI Tools, with Examples

Here are some key ways data scientists are leveraging AI tools and technologies:

Advanced Machine Learning Algorithms:

Data professionals are utilizing more advanced machine learning algorithms to derive valuable insights from complex and large datasets. These algorithms enable them to build more accurate predictive models, identify patterns, and make data-driven decisions with greater confidence.

Think of Netflix and how it recommends movies and shows you might like based on what you’ve watched before. Data scientists are using more advanced machine learning algorithms to do similar things in various industries, like predicting customer behavior or optimizing supply chain operations.
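To make the recommendation idea concrete, here is a minimal sketch of item-based collaborative filtering, a textbook technique and not a description of Netflix's actual system. The ratings data and function names are hypothetical. Items are compared by the cosine similarity of their rating vectors, and an unseen item is scored by a similarity-weighted average of the user's own ratings.

```python
import math

# Hypothetical user -> {item: rating} data, purely for illustration.
ratings = {
    "alice": {"Drama A": 5, "Thriller B": 4, "Comedy C": 1},
    "bob": {"Drama A": 4, "Thriller B": 5, "Documentary D": 4},
    "carol": {"Comedy C": 5, "Documentary D": 2},
}


def item_vectors(ratings):
    """Pivot user->item ratings into item->{user: rating} vectors."""
    items = {}
    for user, prefs in ratings.items():
        for item, score in prefs.items():
            items.setdefault(item, {})[user] = score
    return items


def cosine(u, v):
    """Cosine similarity between two sparse rating vectors."""
    dot = sum(u[k] * v[k] for k in u.keys() & v.keys())
    nu = math.sqrt(sum(x * x for x in u.values()))
    nv = math.sqrt(sum(x * x for x in v.values()))
    return dot / (nu * nv) if nu and nv else 0.0


def recommend(user, ratings, top_n=2):
    """Score each unseen item by its similarity to the items the user rated,
    weighted by the user's own ratings, and return the best-scoring items."""
    items = item_vectors(ratings)
    seen = ratings[user]
    scores = {}
    for candidate, cand_vec in items.items():
        if candidate in seen:
            continue
        num = den = 0.0
        for item, rating in seen.items():
            sim = cosine(cand_vec, items[item])
            num += sim * rating
            den += abs(sim)
        if den:
            scores[candidate] = num / den
    return sorted(scores, key=scores.get, reverse=True)[:top_n]


print(recommend("alice", ratings))  # ['Documentary D']
```

Item-based similarity is often preferred over user-based similarity when there are many more users than items, because item-to-item similarities change slowly and can be precomputed.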


Automated Feature Engineering:

AI tools are being used to automate the process of feature engineering, allowing data scientists to extract, select, and transform features in a more efficient and effective manner. This automation accelerates the model development process and improves the overall quality of the models.

Imagine you're shopping on Amazon and it suggests products related to what you've recently viewed or bought. Automated feature engineering can contribute to systems like this by deriving signals, such as how often a customer buys or which products are frequently bought together, that help a recommendation model make more accurate suggestions.
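A minimal sketch of one common form of automated feature engineering follows: applying a fixed set of aggregation functions to every numeric column of a transaction log, grouped by customer. This mirrors the idea of aggregation primitives found in libraries such as Featuretools, but it is a hand-rolled illustration; the column names and data are hypothetical.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical transaction log; column names are illustrative only.
transactions = [
    {"customer": "c1", "amount": 20.0, "items": 2},
    {"customer": "c1", "amount": 35.0, "items": 1},
    {"customer": "c2", "amount": 12.5, "items": 3},
]

# Aggregation "primitives" applied automatically to every numeric column.
PRIMITIVES = {"count": len, "sum": sum, "mean": mean, "max": max}


def auto_features(rows, key):
    """Group rows by `key` and generate one feature per (column, primitive) pair."""
    groups = defaultdict(lambda: defaultdict(list))
    for row in rows:
        for col, value in row.items():
            if col != key and isinstance(value, (int, float)):
                groups[row[key]][col].append(value)
    features = {}
    for entity, cols in groups.items():
        features[entity] = {
            f"{col}_{name}": fn(values)
            for col, values in cols.items()
            for name, fn in PRIMITIVES.items()
        }
    return features


for customer, feats in auto_features(transactions, "customer").items():
    print(customer, feats)
```

Because the features are generated mechanically, adding a new numeric column or a new primitive expands the feature set without any hand-written code; feature selection then decides which of the generated columns are worth keeping.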

Natural Language Processing (NLP):

Data scientists are incorporating NLP techniques and technologies to analyze and derive insights from unstructured data such as text, as well as audio and video once speech has been transcribed. This enables them to extract valuable information from diverse sources and deepen their analysis.

Have you used voice assistants like Siri or Alexa? Data scientists are using NLP to make these assistants smarter and more helpful. They’re also using NLP to analyze customer feedback and social media posts to understand sentiment and improve products and services.
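The customer-feedback use case can be illustrated with a deliberately simple technique: lexicon-based sentiment scoring with basic negation handling. This is a minimal sketch, not how modern assistants or production sentiment systems work (those typically rely on trained models); the lexicon, the negator list, and the function names are hypothetical.

```python
import re

# Tiny hand-made lexicon (hypothetical); real systems use trained models
# or carefully curated lexicons.
LEXICON = {"great": 2, "good": 1, "love": 2, "slow": -1, "bad": -1, "terrible": -2, "broken": -2}
NEGATORS = {"not", "never", "no"}


def sentiment_score(text: str) -> int:
    """Sum word polarities, flipping the sign of a word preceded by a negator."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, tok in enumerate(tokens):
        polarity = LEXICON.get(tok, 0)
        if i > 0 and tokens[i - 1] in NEGATORS:
            polarity = -polarity
        score += polarity
    return score


def label(text: str) -> str:
    """Map the numeric score to a coarse sentiment label."""
    s = sentiment_score(text)
    return "positive" if s > 0 else "negative" if s < 0 else "neutral"


reviews = [
    "I love this phone, the camera is great",
    "Delivery was slow and the box arrived broken",
    "Not bad at all",
]
for r in reviews:
    print(label(r), "|", r)
```

The negation rule is why "Not bad at all" scores as positive; a word-by-word lookup without it would label that review negative.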

Enhanced Data Visualization:

AI-powered data visualization tools are enabling data scientists to create interactive and dynamic visualizations that facilitate better communication of insights and findings. These tools help in presenting complex data in a more understandable and compelling manner.

Many of the interactive charts you see on news websites or in business presentations, the kind that make complex data easier to follow, are built with tools that increasingly include AI-assisted features. Data scientists are using these tools to make data more understandable and actionable.

Real-time Data Analysis:

With AI-powered technologies, data scientists can perform real-time data analysis, allowing businesses to make immediate decisions based on the most current information available. This capability is crucial for industries that require swift and accurate responses to changing conditions.

In industries like finance and healthcare, real-time data analysis is crucial. For example, in finance, AI helps detect fraudulent transactions in real-time, while in healthcare, it aids in monitoring patient vitals and alerting medical staff to potential issues.
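The fraud-detection example can be sketched with a simple streaming anomaly detector. This is a minimal statistical stand-in, not a real fraud model (production systems combine many features with trained classifiers); the class name, threshold, warm-up length, and transaction amounts are hypothetical. It keeps a running mean and variance with Welford's online algorithm, so each new transaction is scored in constant time without storing history.

```python
import math


class StreamingAnomalyDetector:
    """Flags values far from the running mean, using Welford's online
    algorithm so each update is O(1) and no history is stored."""

    def __init__(self, threshold: float = 3.0, warmup: int = 5):
        self.threshold = threshold  # z-score above which a value is flagged
        self.warmup = warmup        # observations to see before flagging anything
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0               # running sum of squared deviations from the mean

    def update(self, x: float) -> bool:
        """Score x against the statistics so far, then fold it into them."""
        is_anomaly = False
        if self.n >= self.warmup:
            std = math.sqrt(self.m2 / (self.n - 1))  # sample standard deviation
            if std > 0 and abs(x - self.mean) / std > self.threshold:
                is_anomaly = True
        # Welford update of the running mean and m2
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)
        return is_anomaly


detector = StreamingAnomalyDetector(threshold=3.0, warmup=5)
amounts = [42.0, 38.5, 40.0, 45.0, 39.0, 41.5, 950.0, 43.0]
flags = [detector.update(a) for a in amounts]
print(flags)  # [False, False, False, False, False, False, True, False]
```

One design caveat: this sketch folds flagged values into the running statistics, so a large outlier inflates the variance for later checks. A real system might exclude flagged points or use robust statistics such as the median absolute deviation.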

Automated Model Deployment:

AI tools are streamlining the process of deploying machine learning models into production environments. Data scientists can now leverage automated model deployment solutions to ensure seamless integration and operation of their predictive models.

Much like a self-driving car handles routine driving without a human at the wheel, these tools can package, validate, and release models to production with little manual intervention.
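One building block that automated deployment pipelines commonly include is a promotion gate: a check that decides whether a newly trained model should replace the one in production. The sketch below is a minimal, hypothetical version; the `ModelVersion` record, the choice of validation AUC as the metric, the threshold values, and the in-memory registry are all assumptions for illustration, not any specific MLOps product's API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ModelVersion:
    """Hypothetical record of a trained model and its offline evaluation."""
    name: str
    version: int
    validation_auc: float  # area under the ROC curve on a held-out set


def should_promote(candidate: ModelVersion,
                   production: Optional[ModelVersion],
                   min_gain: float = 0.005,
                   floor: float = 0.70) -> bool:
    """Promote only if the candidate clears an absolute quality floor and
    beats the current production model by at least `min_gain`."""
    if candidate.validation_auc < floor:
        return False
    if production is None:  # nothing deployed yet
        return True
    return candidate.validation_auc >= production.validation_auc + min_gain


def deploy(candidate: ModelVersion, registry: dict) -> str:
    """Update a hypothetical in-memory registry if the gate passes."""
    current = registry.get(candidate.name)
    if should_promote(candidate, current):
        registry[candidate.name] = candidate
        return f"promoted v{candidate.version}"
    return f"rejected v{candidate.version}"


registry = {}
print(deploy(ModelVersion("churn", 1, 0.81), registry))   # promoted v1
print(deploy(ModelVersion("churn", 2, 0.812), registry))  # rejected v2
print(deploy(ModelVersion("churn", 3, 0.83), registry))   # promoted v3
```

Requiring a minimum gain rather than any improvement keeps the pipeline from churning through versions whose metric differences are within evaluation noise.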

As data scientists continue to embrace and integrate AI tools and technologies into their workflows, they are poised to unlock new possibilities in data analysis, decision-making, and business optimization in 2024 and beyond.


Usage of Generative AI Tools like ChatGPT for Data Scientists

GPT (Generative Pre-trained Transformer) and similar natural language processing (NLP) models can be useful to data scientists in a variety of tasks. Here are some ways data scientists can use GPT for everyday data science work, with real-life examples:

Text Generation and Summarization: Data scientists can use GPT to generate synthetic text or create automatic summaries of lengthy documents. For example, in customer feedback analysis, GPT can be used to summarize large volumes of customer reviews to identify common themes and sentiments.

Language Translation: GPT can assist in translating text from one language to another, which can be beneficial when dealing with multilingual datasets. For instance, in a global marketing analysis, GPT can help translate customer feedback from different regions to understand regional preferences and sentiments.

Question Answering: GPT can be employed to build question-answering systems that can extract relevant information from unstructured text data. In a healthcare setting, GPT can support the development of systems that extract answers from medical literature to aid in diagnosis and treatment decisions.

Sentiment Analysis: Data scientists can utilize GPT to perform sentiment analysis on social media posts, customer feedback, or product reviews to gauge public opinion. For example, in brand reputation management, GPT can help identify and analyze sentiments expressed in online discussions about a company’s products or services.

Data Preprocessing and Labeling: GPT can be used for automated data preprocessing tasks such as cleaning and standardizing textual data. In a research context, GPT can assist in automatically labeling research papers based on their content, making them easier to categorize and analyze.

By incorporating GPT into their workflows, data scientists can enhance their ability to extract valuable insights from unstructured data, automate repetitive tasks, and improve the efficiency and accuracy of their analyses.
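The sentiment-analysis and labeling use cases above depend on getting machine-readable output from the model and checking it before it enters a dataset. The following is a minimal sketch under stated assumptions: no real API is called, `fake_llm` is a hypothetical stub standing in for whichever model client you use, and the prompt wording and the `needs_review` fallback label are illustrative choices.

```python
import json
from typing import Callable, List

ALLOWED_LABELS = {"positive", "negative", "neutral"}


def build_label_prompt(text: str) -> str:
    """Ask for a constrained, machine-readable answer instead of free text."""
    return (
        "Classify the sentiment of the customer review below.\n"
        'Respond with JSON only, e.g. {"label": "positive"}.\n'
        f"Allowed labels: {sorted(ALLOWED_LABELS)}\n\n"
        f"Review: {text}"
    )


def label_with_llm(texts: List[str], llm: Callable[[str], str]) -> List[str]:
    """Label each text with an LLM, falling back to 'needs_review' whenever the
    reply is not valid JSON or uses a label outside the allowed set."""
    labels = []
    for text in texts:
        reply = llm(build_label_prompt(text))
        try:
            label = json.loads(reply).get("label", "")
        except (json.JSONDecodeError, AttributeError):
            label = ""  # not JSON, or JSON that is not an object
        valid = isinstance(label, str) and label in ALLOWED_LABELS
        labels.append(label if valid else "needs_review")
    return labels


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real API call; replace with your provider's client."""
    p = prompt.lower()
    if "refund never arrived" in p:
        return '{"label": "negative"}'
    if "works perfectly" in p:
        return '{"label": "positive"}'
    return "I think this review is somewhat mixed."  # deliberately not JSON


print(label_with_llm(
    ["Works perfectly, thanks!", "My refund never arrived", "It's okay I guess"],
    fake_llm,
))  # ['positive', 'negative', 'needs_review']
```

Routing invalid replies to a review queue, rather than guessing, keeps hallucinated or malformed labels out of downstream analysis.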


AI Tools for Data Scientists

Several AI tools are driving significant advancements in data science. Here are a few of them, with real-life examples of how they are applied:

- TensorFlow:

– TensorFlow is an open-source machine learning framework developed by Google. It is widely used for building and training machine learning models, particularly neural networks.

– Example: Data scientists can utilize TensorFlow to develop and train deep learning models for image recognition tasks. For instance, in the healthcare industry, TensorFlow can be employed to analyze medical images for the early detection of diseases such as cancer.

- PyTorch:

– PyTorch is another popular open-source machine learning library, particularly favored for its flexibility and ease of use in building and training neural networks.

– Example: Data scientists can leverage PyTorch to create and train natural language processing (NLP) models for sentiment analysis of customer reviews. This can help businesses gauge public opinion about their products and services.

- Scikit-learn:

– Scikit-learn is a versatile Python machine learning library that provides simple and efficient tools for data mining and data analysis.

– Example: Data scientists can use Scikit-learn for clustering customer data to identify distinct customer segments based on their purchasing behavior. This can inform targeted marketing strategies and personalized recommendations.

- H2O.ai:

– H2O.ai offers an open-source platform for scalable machine learning and deep learning. It provides tools for building and deploying machine learning models.

– Example: Data scientists can employ H2O.ai to develop predictive models for demand forecasting in retail, helping businesses optimize their inventory and supply chain management.

- GPT-3 (Generative Pre-trained Transformer 3):

– GPT-3 is a powerful natural language processing model developed by OpenAI, capable of generating human-like text and understanding and responding to natural language queries.

– Example: Data scientists can utilize GPT-3 for generating synthetic text or summarizing large volumes of customer feedback to identify common themes and sentiments, aiding in customer sentiment analysis and product improvement.

These AI tools are instrumental in enabling data scientists to tackle a wide range of tasks, from image recognition and natural language processing to predictive modeling and recommendation systems, driving innovation and insights across various industries.


Keeping Data Science Education Relevant in the Era of Large Language Models

With the advent of Low-Code Machine Learning (LLML) platforms, data science education can stay relevant by adapting to the changing landscape of the industry. Here are a few ways data science education can evolve to incorporate LLML:

Emphasize Core Concepts: While LLML platforms provide pre-built solutions and automated processes, it’s essential for data science education to focus on teaching core concepts and fundamentals. This includes statistical analysis, data preprocessing, feature engineering, and model evaluation. By understanding these concepts, data scientists can effectively leverage the LLML platforms to their advantage.

Teach Interpretation and Validation: LLML platforms often provide ready-to-use models and algorithms. However, it’s crucial for data science education to teach students how to interpret and validate the results generated by these platforms. This involves understanding the limitations of the models, assessing the quality of the data, and ensuring the validity of the conclusions drawn from LLML-generated outputs.

Foster Critical Thinking: LLML platforms simplify the process of building and deploying machine learning models. However, data scientists still need to think critically about the problem at hand, select appropriate algorithms, and interpret the results. Data science education should encourage critical thinking skills and teach students how to make informed decisions when using LLML platforms.

Stay Up-to-Date: LLML platforms are constantly evolving, introducing new features and capabilities. Data science education should stay up-to-date with these advancements and incorporate them into the curriculum. This can be done through partnerships with LLML platform providers, collaboration with industry professionals, and continuous monitoring of the latest trends in the field.

By adapting to the rise of LLML platforms, data science education can ensure that students are equipped with the necessary skills to leverage these tools effectively. It’s important to strike a balance between teaching core concepts and providing hands-on experience with LLML platforms, ultimately preparing students to navigate the evolving landscape of data science.