Model Context Protocol (MCP) is rapidly emerging as the foundational layer for intelligent, tool-using AI systems, especially as organizations shift from prompt engineering to context engineering. Developed by Anthropic and now adopted by major players like OpenAI and Microsoft, MCP provides a standardized, secure way for large language models (LLMs) and agentic systems to interface with external APIs, databases, applications, and tools. It is revolutionizing how developers scale, govern, and deploy context-aware AI applications at the enterprise level.

As the world embraces agentic AI, where models don’t just generate text but call tools and act autonomously, MCP helps make those actions interoperable, auditable, and secure. It forms the glue that binds agents to the real world.

What is Model Context Protocol?

Model Context Protocol is an open specification that standardizes the way LLMs and AI agents connect with external systems like REST APIs, code repositories, knowledge bases, cloud applications, or internal databases. It acts as a universal interface layer, allowing models to ground their outputs in real-world context and execute tool calls safely.

Key Objectives of MCP:

- Standardize interactions between models and external tools
- Enable secure, observable, and auditable tool usage
- Reduce integration complexity and duplication
- Promote interoperability across AI vendors and ecosystems

Unlike proprietary plugin systems or vendor-specific APIs, MCP is model-agnostic and language-independent, supporting multiple SDKs including Python, TypeScript, Java, Swift, Rust, Kotlin, and more.

Why MCP Matters: Solving the M×N Integration Problem

Before MCP, connecting each of M models (agents, chatbots, RAG pipelines) to each of N tools (such as GitHub, Notion, or Postgres) required M × N custom integrations, each built and maintained separately, which led to enormous technical debt.

MCP collapses this to M + N:

- Each AI agent integrates one MCP client
- Each tool or data system provides one MCP server
- All components communicate using a shared schema and protocol

This pattern is similar to USB-C in hardware: a unified protocol for any model to plug into any tool, regardless of vendor.
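
To make the arithmetic concrete, the short sketch below counts the integrations that have to be written and maintained under each approach. The figures (5 agents, 10 tools) are illustrative assumptions, not measurements.

```python
def point_to_point_integrations(models: int, tools: int) -> int:
    """Without a shared protocol, every model needs its own connector to every tool."""
    return models * tools


def mcp_integrations(models: int, tools: int) -> int:
    """With MCP, each model side implements the client once and each tool ships one server."""
    return models + tools


if __name__ == "__main__":
    # Hypothetical example: 5 agents and 10 tools.
    agents, tools = 5, 10
    print("point-to-point:", point_to_point_integrations(agents, tools))  # 50
    print("with MCP:", mcp_integrations(agents, tools))  # 15
```

The gap widens as either side grows: adding an eleventh tool costs 5 new connectors point-to-point, but only one new MCP server.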

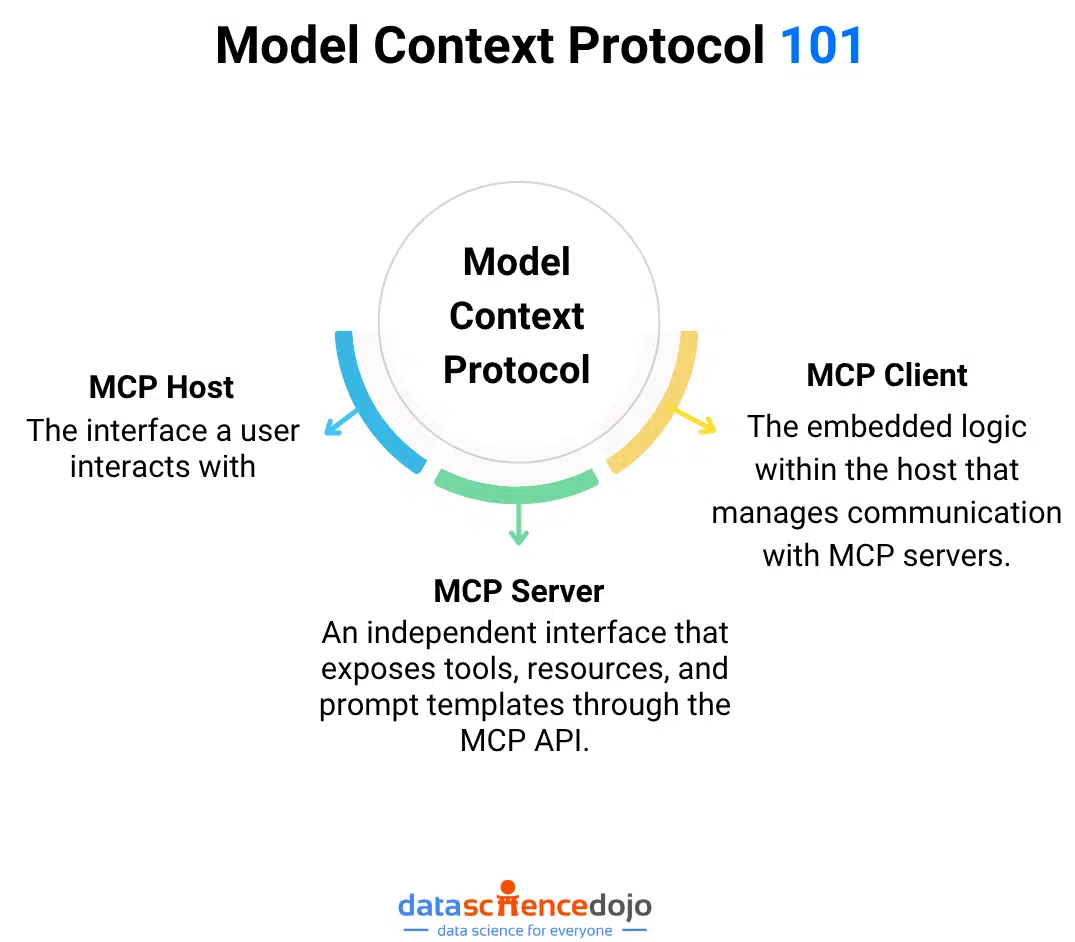

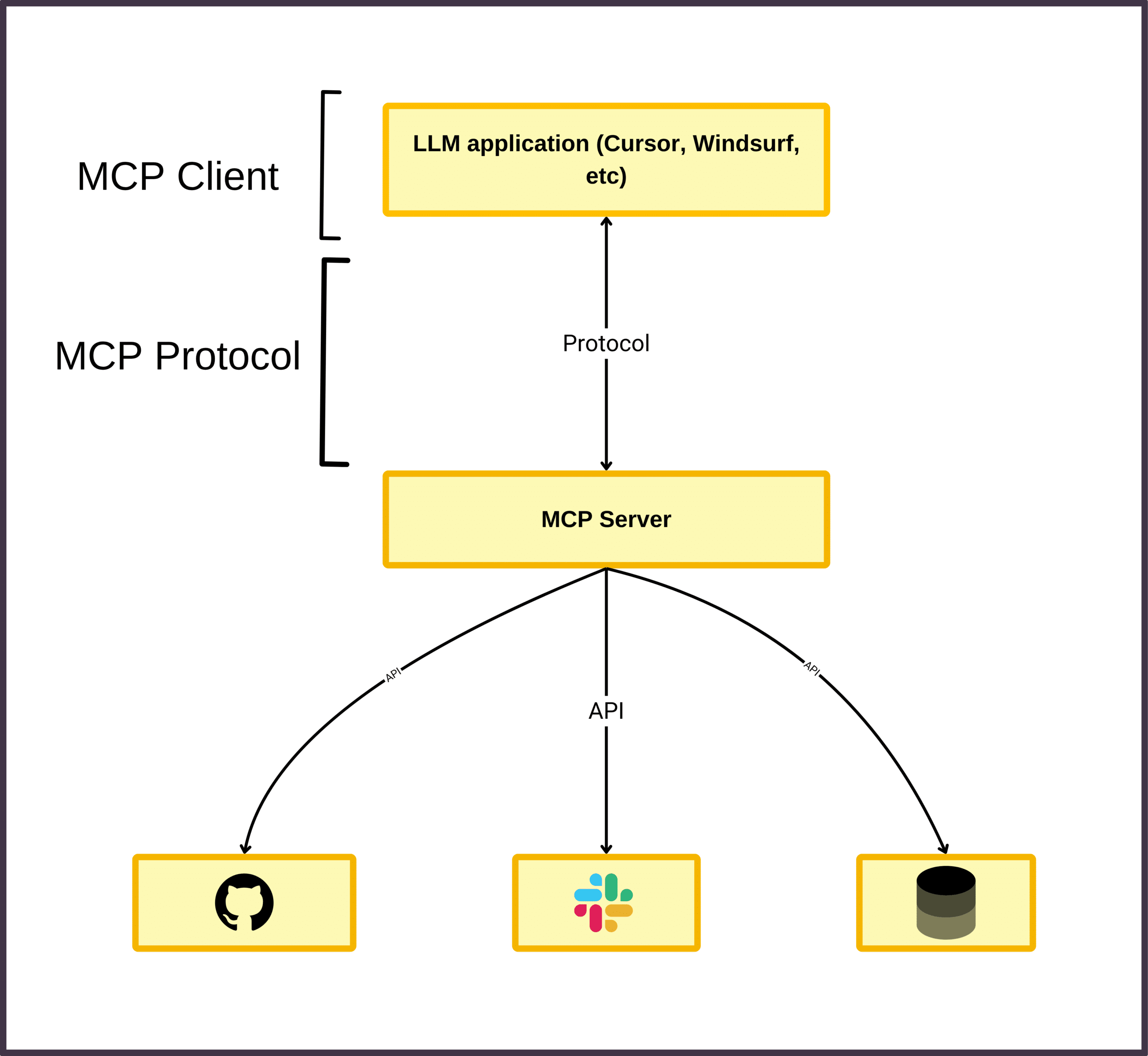

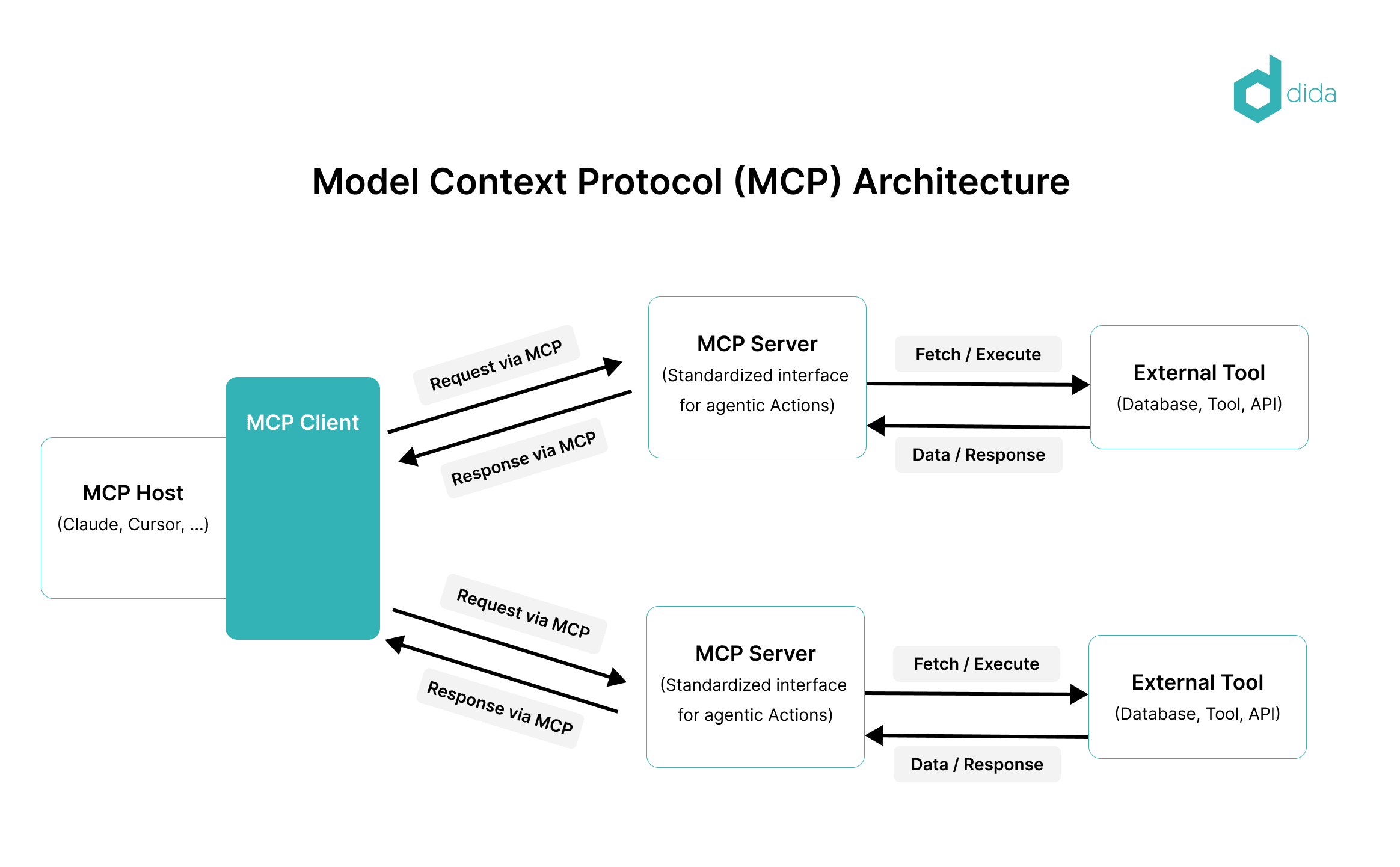

Architecture: Clients, Servers, and Hosts

MCP is built around a structured host–client–server architecture:

1. Host

The application a user interacts with, such as an IDE, a chatbot UI, or a voice assistant. The host runs the model interaction and creates the MCP clients.

2. Client

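The connector inside the host that manages communication with MCP servers. Each client maintains a one-to-one connection with a single server, so a host that uses three servers runs three clients; together they relay the model's requests to the right tools.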

3. Server

An independent program, running locally or as a remote service, that exposes tools, resources, and prompt templates through MCP.

Supported Transports:

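- stdio: For local tool execution, with the host launching the server as a subprocess (high trust, low latency)
- Streamable HTTP (which replaced the earlier HTTP+SSE transport in the March 2025 spec revision): For cloud-native or remote server integration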
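
A minimal sketch of how the stdio transport frames messages: per the MCP specification, each message is a JSON-RPC 2.0 object written on its own line, and messages must not contain embedded newlines. The helper names `write_message` and `read_messages` are hypothetical; the official SDKs handle this framing for you.

```python
import io
import json
from typing import Any, Iterator


def write_message(stream: io.TextIOBase, message: dict[str, Any]) -> None:
    """Serialize one JSON-RPC message as a single newline-terminated line."""
    # json.dumps escapes newline characters inside strings as the two-character
    # sequence \n, so the output never contains a raw newline that breaks framing.
    stream.write(json.dumps(message, separators=(",", ":")) + "\n")


def read_messages(stream: io.TextIOBase) -> Iterator[dict[str, Any]]:
    """Yield one decoded JSON-RPC message per non-empty line."""
    for line in stream:
        line = line.strip()
        if line:
            yield json.loads(line)


if __name__ == "__main__":
    # An in-memory buffer stands in for the server's stdin/stdout pipes.
    buffer = io.StringIO()
    write_message(buffer, {"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
    write_message(buffer, {"jsonrpc": "2.0", "method": "notifications/initialized"})
    buffer.seek(0)
    for msg in read_messages(buffer):
        print(msg["method"])
```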

Example Use Case:

An AI coding assistant (host) uses an MCP client to connect with:

- A GitHub MCP server to manage issues or PRs
- A CI/CD MCP server to trigger test pipelines
- A local file system server to read/write code

All these interactions use the same protocol, so the host can log and trace every call in one consistent format.
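
The sketch below models that use case under simplifying assumptions: `ServerConnection` stands in for one client session bound to one server, and `Host` routes each tool call to whichever server advertises that tool, logging the hop. The class names and tool names (`create_issue`, `trigger_pipeline`, and so on) are hypothetical, and no real JSON-RPC traffic is sent.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class ServerConnection:
    """Stand-in for one MCP client session, bound to exactly one server."""
    server_name: str
    tool_names: set[str]

    def call_tool(self, name: str, arguments: dict[str, Any]) -> dict[str, Any]:
        # A real client would send a JSON-RPC "tools/call" request here.
        return {"server": self.server_name, "tool": name, "arguments": arguments}


@dataclass
class Host:
    """The host owns one client connection per server and routes tool calls."""
    connections: list[ServerConnection] = field(default_factory=list)
    audit_log: list[str] = field(default_factory=list)

    def route(self, tool_name: str, arguments: dict[str, Any]) -> dict[str, Any]:
        for conn in self.connections:
            if tool_name in conn.tool_names:
                # Record which server handled which tool before dispatching.
                self.audit_log.append(f"{conn.server_name}:{tool_name}")
                return conn.call_tool(tool_name, arguments)
        raise LookupError(f"no connected server exposes tool {tool_name!r}")


if __name__ == "__main__":
    host = Host([
        ServerConnection("github", {"create_issue", "list_pull_requests"}),
        ServerConnection("ci", {"trigger_pipeline"}),
        ServerConnection("filesystem", {"read_file", "write_file"}),
    ])
    host.route("trigger_pipeline", {"branch": "main"})
    print(host.audit_log)  # ['ci:trigger_pipeline']
```

Keeping one connection per server mirrors MCP's one-to-one client/server pairing and keeps each server isolated from the others.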

Key Features and Technical Innovations

A. Unified Tool and Resource Interfaces

- Tools: Executable functions the model can invoke (e.g., API calls, deployments)
- Resources: Read-only data the application can load into context (e.g., support tickets, product specs)
- Prompts: Reusable, typically user-selected templates that guide how to use tools or retrieve data effectively

This separation makes AI behavior predictable, modular, and controllable.
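
Each primitive has its own request method in the MCP specification: `tools/call`, `resources/read`, and `prompts/get`. The sketch below only builds the JSON-RPC request objects; the tool name `create_issue`, the URI `tickets://support/4821`, and the prompt `summarize_ticket` are hypothetical examples.

```python
import itertools
import json
from typing import Any

_ids = itertools.count(1)  # JSON-RPC request ids must not repeat within a session


def request(method: str, params: dict[str, Any]) -> dict[str, Any]:
    """Build a JSON-RPC 2.0 request object."""
    return {"jsonrpc": "2.0", "id": next(_ids), "method": method, "params": params}


# Tool: a model-invoked action with arguments (may have side effects).
call_tool = request("tools/call", {"name": "create_issue", "arguments": {"title": "Fix login bug"}})

# Resource: read-only data addressed by URI.
read_resource = request("resources/read", {"uri": "tickets://support/4821"})

# Prompt: a reusable template; prompt arguments are passed as strings.
get_prompt = request("prompts/get", {"name": "summarize_ticket", "arguments": {"ticket_id": "4821"}})

if __name__ == "__main__":
    for msg in (call_tool, read_resource, get_prompt):
        print(json.dumps(msg))
```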

B. Structured Messaging Format

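MCP messages follow JSON-RPC 2.0 and come in three types:

- Requests, which carry an id and expect a response
- Responses, which return a result or an error for a specific request
- Notifications, which are one-way and expect no reply

Content inside those messages is also typed: prompt messages carry an explicit user or assistant role, and tool results and resources come back in their own structured fields rather than mixed into free text. This enables:

- Explicit context control
- Predictable, structured tool invocation
- Less room for prompt injection and role leakage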
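
Underneath any role labels, every MCP message on the wire is a JSON-RPC 2.0 request, response, or notification. A minimal sketch of how a peer can tell them apart, following the JSON-RPC 2.0 rules; the function name `classify` is hypothetical.

```python
from typing import Any


def classify(message: dict[str, Any]) -> str:
    """Classify a JSON-RPC 2.0 message as request, notification, or response."""
    if message.get("jsonrpc") != "2.0":
        raise ValueError("not a JSON-RPC 2.0 message")
    if "method" in message:
        # Requests carry an id and expect a reply; notifications do not.
        return "request" if "id" in message else "notification"
    if "id" in message and ("result" in message) != ("error" in message):
        # A response carries exactly one of "result" or "error".
        return "response"
    raise ValueError("malformed message")
```

For example, `{"jsonrpc": "2.0", "method": "notifications/initialized"}` is a notification because it names a method but has no id.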

C. Context Management

The MCP specification leaves context-window management to the host application, which can keep long sessions efficient by:

- Trimming token history
- Prioritizing relevant threads
- Integrating summarization or vector embeddings

This allows agents to operate over long sessions, even with token-limited models.
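
A minimal sketch of the first technique, history trimming, as a host might implement it. It assumes a word count as a crude stand-in for a real tokenizer and always keeps system messages; `trim_history` and `estimate_tokens` are hypothetical names, not part of any MCP SDK.

```python
from typing import Any

Message = dict[str, Any]


def estimate_tokens(text: str) -> int:
    # Crude assumption: roughly one token per whitespace-separated word.
    # A real host would use the model's own tokenizer.
    return len(text.split())


def trim_history(messages: list[Message], budget: int) -> list[Message]:
    """Keep system messages, then the newest turns that fit in the token budget."""
    system = [m for m in messages if m["role"] == "system"]
    used = sum(estimate_tokens(m["content"]) for m in system)
    kept: list[Message] = []
    # Walk backwards so the most recent turns are preserved first.
    for m in reversed([m for m in messages if m["role"] != "system"]):
        cost = estimate_tokens(m["content"])
        if used + cost > budget:
            break
        kept.append(m)
        used += cost
    # Restore chronological order for the surviving turns.
    return system + list(reversed(kept))
```

Dropped turns could instead be summarized or embedded for later retrieval, which is where the other two techniques come in.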

D. Security and Governance

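MCP's authorization specification builds on OAuth 2.1 for HTTP-based transports, and production deployments typically combine it with:

- mTLS for transport-level authentication
- Role-based access control (RBAC)
- Tool-level permission scopes
- Signed, versioned components for supply chain security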
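
How individual tools map to permissions is left to each server implementer. The sketch below shows one deny-by-default approach, assuming the caller's granted scopes are already known (for example, from a validated OAuth token). The scope strings and tool names are hypothetical, not defined by MCP.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolPolicy:
    tool: str
    required_scope: str


# Hypothetical policy table: scope names are illustrative, not part of MCP.
POLICIES = {
    p.tool: p
    for p in (
        ToolPolicy("list_pull_requests", "repo:read"),
        ToolPolicy("create_issue", "issues:write"),
        ToolPolicy("deploy_app", "deploy:prod"),
    )
}


def is_allowed(tool: str, granted_scopes: set[str]) -> bool:
    """Deny by default: unknown tools and missing scopes are both rejected."""
    policy = POLICIES.get(tool)
    return policy is not None and policy.required_scope in granted_scopes
```

Rejecting unknown tools outright means a newly added tool stays unusable until someone assigns it a scope, which matches the least-privilege practice recommended later in this article.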

E. Open Extensibility

- Public MCP servers already exist for GitHub, Slack, Postgres, Notion, and more
- SDKs are available for most major programming languages
- Custom toolchains and internal infrastructure can be exposed as MCP servers

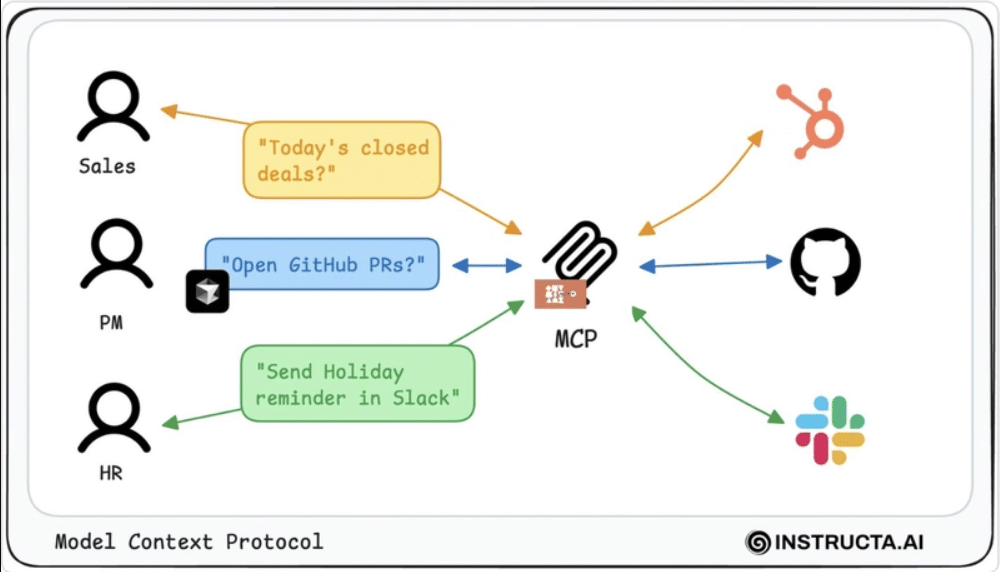

Model Context Protocol in Practice: Enterprise Use Cases

1. AI Assistants

LLMs access user history, CRM data, and company knowledge via MCP-integrated resources—enabling dynamic, contextual assistance.

2. RAG Pipelines

Instead of static embedding retrieval, RAG agents use MCP to query live APIs or internal data systems before generating responses.

3. Multi-Agent Workflows

Agents delegate tasks to other agents, tools, or humans, all via standardized MCP messages—enabling team-like behavior.

4. Developer Productivity

LLMs in IDEs use MCP to:

- Review pull requests
- Run tests
- Retrieve changelogs
- Deploy applications

5. AI Model Evaluation

Testing frameworks use MCP to pull logs, test cases, and user interactions—enabling automated accuracy and safety checks.

Security, Governance, and Best Practices

Key Protections:

- OAuth 2.1 for remote authorization
- RBAC and scopes for granular control
- Logging at every tool/resource boundary
- Reduced exposure to prompt/tool injection through strict message typing

Emerging Risks (From Security Audits):

- Model-generated tool calls without human approval
- Overly broad access scopes (e.g., root-level API tokens)
- Unsandboxed execution leading to code injection or file overwrite
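
One common mitigation for the file-overwrite risk on a local filesystem server is to confine every requested path to an allowed root. A minimal sketch, where `resolve_in_sandbox` and `SandboxViolation` are hypothetical names; it does not protect against a symlink being swapped between the check and the actual write (a time-of-check/time-of-use race), so OS-level sandboxing is still advisable.

```python
from pathlib import Path


class SandboxViolation(Exception):
    """Raised when a requested path resolves outside the sandbox root."""


def resolve_in_sandbox(root: str, requested: str) -> Path:
    """Resolve a requested path and refuse anything outside the sandbox root."""
    base = Path(root).resolve()
    # resolve() collapses ".." segments and follows existing symlinks before
    # the check; an absolute `requested` path replaces `base` entirely.
    target = (base / requested).resolve()
    if not target.is_relative_to(base):  # Path.is_relative_to needs Python 3.9+
        raise SandboxViolation(f"{requested!r} escapes {base}")
    return target
```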

Recommended Best Practices:

- Use MCPSafetyScanner or static analyzers
- Limit tool capabilities to least privilege
- Audit all calls via logging and change monitoring
- Use vector databases for scalable context summarization
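
The approval and auditing practices can be combined in a single wrapper around tool execution. The sketch below assumes a hypothetical list of destructive tools that always need human sign-off and writes one JSON line per attempt, a format most SIEM pipelines can ingest. `guarded_call`, `DESTRUCTIVE_TOOLS`, and the `execute`/`approve` callbacks are illustrative names, not an MCP API.

```python
import json
import time
from typing import Any, Callable

# Hypothetical set of tools that must never run without human sign-off.
DESTRUCTIVE_TOOLS = {"deploy_app", "write_file", "delete_branch"}


def guarded_call(
    tool: str,
    arguments: dict[str, Any],
    execute: Callable[[str, dict[str, Any]], Any],
    approve: Callable[[str, dict[str, Any]], bool],
    log: list[str],
) -> Any:
    """Require approval for destructive tools and log every attempt as JSON."""
    approved = tool not in DESTRUCTIVE_TOOLS or approve(tool, arguments)
    # Log before executing so denied and failed attempts are also recorded.
    log.append(json.dumps({
        "ts": time.time(),
        "tool": tool,
        "arguments": arguments,
        "approved": approved,
    }))
    if not approved:
        raise PermissionError(f"human approval denied for {tool!r}")
    return execute(tool, arguments)
```

In a real host, `approve` would prompt the user in the UI, and `log` would be an append-only sink rather than an in-memory list.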

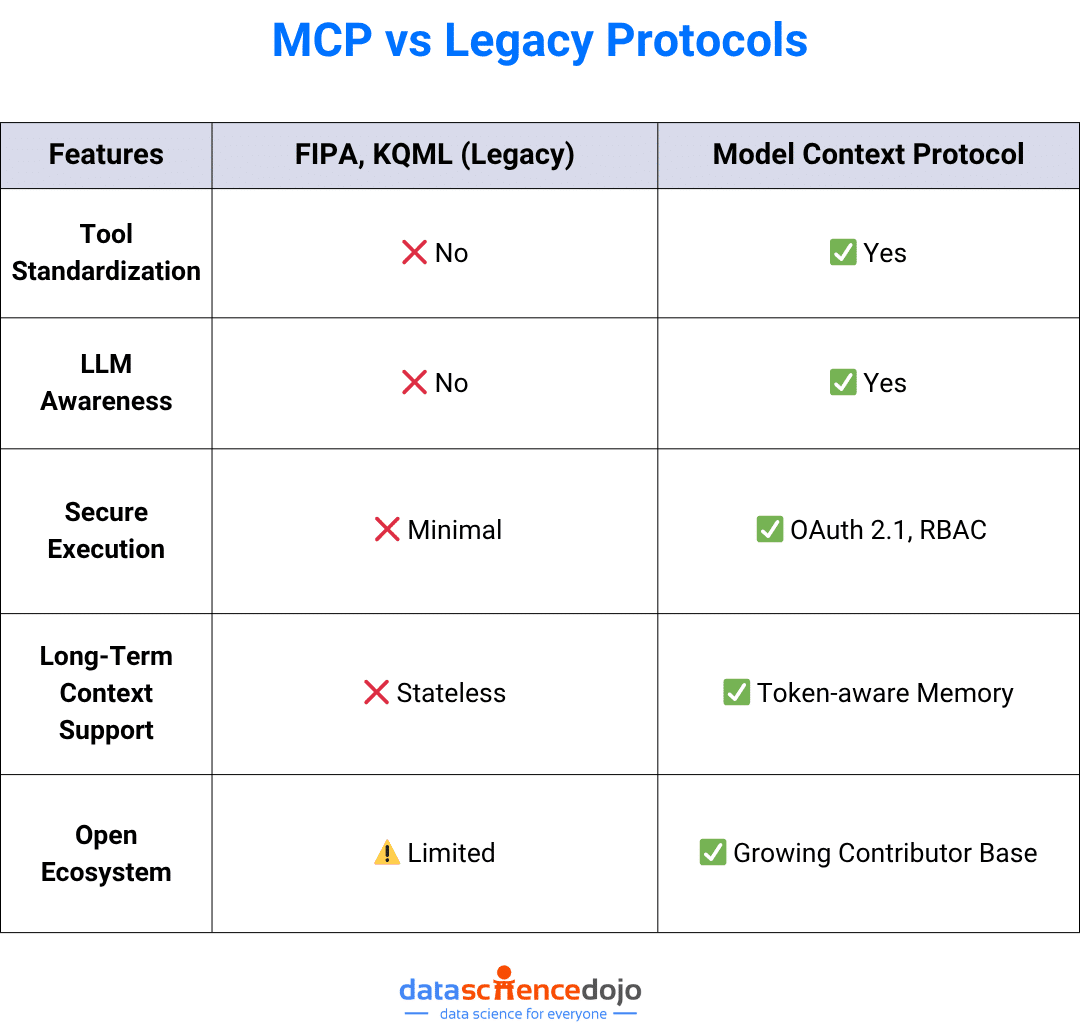

MCP vs. Legacy Protocols

Enterprise Implementation Roadmap

Phase 1: Assessment

- Inventory internal tools, APIs, and data sources
- Identify existing agent use cases or gaps

Phase 2: Pilot

- Choose a high-impact use case (e.g., customer support, DevOps)
- Set up an MCP client and one or two MCP servers

Phase 3: Secure and Monitor

- Apply auth, sandboxing, and audit logging
- Integrate with security tools (SIEM, IAM)

Phase 4: Scale and Institutionalize

- Develop internal patterns and SDK wrappers
- Train teams to build and maintain MCP servers
- Codify MCP use in your architecture governance

Challenges, Limitations, and the Future of Model Context Protocol

Known Challenges:

- Managing long context histories and token limits
- Multi-agent state synchronization
- Server lifecycle/versioning and compatibility
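
Some of the versioning burden is handled by the protocol itself during the `initialize` handshake: the client proposes the newest protocol version it supports, the server echoes it if supported or otherwise offers another version it supports, and a client that cannot use the offered version should disconnect. The sketch below models only that rule; the function names and version lists are illustrative.

```python
from typing import Optional

# Illustrative revision lists; MCP protocol versions are date strings.
CLIENT_VERSIONS = ["2025-06-18", "2025-03-26", "2024-11-05"]
SERVER_VERSIONS = ["2025-03-26", "2024-11-05"]


def server_choose(requested: str, supported: list[str]) -> str:
    """Server: echo the requested version if supported, else offer its latest one."""
    # ISO dates (YYYY-MM-DD) sort correctly as plain strings.
    return requested if requested in supported else max(supported)


def negotiate(client_supported: list[str], server_supported: list[str]) -> Optional[str]:
    """Client: propose the newest version; accept the reply only if it is supported."""
    requested = max(client_supported)
    offered = server_choose(requested, server_supported)
    # None signals that the client should disconnect.
    return offered if offered in client_supported else None


if __name__ == "__main__":
    print(negotiate(CLIENT_VERSIONS, SERVER_VERSIONS))  # 2025-03-26
```

Negotiation only settles the wire protocol; compatibility of individual tool schemas across server releases still has to be managed by the teams that own them.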

Future Innovations:

- Embedding-based context retrieval
- Real-time agent collaboration protocols
- Cloud-native standards for multi-vendor compatibility
- Secure agent sandboxing for tool execution

As agentic systems mature, MCP will likely evolve into the default interface layer for enterprise-grade LLM deployment, much like REST or GraphQL for web apps.

FAQ

Q: What is the main benefit of MCP for enterprises?

A: MCP standardizes how AI models connect to tools and data, reducing integration complexity, improving security, and enabling scalable, context-aware AI solutions.

Q: How does MCP improve security?

A: MCP defines standard authorization (OAuth 2.1 for remote servers) and clear boundaries between tools, resources, and prompts, which helps prevent unauthorized access and reduces exposure to prompt/tool injection.

Q: Can MCP be used with any LLM or agentic AI system?

A: Yes, MCP is model-agnostic and supported by major vendors (Anthropic, OpenAI), with SDKs for multiple languages.

Q: What are the best practices for deploying MCP?

A: Use vector databases, optimize context windows, sandbox local servers, and regularly audit/update components for security.

Conclusion:

Model Context Protocol isn’t just another spec; it is fast becoming the API standard for agentic intelligence. It abstracts away complexity, enforces governance, and empowers AI systems to operate effectively across real-world tools and systems.

Want to build secure, interoperable, and production-grade AI agents?

- Explore Data Science Dojo’s LLM Bootcamp
- Learn more about Agentic AI Protocols
- Try building your own MCP server with LangGraph or the MCP SDK