Text analytics for machine learning: Part 1

Have you ever wondered how Siri can understand English? How can you type a question into Google and get what you want?

Over the next week, we will release a five-part blog series on text analytics that will give you a glimpse into the complexities and importance of text mining and natural language processing.

This first section discusses how text is converted to numerical data.

In the past, we have talked about how to build machine learning models on structured data sets. However, life does not always give us data that is clean and structured. Much of the information generated by humans has little or no formal structure: emails, tweets, blogs, reviews, status updates, surveys, legal documents, and so much more. There is a wealth of knowledge stored in these kinds of documents which data scientists and analysts want access to. “Text analytics” is the process by which you extract useful information from text.

Some examples include:

- Predicting the stock market with Tweets

- Detecting fraudulent activities from email messages

- Forecasting box office success from just the screenplay

- Evaluating cultural fit and personality type from resumes

All these written texts are unstructured; machine learning algorithms and techniques work best (or often, work only) on structured data. So, for our machine learning models to operate on these documents, we must convert the unstructured text into a structured matrix. Usually this is done by transforming the collection of documents into a sparse matrix (a big but mostly empty table). Each document gets its own row and each word gets its own column, which tracks either whether the word appears in the text (binary) or how often it appears (term frequency).

For example, consider the two statements below. They have been transformed into a simple term frequency matrix. Each word gets a distinct column, and the frequency of occurrence is tracked. If this were a binary matrix, there would only be ones and zeros instead of a count of the terms.

Make words usable for machine learning

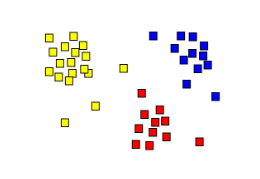
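To make the idea concrete, here is a minimal Python sketch of a term frequency matrix and its binary counterpart. The two sentences are hypothetical stand-ins (not the statements from the figure), and the whitespace tokenizer and helper names are simplifying assumptions of ours.

```python
from collections import Counter

# Hypothetical example sentences, chosen only for illustration.
documents = [
    "the team won the game",
    "the team lost",
]


def tokenize(text):
    """Lowercase and split on whitespace (a deliberately naive tokenizer)."""
    return text.lower().split()


# One column per distinct word across all documents, in alphabetical order.
vocabulary = sorted({word for doc in documents for word in tokenize(doc)})


def term_frequency_matrix(docs, vocab):
    """Build one row per document holding the count of each vocabulary word."""
    rows = []
    for doc in docs:
        counts = Counter(tokenize(doc))
        rows.append([counts[word] for word in vocab])
    return rows


def binary_matrix(tf_rows):
    """Collapse counts to 1 (word present) or 0 (word absent)."""
    return [[1 if count > 0 else 0 for count in row] for row in tf_rows]


tf = term_frequency_matrix(documents, vocabulary)
binary = binary_matrix(tf)

print(vocabulary)
for row in tf:
    print(row)
```

Notice that even with two short sentences, some cells are already zero. With a realistic vocabulary, almost every cell is zero, which is why the matrix is called sparse.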

Why do we want numbers instead of text? Most machine learning algorithms and data analysis techniques assume numerical data (or data that can be ranked or categorized). Similarity between documents is calculated by measuring the distance between their word frequencies. For example, if the word “team” appears 4 times in one document and 5 times in a second, those two documents will be scored as more similar to each other than either is to a third document where “team” appears only once.
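The “team” example can be worked through in a few lines of Python. This is a sketch that assumes Euclidean distance as the measure; the post does not commit to a specific metric, and the variable names are ours.

```python
import math


def euclidean_distance(a, b):
    """Straight-line distance between two equal-length count vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


# Each document is reduced to a single column: the count of the word "team".
doc_a = [4]
doc_b = [5]
doc_c = [1]

d_ab = euclidean_distance(doc_a, doc_b)
d_ac = euclidean_distance(doc_a, doc_c)
d_bc = euclidean_distance(doc_b, doc_c)

# A smaller distance means the documents are treated as more similar.
print(d_ab, d_ac, d_bc)
```

The first two documents are 1 apart, while the third is 3 and 4 away from them, so the first two are the most similar pair.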

Text mining: Build a matrix

While our example was simple (6 words), term frequency matrices on larger datasets can be tricky.

Imagine turning every word in the Oxford English Dictionary into a column of a matrix: that’s 171,476 columns. Now imagine adding everyone’s names, and every corporation, product, or street name that ever existed. Now feed it slang. Feed it every rap song. Feed it fantasy novels like Lord of the Rings or Harry Potter so that our model will know what to do when it encounters “The Shire” or “Hogwarts.” Good, now that’s just English. Do the same thing again for Russian, Mandarin, and every other language.

Once this is done, we are approaching a matrix with several billion columns, and two problems arise. First, performing calculations over this matrix becomes computationally infeasible and memory intensive. Second, the curse of dimensionality kicks in: distance measurements become so large in scale that they all seem the same. Most of the research and time that goes into natural language processing is less about the syntax of language (which is important) and more about how to reduce the size of this matrix.
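The second problem can be seen in a small experiment. The sketch below is an illustration under our own assumptions: it uses uniformly random points rather than real text data, Euclidean distance, and a hypothetical helper called `relative_contrast`. It measures how much farther the farthest point is than the nearest one, relative to the nearest distance.

```python
import math
import random


def euclidean_distance(a, b):
    """Straight-line distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def relative_contrast(dimensions, n_points=50, seed=0):
    """Return (farthest - nearest) / nearest distance from a random query point.

    Values near zero mean every point looks about equally far away.
    """
    rng = random.Random(seed)  # fixed seed so repeated runs give the same result
    query = [rng.random() for _ in range(dimensions)]
    distances = [
        euclidean_distance(query, [rng.random() for _ in range(dimensions)])
        for _ in range(n_points)
    ]
    return (max(distances) - min(distances)) / min(distances)


for dims in (2, 10, 100, 1000):
    print(dims, round(relative_contrast(dims), 3))
```

As the number of columns grows, the contrast shrinks toward zero, which is another way of saying that all the distances start to look the same.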

Now we know what we must do and the challenges we must face to reach our desired result. The next three blogs in the series will directly address these problems. We will introduce you to three concepts: conforming, stemming, and stop word removal.

Written by Phuc Duong