This blog post digs into data mining techniques and practical tips for beginners.

Data mining has become increasingly crucial in today’s digital age, as the amount of data generated continues to skyrocket. In fact, it’s estimated that by 2025, the world will generate 463 exabytes of data every day, roughly the capacity of 98.5 billion single-layer DVDs (4.7 GB each) per day! With such an overwhelming amount of data, data mining has become an essential process for businesses and organizations to extract valuable insights and make data-driven decisions.

According to one industry survey, 97% of organizations are now investing in data mining and analytics, recognizing the importance of this field in driving business success. However, for beginners, navigating the world of data mining can be challenging, with so many tools and techniques to choose from.

To help beginners get started, we’ve compiled a list of ten data mining tips. From understanding your data to staying up-to-date with the latest trends, these tips can help beginners make sense of the world of data mining and harness the power of their data to drive business success.

Importance of Data Mining

Before moving forward with data mining tips, let’s first discuss its importance.

Data mining is the process of discovering useful patterns and insights in large datasets. Here are some of the key reasons it matters:

- It allows organizations to extract valuable insights and knowledge from large datasets, which can drive business success.

- By analyzing data, organizations can identify trends, patterns, and relationships that might otherwise go unnoticed.

- It can help organizations make data-driven decisions, allowing them to respond quickly to changes in their industry and gain a competitive edge.

- Data mining can help businesses identify customer behavior and preferences, allowing them to tailor their marketing strategies to their target audience and improve customer satisfaction.

- By understanding their data, businesses can optimize their operations, streamline processes, and reduce costs.

- It can be used to identify fraud and detect security breaches, helping to protect organizations and their customers.

- It can be used in healthcare to improve patient outcomes and identify potential health risks.

- Data mining can help governments identify areas of concern, allocate resources, and make informed policy decisions.

- It can be used in scientific research to identify patterns and relationships that would otherwise be difficult or impossible to detect.

- With the growth of the Internet of Things (IoT) and the massive amounts of data generated by connected devices, data mining has become even more critical in today’s world.

Overall, data mining is a vital tool for organizations across all industries. By harnessing the power of their data, businesses can gain insights, optimize operations, and make data-driven decisions that can lead to long-term success.

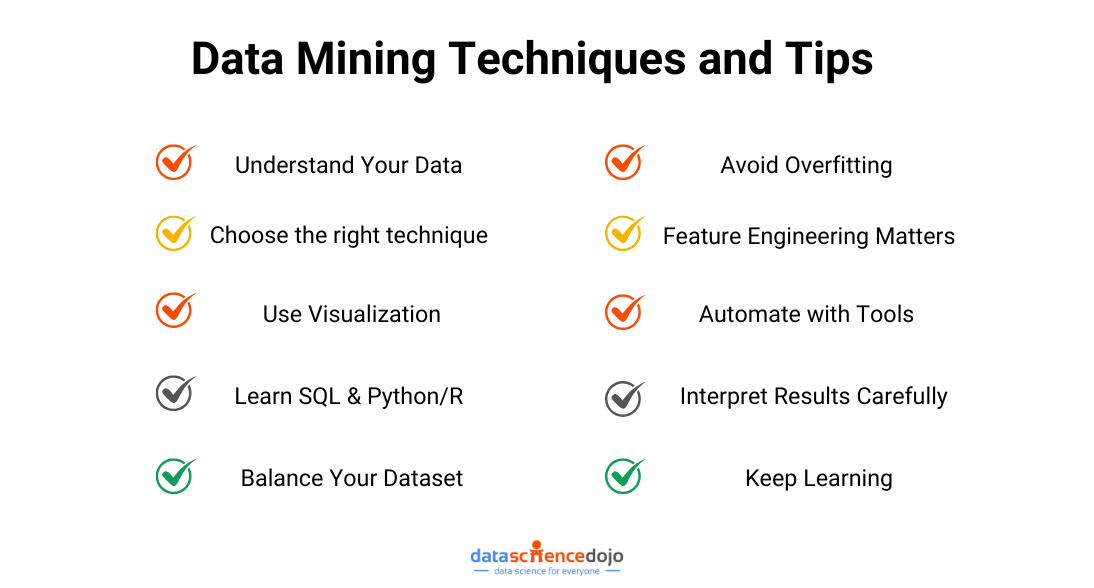

Data Mining Techniques and Tips For Beginners

Now, without further ado, let’s move on to some tips and techniques that can help you with data mining.

1. Understand Your Data

Before diving into data mining, you need to truly understand your data. Start by exploring it—check what types of data you’re dealing with, look for missing values, detect outliers, and analyze distributions using visual tools like histograms and box plots. A quick glance at your dataset can save you from major headaches later!
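A minimal first-look sketch with pandas, assuming your data fits in a DataFrame. The columns and values below are made up for illustration, and the 1.5 × IQR outlier rule is one common convention rather than the only option.

```python
import numpy as np
import pandas as pd

# Hypothetical dataset standing in for your own
df = pd.DataFrame({
    "age": [25, 32, 47, np.nan, 51, 29, 120],
    "income": [40_000, 52_000, 61_000, 58_000, np.nan, 45_000, 50_000],
    "city": ["NY", "Boston", "New York", "NY", "Chicago", "Boston", "NY"],
})

print(df.dtypes)        # data type of each column
print(df.isna().sum())  # number of missing values per column
print(df.describe())    # summary statistics for numeric columns

# Flag outliers with the 1.5 * IQR rule (NaN rows are never flagged)
q1, q3 = df["age"].quantile([0.25, 0.75])
iqr = q3 - q1
outliers = df[(df["age"] < q1 - 1.5 * iqr) | (df["age"] > q3 + 1.5 * iqr)]
print(outliers)
```

Here the rule flags only the age of 120. Before deciding whether a value like that is a data-entry error or a genuine extreme, it helps to look at a histogram or box plot of the column too.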

Next comes data cleaning, which is all about fixing messy data. Got missing values? Either drop those rows or fill them in using mean, median, or predictive imputation. Watch out for duplicates—they can skew your results! Standardize inconsistent formats, fix typos, and ensure categorical data is uniform (e.g., “NY” vs. “New York”). This step is like tidying up your workspace before starting a big project.
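The cleaning steps above might look like this in pandas. This is a sketch on a hypothetical customer table; median imputation is used for simplicity, and the `"ny"` to `"new york"` mapping stands in for whatever synonyms your own data contains.

```python
import numpy as np
import pandas as pd

# Hypothetical messy customer table
df = pd.DataFrame({
    "customer_id": [1, 2, 2, 3, 4],
    "city": ["NY", " new york", " new york", "Boston", "boston "],
    "age": [34, np.nan, np.nan, 45, 29],
})

# 1. Drop exact duplicate rows (customer 2 appears twice)
df = df.drop_duplicates()

# 2. Standardize text: trim whitespace, lowercase, map known synonyms, title-case
df["city"] = (df["city"].str.strip()
                        .str.lower()
                        .replace({"ny": "new york"})
                        .str.title())

# 3. Fill missing numeric values with the column median (robust to outliers)
df["age"] = df["age"].fillna(df["age"].median())
print(df)
```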

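Once the data is clean, it’s time for preprocessing. Scale numerical features so they sit on comparable ranges: min-max normalization rescales values to a fixed range such as [0, 1], while standardization centers them at zero mean and unit variance. Scaling matters for distance- and gradient-based methods like k-NN, K-Means, SVMs, and neural networks, while tree-based models are largely unaffected by it. Convert categorical data into numbers using one-hot encoding, or label (ordinal) encoding when the categories have a natural order or you’re using tree-based models. And don’t forget feature selection: keeping only the most useful features makes your model smarter and faster.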

Before you start modeling, split your dataset into training, validation, and test sets. The model learns from the training set, you tune it against the validation set, and the test set gives a final, unbiased check that it hasn’t just memorized the data. Prepping data might feel tedious, but trust me, it’s the foundation of every great model. Do it right, and your results will be worth it!
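A common way to get a 60/20/20 train/validation/test split is to call scikit-learn’s `train_test_split` twice. The toy arrays and the proportions are assumptions; `stratify` keeps the class ratio the same in every split.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Toy data: 500 samples, 2 features, two balanced classes
X = np.arange(1000).reshape(500, 2)
y = np.array([0, 1] * 250)

# First hold out 20% as the final test set...
X_temp, X_test, y_temp, y_test = train_test_split(
    X, y, test_size=0.20, random_state=42, stratify=y)
# ...then split the remaining 80% into 75% train / 25% validation (60/20 overall)
X_train, X_val, y_train, y_val = train_test_split(
    X_temp, y_temp, test_size=0.25, random_state=42, stratify=y_temp)

print(len(X_train), len(X_val), len(X_test))  # 300 100 100
```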

2. Choose the Right Technique

Not all data mining problems are the same, so picking the right technique is key! First, figure out what you’re trying to achieve. If you’re classifying things (like spam vs. not spam), use classification algorithms like Decision Trees, Random Forests, or Neural Networks. Need to predict numerical values (like house prices)? That’s regression—Linear Regression or Gradient Boosting can help.

If you want to group similar data points, clustering is your best bet. K-Means and DBSCAN work well for segmenting customers or detecting patterns in unlabeled data. For uncovering hidden relationships in data (like “People who buy X also buy Y”), association rule mining—think Apriori or FP-Growth—is the way to go.
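To make the “People who buy X also buy Y” idea concrete, here is a brute-force sketch of the two basic rule metrics, support and confidence, on made-up shopping baskets. It is not Apriori or FP-Growth: those algorithms exist to find frequent itemsets efficiently at scale, but the rules they produce are scored with these same metrics.

```python
from collections import Counter
from itertools import combinations

# Hypothetical shopping baskets
transactions = [
    {"bread", "milk"},
    {"bread", "butter", "milk"},
    {"bread", "butter"},
    {"milk", "eggs"},
    {"bread", "butter", "eggs"},
]
n = len(transactions)

# Count single items and (sorted) item pairs across all baskets
item_counts = Counter(item for t in transactions for item in t)
pair_counts = Counter(pair for t in transactions
                      for pair in combinations(sorted(t), 2))

def rule_metrics(x, y):
    """Support and confidence of the rule {x} -> {y}."""
    both = pair_counts[tuple(sorted((x, y)))]
    support = both / n                  # share of baskets containing x AND y
    confidence = both / item_counts[x]  # share of x-baskets that also contain y
    return support, confidence

print(rule_metrics("bread", "butter"))  # (0.6, 0.75)
```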

Dealing with tons of features? Dimensionality reduction helps. PCA compresses many correlated features into a few components while keeping most of the variance, and t-SNE is mainly used to visualize high-dimensional data in two or three dimensions. And if you’re working with sequential data, like time-series forecasting, methods like ARIMA or LSTMs (Long Short-Term Memory networks) are game changers.
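A short PCA sketch using the Iris dataset bundled with scikit-learn, assuming you want to compress four features into two for plotting or modeling. Standardizing first is a common choice because PCA is driven by variance, so unscaled features with large ranges would dominate the components.

```python
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

X, y = load_iris(return_X_y=True)             # 150 samples, 4 features
X_scaled = StandardScaler().fit_transform(X)  # put features on the same scale

pca = PCA(n_components=2)
X_2d = pca.fit_transform(X_scaled)

print(X_2d.shape)                           # (150, 2)
print(pca.explained_variance_ratio_.sum())  # share of total variance kept
```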

The bottom line? Every problem has its best-fit algorithm, so understand your data and goal before choosing your approach. Experiment with different techniques, compare results, and fine-tune until you get the best performance. The right tool makes all the difference!
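Comparing candidate algorithms with the same cross-validation setup is a simple way to act on this advice. The sketch below uses scikit-learn’s bundled breast-cancer dataset; the three models and their settings are illustrative picks, not recommendations for your problem.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.tree import DecisionTreeClassifier

X, y = load_breast_cancer(return_X_y=True)

candidates = {
    # Logistic regression benefits from scaling, so it gets a scaler in front
    "logistic regression": make_pipeline(StandardScaler(),
                                         LogisticRegression(max_iter=1000)),
    "decision tree": DecisionTreeClassifier(random_state=0),
    "random forest": RandomForestClassifier(n_estimators=100, random_state=0),
}

scores = {}
for name, model in candidates.items():
    # Same 5 folds and same metric for every model, so results are comparable
    fold_scores = cross_val_score(model, X, y, cv=5, scoring="f1")
    scores[name] = fold_scores.mean()
    print(f"{name:20s} mean F1 = {fold_scores.mean():.3f} "
          f"(+/- {fold_scores.std():.3f})")
```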

3. Use Visualization

Numbers can be overwhelming, but visualization makes patterns clear. Before diving into algorithms, plot your data to understand distributions, trends, and relationships.

Histograms show how data is spread, while scatter plots reveal correlations. Use line charts to track trends over time and box plots to catch outliers that might distort results.

For categorical data, bar charts and pie charts make comparisons easy. If you’re working with many variables, heatmaps and pair plots uncover hidden relationships.
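The plot types mentioned above take only a few lines of Matplotlib. The data here is randomly generated for illustration; Seaborn’s `heatmap` and `pairplot` are convenient for the many-variable case but are left out to keep the sketch dependency-light.

```python
import matplotlib
matplotlib.use("Agg")  # off-screen backend so the script runs anywhere; omit in notebooks
import matplotlib.pyplot as plt
import numpy as np

rng = np.random.default_rng(0)
x = rng.normal(loc=50, scale=10, size=200)         # one numeric variable
y = 2 * x + rng.normal(loc=0, scale=15, size=200)  # a correlated second variable
categories, counts = ["A", "B", "C"], [45, 30, 25]  # made-up category counts

fig, axes = plt.subplots(2, 2, figsize=(10, 8))
axes[0, 0].hist(x, bins=20)
axes[0, 0].set_title("Histogram: distribution")
axes[0, 1].scatter(x, y, s=10)
axes[0, 1].set_title("Scatter plot: correlation")
axes[1, 0].boxplot([x, y])
axes[1, 0].set_title("Box plot: outliers")
axes[1, 1].bar(categories, counts)
axes[1, 1].set_title("Bar chart: category comparison")
fig.tight_layout()
# fig.savefig("overview.png") or plt.show() to look at the result
```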

Even after building a model, visualization helps! Use confusion matrices, ROC curves, and precision-recall graphs to evaluate performance. Tools like Matplotlib, Seaborn, and Tableau make this process simple. A good chart can reveal insights that raw numbers might miss!

4. Learn SQL & Python/R

Data mining starts with data, and SQL is key for retrieving it. Learn how to filter, sort, join, and aggregate data efficiently using SQL queries. It’s the go-to tool for handling databases, whether small or massive.

Once you have your data, Python and R help you clean, analyze, and model it. Python is great for automation, machine learning (Scikit-learn, TensorFlow), and visualization (Matplotlib, Seaborn). R excels in statistical analysis, data manipulation (dplyr), and visualization (ggplot2).

Knowing both SQL and a programming language like Python or R gives you the power to extract, transform, and analyze large datasets efficiently. The better you get at these tools, the faster and smarter your data mining process will be!
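A sketch of the SQL-then-Python workflow using Python’s built-in `sqlite3` module and an in-memory database, so it runs without a server. The `customers` and `orders` tables are hypothetical; SQL handles the filtering, joining, aggregating, and sorting, and pandas picks up the result for further work.

```python
import sqlite3

import pandas as pd

conn = sqlite3.connect(":memory:")  # throwaway in-memory database
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, region TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
    INSERT INTO customers VALUES (1, 'North'), (2, 'South'), (3, 'North');
    INSERT INTO orders VALUES (1, 1, 120.0), (2, 1, 80.0), (3, 2, 50.0),
                              (4, 3, 200.0), (5, 2, 30.0);
""")

# SQL does the extraction: filter, join, aggregate, sort
query = """
    SELECT c.region, COUNT(o.id) AS n_orders, SUM(o.amount) AS revenue
    FROM orders AS o
    JOIN customers AS c ON c.id = o.customer_id
    WHERE o.amount >= 40
    GROUP BY c.region
    ORDER BY revenue DESC;
"""
df = pd.read_sql_query(query, conn)
conn.close()

# pandas takes over for further transformation
df["avg_order"] = df["revenue"] / df["n_orders"]
print(df)
```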

5. Balance Your Dataset

In real-world data, some categories may appear far more often than others. This is called imbalanced data, and it can lead to biased models. For example, in fraud detection, fraudulent transactions might make up less than 1% of the data, so a model can score well while largely ignoring them.

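To address this, use oversampling to duplicate minority-class examples or undersampling to remove some majority-class examples. A more advanced method is SMOTE (Synthetic Minority Over-sampling Technique), which creates synthetic minority examples by interpolating between existing minority samples and their nearest neighbors. Whichever you choose, resample only the training data so the test set still reflects the real class distribution.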
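Random oversampling and undersampling can be sketched with `sklearn.utils.resample` on synthetic data (a 2% minority class, an assumed ratio). For SMOTE itself, the separate imbalanced-learn package provides `imblearn.over_sampling.SMOTE`; it is not used here so the example needs only scikit-learn.

```python
import numpy as np
from sklearn.utils import resample

rng = np.random.default_rng(0)
X = rng.normal(size=(1000, 3))
y = np.array([1] * 20 + [0] * 980)  # 2% minority class

X_min, y_min = X[y == 1], y[y == 1]
X_maj, y_maj = X[y == 0], y[y == 0]

# Random oversampling: draw minority rows with replacement until classes match
X_min_up, y_min_up = resample(X_min, y_min, replace=True,
                              n_samples=len(y_maj), random_state=0)
X_bal = np.vstack([X_maj, X_min_up])
y_bal = np.concatenate([y_maj, y_min_up])
print(np.bincount(y_bal))  # [980 980]

# Random undersampling: keep only as many majority rows as minority rows
X_maj_down, y_maj_down = resample(X_maj, y_maj, replace=False,
                                  n_samples=len(y_min), random_state=0)
print(len(y_maj_down))  # 20
```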

Besides resampling, try using cost-sensitive algorithms that penalize misclassifications of the minority class. Also, focus on metrics like precision, recall, and F1-score instead of just accuracy, as accuracy can be misleading in imbalanced datasets.
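For the cost-sensitive route, many scikit-learn classifiers accept a `class_weight` parameter. This sketch trains logistic regression on synthetic data with roughly 3% positives, with and without `class_weight="balanced"`, and reports minority-class recall; the exact numbers depend on the data, so none are shown here.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import recall_score
from sklearn.model_selection import train_test_split

# Synthetic data where only about 3% of samples belong to the positive class
X, y = make_classification(n_samples=5000, n_features=10,
                           weights=[0.97, 0.03], random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, stratify=y, random_state=0)

recalls = {}
for name, weights in [("plain", None), ("balanced", "balanced")]:
    # "balanced" weights each class inversely to its frequency in y_train
    model = LogisticRegression(max_iter=1000, class_weight=weights)
    model.fit(X_train, y_train)
    recalls[name] = recall_score(y_test, model.predict(X_test))
    print(f"{name:9s} minority-class recall: {recalls[name]:.3f}")
```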

Balancing your dataset ensures your model doesn’t just favor the majority class but truly learns to detect important patterns!

6. Avoid Overfitting

Overfitting occurs when a model learns the noise in the training data instead of the actual patterns, so it performs exceptionally well on training data but poorly on unseen data. It usually happens when a model is too complex for the amount of data available. To detect and avoid it, use cross-validation, which trains and evaluates the model on different splits of the data to estimate how well it generalizes. Pruning is useful for decision trees, removing branches that add little to their predictive power.
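A sketch of spotting overfitting with 5-fold cross-validation, comparing an unpruned decision tree with one pruned through scikit-learn’s cost-complexity parameter `ccp_alpha`. The dataset is scikit-learn’s bundled breast-cancer set, and `ccp_alpha=0.01` is an arbitrary illustrative value that you would normally tune.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import cross_validate
from sklearn.tree import DecisionTreeClassifier

X, y = load_breast_cancer(return_X_y=True)

models = {
    "unpruned tree": DecisionTreeClassifier(random_state=0),
    # ccp_alpha > 0 enables cost-complexity pruning; 0.01 is illustrative only
    "pruned tree": DecisionTreeClassifier(ccp_alpha=0.01, random_state=0),
}

results = {}
for name, model in models.items():
    cv = cross_validate(model, X, y, cv=5, return_train_score=True)
    results[name] = (cv["train_score"].mean(), cv["test_score"].mean())
    print(f"{name}: train acc {results[name][0]:.3f}, "
          f"validation acc {results[name][1]:.3f}")
```

A fully grown tree can fit its training folds perfectly, so a large gap between the train and validation numbers is the overfitting signal to watch for.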

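Regularization techniques like L1 (Lasso) and L2 (Ridge) penalties control model complexity by discouraging large coefficients, which reduces excessive reliance on specific features; L1 can even shrink some coefficients to exactly zero, effectively dropping those features. Always compare your model’s performance on validation data with its training performance to make sure it learns patterns that generalize rather than memorizing the training set.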
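The practical difference between the two penalties is easy to see on synthetic data where only 5 of 20 features matter (an assumed setup). `alpha=1.0` is an arbitrary strength for both models; larger values shrink coefficients more.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import Lasso, Ridge

# 20 features, but only 5 actually influence the target
X, y = make_regression(n_samples=200, n_features=20, n_informative=5,
                       noise=10.0, random_state=0)

lasso = Lasso(alpha=1.0).fit(X, y)  # L1 penalty: can zero out coefficients
ridge = Ridge(alpha=1.0).fit(X, y)  # L2 penalty: shrinks but keeps them non-zero

print("non-zero Lasso coefficients:", np.sum(lasso.coef_ != 0))
print("non-zero Ridge coefficients:", np.sum(ridge.coef_ != 0))
```

Lasso typically sets many of the irrelevant coefficients to exactly zero, while Ridge shrinks all of them but leaves every one non-zero.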

7. Feature Engineering Matters

Feature engineering is the process of transforming raw data into more useful inputs for a model. Simply feeding a model unprocessed data rarely yields the best results. Creating new features, selecting the most relevant ones, and encoding categorical data properly can significantly boost accuracy and efficiency.

For example, instead of using a raw timestamp, extract meaningful attributes like “day of the week,” “time of day,” or “season” to capture behavioral patterns in data. In text mining, converting text into TF-IDF (Term Frequency-Inverse Document Frequency) values instead of raw word counts can improve model performance, because TF-IDF down-weights words that appear in almost every document. The more meaningful and well-structured your features are, the more effectively your model can learn from the data.

8. Automate with Tools

Data mining involves repetitive and time-consuming tasks like data preprocessing, feature selection, and hyperparameter tuning. Instead of doing everything manually, use tools that speed up and simplify the process.

Weka and Orange provide user-friendly, drag-and-drop interfaces for quick model building. For more control and flexibility, Python libraries like Scikit-learn, TensorFlow, and Pandas allow automation of everything from cleaning data to training machine learning models. Automation not only saves time but also ensures consistent, repeatable results, allowing you to focus on fine-tuning models and extracting insights rather than manual labor.
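A scikit-learn `Pipeline` combined with `GridSearchCV` is one way to automate preprocessing and hyperparameter tuning in a single, repeatable object. The dataset, the SVM model, and the parameter grid below are assumptions chosen for a quick example.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.impute import SimpleImputer
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, stratify=y, random_state=0)

pipe = Pipeline([
    ("impute", SimpleImputer(strategy="median")),  # harmless here, useful on real data
    ("scale", StandardScaler()),
    ("model", SVC()),
])

# Step name + "__" + parameter name addresses a parameter inside the pipeline
param_grid = {"model__C": [0.1, 1, 10], "model__kernel": ["linear", "rbf"]}
search = GridSearchCV(pipe, param_grid, cv=5, scoring="f1")
search.fit(X_train, y_train)

test_f1 = search.score(X_test, y_test)  # uses the same "f1" scoring
print("best params:", search.best_params_)
print("test F1:", round(test_f1, 3))
```

Because the imputer and scaler live inside the pipeline, they are re-fit on each training fold during the search, which avoids leaking information from the validation folds.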

9. Interpret Results Carefully

Many beginners focus too much on accuracy, but in data mining, accuracy alone is not enough. In cases like fraud detection or medical diagnosis, where the class you care about is rare, accuracy can be misleading.

For example, if only 1% of transactions in a dataset are fraudulent, a model that predicts “no fraud” for every transaction will be 99% accurate—but completely useless. Instead, focus on metrics like precision (how many predicted positives are correct), recall (how many actual positives were detected), and F1-score (a balance of precision and recall).
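The 1% fraud example can be checked directly with scikit-learn’s metric functions. The labels below are synthetic, and the “model” is simply a constant prediction of “no fraud”.

```python
import numpy as np
from sklearn.metrics import accuracy_score, f1_score, precision_score, recall_score

# 1,000 transactions, 10 of which (1%) are fraudulent (label 1)
y_true = np.array([1] * 10 + [0] * 990)
# A "model" that always predicts "no fraud"
y_pred = np.zeros_like(y_true)

print("accuracy :", accuracy_score(y_true, y_pred))                    # 0.99
print("precision:", precision_score(y_true, y_pred, zero_division=0))  # 0.0
print("recall   :", recall_score(y_true, y_pred))                      # 0.0
print("F1       :", f1_score(y_true, y_pred, zero_division=0))         # 0.0
```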

Visualization tools like confusion matrices, ROC (Receiver Operating Characteristic) curves, and precision-recall graphs give a deeper understanding of model performance. A truly good model doesn’t just score well overall; it gets the rare, important cases right.
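A sketch of computing the evaluation views mentioned above, using logistic regression on synthetic imbalanced data (about 5% positives, an assumption). `roc_curve` and `precision_recall_curve` return the points you would pass to a plotting library such as Matplotlib.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import (average_precision_score, confusion_matrix,
                             precision_recall_curve, roc_auc_score, roc_curve)
from sklearn.model_selection import train_test_split

X, y = make_classification(n_samples=2000, weights=[0.95, 0.05], random_state=1)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, stratify=y, random_state=1)

model = LogisticRegression(max_iter=1000).fit(X_train, y_train)
scores = model.predict_proba(X_test)[:, 1]  # probability of the positive class
pred = (scores >= 0.5).astype(int)          # default 0.5 decision threshold

cm = confusion_matrix(y_test, pred)  # rows: actual class, columns: predicted class
auc = roc_auc_score(y_test, scores)
print(cm)
print("ROC AUC:", round(auc, 3))
print("Average precision:", round(average_precision_score(y_test, scores), 3))

# Curve points for plotting
fpr, tpr, _ = roc_curve(y_test, scores)
precision, recall, _ = precision_recall_curve(y_test, scores)
```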

10. Keep Learning

Data mining is constantly evolving, with new algorithms, tools, and techniques emerging regularly. What works today may become outdated tomorrow, so continuous learning is essential. Follow data science blogs, attend webinars, and explore platforms like Kaggle to practice real-world problems. Engage with open-source communities and experiment with different models to stay ahead of the curve.

Moreover, read research papers on new methodologies, and don’t be afraid to explore advanced techniques like deep learning, reinforcement learning, and AutoML. The best data miners are those who never stop learning, because in this field, innovation never stops!

Written by Claudia Jeffrey