In this blog, we look at the top 10 machine learning demos offered by Data Science Dojo, which make it easy to try ML (machine learning) techniques for free.

As more people enter data science, machine learning and artificial intelligence have become some of the top emerging areas of work in the 21st century, and many people are choosing careers in them.

Another way to look at these technologies is through their use in business. For this reason, Data Science Dojo recently revamped its platform, Machine Learning Demos. Some of the demos are built on Azure APIs, while others run on different trained ML models, and all of them are free to use.

Machine learning demos from DSD

DSD offers a range of training programs and Data Science Bootcamps to help you get started in the field, and these demos are an add-on to our teaching.

So, if you are interested in exploring the practical applications of this technology, this set of free ML demos can help in many ways. The top ones are listed below, so go and check them out:

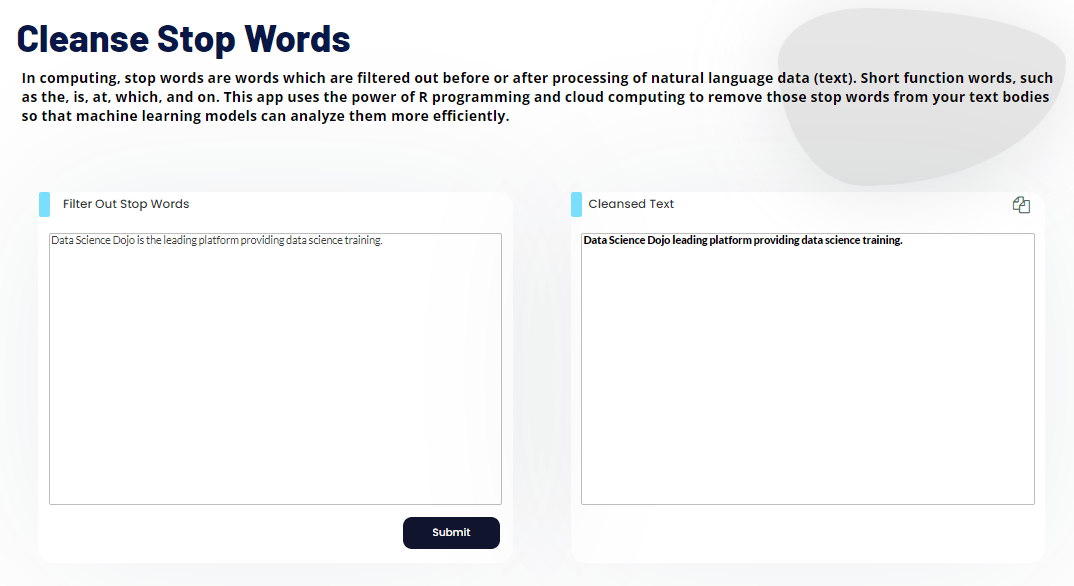

1. Cleanse stop words:

This demo uses Azure services on the backend and offers an easy-to-use interface on the front end. You can use it to clean text data before passing it to ML models. Go to the Cleanse Stop Words demo, input your text, and get the cleaned text in one click.
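To give a feel for what stop-word cleansing does, here is a minimal sketch in plain Python. It is not the demo's Azure-backed implementation; the `STOP_WORDS` set and the `remove_stop_words` function are hypothetical names chosen for illustration, and the word list is deliberately tiny.

```python
import re

# Small illustrative stop-word list. This is an assumption for the sketch,
# not the list used by the Cleanse Stop Words demo.
STOP_WORDS = {
    "a", "an", "the", "is", "are", "was", "were",
    "in", "on", "of", "to", "and", "or", "it", "this", "that",
}


def remove_stop_words(text, stop_words=STOP_WORDS):
    """Lowercase the text, split it into word tokens, and drop stop words."""
    tokens = re.findall(r"[a-z0-9']+", text.lower())
    return " ".join(token for token in tokens if token not in stop_words)


print(remove_stop_words("This is a demo of the stop word cleaner."))
# prints: demo stop word cleaner
```

Real pipelines usually rely on a much longer, language-specific stop-word list, but the idea is the same: filter out very common words that carry little signal for the model.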

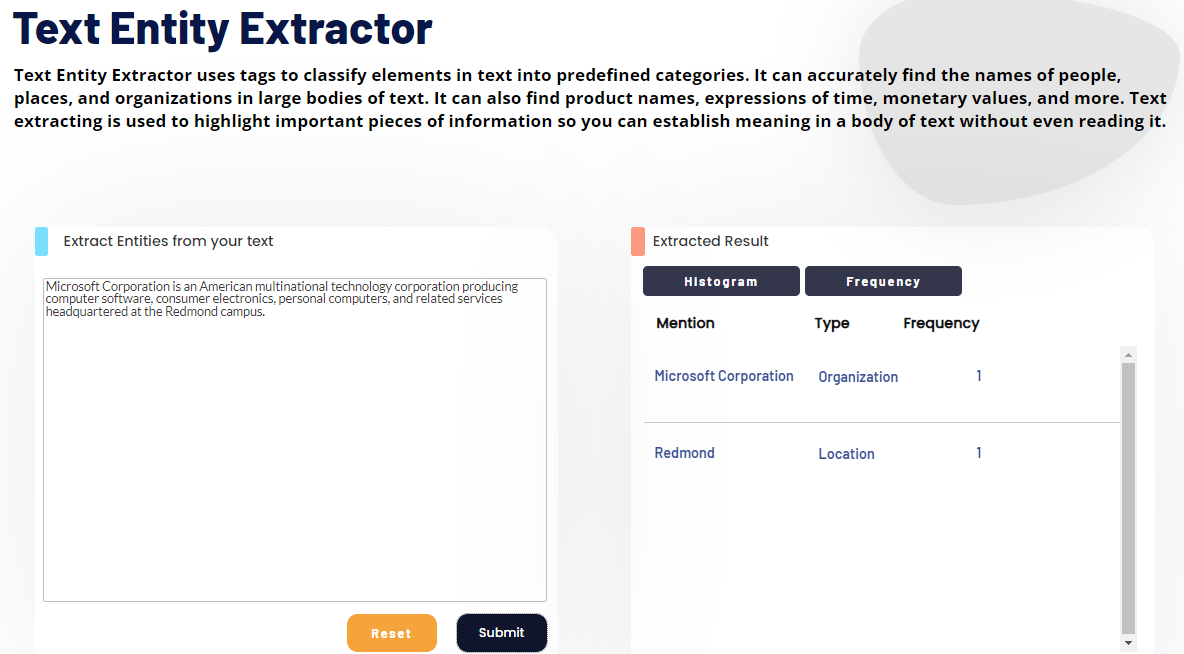

2. Text entity extractor:

Entity extraction helps to sort unstructured data and find valuable information in a given text. This demo is based on an Azure API, and its simple UI (user interface) provides an effortless way to use Azure services for entity extraction. Go to the Text Entity Extractor demo and input your text to categorize it by semantic type.

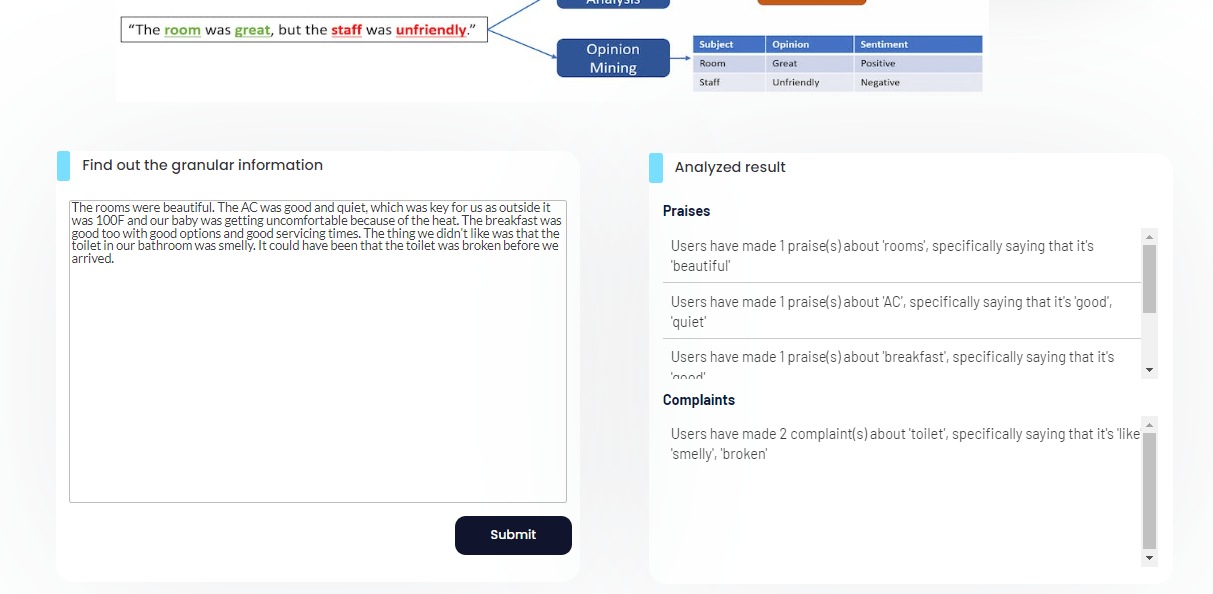

3. Opinion mining:

Sentiment analysis, also referred to as opinion mining, is one of the key techniques in Natural Language Processing (NLP). Opinion mining is valuable to businesses because it extracts sentiment from customer feedback. This demo is based on the Azure Text API, and its UI separates the praises from the complaints in the given text. Try the Opinion Mining demo!
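As a rough illustration of separating praises from complaints, the sketch below scores each sentence against two tiny word lists. This is a toy lexicon approach, not the Azure Text API that powers the demo; the `POSITIVE` and `NEGATIVE` sets and the `split_feedback` function are assumptions made up for this example.

```python
import re

# Tiny illustrative lexicons. These are assumptions for the sketch and
# are far smaller than anything a production system would use.
POSITIVE = {"great", "good", "love", "excellent", "friendly", "fast"}
NEGATIVE = {"bad", "slow", "poor", "rude", "broken", "terrible"}


def split_feedback(text):
    """Return (praises, complaints) as two lists of sentences."""
    praises, complaints = [], []
    # Split on whitespace that follows sentence-ending punctuation.
    for sentence in re.split(r"(?<=[.!?])\s+", text.strip()):
        words = set(re.findall(r"[a-z']+", sentence.lower()))
        score = len(words & POSITIVE) - len(words & NEGATIVE)
        if score > 0:
            praises.append(sentence)
        elif score < 0:
            complaints.append(sentence)
    return praises, complaints


praises, complaints = split_feedback(
    "The staff was friendly. The delivery was slow."
)
print(praises)      # ['The staff was friendly.']
print(complaints)   # ['The delivery was slow.']
```

A word-list approach like this misses negation and context ("not good"), which is one reason a trained service is a better fit for real customer feedback.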

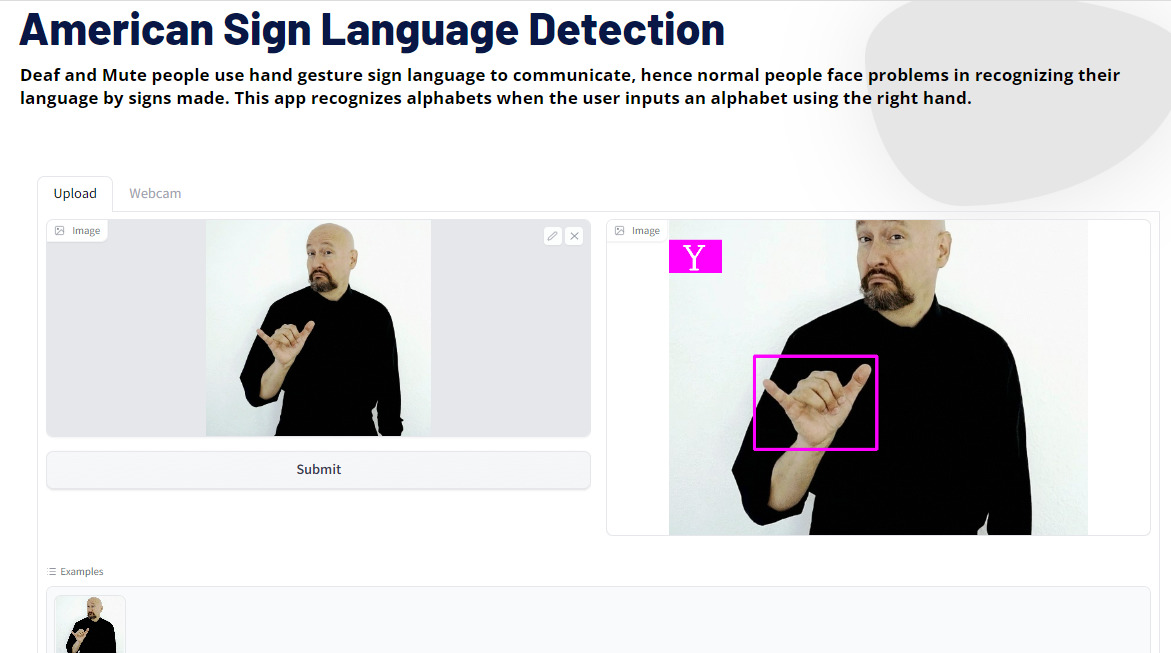

4. American sign language detection:

Systems for recognizing sign language are being developed to make it easier for signers and non-signers to communicate. This demo is built on MediaPipe, a popular Python package, along with other packages such as TensorFlow, cvzone, and NumPy. Go to the Sign Language demo: when you sign a letter of the alphabet with your right hand in front of the camera, the demo detects the letter.

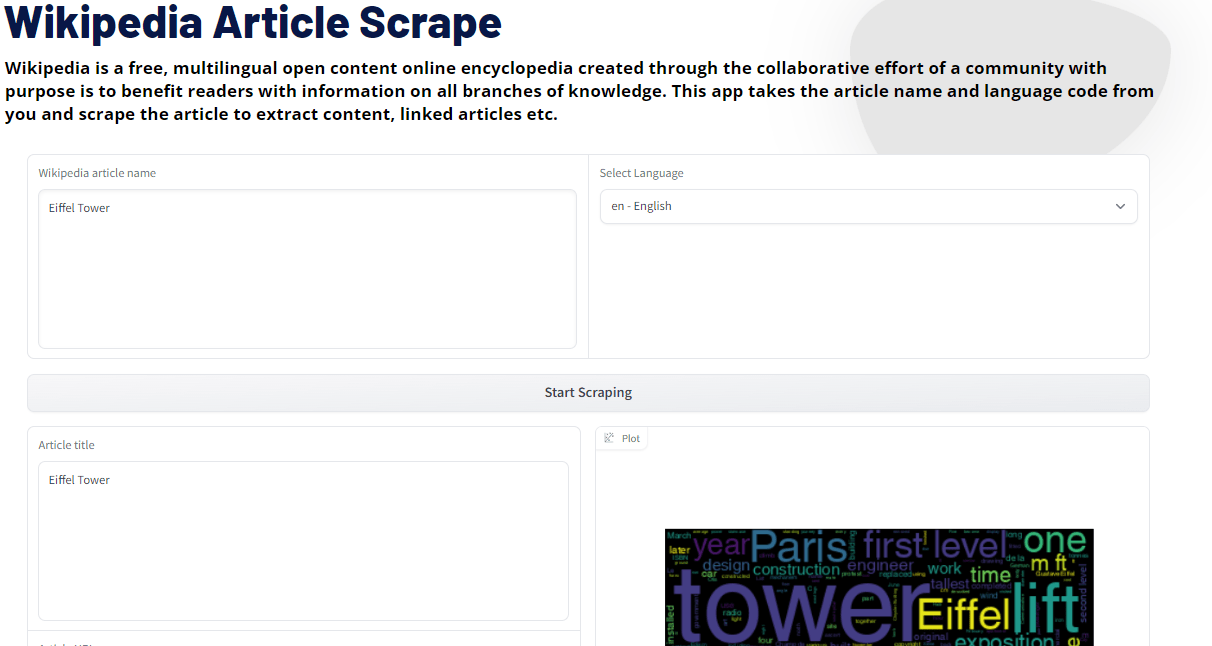

5. Wikipedia article scrape:

Wikipedia is a free, open-content, multilingual online encyclopedia. This demo is based on the Python packages wikipedia and wordcloud, and it can help with research by finding articles. Go to Wikipedia Article Scrape, give the article name and language code, and scrape the article to extract its content, linked articles, and more.

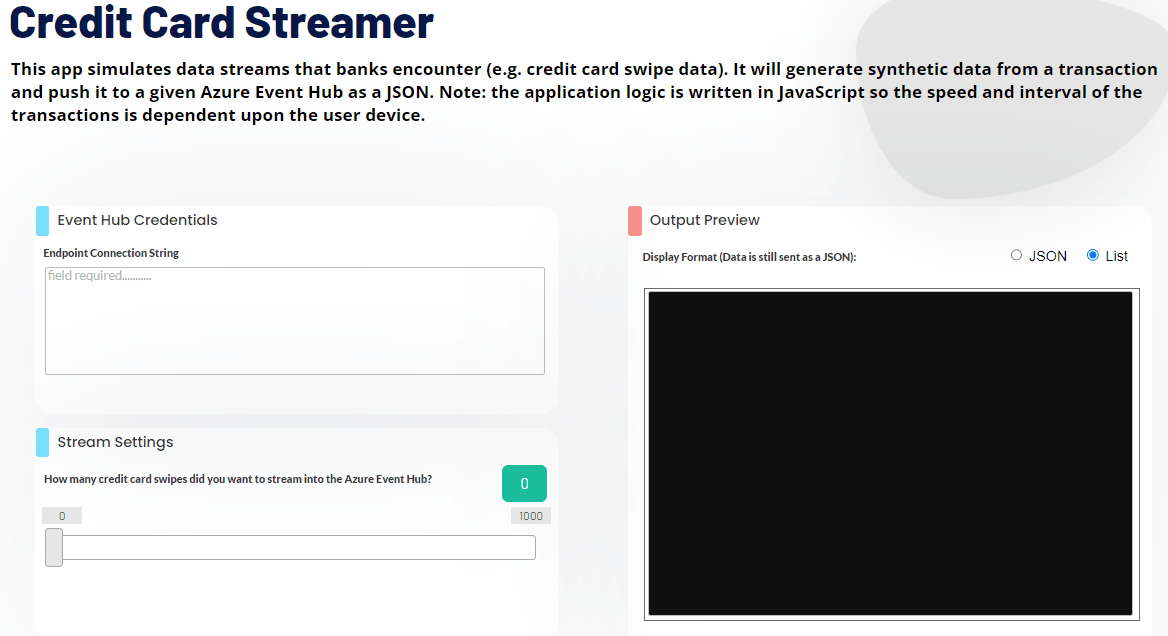

6. Credit card streamer:

We have a few data streaming demos, and Credit Card Streamer is one of them. This demo is based on the Azure SDK for Python. Give it the endpoint string of your Event Hub and set the stream; the app then connects to the Event Hub and sends your swipes to it. Go to Credit Card Streamer and try it.
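The flow described above can be sketched roughly as follows, assuming the `azure-eventhub` package and an Event Hub connection string. The swipe fields (`card_id`, `amount`, `timestamp`) and the function names are hypothetical and are not taken from the demo itself.

```python
import json
import random
from datetime import datetime, timezone


def make_swipe(rng):
    """Build one synthetic credit card swipe (field names are illustrative)."""
    return {
        "card_id": f"card-{rng.randint(1000, 9999)}",
        "amount": round(rng.uniform(1.0, 500.0), 2),
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }


def send_swipes(connection_string, eventhub_name, swipes):
    """Send swipes to Azure Event Hub as one batch of JSON events.

    Requires `pip install azure-eventhub`. The import lives inside the
    function so the rest of the sketch runs without the SDK installed.
    """
    from azure.eventhub import EventData, EventHubProducerClient

    producer = EventHubProducerClient.from_connection_string(
        connection_string, eventhub_name=eventhub_name
    )
    with producer:
        batch = producer.create_batch()
        for swipe in swipes:
            batch.add(EventData(json.dumps(swipe)))
        producer.send_batch(batch)


rng = random.Random(42)  # seeded so the synthetic data is repeatable
swipes = [make_swipe(rng) for _ in range(3)]
print(json.dumps(swipes[0], indent=2))
# To actually stream, call send_swipes with your own connection string
# and Event Hub name.
```

Keeping event generation separate from sending makes it easy to inspect the payload locally before pointing the sender at a real Event Hub.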

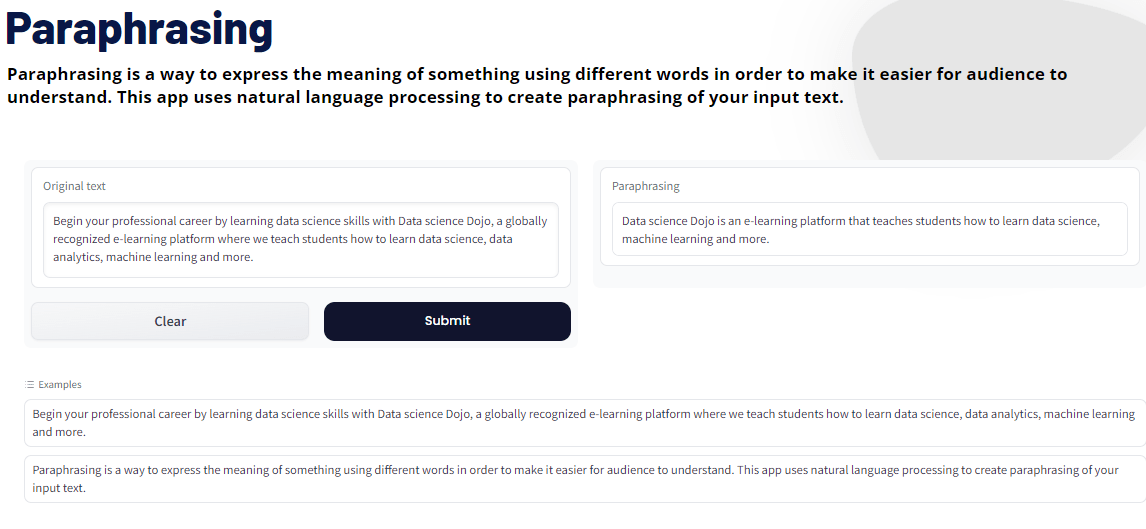

7. Paraphrasing:

The basic objective of paraphrasing is to translate the original message into your own words to demonstrate that you have understood the paragraph sufficiently to restate it.

This demo is built in Python and uses the Transformers library along with other popular Python packages such as PyTorch, timm, SentencePiece, and sentence-splitter. Go to the Paraphrasing demo, which uses natural language processing to paraphrase your input text.

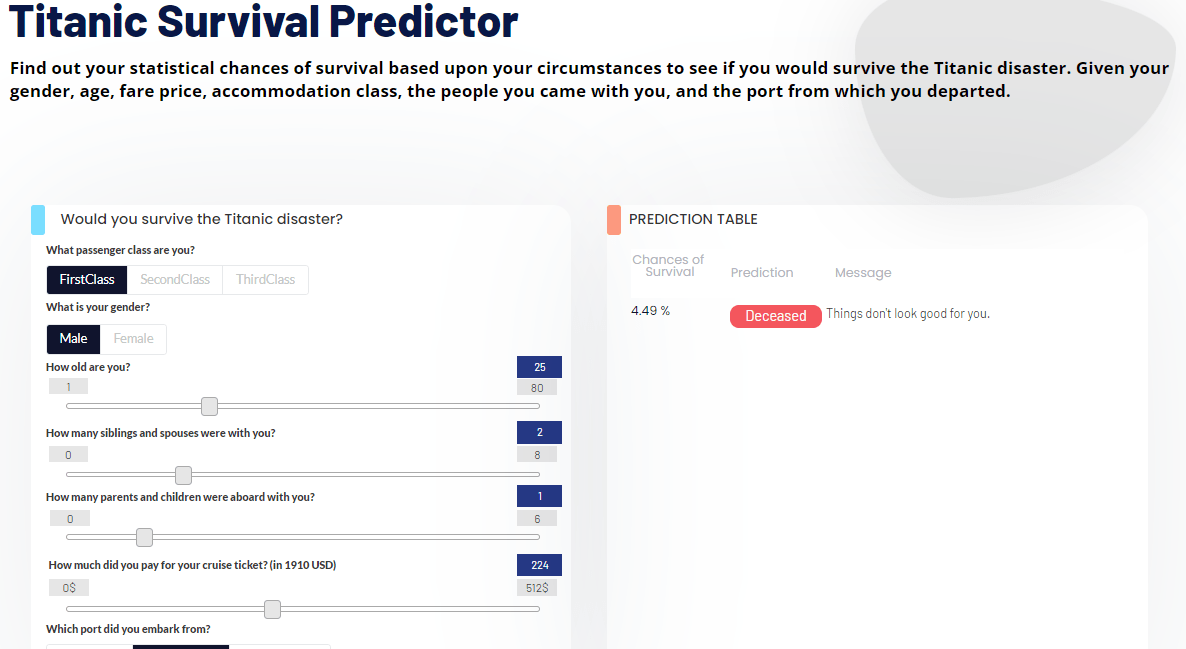

8. Titanic survival predictor:

This demo comes from our predictive demos category and is based on an Azure API. It predicts whether a person would have survived the Titanic disaster based on the inputs you provide. The backend is built in Python, while the UI shows a message based on the chances of survival. Go to the Titanic Survival Predictor demo and try it once (just for curiosity 😊).

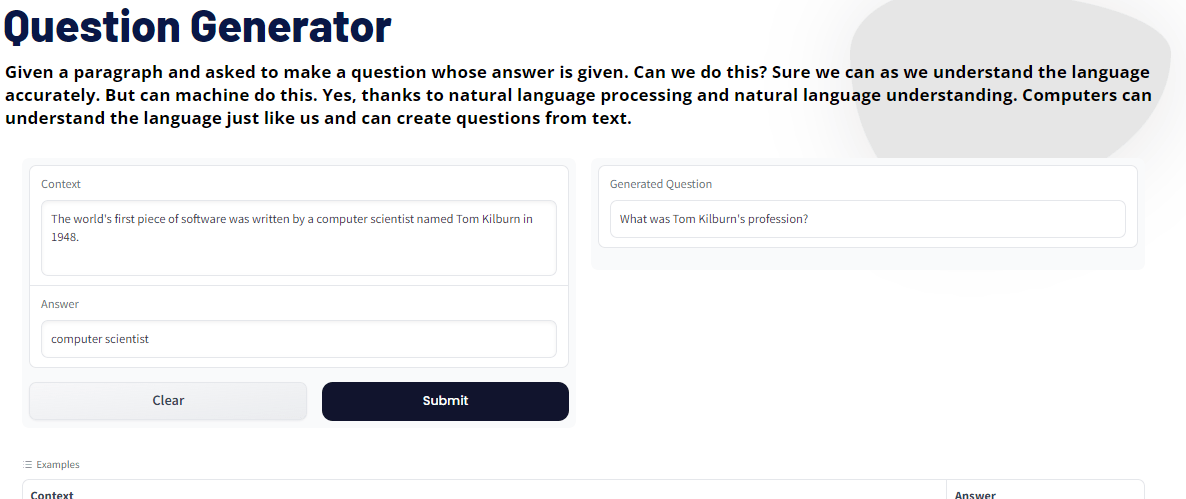

9. Question generator:

This demo is built on the Python library Transformers. The Transformers package offers over 30 pre-trained models covering 100 languages, along with eight major architectures for natural language understanding (NLU) and natural language generation (NLG).

This demo is useful for educational purposes, since it saves teachers the time and effort of writing a quiz on given content. Go to the Question Generator demo, give the context of the question and the correct answer, then click submit. The demo automatically generates a question based on those inputs.

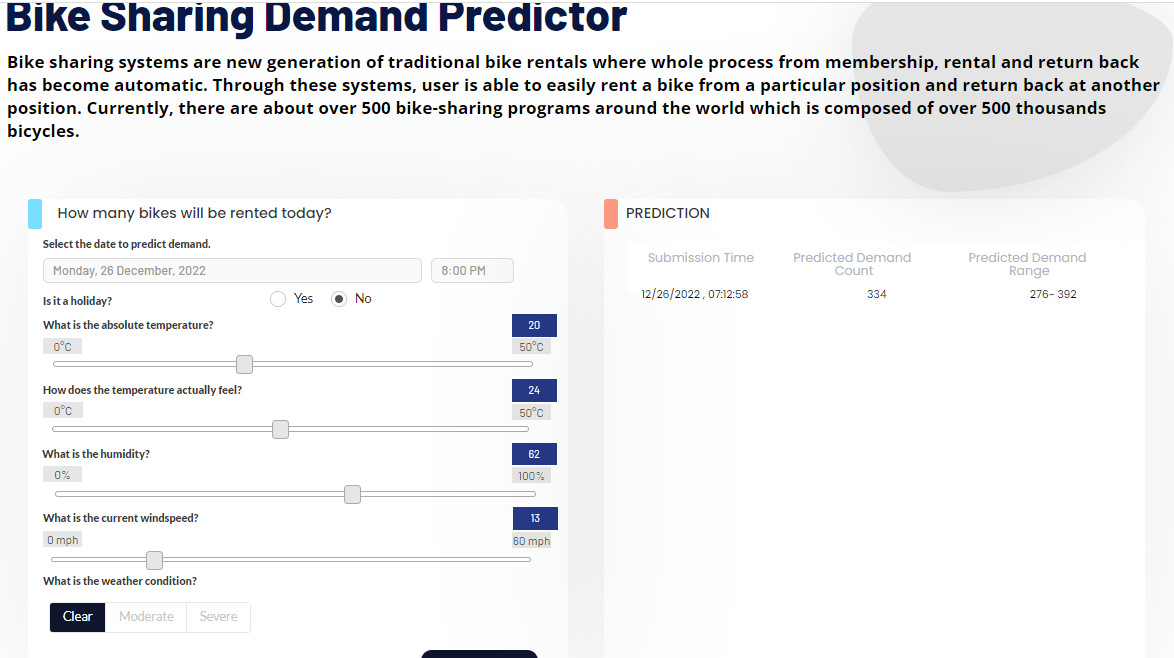

10. Bike sharing demand predictor:

The last demo in this blog also comes from the predictive demos category. It uses an Azure API to predict bike-sharing demand, and its UI lets you change the inputs dynamically with sliders. Go and check out the Bike Sharing Demand Predictor.

Stay updated for interesting ML demos

In 2022, we completely revamped our demo site, which now hosts 29+ demos. We have grouped them into categories so that users can pick a demo based on the task. The ones above are only a few of the top ML demos; the site has many other informative and interesting ones.

Once you are familiar with data-driven tasks, the most important step is to use them to improve your business. We have received a lot of positive feedback from customers this year, which motivates us to improve the site and add more advanced demos. I assure you the demos are worth using, so go and explore: