Are you confused about where to start working on your large language model? It all starts with an understanding of a typical LLM project lifecycle. As part of the generative AI world, LLMs have led to innovation in machine-learning tasks.

Let’s take a look at the steps that make up an LLM project lifecycle and their impact on the process.

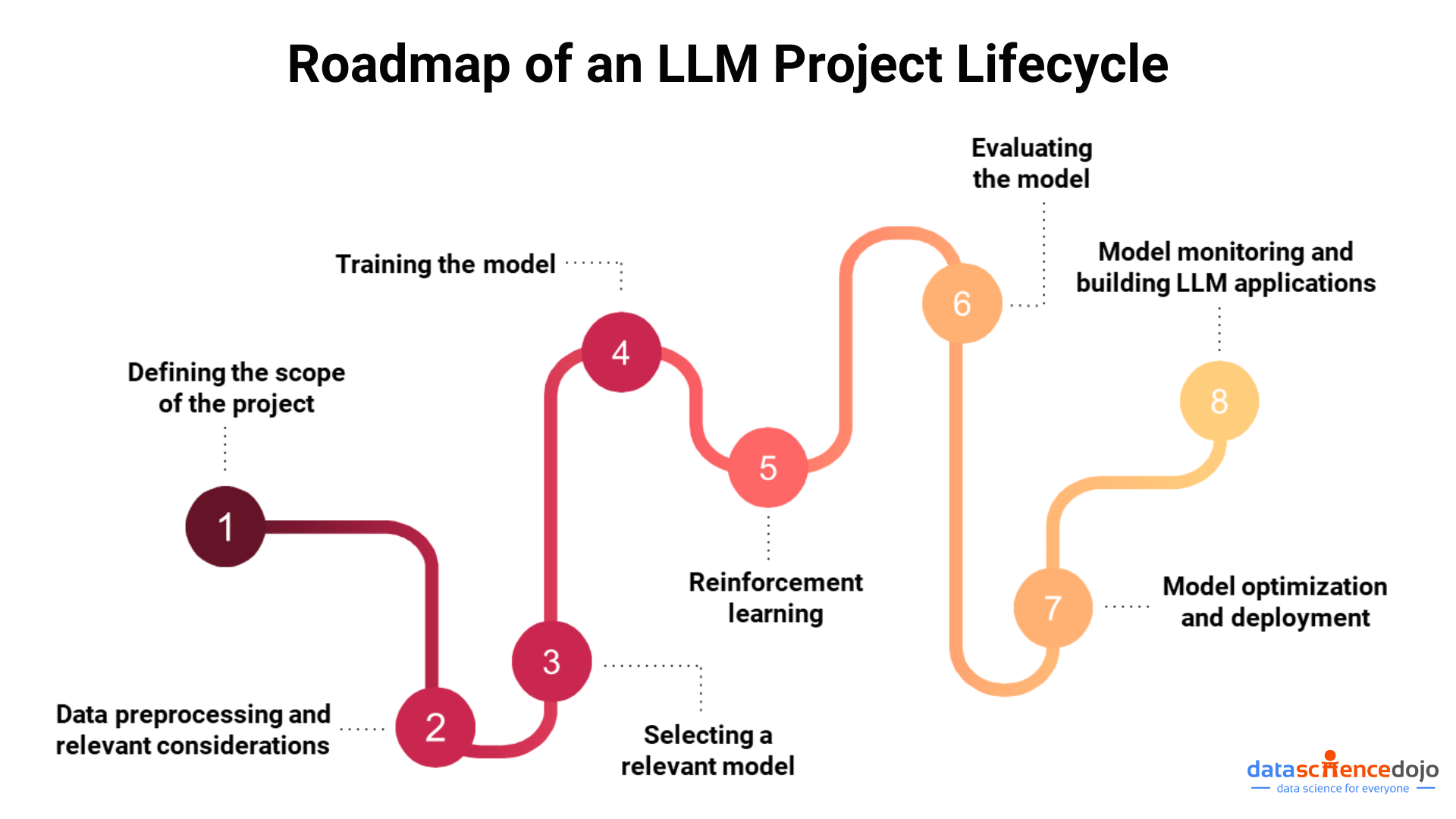

Roadmap to Understanding an LLM Project Lifecycle

Within the realm of generative AI, a project involving large language models can be a daunting task. It demands proper coordination and skills to execute successfully. To make it easier to follow, we have broken down a typical LLM project lifecycle into multiple steps.

In this section, we will delve deeper into the various stages of the process.

Defining the Scope of the Project

It is paramount to begin your LLM project lifecycle by understanding its scope. It begins with a comprehension of the problem you aim to solve. Market research and stakeholder interviews are a good place to start at this stage. You must also review the available technological possibilities.


LLMs are multifunctional, but the size and architecture of a model determine what it can do, from long-form text generation and text summarization to language translation. Based on your research, you can determine the specifics of your LLM project and hence its scope.

The next part of this step is to explore whether a generative AI solution is feasible for your problem. Use the findings to set clear and measurable objectives, since they define the roadmap for your LLM project lifecycle.

Data Preprocessing and Relevant Considerations

Now that you have defined your problem, the next step is to look for relevant data. Data collection can encompass various sources, depending on your problem. Once you have the data, you need to clean and preprocess it. The goal is to make the data usable for model training.

Moreover, it is important in your LLM project lifecycle to weigh the ethical and legal aspects of working with data. You must have clearance to use the data, which means complying with data protection laws, anonymizing personal information, and obtaining user consent. You must also reduce potential biases by making sure the data reflects a diversity of perspectives.
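The cleaning and anonymization steps described above can be sketched in a few lines. This is a minimal illustration only: the regular expressions, the `[EMAIL]` and `[PHONE]` placeholders, and the `min_chars` threshold are assumptions made for the example, and real projects typically need far more thorough PII detection and deduplication.

```python
import re

# Deliberately simple patterns (assumptions for this sketch, not exhaustive).
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def clean_text(text: str) -> str:
    """Mask simple PII patterns and collapse runs of whitespace."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    return re.sub(r"\s+", " ", text).strip()


def preprocess(records: list[str], min_chars: int = 20) -> list[str]:
    """Clean each record, drop very short ones, and remove duplicates.

    Duplicates are detected case-insensitively after cleaning.
    """
    seen: set[str] = set()
    cleaned: list[str] = []
    for raw in records:
        text = clean_text(raw)
        key = text.lower()
        if len(text) < min_chars or key in seen:
            continue
        seen.add(key)
        cleaned.append(text)
    return cleaned
```

Calling `preprocess` on a list of raw strings returns the records that survive cleaning, in their original order.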

Selecting a Relevant Model

When it comes to model selection, you have two choices: use an existing base model or pre-train your own from scratch. Based on your project demands, you can start by exploring the available models to check whether any of them aligns with your requirements.

Models like GPT-4 and PaLM 2 are powerful options. You can also explore FLAN-T5, an instruction-tuned variant of T5 (Text-to-Text Transfer Transformer) that is available on Hugging Face. However, you need to consider license and certification details before choosing an open-source base model.

If none of the existing models meets your demands, you need to pre-train a model from scratch. This requires machine-learning expertise, computational resources, and time. The large investment in pre-training results in a highly customized model for your project.

- What is pre-training? It is a compute-intensive phase of self-supervised learning on large amounts of unlabeled text. In an LLM project lifecycle, the training objective is primarily text generation, that is, next-token prediction. The transformer architecture is chosen before this process begins, and the model's weights are learned during it. The result is a foundation model.
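To make next-token prediction concrete, here is a minimal sketch of the loss that is commonly minimized during pre-training: the average cross-entropy between the model's predicted distribution at each position and the token that actually comes next. The function names and the plain-list representation of logits are assumptions for illustration; real training code works on batched tensors in a deep-learning framework.

```python
import math


def softmax(logits: list[float]) -> list[float]:
    """Convert raw scores into probabilities (shifted by the max for stability)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def next_token_loss(logits_per_position: list[list[float]],
                    token_ids: list[int]) -> float:
    """Average cross-entropy where position t is scored against token t + 1.

    logits_per_position[t] holds one score per vocabulary entry, produced
    after the model has read tokens 0..t.
    """
    losses = []
    for t in range(len(token_ids) - 1):
        probs = softmax(logits_per_position[t])
        target = token_ids[t + 1]
        losses.append(-math.log(probs[target]))
    return sum(losses) / len(losses)
```

A model that assigns equal probability to every token in a vocabulary of size V gets a loss of log(V); training pushes the loss below that value by putting more probability on the tokens that actually follow.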

Training the Model

The next step in the LLM project lifecycle is to adapt and train the foundation model. The goal is to align the model with your project requirements. Let’s look at some common techniques used at this stage.

- Prompt engineering: As the name suggests, this method relies on prompt design. You must structure prompts carefully for your LLM to get accurate results. It requires you to have a proper understanding of your model and the project goals.

When a prompt is provided to the model and the model generates text in response, the process is called inference. Prompt engineering is the simplest way to refine your model's responses and enhance its performance, because it does not change the model's weights.

- Fine-tuning: At this point, you focus on customizing your model to your specific project needs. The fine-tuning process enables you to convert a generic model into a tailored one by using domain-specific data, resulting in optimized performance for particular tasks. It is a supervised learning task that updates the weights of the foundation model using labeled examples, making it more effective on the target task.

- Caching: It is one of the lesser-known but important techniques at this stage. It involves storing frequently used prompts and their responses so that repeated requests are answered faster. Caching high-dimensional vectors (embeddings) likewise speeds up information retrieval and makes responses more efficient to generate.
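The caching idea from the list above can be sketched as a small prompt-response cache with least-recently-used eviction. Everything here is hypothetical: `PromptCache`, its `generate` callback (a stand-in for whatever function calls your model), and the choice to treat prompts that differ only in case or whitespace as identical are assumptions for illustration, and the last of these would be wrong for case-sensitive tasks.

```python
from collections import OrderedDict
from typing import Callable


class PromptCache:
    """Stores responses for recently seen prompts (LRU eviction)."""

    def __init__(self, generate: Callable[[str], str], max_entries: int = 1000):
        self._generate = generate      # hypothetical model-calling function
        self._max_entries = max_entries
        self._store = OrderedDict()    # normalized prompt -> response
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(prompt: str) -> str:
        # Assumption: case and extra whitespace do not change the meaning.
        return " ".join(prompt.lower().split())

    def ask(self, prompt: str) -> str:
        key = self._key(prompt)
        if key in self._store:
            self.hits += 1
            self._store.move_to_end(key)     # mark as most recently used
            return self._store[key]
        self.misses += 1
        response = self._generate(prompt)
        self._store[key] = response
        if len(self._store) > self._max_entries:
            self._store.popitem(last=False)  # evict least recently used
        return response
```

Wrapping the model call this way means a repeated prompt is served from memory instead of triggering another inference pass; the `hits` and `misses` counters give a rough view of how much work the cache is saving.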

Reinforcement Learning

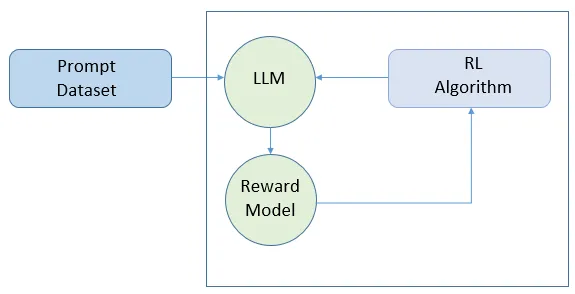

Reinforcement learning can draw on human or AI feedback: the former is called RLHF (reinforcement learning from human feedback) and the latter RLAIF (reinforcement learning from AI feedback). RLHF aims to align the LLM with human values, expectations, and standards. Human evaluators review, rate, and provide feedback on the model's outputs.

It is an iterative process. The human ratings are used to train a reward model, and the LLM is then optimized to produce outputs that the reward model scores highly. RLAIF can be used to scale this process by having an AI model provide feedback in place of human evaluators, which helps keep the model aligned with human values as the volume of feedback grows.
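One common way to turn such ratings into a reward model is to train it on pairs of outputs where evaluators preferred one over the other. The sketch below shows the pairwise preference loss often used for this; the article does not specify a training objective, so treat this as an assumed, illustrative choice. The function names are hypothetical, and the rewards are plain floats standing in for a reward model's scores.

```python
import math


def pairwise_preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Compute -log(sigmoid(reward_chosen - reward_rejected)) stably.

    The loss is small when the preferred output scores well above the
    rejected one, and large when the ordering is reversed.
    """
    margin = reward_chosen - reward_rejected
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


def mean_preference_loss(pairs: list[tuple[float, float]]) -> float:
    """Average the loss over (chosen_reward, rejected_reward) pairs."""
    return sum(pairwise_preference_loss(c, r) for c, r in pairs) / len(pairs)
```

Minimizing this loss over many rated pairs pushes the reward model to score preferred outputs higher, which is what the later optimization of the LLM relies on.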

Evaluating the Model

This stage involves the validation and testing of your LLM. The model is tested using unseen data (also referred to as test data), and the output is evaluated against a set of metrics and benchmarks. Common examples include the BLEU (Bilingual Evaluation Understudy) metric and the GLUE (General Language Understanding Evaluation) and HELM (Holistic Evaluation of Language Models) benchmarks.

Along with the set metrics, the results are also analyzed for adherence to ethical standards and the absence of biases. This ensures that your model is effective and relevant to your goals.
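As an illustration of how a metric like BLEU scores generated text, here is a simplified sentence-level version: clipped n-gram precision against a single reference, combined with a brevity penalty. It is a sketch under stated assumptions (whitespace tokenization, one reference, n-grams up to length 2, no smoothing), not the full corpus-level metric; for real evaluations an established implementation is the safer choice.

```python
import math
from collections import Counter


def ngram_counts(tokens: list[str], n: int) -> Counter:
    """Count all contiguous n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def simple_bleu(candidate: str, reference: str, max_n: int = 2) -> float:
    """Simplified BLEU: geometric mean of clipped n-gram precisions
    multiplied by a brevity penalty. Returns a value in [0, 1]."""
    cand = candidate.split()
    ref = reference.split()
    if not cand:
        return 0.0
    log_precisions = []
    for n in range(1, max_n + 1):
        cand_counts = ngram_counts(cand, n)
        ref_counts = ngram_counts(ref, n)
        # Clip each candidate n-gram count by its count in the reference.
        overlap = sum(min(c, ref_counts[g]) for g, c in cand_counts.items())
        total = sum(cand_counts.values())
        if total == 0 or overlap == 0:
            return 0.0  # no smoothing in this sketch
        log_precisions.append(math.log(overlap / total))
    # Penalize candidates that are shorter than the reference.
    bp = 1.0 if len(cand) > len(ref) else math.exp(1 - len(ref) / len(cand))
    return bp * math.exp(sum(log_precisions) / max_n)
```

A candidate identical to the reference scores 1.0, a candidate sharing no words scores 0.0, and a candidate that is correct but too short is pulled down by the brevity penalty.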

Model Optimization and Deployment

Model optimization is a prerequisite to the deployment process. You must ensure that the model is efficiently designed for your application environment. The process primarily includes the reduction of model size, enhancement of inference speed, and efficient operation of the model in real-world scenarios. It ensures faster inference using less memory.

Some common optimization techniques include:

- Distillation – it trains a smaller model (called the student model) to reproduce the behavior of a larger model (called the teacher model)

- Post-training quantization – it aims to reduce the precision of model weights, for example from 32-bit floating-point numbers to 8-bit integers

- Pruning – it focuses on removing the model weights that have negligible impact
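Two of the techniques listed above are simple enough to sketch on a plain list of weights. The example below shows symmetric 8-bit quantization and magnitude-based pruning; the function names, the per-tensor scale, and the fixed pruning threshold are assumptions for illustration, and production toolkits apply these ideas per layer or per channel with calibration data.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from the integer codes."""
    return [q * scale for q in quantized]


def prune_by_magnitude(weights: list[float], threshold: float) -> list[float]:
    """Set weights whose absolute value is below the threshold to zero."""
    return [w if abs(w) >= threshold else 0.0 for w in weights]
```

Quantization trades a small rounding error (at most half the scale per weight) for storing each weight in one byte, while pruning produces zeros that sparse storage formats and kernels can skip.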

This stage of the LLM project lifecycle concludes with the integration of the model into your existing workflows, systems, and architectures, so that the model is easy to access and operates smoothly.

Model Monitoring and Building LLM Applications

The LLM project lifecycle does not end at deployment. It is crucial to monitor the model’s performance in real-world situations and ensure its adaptability to evolving requirements. Monitoring also involves addressing any issues that arise and regularly updating the model parameters.

Finally, your model is ready for building robust LLM applications. These applications can cater to diverse goals, including automated content creation, advanced predictive analysis, and other solutions to complex problems.
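A very small sketch of what such monitoring can look like is a rolling average over recent quality scores that raises a flag when it drops below a threshold. The `RollingMonitor` class, the idea of a per-response score between 0 and 1, and the default window and threshold values are all hypothetical choices for this example; how responses are scored depends on your application.

```python
from collections import deque


class RollingMonitor:
    """Tracks the average of the most recent quality scores."""

    def __init__(self, window: int = 100, min_score: float = 0.8):
        self.scores = deque(maxlen=window)  # old scores fall off automatically
        self.min_score = min_score

    def record(self, score: float) -> None:
        self.scores.append(score)

    def average(self):
        """Return the mean of the current window, or None if it is empty."""
        if not self.scores:
            return None
        return sum(self.scores) / len(self.scores)

    def needs_attention(self) -> bool:
        """True once the window is full and its average is below the threshold."""
        window_full = len(self.scores) == self.scores.maxlen
        avg = self.average()
        return window_full and avg is not None and avg < self.min_score
```

Waiting for a full window before flagging avoids alerts based on only a handful of responses.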

Summarizing the LLM Project Lifecycle

In summary, the roadmap to completing an LLM project lifecycle is a complex trajectory involving multiple stages. Each stage caters to a unique aspect of the model development process. The final goal is to create a customized and efficient machine-learning model that you can deploy and use to build innovative LLM applications.