In the ever-evolving landscape of natural language processing (NLP), embedding techniques have played a pivotal role in enhancing the capabilities of language models.

The Birth of Word Embeddings

Before venturing into the large number of embedding techniques that have emerged in the past few years, we must first understand the problem that led to the creation of such techniques.

Word embeddings were created to address the lack of efficient text representations for NLP models. Machine learning models operate on numerical inputs, but NLP deals with text, which cannot be fed to such models directly. This raised a fundamental question: how can we convert text into a numerical format that these models can process?


Early NLP systems relied on basic approaches such as one-hot encoding and Bag-of-Words (BoW). These methods were eventually superseded because they fail to capture the contextual and semantic nuances of language. Each word is treated as an isolated unit, with no notion of its relationship to other words or of how its meaning changes across contexts. One-hot vectors are also sparse and as long as the vocabulary is large, and BoW discards word order entirely.
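To make these shortcomings concrete, here is a minimal Python sketch of both representations built over a tiny made-up corpus. The helper names (build_vocab, one_hot, bag_of_words) are illustrative and do not come from any library.

```python
from typing import Dict, List


def build_vocab(corpus: List[str]) -> Dict[str, int]:
    """Map each distinct lowercase word to an index, in first-seen order."""
    vocab: Dict[str, int] = {}
    for sentence in corpus:
        for word in sentence.lower().split():
            vocab.setdefault(word, len(vocab))
    return vocab


def one_hot(word: str, vocab: Dict[str, int]) -> List[int]:
    """All zeros except a single 1 at the word's index."""
    vec = [0] * len(vocab)
    vec[vocab[word]] = 1
    return vec


def bag_of_words(sentence: str, vocab: Dict[str, int]) -> List[int]:
    """Count each vocabulary word in the sentence; word order is thrown away."""
    vec = [0] * len(vocab)
    for word in sentence.lower().split():
        if word in vocab:
            vec[vocab[word]] += 1
    return vec


def dot(a: List[int], b: List[int]) -> int:
    return sum(x * y for x, y in zip(a, b))


corpus = ["the movie was good", "the movie was great", "the dog bit the man"]
vocab = build_vocab(corpus)

# "good" and "great" look exactly as unrelated as "good" and "dog":
print(dot(one_hot("good", vocab), one_hot("great", vocab)))  # 0
print(dot(one_hot("good", vocab), one_hot("dog", vocab)))    # 0

# BoW cannot tell who bit whom:
print(bag_of_words("the dog bit the man", vocab) == bag_of_words("the man bit the dog", vocab))  # True
```

Every pair of distinct one-hot vectors is orthogonal, so similarity between words cannot be expressed at all. The BoW vectors for the last two sentences are identical, even though the sentences mean different things.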

Word2Vec

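In 2013, researchers at Google introduced Word2Vec, a technique designed to overcome the shortcomings of these earlier representations. Word2Vec represents words as dense vectors in a continuous vector space, better known as an embedding space, where semantically similar words are located close to each other. The vectors are learned by training a shallow neural network either to predict a word from its neighbors (CBOW) or to predict the neighbors from a word (skip-gram). As a result, words that appear in similar contexts end up with similar vectors.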
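Word2Vec's skip-gram variant trains on (target, context) pairs taken from a sliding window over the text. The sketch below shows only that data-preparation step. The neural network itself and training tricks such as negative sampling are omitted. The function name and window size are illustrative assumptions. Libraries such as gensim provide complete Word2Vec training.

```python
from typing import List, Tuple


def skipgram_pairs(tokens: List[str], window: int = 2) -> List[Tuple[str, str]]:
    """Return (target, context) pairs for every word within `window` positions of the target."""
    pairs: List[Tuple[str, str]] = []
    for i, target in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:  # a word is not its own context
                pairs.append((target, tokens[j]))
    return pairs


pairs = skipgram_pairs("the quick brown fox jumps".split(), window=1)
print(pairs[:4])
# [('the', 'quick'), ('quick', 'the'), ('quick', 'brown'), ('brown', 'quick')]
```

The network is then trained so that each target word's vector is good at predicting its context words. Words that share many contexts are therefore pushed toward similar vectors.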

Word2Vec's approach contrasted with traditional methods such as one-hot encoding, which represent words as sparse, high-dimensional vectors. The dense vectors produced by Word2Vec had several advantages. They capture semantic relationships and support vector arithmetic (e.g., “king” − “man” + “woman” ≈ “queen”, meaning the vector closest to the result is the one for “queen”). They also improve performance on NLP tasks such as language modeling, sentiment analysis, and machine translation.
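Similarity in an embedding space is usually measured with cosine similarity. The analogy is solved by finding the vocabulary word whose vector lies closest to vec(king) − vec(man) + vec(woman), excluding the three input words. The 3-dimensional vectors below are hand-made for illustration (an assumption, not trained Word2Vec output), so the arithmetic lands on “queen” by construction. With real vectors, the answer is only the nearest neighbor.

```python
import math
from typing import Dict, List

# Hand-made vectors for illustration only (not trained Word2Vec output).
# Dimensions, by construction: [royalty, maleness, is-a-person]
vectors: Dict[str, List[float]] = {
    "king":  [0.9, 0.8, 1.0],
    "queen": [0.9, 0.1, 1.0],
    "man":   [0.1, 0.8, 1.0],
    "woman": [0.1, 0.1, 1.0],
    "apple": [0.0, 0.0, 0.1],
}


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between a and b: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def analogy(a: str, b: str, c: str, vecs: Dict[str, List[float]]) -> str:
    """Word whose vector is most cosine-similar to vec(a) - vec(b) + vec(c)."""
    target = [x - y + z for x, y, z in zip(vecs[a], vecs[b], vecs[c])]
    candidates = [w for w in vecs if w not in (a, b, c)]  # skip the query words
    return max(candidates, key=lambda w: cosine(vecs[w], target))


print(analogy("king", "man", "woman", vectors))  # queen
```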

Transition to GloVe and FastText

The success of Word2Vec paved the way for further innovations in the realm of word embeddings. The Global Vectors for Word Representation (GloVe) model, introduced by Stanford researchers in 2014, aimed to leverage global statistical information about word co-occurrences.

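The GloVe authors reported better results than Word2Vec on word-analogy and word-similarity benchmarks. The key difference lies in the training signal. Word2Vec learns from local context windows one at a time, whereas GloVe is trained on co-occurrence counts aggregated over the entire corpus, which gives it a more global view of word relationships.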
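GloVe starts from a word-word co-occurrence matrix X, where X_ij records how often word j appears near word i across the whole corpus. The sketch below builds that matrix using the 1/d distance weighting described in the GloVe paper. The tiny corpus and window size are illustrative. GloVe then learns word vectors w_i, context vectors w̃_j, and biases so that w_i · w̃_j + b_i + b̃_j approximates log X_ij under a weighted least-squares loss. That training step is not shown.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def cooccurrence_counts(sentences: List[List[str]], window: int = 2) -> Dict[Tuple[str, str], float]:
    """Aggregate symmetric co-occurrence counts over the whole corpus.

    Two words that are d positions apart add 1/d to each other's count.
    """
    counts: Dict[Tuple[str, str], float] = defaultdict(float)
    for tokens in sentences:
        for i, word in enumerate(tokens):
            for d in range(1, window + 1):
                j = i + d
                if j >= len(tokens):
                    break
                counts[(word, tokens[j])] += 1.0 / d
                counts[(tokens[j], word)] += 1.0 / d  # keep the matrix symmetric
    return dict(counts)


corpus = [s.split() for s in ["ice is cold", "steam is hot", "ice is solid"]]
X = cooccurrence_counts(corpus, window=2)
print(X[("ice", "is")])    # 2.0  (adjacent in two sentences)
print(X[("ice", "cold")])  # 0.5  (two positions apart, once)
```

Because the counts are summed over every sentence before any training happens, each entry reflects corpus-wide statistics rather than a single context window.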

Fast-forward to 2016, when Facebook’s FastText introduced a significant shift by incorporating sub-word information. Instead of learning one vector per whole word, FastText represents each word as a bag of character n-grams and builds the word’s vector from the vectors of those n-grams. This lets it capture morphological and semantic relationships in finer detail, especially for languages with rich morphology and complex word formation. The approach is particularly useful for out-of-vocabulary words, whose vectors can be assembled from n-grams seen during training. It also improves the representations of rare words.
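FastText pads each word with the boundary symbols < and > and extracts its character n-grams. The FastText paper uses lengths 3 to 6. It also keeps the whole padded word as an extra unit and represents a word as the sum of the vectors of all these units. The sketch below shows only the n-gram extraction step, and its function name is illustrative.

```python
from typing import List


def char_ngrams(word: str, min_n: int = 3, max_n: int = 6) -> List[str]:
    """Character n-grams of `word`, with '<' and '>' marking its boundaries."""
    padded = f"<{word}>"
    grams: List[str] = []
    for n in range(min_n, max_n + 1):
        for i in range(len(padded) - n + 1):
            grams.append(padded[i:i + n])
    return grams


print(char_ngrams("where", 3, 3))  # ['<wh', 'whe', 'her', 'ere', 're>']

# An unseen word still shares many sub-words with a word seen in training,
# so a vector can be assembled for it from the known n-gram vectors.
shared = set(char_ngrams("teachings")) & set(char_ngrams("teaching"))
print(len(shared))
```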

The Rise of Transformer Models

The real game-changer in the evolution of embedding techniques came with the Transformer architecture, introduced by Google researchers in the 2017 paper “Attention Is All You Need”. Transformers replace recurrence with self-attention, which lets every position in a sequence attend directly to every other position. As a result, they proved remarkably effective at capturing long-range dependencies and could be trained far more efficiently in parallel.
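At the core of the Transformer is scaled dot-product attention, softmax(QKᵀ / √d_k)V. Here the queries Q, keys K, and values V are linear projections of the token embeddings. The NumPy sketch below implements a single attention head with random stand-in weights, which a real model would learn. It omits masking, multiple heads, and positional encodings. Every token scores every other token in one matrix product, so distance in the sequence does not matter. Each output row is also a mixture of the whole sequence, which is what makes the resulting embeddings contextual.

```python
import numpy as np


def softmax(x: np.ndarray) -> np.ndarray:
    """Row-wise softmax; subtracting the row max avoids overflow without changing the result."""
    e = np.exp(x - x.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)


def scaled_dot_product_attention(Q: np.ndarray, K: np.ndarray, V: np.ndarray):
    """Return softmax(Q K^T / sqrt(d_k)) V and the attention weights."""
    d_k = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)  # (seq_len, seq_len): each token scores every token
    weights = softmax(scores)        # each row sums to 1
    return weights @ V, weights


rng = np.random.default_rng(0)
seq_len, d_model = 4, 8
X = rng.normal(size=(seq_len, d_model))  # stand-in token embeddings
# Projection matrices: random here, learned during training in a real model.
W_q, W_k, W_v = (rng.normal(size=(d_model, d_model)) for _ in range(3))

out, weights = scaled_dot_product_attention(X @ W_q, X @ W_k, X @ W_v)
print(out.shape)  # (4, 8): one context-dependent vector per token
```

Changing any one input token changes the output vectors of all the others, which is the behavior static embeddings lack.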

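This architecture laid the foundation for state-of-the-art models such as OpenAI’s GPT (Generative Pre-trained Transformer) series and Google’s BERT (Bidirectional Encoder Representations from Transformers). These models also changed what an embedding is. Instead of a single static vector per word, they produce contextual embeddings, where the vector for a word depends on the sentence around it. The word “bank”, for example, receives different representations in “river bank” and “bank account”.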

Impact of Embedding Techniques on Language Models

These advances in embedding techniques have significantly improved the performance and capabilities of large language models (LLMs). Pre-trained models such as GPT-3 and BERT learn their own token embeddings during pre-training. They refine them, layer by layer, into contextual representations that encode the context, semantics, and syntactic structure of the input. This ability to capture context lets them excel at a wide range of NLP tasks, including sentiment analysis, text summarization, and question answering.

Imagine the sentence: “The movie was not what I expected, but the plot twist at the end made it incredible.”

Traditional models might struggle with the negation in “not what I expected.” Static word embeddings could capture some of the sentiment but might miss the shift caused by the positive turn of events in the second half of the sentence.

In contrast, LLMs with contextualized embeddings can consider the entire sentence and comprehend the nuanced interplay of positive and negative sentiments. They grasp that the initial negativity is later counteracted by the positive twist, resulting in a more accurate sentiment analysis.
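A simple way to see the limitation of static embeddings is to look at the most basic sentence vector built from them: the average of the word vectors. An average ignores word order. The toy vectors below are hypothetical values chosen for illustration, but the point holds for any static embedding table.

```python
import math
from typing import Dict, List

# Hypothetical static vectors: one fixed vector per word, regardless of context.
static: Dict[str, List[float]] = {
    "the": [0.0, 0.0],
    "movie": [0.0, 0.25],
    "was": [0.0, 0.0],
    "good": [1.0, 0.5],
    "bad": [-1.0, 0.5],
    "not": [-0.5, 1.0],
}


def average_vector(sentence: str, table: Dict[str, List[float]]) -> List[float]:
    """Mean of the known word vectors; math.fsum makes each sum independent of order."""
    vecs = [table[w] for w in sentence.lower().split() if w in table]
    if not vecs:
        raise ValueError("no known words in sentence")
    return [math.fsum(dim) / len(vecs) for dim in zip(*vecs)]


a = average_vector("the movie was good not bad", static)
b = average_vector("the movie was bad not good", static)
print(a == b)  # True: opposite meanings, identical vectors
```

A contextual model avoids this because each token's representation is computed from the whole sentence, with positional information included.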

Advantages of Embeddings in LLMs

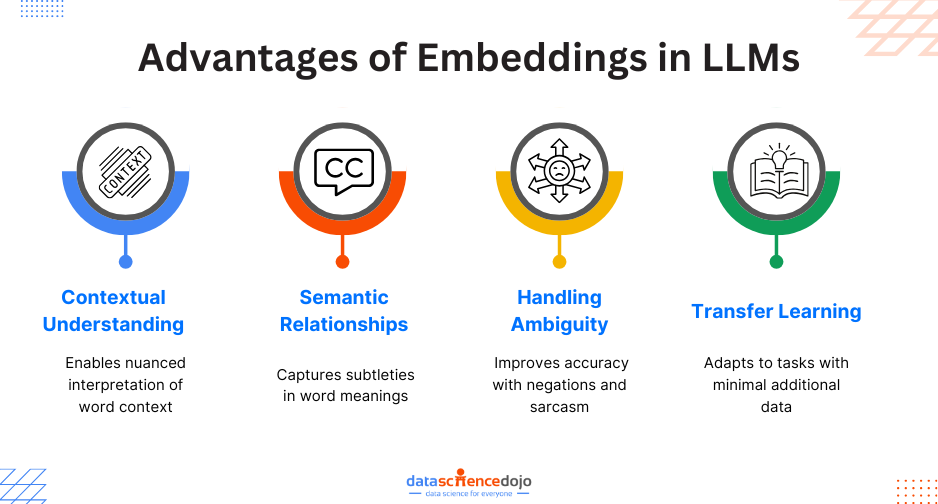

- Contextual Understanding: LLMs equipped with embeddings comprehend the context in which words appear, allowing for a more nuanced interpretation of sentiment in complex sentences.

- Semantic Relationships: Word embeddings capture semantic relationships between words, enabling the model to understand the subtleties and nuances of language.

- Handling Ambiguity: Contextual embeddings help LLMs handle ambiguous or tricky language constructs, such as words with multiple meanings, negation, or sarcasm, contributing to improved accuracy in sentiment analysis.

- Transfer Learning: The pre-training of LLMs with embeddings on vast datasets allows them to generalize well to various downstream tasks, including sentiment analysis, with minimal task-specific data.


How are Enterprises Using Embeddings in their LLM Processes?

Given these advances, enterprises are keen to harness the capabilities of LLMs to build comprehensive Software as a Service (SaaS) solutions. However, LLMs are pre-trained on broad, general-purpose datasets. Tailoring them to a specific use case typically requires bringing in proprietary data, for example by fine-tuning on it.

Fine-tuning, however, can be laborious and costly. A widely adopted alternative is Retrieval Augmented Generation (RAG). In RAG, relevant information is retrieved from an external source and converted into a form the LLM can use, typically text inserted into the prompt. It is then passed to the LLM, which generates its output grounded in that information.

This approach lets an LLM draw on knowledge beyond its original training data without changing the model’s weights. To make it work, you need an efficient way to store, retrieve, and feed relevant data to your LLM so that it answers accurately for your use case.

One of the most common ways to store and search unstructured data is to embed it and store the resulting vectors. At query time, the query is embedded with the same model, and the stored vectors most similar to it (typically by cosine similarity) are retrieved. This is why embeddings sit at the core of most RAG pipelines.
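Here is a minimal sketch of that store-then-search loop, followed by the final step of placing the retrieved text into a prompt. The embedder is a deliberately simple stand-in that uses normalized word counts over a fixed vocabulary. This is an assumption made so the example runs offline. It only matches exact words, whereas a real embedding model would also match related wording such as “return” and “refund”. The function names and prompt template are hypothetical. Production systems typically keep the vectors in a vector database with approximate nearest-neighbor search rather than in a Python list.

```python
import math
import re
from typing import Callable, Dict, List, Tuple

Vector = List[float]


def make_toy_embedder(corpus: List[str]) -> Callable[[str], Vector]:
    """Stand-in for a real embedding model: L2-normalized word counts over a fixed vocabulary."""
    vocab: Dict[str, int] = {}
    for text in corpus:
        for w in re.findall(r"[a-z0-9]+", text.lower()):
            vocab.setdefault(w, len(vocab))

    def embed(text: str) -> Vector:
        vec = [0.0] * len(vocab)
        for w in re.findall(r"[a-z0-9]+", text.lower()):
            if w in vocab:  # words outside the vocabulary are ignored
                vec[vocab[w]] += 1.0
        norm = math.sqrt(sum(v * v for v in vec)) or 1.0
        return [v / norm for v in vec]

    return embed


def retrieve(query_vec: Vector, index: List[Tuple[str, Vector]], k: int = 2) -> List[Tuple[float, str]]:
    """Top-k (score, text) pairs; vectors are unit length, so dot product = cosine similarity."""
    scored = [(sum(q * d for q, d in zip(query_vec, vec)), text) for text, vec in index]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


docs = [
    "Refund policy: returns are accepted within 30 days.",
    "The office is closed on public holidays.",
    "Shipping to Europe takes five business days.",
]
embed = make_toy_embedder(docs)
index = [(doc, embed(doc)) for doc in docs]  # embed and store once, ahead of time

query = "What is the refund policy for returns?"
context = "\n".join(text for _, text in retrieve(embed(query), index, k=1))
prompt = f"Answer using only this context:\n{context}\n\nQuestion: {query}"
print(context)  # Refund policy: returns are accepted within 30 days.
```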

Understanding the Creation of Embeddings

Like any other machine learning model, an embedding model is trained on large datasets. Many models can generate embeddings for you, and they differ in architecture, training data, and the dimensionality of the vectors they produce.

No single property makes one embedding model better than another for every task. One practical criterion when choosing a model is its maximum input length. Each model can process only a limited number of tokens at once, so longer documents must be split into chunks that fit within that limit. Checking this limit against your data is therefore a good starting point when selecting a model for your use case.
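A minimal chunking sketch follows. It assumes whitespace-separated words as a rough proxy for tokens. Real models count tokens with their own tokenizer, and for OpenAI models the tiktoken library gives exact counts. One word often maps to more than one token, so set max_tokens below your model's documented limit. The optional overlap repeats a few words between consecutive chunks so that a sentence cut at a boundary still appears whole in one chunk. The function name and parameters are hypothetical.

```python
from typing import List


def chunk_words(text: str, max_tokens: int, overlap: int = 0) -> List[str]:
    """Split `text` into chunks of at most `max_tokens` words, repeating `overlap` words between chunks."""
    if max_tokens <= 0 or not 0 <= overlap < max_tokens:
        raise ValueError("need max_tokens > 0 and 0 <= overlap < max_tokens")
    words = text.split()
    step = max_tokens - overlap
    chunks: List[str] = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_tokens]))
        if start + max_tokens >= len(words):  # last chunk reached the end of the text
            break
    return chunks


print(chunk_words("a b c d e f g", max_tokens=3, overlap=1))
# ['a b c', 'c d e', 'e f g']
```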

Creating embeddings with Azure OpenAI takes only a few lines of code. To embed a simple sentence such as “The food was delicious and the waiter…”, follow these steps:

- First, import AzureOpenAI from the openai Python package.

- Load your environment variables.

- Create your Azure OpenAI client.

- Create your embeddings.
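The four steps above can be sketched in Python as follows. This assumes version 1.x of the openai package and python-dotenv for loading a local .env file. The environment variable names AZURE_OPENAI_API_KEY and AZURE_OPENAI_ENDPOINT, the api_version value, and the deployment name in the usage note are assumptions; substitute the values from your own Azure resource. Steps 1 to 3 live in make_client and step 4 in embed_texts, which accepts any client object with the same interface.

```python
import os
from typing import Any, List


def make_client() -> Any:
    """Steps 1-3: import the SDK, load environment variables, build the client."""
    # Imported inside the function so the rest of this file loads without them.
    from dotenv import load_dotenv  # assumption: pip install python-dotenv
    from openai import AzureOpenAI  # pip install "openai>=1.0"

    load_dotenv()  # reads a local .env file, if present, into os.environ
    return AzureOpenAI(
        api_key=os.environ["AZURE_OPENAI_API_KEY"],         # assumed variable name
        azure_endpoint=os.environ["AZURE_OPENAI_ENDPOINT"],  # assumed variable name
        api_version="2024-02-01",  # assumption: use a version your resource supports
    )


def embed_texts(client: Any, texts: List[str], deployment: str) -> List[List[float]]:
    """Step 4: one embedding per input text, returned in input order."""
    # On Azure, `model` is the name you gave your embedding *deployment*.
    response = client.embeddings.create(input=texts, model=deployment)
    return [item.embedding for item in sorted(response.data, key=lambda item: item.index)]
```

For example, with a hypothetical deployment named my-embedding-deployment, embed_texts(make_client(), ["The food was delicious and the waiter…"], "my-embedding-deployment")[0] returns the sentence's vector. Its length depends on the embedding model behind your deployment.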

And you’re done! It’s really that simple to generate embeddings for your data. If you want to generate embeddings for an entire dataset, you can follow along with the example notebook provided by OpenAI itself.
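For a whole dataset, a common pattern is to send texts in batches rather than making one request per text. The sketch below is a generic helper, not OpenAI's notebook. embed_batch is any function that maps a list of texts to vectors in the same order, for example a thin wrapper around an embeddings API call. The batch size of 16 is an arbitrary assumption, so check your service's per-request limits. Production code would also add retry logic for rate limiting.

```python
from typing import Callable, Iterator, List, Sequence

Vector = List[float]


def batched(items: Sequence[str], size: int) -> Iterator[Sequence[str]]:
    """Yield consecutive slices of at most `size` items."""
    if size < 1:
        raise ValueError("size must be at least 1")
    for start in range(0, len(items), size):
        yield items[start:start + size]


def embed_dataset(
    texts: Sequence[str],
    embed_batch: Callable[[List[str]], List[Vector]],
    batch_size: int = 16,
) -> List[Vector]:
    """Embed every text, sending `batch_size` texts per call to `embed_batch`."""
    vectors: List[Vector] = []
    for batch in batched(texts, batch_size):
        batch_vectors = embed_batch(list(batch))
        # Guard against silently misaligned results.
        if len(batch_vectors) != len(batch):
            raise ValueError("embed_batch returned the wrong number of vectors")
        vectors.extend(batch_vectors)
    return vectors


# Usage with a dummy embedder that returns each text's length as a 1-D "vector":
print(embed_dataset(["a", "bb", "ccc"], lambda batch: [[float(len(t))] for t in batch], batch_size=2))
# [[1.0], [2.0], [3.0]]
```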

To Sum It Up!

The evolution of embedding techniques has revolutionized natural language processing, empowering language models with a deeper understanding of context and semantics. From Word2Vec to Transformer models, each advancement has enriched LLM capabilities, enabling them to excel in various NLP tasks.

Enterprises leverage techniques like Retrieval Augmented Generation, facilitated by embeddings, to tailor LLMs for specific use cases. Platforms like Azure OpenAI offer straightforward solutions for generating embeddings, underscoring their importance in NLP development. As we forge ahead, embeddings will remain pivotal in driving innovation and expanding the horizons of language understanding.