Vector embeddings refer to numerical representations of data in a continuous, high-dimensional vector space. The positions of data points in this space capture the semantic relationships and contextual information associated with them, so that related items lie close to one another.

With the advent of generative AI, the growing complexity of data has made vector embeddings a crucial part of modern information processing. They provide an efficient representation of complex, multi-dimensional data that is easier for AI algorithms to process.

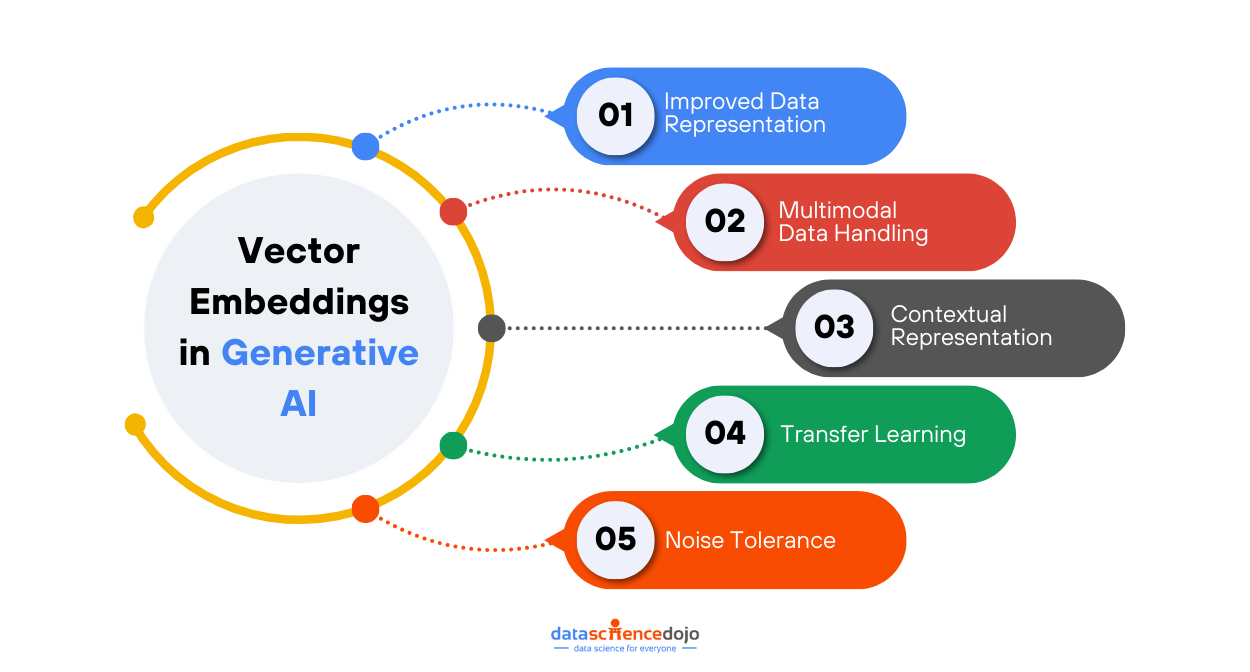

Key Role of Vector Embeddings in Generative AI

Generative AI relies on vector embeddings to understand the structure and semantics of input data. Let’s look at some of the key roles that embedded vectors play in making generative AI work.

Improved Data Representation

Improved data representation through vector embeddings involves transforming complex data into a more meaningful and compact form: dense vectors with a fixed number of dimensions. These embeddings effectively capture the semantic relationships within the data, allowing similar data items to be represented by similar vectors.
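To make "similar vectors" concrete, similarity between embeddings is commonly measured with cosine similarity. The sketch below uses small, hand-made vectors in plain Python; the words and numbers are hypothetical, and real embedding models produce hundreds of dimensions.

```python
import math

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical 4-dimensional embeddings chosen for illustration only.
embeddings = {
    "cat": [0.9, 0.8, 0.1, 0.0],
    "kitten": [0.85, 0.75, 0.2, 0.05],
    "car": [0.1, 0.0, 0.9, 0.8],
}

print(cosine_similarity(embeddings["cat"], embeddings["kitten"]))  # close to 1
print(cosine_similarity(embeddings["cat"], embeddings["car"]))     # much lower
```

Related items such as "cat" and "kitten" score close to 1, while unrelated ones score much lower.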


This coherent representation enhances the ability of AI models to process and generate outputs that are contextually relevant and semantically aligned. Additionally, vector embeddings are instrumental in capturing latent representations, which are underlying patterns and features within the input data that may not be immediately apparent.


By utilizing these embeddings, AI systems can achieve more nuanced and sophisticated interpretations of diverse data types, ultimately leading to improved performance and more insightful analysis in various applications.

Multimodal Data Handling

Multimodal data handling refers to the capability of processing and integrating multiple types of data, such as text, images, audio, and time-series data, to create more comprehensive AI models. A shared vector space allows for multimodal creativity, since generative AI is no longer restricted to a single form of data.


By representing different data types as vector embeddings, generative AI can generate creative outputs across various forms, such as producing an image from a text prompt.

This approach enhances the versatility and applicability of AI models, enabling them to understand and produce complex interactions between diverse data modalities, thereby leading to richer and more innovative AI-driven solutions.

Additionally, multimodal data handling allows AI systems to leverage the strengths of each data type, resulting in more accurate and contextually relevant outputs.
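One common way to handle several modalities is to project each one into a shared embedding space and compare them there. In the minimal sketch below, the projection matrices `W_IMAGE` and `W_TEXT` and all feature values are hypothetical; in a real system the projections would be learned so that matching image and text pairs land close together.

```python
import math

def matvec(matrix, vector):
    """Multiply a matrix (given as a list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

# Hypothetical projections into a 2-d shared space (normally learned from paired data).
W_IMAGE = [[1.0, 0.0, 0.0],   # 3-d image features -> 2-d shared space
           [0.0, 1.0, 0.0]]
W_TEXT = [[0.0, 0.5],         # 2-d text features -> 2-d shared space
          [0.5, 0.0]]

# Hypothetical raw features; note the two modalities start in different spaces.
image_features = {"dog.jpg": [1.0, 0.0, 0.5], "sunset.jpg": [0.0, 1.0, 0.5]}
text_features = {"a dog": [0.1, 2.0], "a sunset": [1.9, 0.2]}

def best_caption(image_name):
    """Return the caption whose shared-space vector is most similar to the image's."""
    img_vec = matvec(W_IMAGE, image_features[image_name])
    return max(text_features,
               key=lambda t: cosine(img_vec, matvec(W_TEXT, text_features[t])))

for name in image_features:
    print(name, "->", best_caption(name))
```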

Contextual Representation

Generative AI uses vector embeddings to control the style and content of outputs. The vector representations in latent spaces are manipulated to produce specific outputs that are representative of the contextual information in the input data.

This makes the generated output more relevant and coherent.
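Two simple latent-space manipulations are interpolating between two latent vectors and shifting a vector along an attribute direction. The sketch below assumes hypothetical 3-dimensional latent codes and estimates a "style" direction as the difference of two group means, which is one common heuristic; the edited vectors would then be passed to a generator, which is not shown.

```python
def lerp(z_a, z_b, t):
    """Linear interpolation between two latent vectors (t=0 gives z_a, t=1 gives z_b)."""
    return [(1 - t) * a + t * b for a, b in zip(z_a, z_b)]

def mean(vectors):
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]

def attribute_direction(with_attr, without_attr):
    """Difference of mean latent codes: a simple estimate of an attribute direction."""
    return [a - b for a, b in zip(mean(with_attr), mean(without_attr))]

# Hypothetical latent codes for samples with and without a "smiling" attribute.
smiling = [[0.9, 0.1, 0.3], [1.1, -0.1, 0.5]]
neutral = [[0.1, 0.0, 0.4], [-0.1, 0.2, 0.4]]
direction = attribute_direction(smiling, neutral)  # approximately [1.0, -0.1, 0.0]

z = [0.0, 0.5, 1.0]
z_edited = [x + 0.5 * d for x, d in zip(z, direction)]  # move halfway along the direction
midpoint = lerp(z, z_edited, 0.5)                       # a point between the two codes
print(direction, z_edited, midpoint)
```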

Transfer Learning

Transfer learning is a crucial concept in AI that involves using knowledge gained from one task to improve performance on another, related task. In the context of vector embeddings, transfer learning allows embeddings to be trained first on large datasets, where they capture general patterns and features.

These pre-trained embeddings are then transferred and fine-tuned for specific generative tasks, enabling AI algorithms to leverage existing knowledge effectively. This approach not only significantly reduces the amount of required training data for the new task but also accelerates the training process and improves the overall performance of AI models by building upon previously learned information.


By doing so, it enhances the adaptability and efficiency of AI systems across various applications.
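One common form of transfer learning keeps pre-trained embeddings frozen and trains only a small task-specific model on top. The sketch below assumes a hypothetical `PRETRAINED` embedding table and fits a logistic-regression head with plain gradient descent; full fine-tuning, which also updates the embeddings, is another option.

```python
import math

# Hypothetical "pre-trained" word embeddings; they stay frozen during training.
PRETRAINED = {
    "great": [0.9, 0.1], "excellent": [0.8, 0.2],
    "awful": [0.1, 0.9], "terrible": [0.2, 0.8],
}

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def train_head(examples, epochs=200, lr=0.5):
    """Fit only a logistic-regression head on top of the frozen embeddings."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for word, label in examples:
            x = PRETRAINED[word]  # frozen: the embedding itself is never updated
            p = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
            error = p - label     # gradient of the log-loss with respect to the logit
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
            bias -= lr * error
    return weights, bias

def predict(word, weights, bias):
    x = PRETRAINED[word]
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

# Only two labeled words are used for training.
weights, bias = train_head([("great", 1), ("awful", 0)])
print(predict("excellent", weights, bias))  # expected to be above 0.5
print(predict("terrible", weights, bias))   # expected to be below 0.5
```

Because "excellent" and "terrible" sit near "great" and "awful" in the embedding space, the head is expected to classify them correctly without having seen them during training.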

Noise Tolerance and Generalizability

Noise tolerance and generalizability in the context of vector embeddings refer to the ability of AI models to handle data imperfections effectively. Data is frequently characterized by noise and missing information, which can pose significant challenges for accurate analysis and prediction.

However, in continuous vector spaces, similar inputs map to nearby points, which allows meaningful outputs to be generated despite incomplete information. By encoding data into these spaces, vector embeddings can absorb a degree of the noise present in the data.

This capability is crucial for building robust models that are resilient to the variations and uncertainties inherent in real-world data. It also helps models generalize from uncertain data and still generate diverse and meaningful outputs.
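A small illustration of this tolerance: if concepts are well separated in the vector space, a nearest-neighbor lookup still finds the right item after its vector is perturbed. The stored vectors, the noise level, and the zero-imputation of a missing value below are all assumptions chosen for illustration.

```python
import math
import random

def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def nearest(query, items):
    """Return the key of the stored vector closest to the query."""
    return min(items, key=lambda k: euclidean(query, items[k]))

# Hypothetical stored embeddings for three well-separated concepts.
items = {
    "apple": [1.0, 0.0, 0.0, 0.0],
    "banana": [0.0, 1.0, 0.0, 0.0],
    "cherry": [0.0, 0.0, 1.0, 0.0],
}

rng = random.Random(0)  # fixed seed so the run is reproducible
noisy = [x + rng.gauss(0.0, 0.1) for x in items["banana"]]  # add small Gaussian noise
noisy[3] = 0.0  # simulate a missing value in the last dimension, imputed as zero

print(nearest(noisy, items))  # the corrupted vector still maps to "banana"
```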

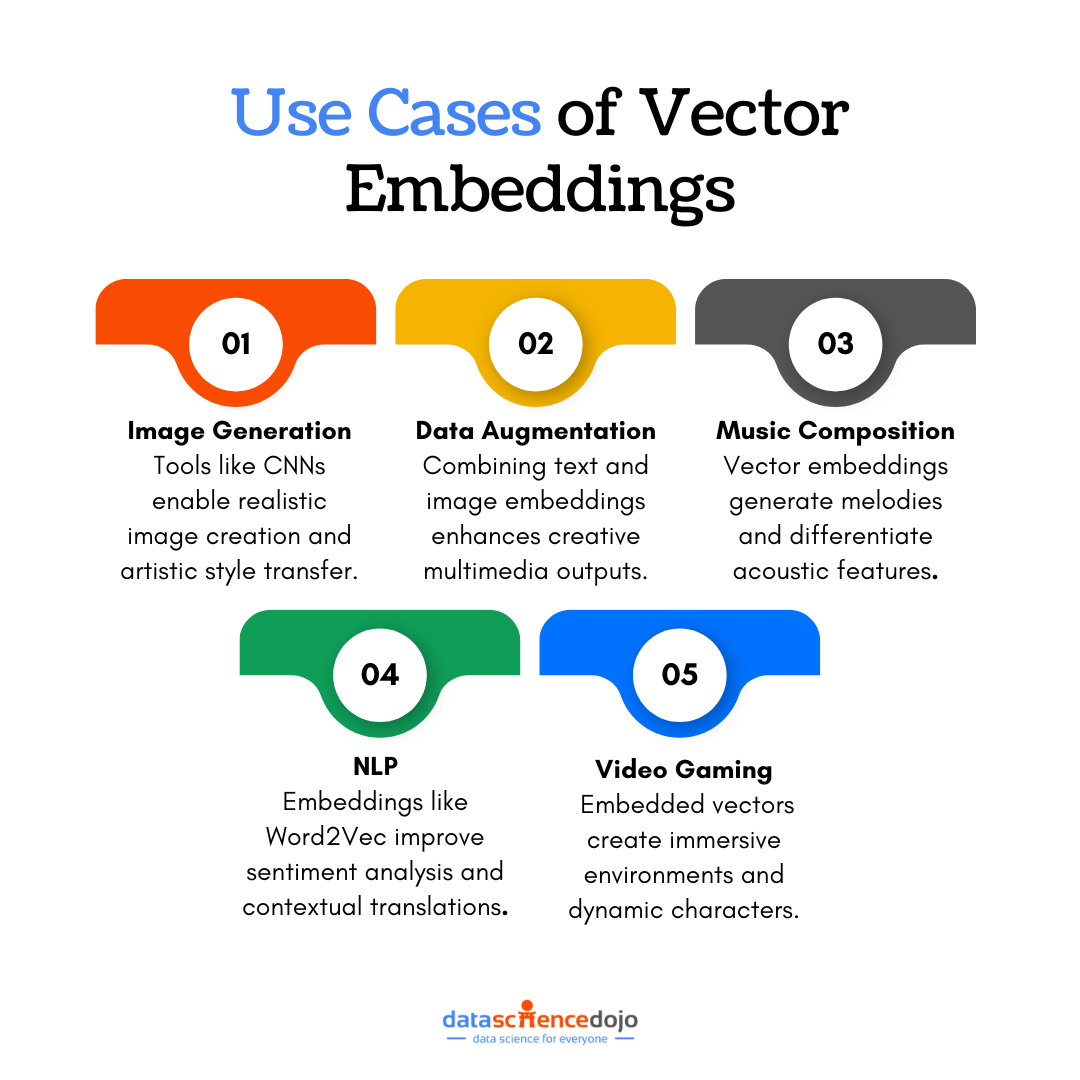

Use Cases of Vector Embeddings in Generative AI

There are different applications of vector embeddings in generative AI. While their use encompasses several domains, the following are some important use cases of embedded vectors:

Image generation

Image generation often relies on Generative Adversarial Networks (GANs), which turn latent vectors into realistic images. Manipulating these vectors lets a model change the style, color, and content of the images it produces. Vector embeddings also make it easier to transfer artistic style from one image to another.

The following are some common ways to produce image embeddings:

- CNNs

Convolutional Neural Networks (CNNs) extract image embeddings for tasks such as object detection and image classification. An image is passed through successive convolutional layers that build a hierarchy of visual features, from edges and textures up to object parts, and the final layers output a dense vector embedding of the image.

- Autoencoders

Autoencoders are neural networks trained to compress (encode) an input image into a low-dimensional vector embedding and then reconstruct (decode) the image from that embedding. The encoder's output serves as the image's embedding.
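To illustrate the CNN case, the sketch below applies one convolutional layer, a ReLU, and global average pooling to a toy image. The two filters are hand-written edge detectors standing in for learned weights (an assumption), so each embedding dimension measures how strongly one kind of edge appears.

```python
def conv2d(image, kernel):
    """'Valid' 2-D cross-correlation with stride 1, as computed by CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[i + di][j + dj] * kernel[di][dj]
                 for di in range(kh) for dj in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]

def relu(feature_map):
    return [[max(0.0, v) for v in row] for row in feature_map]

def global_average_pool(feature_map):
    values = [v for row in feature_map for v in row]
    return sum(values) / len(values)

# Hand-written filters standing in for learned CNN weights (hypothetical).
VERTICAL_EDGE = [[1, 0, -1], [1, 0, -1], [1, 0, -1]]
HORIZONTAL_EDGE = [[1, 1, 1], [0, 0, 0], [-1, -1, -1]]

def embed(image):
    """Conv + ReLU + global average pooling per filter -> a 2-d image embedding."""
    return [global_average_pool(relu(conv2d(image, k)))
            for k in (VERTICAL_EDGE, HORIZONTAL_EDGE)]

# 5x5 toy image: bright left columns, dark right columns (a vertical edge).
image = [[1, 1, 0, 0, 0] for _ in range(5)]
print(embed(image))  # [2.0, 0.0]
```

An image with a horizontal edge would instead produce a large second value, so the embedding separates the two kinds of images.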

Data Augmentation

Vector embeddings can integrate different types of data to build more robust and contextually relevant AI models. A common form of this augmentation is combining image and text embeddings. This is used mainly in chatbots and content creation tools, which work with multimedia content and require enhanced creativity.


Additionally, this approach enables models to better understand and generate complex interactions between visual and textual information, leading to more sophisticated AI applications.
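A simple way to combine image and text embeddings is to normalize each one and concatenate them, so that neither modality dominates because of its scale. The sketch below shows this concatenation-based fusion with hypothetical vectors; learned fusion layers are a common, more expressive alternative.

```python
import math

def l2_normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def fuse(image_emb, text_emb):
    """Normalize each modality to unit length, then concatenate into one vector."""
    return l2_normalize(image_emb) + l2_normalize(text_emb)

image_emb = [3.0, 4.0]       # hypothetical image embedding (length 5)
text_emb = [0.0, 0.0, 2.0]   # hypothetical text embedding (length 2)
print(fuse(image_emb, text_emb))  # [0.6, 0.8, 0.0, 0.0, 1.0]
```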

Music Composition

Musical notes and patterns are represented by vector embeddings that models can use to create new melodies. Audio embeddings represent the acoustic features of each instrument numerically, which helps the model differentiate between instruments during composition.

Some commonly used audio embeddings include:

- MFCCs

MFCCs (Mel Frequency Cepstral Coefficients) summarize the short-term power spectrum of audio on the mel scale, which approximates human pitch perception. Each short frame of audio yields a small vector of coefficients, and these vectors serve as embeddings that represent the sound content.

- CRNNs

CRNNs (Convolutional Recurrent Neural Networks) combine convolutional and recurrent layers, as the name suggests. The convolutional layers focus on spectral features, while the recurrent layers model the contextual sequencing of those features over time, producing embeddings of the audio.
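The MFCC pipeline can be sketched from scratch for a single audio frame: window the samples, compute the power spectrum, apply triangular mel filters, take the log, and decorrelate with a DCT. The parameter values (26 filters, 13 coefficients, a Hamming window, and the `2595 * log10(1 + f / 700)` mel formula) are common defaults assumed here, and the naive DFT is for clarity only; audio libraries such as librosa offer production implementations.

```python
import cmath
import math

def hz_to_mel(f):
    return 2595.0 * math.log10(1.0 + f / 700.0)

def mel_to_hz(m):
    return 700.0 * (10 ** (m / 2595.0) - 1.0)

def power_spectrum(frame):
    """|DFT|^2 / N for bins 0..N/2, via a naive O(N^2) DFT (real code would use an FFT)."""
    n = len(frame)
    return [abs(sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(frame))) ** 2 / n
            for k in range(n // 2 + 1)]

def mel_filterbank(n_filters, n_fft, sample_rate):
    """Triangular filters whose edges are evenly spaced on the mel scale."""
    low, high = hz_to_mel(0.0), hz_to_mel(sample_rate / 2)
    mel_points = [low + i * (high - low) / (n_filters + 1) for i in range(n_filters + 2)]
    bins = [int((n_fft + 1) * mel_to_hz(m) / sample_rate) for m in mel_points]
    filters = []
    for i in range(1, n_filters + 1):
        left, center, right = bins[i - 1], bins[i], bins[i + 1]
        weights = [0.0] * (n_fft // 2 + 1)
        for k in range(left, center):   # rising edge of the triangle
            weights[k] = (k - left) / (center - left)
        for k in range(center, right):  # falling edge of the triangle
            weights[k] = (right - k) / (right - center)
        filters.append(weights)
    return filters

def mfcc(frame, sample_rate, n_filters=26, n_mfcc=13):
    """MFCCs for one frame: window -> power spectrum -> mel filters -> log -> DCT-II."""
    n = len(frame)
    # Hamming window reduces spectral leakage at the frame edges.
    windowed = [x * (0.54 - 0.46 * math.cos(2 * math.pi * t / (n - 1))) for t, x in enumerate(frame)]
    spectrum = power_spectrum(windowed)
    energies = [sum(w * p for w, p in zip(f, spectrum))
                for f in mel_filterbank(n_filters, n, sample_rate)]
    log_energies = [math.log(e + 1e-10) for e in energies]  # epsilon avoids log(0)
    # Unnormalized DCT-II decorrelates the log energies; keep the first n_mfcc values.
    return [sum(le * math.cos(math.pi * c * (m + 0.5) / n_filters) for m, le in enumerate(log_energies))
            for c in range(n_mfcc)]

sample_rate = 8000
frame = [math.sin(2 * math.pi * 440 * t / sample_rate) for t in range(256)]  # 32 ms of a 440 Hz tone
coefficients = mfcc(frame, sample_rate)
print(len(coefficients))  # 13
```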


Natural Language Processing (NLP)

NLP uses vector embeddings in language models to generate coherent and contextual text. The embeddings can also help detect the underlying sentiment of words and phrases and ensure that the final output reflects it.
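As a rough illustration of how embeddings can carry sentiment, the sketch below averages hypothetical word vectors into a sentence vector and projects it onto a sentiment direction built from "wonderful" minus "boring". Averaging is only a simple baseline; language models build much richer, context-aware representations.

```python
# Hypothetical 3-d word embeddings; dimension 0 loosely tracks sentiment here.
WORD_VECTORS = {
    "the": [0.0, 0.1, 0.1], "movie": [0.0, 0.8, 0.2], "was": [0.0, 0.1, 0.0],
    "wonderful": [0.9, 0.1, 0.3], "boring": [-0.8, 0.2, 0.1],
}

def sentence_embedding(sentence):
    """Average the vectors of known words (assumes at least one known word)."""
    vectors = [WORD_VECTORS[w] for w in sentence.lower().split() if w in WORD_VECTORS]
    return [sum(col) / len(vectors) for col in zip(*vectors)]

def sentiment_score(sentence):
    """Project the sentence vector onto the 'wonderful' minus 'boring' direction."""
    direction = [a - b for a, b in zip(WORD_VECTORS["wonderful"], WORD_VECTORS["boring"])]
    emb = sentence_embedding(sentence)
    return sum(e * d for e, d in zip(emb, direction))

print(sentiment_score("The movie was wonderful"))  # positive
print(sentiment_score("The movie was boring"))     # negative
```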

Word embeddings can capture the semantic meaning of words and their relationships within a language. The following image shows how NLP integrates word embeddings with sentiment to produce more coherent results.

Some common text embeddings used in natural language processing include:

- Word2Vec

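Word2Vec trains a shallow neural network to represent each word as a dense vector that captures its semantic relationships with other words. It relies on the distributional hypothesis (words that appear in similar contexts tend to have similar meanings), training the network to predict a word from its context (CBOW) or the context from a word (skip-gram).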

- GloVe

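GloVe stands for Global Vectors for Word Representation. It learns word vectors from global word co-occurrence statistics gathered over local context windows, combining global and local contextual information. GloVe embeddings are commonly used in tasks such as sentiment analysis and machine translation.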

- BERT

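BERT stands for Bidirectional Encoder Representations from Transformers. It is a transformer model pre-trained to predict masked words in sentences using context from both the left and the right. Its outputs are context-rich embeddings, in which the same word receives different vectors in different sentences.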
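The training signals behind Word2Vec and GloVe can be sketched on a toy sentence. `skipgram_pairs` produces the (center, context) pairs that the skip-gram variant of Word2Vec learns to predict, and `cooccurrence_counts` builds distance-weighted co-occurrence counts (a pair `d` words apart adds `1/d`, as in GloVe). Both helper names are hypothetical, and the neural network and the GloVe loss themselves are not shown.

```python
from collections import Counter

def skipgram_pairs(tokens, window=2):
    """(center, context) training pairs for Word2Vec's skip-gram objective."""
    pairs = []
    for i, center in enumerate(tokens):
        for j in range(max(0, i - window), min(len(tokens), i + window + 1)):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs

def cooccurrence_counts(tokens, window=2):
    """Distance-weighted co-occurrence counts: the global statistics GloVe is fit to."""
    counts = Counter()
    for i, center in enumerate(tokens):
        for j in range(max(0, i - window), min(len(tokens), i + window + 1)):
            if j != i:
                counts[(center, tokens[j])] += 1.0 / abs(i - j)
    return counts

tokens = "the cat sat on the mat".split()
print(skipgram_pairs(tokens, window=1))
print(cooccurrence_counts(tokens, window=2)[("cat", "on")])  # 0.5: two words apart
```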

Video Game Development

Another important use of vector embeddings is in video game development. Generative AI uses embeddings to create game environments, characters, and other assets. These embedded vectors also help ensure that the various elements are linked to the game’s theme and context.


Challenges and Considerations in Vector Embeddings for Generative AI

Vector embeddings are crucial in improving the capabilities of generative AI. However, it is important to understand the challenges associated with their use and relevant considerations to minimize the difficulties. Here are some of the major challenges and considerations:

Data Quality and Quantity: The quality and quantity of data used to learn the vector embeddings and train models determine the performance of generative AI. Missing or incomplete data can negatively impact the trained models and final outputs.

It is crucial to carefully preprocess the data to handle outliers and missing information, so that the embedded vectors are learned efficiently. Moreover, the dataset must represent a variety of scenarios to provide comprehensive results.
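A minimal preprocessing sketch, assuming the training data arrives as rows of numbers with `None` or `NaN` marking missing values: it drops incomplete rows and then removes outliers with the modified z-score (based on the median and the median absolute deviation), using the commonly cited cut-off of 3.5. The function name and threshold are illustrative choices, not a fixed recipe.

```python
import math
import statistics

def is_missing(x):
    return x is None or (isinstance(x, float) and math.isnan(x))

def clean_rows(rows, threshold=3.5):
    """Drop rows with missing values, then rows that are outliers in any column."""
    complete = [r for r in rows if not any(is_missing(x) for x in r)]
    keep = [True] * len(complete)
    for col in zip(*complete):
        med = statistics.median(col)
        mad = statistics.median([abs(x - med) for x in col])
        if mad == 0:
            continue  # no spread in this column; skip it rather than divide by zero
        for i, x in enumerate(col):
            # Modified z-score: robust to the outliers it is trying to detect.
            if abs(0.6745 * (x - med) / mad) > threshold:
                keep[i] = False
    return [r for r, k in zip(complete, keep) if k]

rows = [[1.0, 2.0], [1.1, 2.1], [None, 2.0], [0.9, 1.9], [1.05, float("nan")], [50.0, 2.0]]
print(clean_rows(rows))  # [[1.0, 2.0], [1.1, 2.1], [0.9, 1.9]]
```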

Ethical Concerns and Data Biases: Since vector embeddings encode the available information, any biases in training data are included and represented in the generative models, producing unfair results that can lead to ethical issues.

It is essential to be careful in data collection and model training processes. Fairness-aware embeddings can help reduce data bias, and regular audits of model outputs can help ensure fair results.
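One simple bias-mitigation step, similar in spirit to the "neutralize" step of hard debiasing for word embeddings, removes the component of a vector that lies along an estimated bias direction. The vectors below are hypothetical, and this step reduces only one measurable form of bias; it does not by itself guarantee fair outputs.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def neutralize(vector, bias_direction):
    """Remove the component of `vector` that lies along `bias_direction`."""
    scale = dot(vector, bias_direction) / dot(bias_direction, bias_direction)
    return [v - scale * b for v, b in zip(vector, bias_direction)]

# Hypothetical embeddings; the bias direction is estimated from a definitional pair.
he, she = [1.0, 0.2, 0.5], [-1.0, 0.2, 0.5]
gender_direction = [a - b for a, b in zip(he, she)]     # [2.0, 0.0, 0.0]
doctor = [0.4, 0.7, 0.3]                                # leans toward "he" in this toy data
doctor_debiased = neutralize(doctor, gender_direction)  # [0.0, 0.7, 0.3]
print(dot(doctor, gender_direction), dot(doctor_debiased, gender_direction))
```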

Computation-Intensive Processing: Model training with vector embeddings can be a computation-intensive process. The computational demand is particularly high for large or high-dimensional embeddings.

Hence, it is important to consider the available resources and, where needed, use distributed training techniques to speed up processing.
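A quick estimate shows why large embedding tables are demanding. The sizes below are hypothetical; the formula is simply rows times dimensions times bytes per value.

```python
def embedding_table_bytes(num_items, dim, bytes_per_value=4):
    """Memory for a dense embedding table: rows x dimensions x bytes per value."""
    return num_items * dim * bytes_per_value

# Hypothetical sizes: 1 million items, 768 dimensions, float32 (4-byte) values.
size = embedding_table_bytes(1_000_000, 768)
print(size)           # 3072000000 bytes
print(size / 2**30)   # about 2.86 GiB for the weights alone
```

Training typically needs several times more memory, because gradients and optimizer state are stored alongside the weights (the Adam optimizer, for example, keeps two extra values per parameter), which is one reason distributed training is used.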


Future of Vector Embeddings in Generative AI

In the future, the link between vector embeddings and generative AI is expected to strengthen. Reliance on high-dimensional data representations can help cater to the growing complexity of generative AI.

As AI technology progresses, efficient data representations through vector embeddings will become necessary for smooth operation. Moreover, vector embeddings can improve interpretability by linking human-readable data, such as words and images, to the numerical representations that algorithms compute with.

When projected down to two or three dimensions, these embeddings can also be visualized, which supports a better understanding of complex information and relationships in data and enhances representation, processing, and analysis.
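Visualization usually requires reducing embeddings to two or three dimensions. The sketch below projects vectors onto their top two principal components (PCA) using power iteration in plain Python; the data are hypothetical, and libraries such as scikit-learn provide optimized PCA and t-SNE implementations.

```python
import random

def normalize(v):
    norm = sum(x * x for x in v) ** 0.5
    return [x / norm for x in v]

def project_2d(vectors, iterations=200, seed=0):
    """Project vectors onto their top two principal components (PCA via power iteration)."""
    n, d = len(vectors), len(vectors[0])
    mean = [sum(col) / n for col in zip(*vectors)]
    centered = [[x - m for x, m in zip(v, mean)] for v in vectors]
    # d x d covariance matrix of the centered data.
    cov = [[sum(r[i] * r[j] for r in centered) / n for j in range(d)] for i in range(d)]
    rng = random.Random(seed)
    components = []
    for _ in range(2):
        v = normalize([rng.random() for _ in range(d)])
        for _ in range(iterations):
            w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
            if sum(x * x for x in w) == 0:
                break  # no variance left to capture in this direction
            v = normalize(w)
        components.append(v)
        # Hotelling deflation: remove this component so the next one is orthogonal to it.
        eigenvalue = sum(v[i] * cov[i][j] * v[j] for i in range(d) for j in range(d))
        cov = [[cov[i][j] - eigenvalue * v[i] * v[j] for j in range(d)] for i in range(d)]
    return [[sum(c * x for c, x in zip(comp, row)) for comp in components] for row in centered]

# Hypothetical 4-d embeddings: most variation lies along [1, 1, 0, 0].
embeddings = [[3.0, 3.0, 0.1, 0.0], [-3.0, -3.0, -0.1, 0.0],
              [1.0, 1.0, 0.5, 0.0], [-1.0, -1.0, -0.5, 0.0]]
for point in project_2d(embeddings):
    print(point)  # (x, y) coordinates ready for a scatter plot
```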

Hence, the future of generative AI puts vector embeddings at the center of its progress and development.