Generative AI may soon be as essential as the internet, reshaping how we work, create, and interact. It can automate complex tasks in seconds, making life easier, but only if you know how to communicate with it effectively. That’s where prompt engineering comes in.

Think of AI as a genius with incredible potential but no direction. It’s waiting for you to guide it, and the better your instructions, the better the results.

To get there, you need to learn how to craft clear, concise prompts that match the outcome you want. Done well, prompt engineering is the key to unlocking AI’s full power. Let’s explore why it matters, then dig into some must-know prompting techniques.

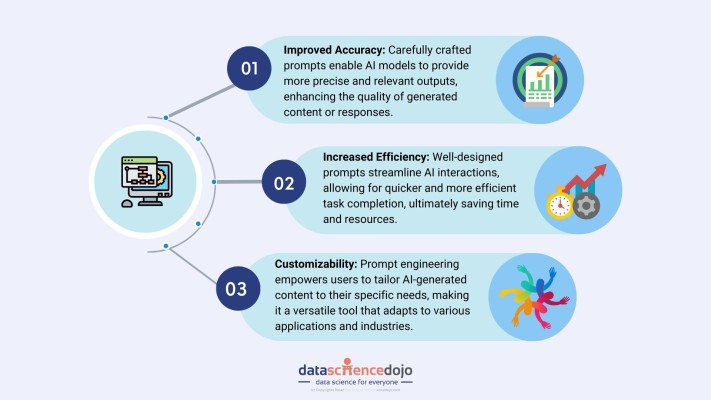

What Makes Prompt Engineering Critical?

Prompt engineering is quickly becoming the secret weapon for getting the most out of AI tools, leading to better productivity and smarter workflows. By crafting clear and precise prompts, you can guide AI to deliver accurate, relevant, high-quality outputs, saving time and cutting down on frustration.

Why It Matters More Than Ever

With AI becoming a go-to tool in industries like customer support, content creation, and data analysis, the ability to communicate effectively with these tools is a game-changer. Well-designed prompts don’t make the model itself smarter, but they make its answers more useful and your job easier.

- Imagine an AI chatbot that doesn’t just reply, but provides detailed, on-point solutions for your customers.

- Or a tool that generates content ideas that perfectly align with your brand’s tone, audience, and goals.

That’s the power of prompt engineering.

Real-Life Wins with Better Prompts

- In Customer Support: AI chatbots like ChatGPT, guided by the right prompts, can answer customer queries instantly with personalized responses. The result? Faster resolutions and happier customers.

- For Content Creators: Struggling with writer’s block? Using tailored prompts, marketers generate catchy ad copy, SEO-optimized blog ideas, and even social media captions effortlessly.

- In Data Analysis: Analysts use prompt-driven tools to summarize complex datasets, identify trends, and even spot anomalies—all in a fraction of the time it would take manually.

Whether you’re streamlining a process, engaging with customers, or brainstorming the next big campaign, mastering prompt engineering can take your AI game to the next level. It is fast becoming an essential skill for staying ahead in an AI-driven world.

How Does Prompt Engineering Work?

At its core, prompt engineering is all about communication—telling AI exactly what you need in a way it understands. Think of it like giving instructions to a highly intelligent assistant. The clearer and more specific you are, the better the results.

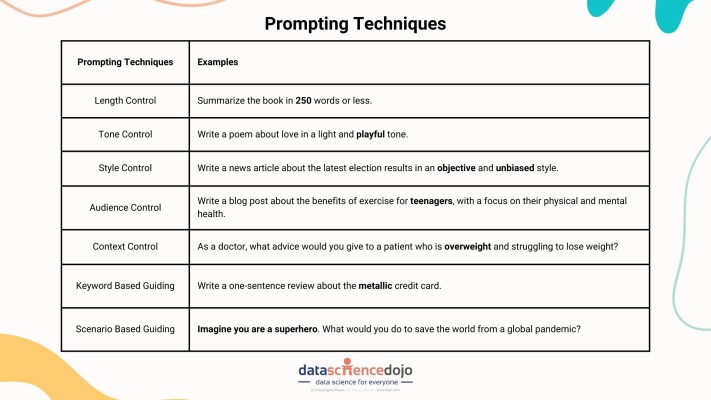

There are different types of prompting techniques you can use:

- Length Control – Sometimes, you need a quick summary instead of a long-winded response. With length control, you can tell AI exactly how much to say—whether it’s a tweet-sized answer or a detailed paragraph.

- Tone Control – Ever noticed how the same words can feel different based on how they’re said? Tone control helps shape AI’s response to be formal, playful, empathetic, or anything in between, making sure it matches the mood you want.

- Style Control – Writing styles vary—news articles are factual, while stories are creative. This technique ensures AI sticks to the right style, whether you need something professional, casual, or even poetic.

- Audience Control – A blog post for teenagers shouldn’t sound like a research paper. This technique tailors AI’s responses based on who’s reading—ensuring it’s engaging, relatable, and relevant to the target audience.

- Context Control – Giving AI a role, like “You’re a doctor advising a patient,” helps it generate more relevant and informed responses. This technique ensures answers align with the scenario you’re working with.

- Keyword-Based Guiding – Need AI to focus on certain words? This technique makes sure important terms or phrases appear in the response, keeping content aligned with specific themes or branding.

- Scenario-Based Guiding – Want creative or problem-solving answers? By placing AI in a hypothetical situation, like imagining it’s a superhero saving the world, you can generate unique, out-of-the-box responses.
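To make these controls concrete, here is a minimal Python sketch that turns each one into an explicit instruction line in a single prompt. `PromptSpec` and `build_prompt` are hypothetical names chosen for illustration, not part of any AI tool's API, and the exact wording of each instruction is an assumption you would tune for your own model.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class PromptSpec:
    """Hypothetical container: one optional field per prompting control."""
    task: str
    role: Optional[str] = None        # context control, e.g. "a doctor advising a patient"
    scenario: Optional[str] = None    # scenario-based guiding
    audience: Optional[str] = None    # audience control
    tone: Optional[str] = None        # tone control
    style: Optional[str] = None       # style control
    max_words: Optional[int] = None   # length control
    keywords: List[str] = field(default_factory=list)  # keyword-based guiding


def build_prompt(spec: PromptSpec) -> str:
    """Turn every control that is set into one explicit instruction line."""
    lines = []
    if spec.role:
        lines.append(f"You are {spec.role}.")
    if spec.scenario:
        lines.append(f"Scenario: {spec.scenario}")
    lines.append(f"Task: {spec.task}")
    if spec.audience:
        lines.append(f"Audience: {spec.audience}.")
    if spec.tone:
        lines.append(f"Tone: {spec.tone}.")
    if spec.style:
        lines.append(f"Style: {spec.style}.")
    if spec.max_words:
        lines.append(f"Length: at most {spec.max_words} words.")
    if spec.keywords:
        lines.append("Use these terms: " + ", ".join(spec.keywords) + ".")
    return "\n".join(lines)


print(build_prompt(PromptSpec(
    task="Explain how to reset a forgotten password.",
    role="a friendly customer-support agent",
    audience="first-time users",
    tone="warm and reassuring",
    max_words=80,
    keywords=["Settings", "reset link"],
)))
```

Keeping each control on its own line makes it easy to switch one off or change it while you compare outputs.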

Let’s take a deeper look at the principles that govern prompt engineering:

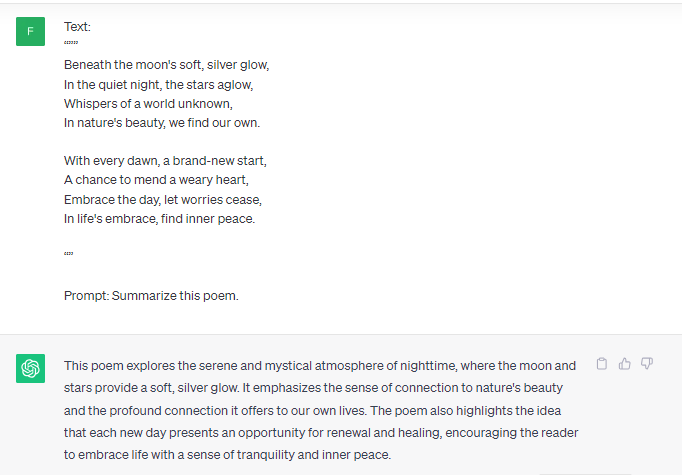

1. Be Clear and Specific

The clearer your prompts, the better the model’s output. Precision in your instructions helps guide the model toward the right results. Here’s how to improve your prompt clarity:

- Use delimiters: Delimiters such as square brackets […], angle brackets <…>, triple quotes """, triple dashes ---, and triple backticks ``` help define the structure and context of your request. They act as clear signals for the model, indicating how the input should be interpreted and formatted. For instance, using square brackets around certain elements can make the intent of the prompt more obvious to the model.

- Separate text from the prompt: A clear distinction between your request and the supporting text enhances the model’s understanding of the task at hand. For example, place instructions or questions in distinct parts, allowing the model to recognize and prioritize the actual prompt over any extra details. A clear separation ensures that the model processes only the relevant information.

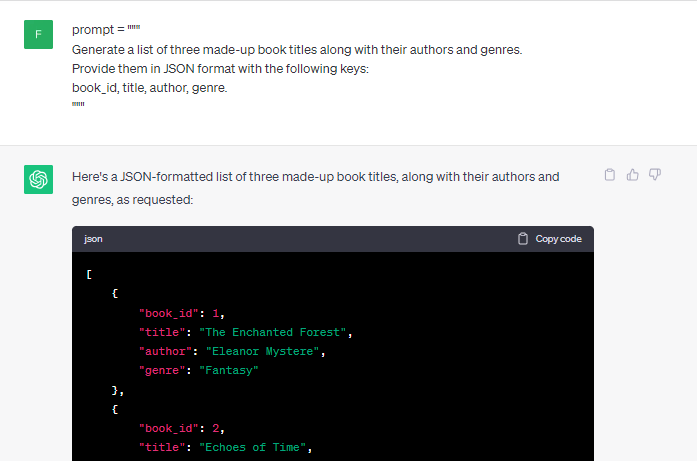

- Ask for a structured output: When you need information in a specific format, such as JSON, HTML, or XML, ask the model directly. Structured outputs are easier to parse and work with, especially for complex tasks. By specifying the format upfront, the model is better equipped to give you the precise type of response you need.

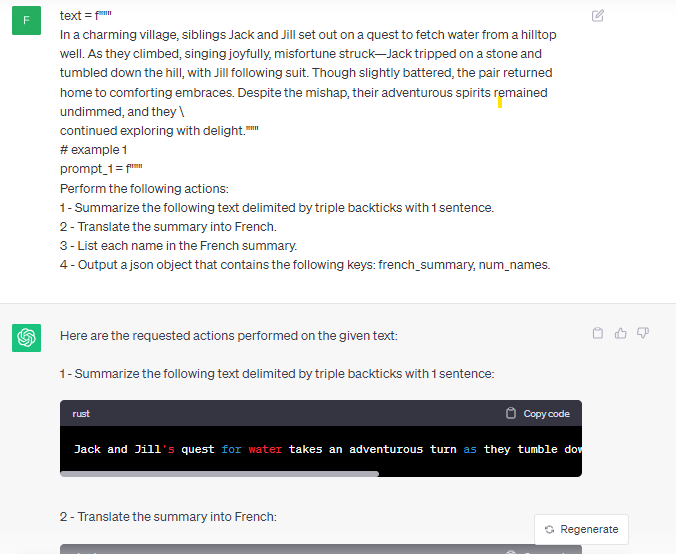
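The three tips above can be combined in a small Python sketch: the instructions and the input text are kept apart with triple backticks, the prompt asks for JSON, and the reply is parsed with the standard `json` module. The function names, the review text, and the model reply are hypothetical placeholders; no real model is called here.

```python
import json

FENCE = "`" * 3  # triple backticks, built from one character so the snippet has no literal fence


def build_extraction_prompt(review: str) -> str:
    """Put the instructions first and the delimited input text after them."""
    instructions = (
        "Read the customer review delimited by triple backticks. "
        'Return only a JSON object with the keys "sentiment" '
        '("positive", "negative" or "neutral") and "product".'
    )
    return f"{instructions}\n\n{FENCE}\n{review}\n{FENCE}"


def parse_reply(reply: str) -> dict:
    """Parse the model's reply, raising ValueError if it is not the JSON we asked for."""
    data = json.loads(reply)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = [key for key in ("product", "sentiment") if key not in data]
    if missing:
        raise ValueError(f"reply is missing keys: {missing}")
    return data


prompt = build_extraction_prompt("The blender is loud, but it crushes ice in seconds.")
print(prompt)

reply = '{"sentiment": "positive", "product": "blender"}'  # hypothetical model reply
print(parse_reply(reply)["sentiment"])  # positive
```

Validating the reply in code means a malformed answer fails loudly instead of slipping quietly into later steps of your workflow.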

2. Give the LLM Time to Think

Large language models (LLMs) can sometimes rush to conclusions, especially when tasked with complex requests. Slowing down the process and guiding the model through its reasoning leads to more accurate results. Here’s how you can help the model take its time:

- Specify the steps required to complete the task: Instead of expecting an immediate answer, break down the task into manageable steps. Instruct the model to follow these steps in sequence. This will help ensure the task is approached systematically, leading to more refined and correct outputs.

- Instruct the model to seek its own solution: Rather than asking the model for an answer straight away, ask it to think through the problem and analyze its approach first. This helps prevent rushed or incorrect conclusions, because the model works through its reasoning before committing to a response.

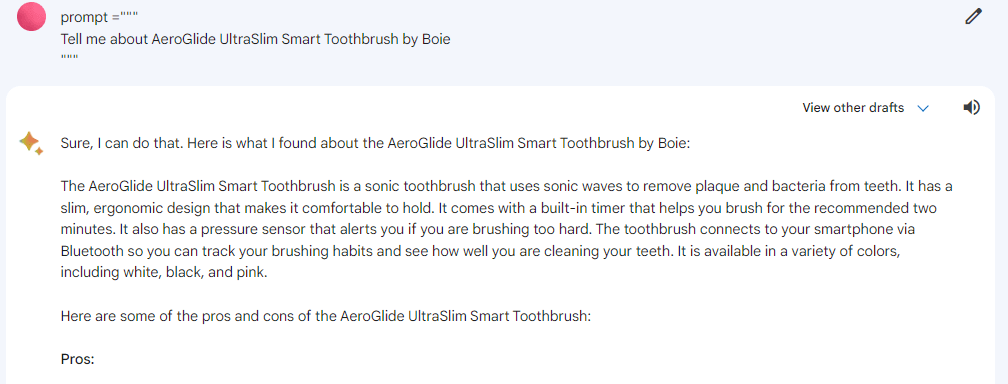
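As a rough sketch of both ideas, the Python helper below writes the steps out explicitly, and the example steps ask the model to solve the problem itself before judging a proposed answer. `build_stepwise_prompt` is a hypothetical helper and its wording is only one possible phrasing; it builds the prompt text and does not call any model.

```python
from typing import List


def build_stepwise_prompt(task: str, steps: List[str]) -> str:
    """List the steps the model should follow, one numbered line per step."""
    lines = [f"Task: {task}", "Work through the following steps in order:"]
    for number, step in enumerate(steps, start=1):
        lines.append(f"Step {number}: {step}")
    lines.append("Show your reasoning for each step before giving your final answer.")
    return "\n".join(lines)


# The steps make the model solve the problem itself before judging the answer.
prompt = build_stepwise_prompt(
    task=(
        "Decide whether the proposed answer is correct. "
        "Problem: What is 15% of 80? Proposed answer: 12."
    ),
    steps=[
        "Work out your own solution to the problem.",
        "Compare your solution with the proposed answer.",
        "Only then state whether the proposed answer is correct.",
    ],
)
print(prompt)
```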

3. Know the Limitations of the Model

While LLMs are becoming increasingly sophisticated, they still have limitations, especially when dealing with hypothetical scenarios or providing insights on things that don’t exist. Understanding these constraints helps you set realistic expectations.

- Handle hypothetical scenarios carefully: LLMs can sometimes treat hypothetical questions as if the concepts involved are real. For example, if you ask about a product or technology that doesn’t exist, an LLM may still generate an answer as if it does. Being aware of this can help you phrase your prompts to avoid confusion or inaccuracies.

To illustrate this point, we asked Gemini to provide information about a hypothetical toothpaste:

4. Iterate, Iterate, Iterate

Rarely does a single prompt lead to the desired results on the first try. The real success in prompt engineering comes from constant refinement and iteration. Here’s how to approach it:

- Continuous improvement: After receiving the model’s initial response, evaluate its accuracy and completeness. If needed, refine your prompt or add more specific details to guide the model toward a better outcome. This cycle of adjusting and re-prompting is key to achieving high-quality results.

- Step-by-step prompting: Sometimes breaking down your request into smaller, more manageable steps can yield better results. Instead of asking for a complex answer all at once, prompt the model step-by-step to ensure the output is more precise.
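The evaluate-and-refine cycle can be sketched in Python as a loop: send the prompt, check the reply against a simple criterion, and add a more specific instruction when something is missing. Here the check is only whether required terms appear, an assumed stand-in for whatever evaluation you actually care about, and `fake_model` is a hypothetical stub in place of a real LLM call.

```python
from typing import Callable, List, Tuple


def refine_until_complete(
    prompt: str,
    required_terms: List[str],
    ask_model: Callable[[str], str],
    max_rounds: int = 3,
) -> Tuple[str, str]:
    """Re-prompt with more specific instructions until the reply covers every
    required term or max_rounds calls have been made.

    Returns the last prompt sent and the reply it produced.
    """
    reply = ask_model(prompt)
    for _ in range(max_rounds - 1):
        # Evaluate: which required terms does the reply still leave out?
        missing = [t for t in required_terms if t.lower() not in reply.lower()]
        if not missing:
            break
        # Refine: add a more specific instruction, then ask again.
        prompt += "\nMake sure your answer explicitly covers: " + ", ".join(missing) + "."
        reply = ask_model(prompt)
    return prompt, reply


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call; it mentions pricing only when asked."""
    if "pricing" in prompt:
        return "Our plan covers setup, support and pricing."
    return "Our plan covers setup and support."


final_prompt, final_reply = refine_until_complete(
    "Summarize our onboarding plan.", ["support", "pricing"], fake_model
)
print(final_reply)  # Our plan covers setup, support and pricing.
```

In practice the check might be a human review or a stricter automated test; the shape of the loop stays the same.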

The Goal: To Master Prompt Engineering

In conclusion, prompt engineering is the gateway to unlocking the full potential of generative AI. By mastering this skill, you can guide AI to produce more accurate, efficient, and creative outputs, ultimately enhancing human-machine collaboration. As AI continues to shape various industries, prompt engineering will be the key to ensuring it works in harmony with our needs, driving innovation and transforming the way we interact with technology.