Knowledge graphs (KGs) and large language models (LLMs) are among the building blocks of the most recent advances in artificial intelligence (AI). Combining the two produces a system that has access to a vast network of factual information and can also understand complex language.

Such a system can use this access to answer questions, generate text, and handle other NLP tasks. This blog explores the potential of integrating knowledge graphs and LLMs and how the combination could transform AI.

Introducing Knowledge Graphs and LLMs

Before looking at the impact and methods of integrating KGs and LLMs, let’s define the two concepts.

What are Knowledge Graphs (KGs)?

A knowledge graph is a web of information that connects factual data in a meaningful way. Each entity is represented as a node, and edges represent the relationships between nodes. This structured representation allows a computer to recognize both the information itself and the relationships between data points.
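
To make the node-and-edge idea concrete, here is a minimal Python sketch that stores facts as (subject, relation, object) triples and indexes the outgoing edges of each node. The `KnowledgeGraph` class and the sample facts are illustrative assumptions rather than any graph database’s API; production systems usually rely on a dedicated triple store or graph database.

```python
from collections import defaultdict


class KnowledgeGraph:
    """A toy KG: every fact is a (subject, relation, object) triple.

    Entities become nodes; each relation is a labelled, directed edge.
    """

    def __init__(self):
        self.triples = set()
        # node -> list of (relation, neighbour) pairs for its outgoing edges
        self.out_edges = defaultdict(list)

    def add(self, subject, relation, obj):
        triple = (subject, relation, obj)
        if triple not in self.triples:  # ignore duplicate facts
            self.triples.add(triple)
            self.out_edges[subject].append((relation, obj))

    def neighbours(self, node):
        """Return the (relation, node) pairs one hop away from `node`."""
        return list(self.out_edges.get(node, []))


# Illustrative sample facts
kg = KnowledgeGraph()
kg.add("Marie Curie", "born_in", "Warsaw")
kg.add("Warsaw", "capital_of", "Poland")
kg.add("Marie Curie", "field", "Physics")

print(kg.neighbours("Marie Curie"))
# [('born_in', 'Warsaw'), ('field', 'Physics')]
```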

KGs organize data to highlight connections and reveal new relationships in a dataset. They also improve search results, since a knowledge graph adds contextual information that helps surface more relevant matches.
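
One simple way a KG can surface new relationships is rule-based inference. The sketch below assumes a hypothetical `located_in` relation that is treated as transitive and keeps deriving new facts until none remain; the facts and the `infer_transitive` helper are made up for illustration.

```python
# Illustrative facts; "located_in" is assumed to be transitive.
facts = {
    ("Louvre", "located_in", "Paris"),
    ("Paris", "located_in", "Ile-de-France"),
    ("Ile-de-France", "located_in", "France"),
}


def infer_transitive(triples, relation):
    """Apply (a r b) and (b r c) => (a r c) until no new facts appear."""
    known = set(triples)
    while True:
        pairs = [(s, o) for s, r, o in known if r == relation]
        new = {
            (a, relation, d)
            for a, b in pairs
            for c, d in pairs
            if b == c and (a, relation, d) not in known
        }
        if not new:
            return known
        known |= new


inferred = infer_transitive(facts, "located_in") - facts
for triple in sorted(inferred):
    print(triple)
# ('Louvre', 'located_in', 'France')
# ('Louvre', 'located_in', 'Ile-de-France')
# ('Paris', 'located_in', 'France')
```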

What are Large Language Models (LLMs)?

LLMs are deep learning models used for general-purpose language generation and other natural language processing (NLP) tasks. They are trained on massive amounts of text to produce human-quality writing.

Large language models have revolutionized human-computer interaction, and there is room for further advances. However, LLMs have limited factual grounding: they can produce high-quality, grammatically correct text that is nonetheless factually inaccurate.

Combining KGs and LLMs

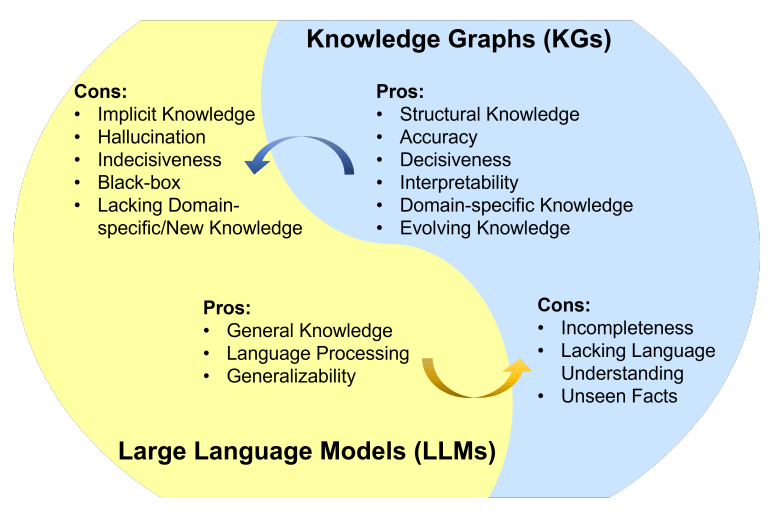

Within AI and NLP, integrating KGs and LLMs has the potential to open up new avenues of exploration. Knowledge graphs cannot understand language, but they are good at storing factual data. LLMs, by contrast, excel at language understanding but lack factual grounding.

Combining the two addresses the weaknesses of each, since the strengths of one cover the limitations of the other. The integration pairs the natural language understanding of LLMs with the structured, interlinked data representation of knowledge graphs, improving both data processing and language understanding.

Some key impacts of this integration include:

Enhanced Information Retrieval

Integrating LLMs with knowledge graphs can significantly improve information retrieval systems. For instance, Google has been working on enhancing its search engine by combining LLMs like BERT with its extensive knowledge graph. This allows for a better understanding of search queries by considering the relationships and context provided by the knowledge graph, leading to more relevant and accurate search results.
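
Google has not published its pipeline at this level of detail, so the following is only a simplified stand-in for the idea: a hypothetical query-expansion step that links entities mentioned in a query to a toy KG, adds their neighbours as extra search terms, and then ranks documents by word overlap. The `RELATED` map, `expand_query`, and `score` are illustrative names; real systems use far richer entity linking and ranking.

```python
# Toy KG neighbourhoods: entity -> related entities (illustrative data).
RELATED = {
    "jaguar": ["big cat", "rainforest"],
    "python": ["programming language", "guido van rossum"],
}


def expand_query(query):
    """Add the KG neighbours of any entity mentioned in the query."""
    terms = set(query.lower().split())
    for entity, neighbours in RELATED.items():
        if entity in terms:
            for neighbour in neighbours:
                terms.update(neighbour.split())
    return terms


def score(doc, terms):
    """Count how many query terms appear in the document."""
    return len(terms & set(doc.lower().split()))


docs = [
    "The jaguar car has a powerful engine",
    "The jaguar is a big cat of the rainforest",
]
terms = expand_query("jaguar habitat")
best = max(docs, key=lambda d: score(d, terms))
print(best)  # The jaguar is a big cat of the rainforest
```

Without expansion, both documents would match only the word "jaguar"; the KG context is what breaks the tie toward the animal.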

Improved Conversational Agents

LLMs are already being used in virtual assistants like Siri and Alexa for natural language processing. By integrating these models with knowledge graphs, these agents can access structured data to provide more precise and contextually relevant responses.
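
A common way to give an assistant access to structured data is to look up facts in the KG and place them in the LLM’s prompt. This sketch assumes the entity has already been identified (real assistants need an entity-linking step) and stops before the model call, since any chat-completion API could be used there. `KG`, `facts_about`, and `build_grounded_prompt` are hypothetical names.

```python
# Hypothetical facts the assistant can look up (illustrative data).
KG = [
    ("Eiffel Tower", "located_in", "Paris"),
    ("Eiffel Tower", "has_height", "330 m"),
    ("Louvre", "located_in", "Paris"),
]


def facts_about(entity):
    """Return every triple whose subject is `entity`."""
    return [t for t in KG if t[0] == entity]


def build_grounded_prompt(question, entity):
    """Put the entity's KG facts in front of the question for an LLM."""
    fact_lines = [
        f"- {s} {r.replace('_', ' ')} {o}" for s, r, o in facts_about(entity)
    ]
    return (
        "Answer using only these facts:\n"
        + "\n".join(fact_lines)
        + f"\n\nQuestion: {question}"
    )


prompt = build_grounded_prompt("How tall is the Eiffel Tower?", "Eiffel Tower")
print(prompt)
# The prompt would next be sent to an LLM; that call is omitted here.
```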

Advanced Recommendation Systems

LLMs can interpret user preferences and sentiments from unstructured data, while knowledge graphs can map these preferences against a structured network of related items, offering more personalized and context-aware recommendations. This approach can be particularly useful for companies like Amazon and Netflix.
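
The structured half of such a recommender can be sketched as a small item graph in which films link to attribute nodes such as genre and director. The hypothetical `recommend` function scores unseen items by how many attribute nodes they share with items the user liked. The catalogue is invented, and the step where an LLM would extract preferences from free text is left out.

```python
from collections import Counter

# Hypothetical item graph: each film links to attribute nodes via KG edges.
ITEM_EDGES = {
    "Film A": {"genre:sci-fi", "director:X"},
    "Film B": {"genre:sci-fi", "director:Y"},
    "Film C": {"genre:romance", "director:X"},
    "Film D": {"genre:romance", "director:Z"},
}


def recommend(liked, k=2):
    """Rank unseen items by attribute nodes shared with liked items."""
    liked_attrs = Counter()
    for item in liked:
        liked_attrs.update(ITEM_EDGES[item])
    scores = {
        item: sum(liked_attrs[a] for a in attrs)
        for item, attrs in ITEM_EDGES.items()
        if item not in liked
    }
    # Highest score first; ties broken alphabetically for stable output.
    return sorted(scores, key=lambda i: (-scores[i], i))[:k]


# In a full system, an LLM could fill `liked` from a user's reviews.
print(recommend(["Film A"]))  # ['Film B', 'Film C']
```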

Scientific Research and Discovery

In fields like drug discovery, integrating LLMs with knowledge graphs can facilitate the exploration of existing research data and the generation of new hypotheses. For instance, IBM’s Watson has been used in healthcare to analyze vast amounts of medical literature. By combining its NLP capabilities with a knowledge graph of medical terms and relationships, researchers can uncover previously unknown connections between diseases and potential treatments.
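
In graph terms, a candidate hypothesis can be a path that links a disease to a compound through intermediate genes or proteins. The sketch below runs breadth-first search over a few placeholder triples; the entity names are invented and describe no real findings, and this is not a description of how Watson works. `build_undirected` and `shortest_path` are hypothetical helpers.

```python
from collections import deque

# Placeholder biomedical triples (invented names, not real findings).
EDGES = [
    ("Disease1", "associated_with", "GeneA"),
    ("GeneA", "encodes", "ProteinP"),
    ("CompoundX", "inhibits", "ProteinP"),
    ("CompoundY", "binds", "ProteinQ"),
]


def build_undirected(edges):
    """Index edges in both directions so paths can traverse them either way."""
    graph = {}
    for s, r, o in edges:
        graph.setdefault(s, []).append((r, o))
        graph.setdefault(o, []).append((f"inverse:{r}", s))
    return graph


def shortest_path(graph, start, goal):
    """Breadth-first search; returns [node, relation, node, ...] or None."""
    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == goal:
            return path
        for rel, nxt in graph.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(path + [rel, nxt])
    return None


graph = build_undirected(EDGES)
print(shortest_path(graph, "Disease1", "CompoundX"))
```

The printed path (Disease1 to GeneA to ProteinP to CompoundX) would only be a lead for researchers to evaluate, not evidence in itself.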

Now that we understand the impact of this integration, let’s look at some proposed methods for combining these two technologies.

Frameworks to Combine KGs and LLMs

It is one thing to talk about combining knowledge graphs and large language models; implementing the idea requires planning and research. Researchers have proposed three frameworks for integrating KGs and LLMs to produce better outputs.

In this section, we will explore these three frameworks, which were described in a paper published in IEEE Transactions on Knowledge and Data Engineering.

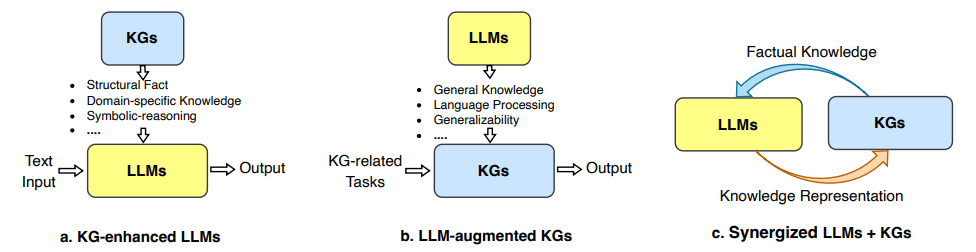

KG-Enhanced LLMs

This framework focuses on using knowledge graphs to train LLMs. During training, the factual knowledge and relationship links in KGs become available to the LLM alongside traditional text data, so the model can learn from the information the KGs contain.
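
One simple way to expose KG facts to a model during training is to verbalize triples into sentences and mix them into the text corpus. The following is a sketch of template-based verbalization under that assumption; the `TEMPLATES` table and `verbalize` function are hypothetical, and other KG-enhanced training approaches integrate graph information more directly.

```python
# Hypothetical templates that turn a relation type into an English sentence.
TEMPLATES = {
    "born_in": "{s} was born in {o}.",
    "capital_of": "{s} is the capital of {o}.",
}


def verbalize(triples):
    """Convert KG triples into plain sentences for a training corpus."""
    sentences = []
    for s, r, o in triples:
        template = TEMPLATES.get(r)
        if template is not None:
            sentences.append(template.format(s=s, o=o))
        else:
            # Fallback for relations without a hand-written template.
            sentences.append(f"{s} {r.replace('_', ' ')} {o}.")
    return sentences


corpus_extra = verbalize([
    ("Marie Curie", "born_in", "Warsaw"),
    ("Warsaw", "capital_of", "Poland"),
    ("Marie Curie", "won", "Nobel Prize in Physics"),
])
for line in corpus_extra:
    print(line)
```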

As a result, LLMs can gain factual accuracy and grounding from the KG data. The KG can also serve as a reference for fact-checking model outputs, helping the model produce more accurate and informative results.
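
Fact-checking against a KG can be sketched as comparing claims from a model’s answer with stored triples. The sketch assumes the claims have already been extracted as triples by a separate step and that `born_in` allows only one value per person; `KG`, `FUNCTIONAL`, and `check_claim` are hypothetical.

```python
# Hypothetical reference KG and the relations assumed to be single-valued.
KG = {("Marie Curie", "born_in", "Warsaw"), ("Warsaw", "capital_of", "Poland")}
FUNCTIONAL = {"born_in"}


def check_claim(claim, kg=KG):
    """Label a (subject, relation, object) claim against the KG."""
    s, r, _ = claim
    if claim in kg:
        return "supported"
    # A single-valued relation with a different stored object is a conflict.
    if r in FUNCTIONAL and any(ks == s and kr == r for ks, kr, _ in kg):
        return "contradicted"
    return "unverified"


# Claims assumed to come from an LLM answer via a separate extraction step.
claims = [
    ("Marie Curie", "born_in", "Warsaw"),
    ("Marie Curie", "born_in", "Paris"),
    ("Marie Curie", "field", "Chemistry"),
]
for c in claims:
    print(c, check_claim(c))
```

Returning "unverified" rather than "false" reflects that a missing triple does not prove a claim wrong; the KG may simply be incomplete.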

LLM-Augmented KGs

This design reverses the direction of the first framework. Instead of KGs enhancing LLMs, it uses the reasoning power of large language models to improve knowledge graphs. The LLMs act as assistants that help curate and improve the information a KG represents.

LLMs can also be used to find problems and inconsistencies in the connections within a KG. Their reasoning abilities further let them infer new relationships, enriching the graph.
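
An LLM’s suggestions still need checking before they enter the graph. The sketch below replaces the LLM with a stub (`fake_llm_propose`) and wraps it in two rule-based guards: one flags conflicting values for a relation assumed to be single-valued, and one rejects proposed triples whose entity types do not match a hypothetical schema. These guards are illustrative additions, not part of the framework as described; `SCHEMA`, `TYPES`, and the helper names are all assumptions.

```python
# Hypothetical schema: relation -> (expected subject type, expected object type).
SCHEMA = {"born_in": ("Person", "City"), "capital_of": ("City", "Country")}
TYPES = {"Marie Curie": "Person", "Warsaw": "City", "Paris": "City", "Poland": "Country"}
FUNCTIONAL = {"born_in"}  # relations assumed to allow a single value

kg = {
    ("Marie Curie", "born_in", "Warsaw"),
    ("Marie Curie", "born_in", "Paris"),  # conflicting fact to be flagged
}


def find_conflicts(triples):
    """Flag subjects with more than one value for a single-valued relation."""
    values = {}
    for s, r, o in triples:
        if r in FUNCTIONAL:
            values.setdefault((s, r), set()).add(o)
    return {key: objs for key, objs in values.items() if len(objs) > 1}


def fake_llm_propose():
    """Stand-in for an LLM suggesting new triples (canned output)."""
    return [("Warsaw", "capital_of", "Poland"), ("Poland", "capital_of", "Warsaw")]


def type_checks(triple):
    """Accept a triple only if its entity types match the schema."""
    s, r, o = triple
    expected = SCHEMA.get(r)
    return expected is not None and (TYPES.get(s), TYPES.get(o)) == expected


print(find_conflicts(kg))
accepted = [t for t in fake_llm_propose() if type_checks(t)]
print(accepted)
```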

This builds a pathway to create more comprehensive and reliable knowledge graphs, benefiting from the reasoning and inference abilities of LLMs.

Synergized LLMs + KGs

This framework proposes a mutually beneficial relationship between the two AI components, in which each improves the other through a feedback loop. It is designed as a continuous learning cycle between LLMs and KGs.

It can be viewed as a concept that combines the two above-mentioned frameworks into a single design where knowledge graphs enhance language model outputs and LLMs analyze and improve KGs.

The result is a dynamic cycle in which KGs and LLMs continually improve each other. This iterative design aims to produce a more capable and intelligent system overall.
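
A toy version of this loop fits in a few lines. The KG is passed to a stand-in for the LLM as grounding context, the stand-in proposes new triples, and only proposals that pass a type check are written back; the loop stops when a round adds nothing new. `stub_llm`, `SCHEMA`, `TYPES`, and `synergy_loop` are hypothetical, and a real system would call an actual model.

```python
# Hypothetical type information and schema used to validate proposals.
TYPES = {"Warsaw": "City", "Poland": "Country"}
SCHEMA = {"capital_of": ("City", "Country"), "located_in": ("City", "Country")}


def stub_llm(facts):
    """Stand-in for an LLM: proposes new triples from the facts it is given.

    It 'reasons' that a capital is located in its country, and also emits
    one wrong-direction suggestion to show validation at work.
    """
    proposals = [(s, "located_in", o) for s, r, o in facts if r == "capital_of"]
    proposals.append(("Poland", "located_in", "Warsaw"))  # should be rejected
    return proposals


def is_valid(triple):
    s, r, o = triple
    return SCHEMA.get(r) == (TYPES.get(s), TYPES.get(o))


def synergy_loop(kg, max_rounds=5):
    """Alternate KG -> LLM (grounding) and LLM -> KG (enrichment)."""
    for round_no in range(1, max_rounds + 1):
        context = sorted(kg)                 # KG grounds the model
        proposals = stub_llm(context)        # model proposes candidate facts
        new = {t for t in proposals if is_valid(t)} - kg
        if not new:
            return kg, round_no
        kg = kg | new                        # validated facts enrich the KG
    return kg, max_rounds


final_kg, rounds = synergy_loop({("Warsaw", "capital_of", "Poland")})
print(rounds, sorted(final_kg))
```

The wrong-direction suggestion fails the type check in every round, so the graph gains one fact in the first round and the loop stops in the second.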

Of the three frameworks for integrating KGs and LLMs, synergized LLMs + KGs is considered the most advanced. It aims to unlock the full potential of both technologies, supporting AI systems with stronger reasoning, knowledge representation, and text generation capabilities.

Future of LLM and KG Integration

The combination of Large Language Models (LLMs) and knowledge graphs is paving the way for an AI landscape that’s smarter and more capable than ever before. By merging the adaptability and creativity of language models with the precision and dependability of structured data, this integration is opening up a world of new possibilities across various sectors.

Imagine real-time decision-making, ethical AI solutions, and highly personalized user experiences—all made possible by this powerful synergy. Whether in healthcare, education, or finance, the applications are not only exciting but also transformative.

As this blend continues to develop, we are on the brink of achieving AI that is not just powerful but also transparent, reliable, and focused on human needs. The future of AI innovation is unfolding right before us, driven by the harmonious collaboration of LLMs and knowledge graphs.