Self-driving cars were once a futuristic dream, but today, Tesla Dojo is bringing groundbreaking innovation to the field. It is not just reshaping Tesla’s self-driving technology but also setting new standards for AI infrastructure. In a field dominated by giants like Nvidia and Google, Tesla’s bold move into custom-built AI hardware is turning heads – and for good reason.

But what makes Tesla Dojo so special, and why does it matter?

In this blog, we will dive into what makes Tesla Dojo so revolutionary, from its specialized design to its potential to accelerate AI advancements across industries. Whether you’re an AI enthusiast or just curious about the future of technology, Tesla Dojo is a story you won’t want to miss.

What is Tesla Dojo?

Tesla Dojo is Tesla’s groundbreaking AI supercomputer, purpose-built to train deep neural networks for autonomous driving. First unveiled during Tesla’s AI Day in 2021, Dojo represents a leap in Tesla’s mission to enhance its Full Self-Driving (FSD) and Autopilot systems.

But what makes Dojo so special, and how does it differ from traditional AI training systems?

At its core, Tesla Dojo is designed to handle the massive computational demands of training AI models for self-driving cars. Its main purpose is to process the vast amounts of driving data collected from Tesla vehicles and to run simulations that improve the performance of its FSD technology.

Unlike traditional autonomous vehicle systems that use sensors like LiDAR and radar, Tesla’s approach is vision-based, relying on cameras and advanced neural networks to mimic human perception and decision-making for fully autonomous driving.

Now that we know what Dojo is for, let's look at what this supercomputer is made of.

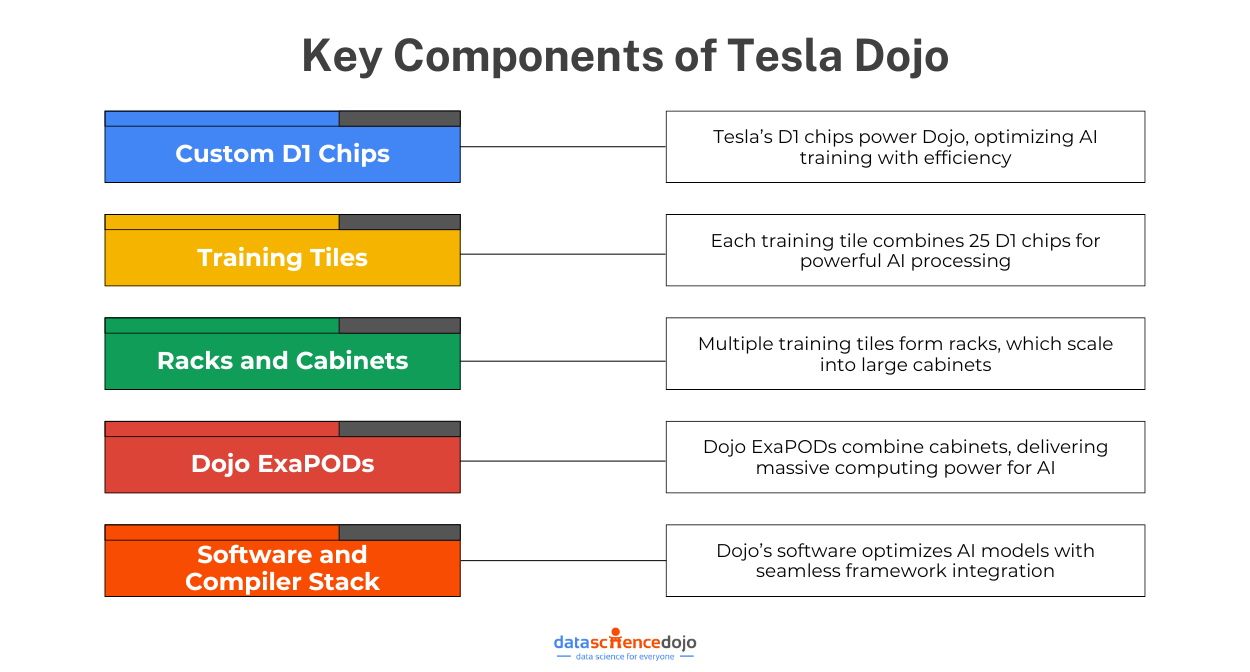

Key Components of Tesla Dojo

Dojo is not just another supercomputer, but a tailor-made solution for Tesla’s vision-based approach to autonomous driving. Tesla has leveraged its own hardware and software in Dojo’s development to push the boundaries of AI and machine learning (ML) for safer and more capable self-driving technology.

The key components Tesla Dojo uses to train its FSD neural networks are as follows:

Custom D1 Chips

At the core of Dojo are Tesla's proprietary D1 chips, designed specifically for AI training workloads. Each D1 chip contains 50 billion transistors, is built on a 7-nanometer semiconductor process, and delivers a peak of 362 teraflops of compute power (at the BF16/CFP8 precisions used for training).

The chip's high-bandwidth, low-latency design is optimized for matrix multiplication, the core operation of deep learning. Because these chips can handle computation and data transfer simultaneously, they are well suited to ML applications, and Tesla designed them to reduce its reliance on traditional GPUs such as Nvidia's.
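To make "optimized for matrix multiplication" concrete, here is a minimal Python sketch. It shows that a dense layer's forward pass is a matrix product and counts its floating-point operations using the common 2*m*k*n convention (one multiply and one add per term). The 362-teraflop constant is the peak figure quoted above; the helper names and the layer sizes in the example are hypothetical, and the runtime estimate assumes perfect utilization, which real hardware never reaches.

```python
# A dense (fully connected) layer's forward pass is a matrix multiplication:
# outputs (batch x out) = inputs (batch x in) @ weights (in x out).

D1_PEAK_FLOPS = 362e12  # peak per-chip figure quoted in this article


def matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    """Naive reference matrix multiply on nested lists."""
    k = len(b)
    assert all(len(row) == k for row in a), "inner dimensions must match"
    n = len(b[0])
    return [[sum(row[i] * b[i][j] for i in range(k)) for j in range(n)] for row in a]


def matmul_flops(m: int, k: int, n: int) -> int:
    """FLOPs for an (m x k) @ (k x n) product: each of the m*n outputs
    needs k multiplies and k adds."""
    return 2 * m * k * n


def ideal_seconds_on_d1(flops: float) -> float:
    """Lower bound on runtime, assuming 100% of peak (never achieved in practice)."""
    return flops / D1_PEAK_FLOPS


if __name__ == "__main__":
    x = [[1.0, 2.0], [3.0, 4.0]]
    w = [[5.0, 6.0], [7.0, 8.0]]
    print(matmul(x, w))  # [[19.0, 22.0], [43.0, 50.0]]

    # Hypothetical layer: batch of 4096, 8192 input and 8192 output features.
    f = matmul_flops(4096, 8192, 8192)
    print(f"{f:.3e} FLOPs, {ideal_seconds_on_d1(f) * 1e3:.2f} ms at peak")
```

For the hypothetical 4096 × 8192 × 8192 layer, the count is about 5.5 × 10^11 FLOPs, or roughly 1.5 ms at the quoted peak; real training steps take longer because of memory traffic and communication.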

Training Tiles

A single Dojo training tile consists of 25 D1 chips working together as a unified system. Each tile delivers 9 petaflops of compute power and 36 terabytes per second of bandwidth. These tiles are self-contained units with integrated hardware for power, cooling, and data transfer.

These training tiles are highly efficient for large-scale ML tasks. Because the chips on a tile communicate directly with one another, the tile avoids the traditional GPU-to-GPU communication bottlenecks that add latency in conventional clusters.
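Why do communication bottlenecks matter? In data-parallel training, devices must regularly combine their gradients. A common way to do this is a ring all-reduce, a standard collective in GPU clusters and not necessarily what Dojo uses internally. The sketch below applies the textbook latency-plus-bandwidth model of that operation; the function name and the example bandwidths and gradient size are illustrative assumptions, not measured Dojo or Nvidia figures.

```python
def ring_allreduce_seconds(num_devices: int, bytes_per_device: float,
                           link_bytes_per_s: float,
                           per_step_latency_s: float = 0.0) -> float:
    """Estimate ring all-reduce time with a simple latency + bandwidth model.

    A ring all-reduce runs 2 * (N - 1) steps (a reduce-scatter followed by an
    all-gather). In every step each device sends one chunk of size S / N to
    its neighbor, so each step costs latency + chunk / bandwidth.
    """
    if num_devices < 2:
        return 0.0  # nothing to synchronize with a single device
    steps = 2 * (num_devices - 1)
    chunk_bytes = bytes_per_device / num_devices
    return steps * (per_step_latency_s + chunk_bytes / link_bytes_per_s)


if __name__ == "__main__":
    grads = 2e9  # hypothetical: 2 GB of gradients per device
    for bw in (100e9, 400e9):  # illustrative link speeds in bytes per second
        t = ring_allreduce_seconds(25, grads, bw, per_step_latency_s=5e-6)
        print(f"{bw / 1e9:.0f} GB/s links: {t * 1e3:.1f} ms per all-reduce")
```

Raising link bandwidth shrinks only the bandwidth term, while the per-step latency term stays fixed, which is why both bandwidth and latency, the two properties the tile design targets, affect how fast training can proceed.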

Racks and Cabinets

Training tiles are the building blocks of these racks and cabinets. Multiple training tiles are combined to form racks. These racks are further assembled into cabinets to increase the computational power.

For instance, six tiles make up one rack, providing 54 petaflops of compute. Two such racks form a cabinet, and cabinets are in turn combined to form ExaPODs.

Scalability with Dojo ExaPODs

The highest level of Tesla's Dojo architecture is the Dojo ExaPOD, a complete supercomputing cluster. An ExaPOD contains 10 Dojo cabinets and delivers 1.1 exaflops (1.1 quintillion floating-point operations per second).

The ExaPOD configuration allows Tesla to scale Dojo’s computational capabilities by deploying multiple ExaPODs. This modular design ensures Tesla can expand its compute power to meet the increasing demands of training its neural networks.
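The numbers in this hierarchy multiply out neatly. The Python sketch below rolls the 362-teraflop per-chip peak up through tiles, racks, cabinets, and ExaPODs using the counts given above. It computes peak figures only and assumes compute adds up linearly, which real clusters do not achieve; the `rollup` helper and the multi-ExaPOD row are illustrative and not part of any Tesla tool.

```python
# Peak per-chip compute quoted in this article, in TFLOPS (10**12 FLOP/s).
D1_PEAK_TFLOPS = 362

# How many units of the level below each level contains, per the text above.
HIERARCHY = [
    ("training tile", 25),  # 25 D1 chips
    ("rack", 6),            # 6 training tiles
    ("cabinet", 2),         # 2 racks
    ("ExaPOD", 10),         # 10 cabinets
]


def rollup(num_exapods: int = 1) -> list[tuple[str, int, int]]:
    """Return (level, D1 chips in one unit, peak TFLOPS of one unit) per level,
    plus a final row for a cluster of `num_exapods` ExaPODs."""
    rows = [("D1 chip", 1, D1_PEAK_TFLOPS)]
    chips = 1
    for name, count in HIERARCHY:
        chips *= count
        rows.append((name, chips, chips * D1_PEAK_TFLOPS))
    total = chips * num_exapods
    rows.append((f"{num_exapods} x ExaPOD", total, total * D1_PEAK_TFLOPS))
    return rows


if __name__ == "__main__":
    for name, chips, tflops in rollup(num_exapods=2):
        print(f"{name:>14}: {chips:>5} chips, {tflops / 1000:>8.2f} PFLOPS peak")
```

The results (9,050 TFLOPS per tile, about 54 PFLOPS per rack, and 3,000 chips delivering about 1.09 exaflops per ExaPOD) match the rounded 9-petaflop, 54-petaflop, and 1.1-exaflop figures quoted above.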

Software and Compiler Stack

The software stack connects Tesla Dojo's custom hardware, including the D1 chips, with AI training workflows. Tailored to maximize efficiency and performance, it includes a custom compiler that translates AI models into instructions optimized for Tesla's ML-focused Instruction Set Architecture (ISA).

Integration with popular frameworks like PyTorch and TensorFlow makes Dojo accessible to developers, while a robust orchestration system efficiently manages training workloads, ensuring optimal resource use and scalability.
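Dojo's actual compiler and instruction set are proprietary, so the following is only a toy illustration of what "translating AI models into optimized instructions" means. It lowers a small sequential model into a flat list of instructions, then applies a simple fusion pass that merges a matrix multiply with the bias addition that follows it. The layer classes and the `MATMUL`, `ADD`, `RELU`, and `MATMUL_BIAS` mnemonics are hypothetical and do not describe Tesla's real ISA.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Instr:
    op: str                # hypothetical mnemonic, e.g. "MATMUL"
    dst: str               # name of the result buffer
    srcs: tuple[str, ...]  # names of input buffers


@dataclass(frozen=True)
class Linear:
    weight: str
    bias: str


@dataclass(frozen=True)
class ReLU:
    pass


def lower(layers, input_name: str = "x") -> list[Instr]:
    """Translate a sequential model into a flat list of toy instructions."""
    program: list[Instr] = []
    current = input_name
    for i, layer in enumerate(layers):
        out = f"t{i}"
        if isinstance(layer, Linear):
            mm = f"t{i}_mm"
            program.append(Instr("MATMUL", mm, (current, layer.weight)))
            program.append(Instr("ADD", out, (mm, layer.bias)))
        elif isinstance(layer, ReLU):
            program.append(Instr("RELU", out, (current,)))
        else:
            raise TypeError(f"unsupported layer: {layer!r}")
        current = out
    return program


def fuse_bias(program: list[Instr]) -> list[Instr]:
    """Peephole pass: merge MATMUL + ADD-on-its-result into one fused op.
    Safe here because `lower` never reuses the intermediate MATMUL result."""
    fused: list[Instr] = []
    i = 0
    while i < len(program):
        cur = program[i]
        nxt = program[i + 1] if i + 1 < len(program) else None
        if cur.op == "MATMUL" and nxt is not None and nxt.op == "ADD" and nxt.srcs[0] == cur.dst:
            fused.append(Instr("MATMUL_BIAS", nxt.dst, cur.srcs + nxt.srcs[1:]))
            i += 2
        else:
            fused.append(cur)
            i += 1
    return fused


if __name__ == "__main__":
    model = [Linear("w0", "b0"), ReLU(), Linear("w1", "b1")]
    for ins in fuse_bias(lower(model)):
        print(f"{ins.op:<11} {ins.dst} <- {', '.join(ins.srcs)}")
```

Fusion like this is one common way compilers for ML accelerators cut memory traffic: the intermediate `t0_mm` result never has to be written out and read back.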

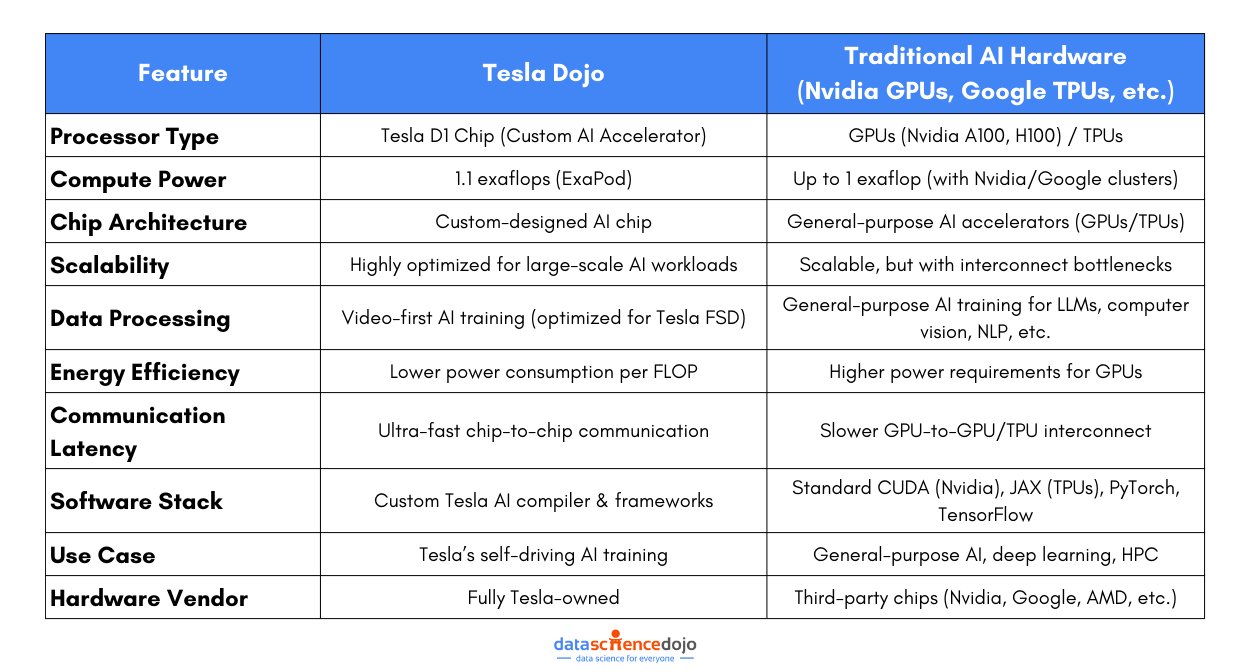

Comparing Dojo to Traditional AI Hardware

Thus, these components collectively make Dojo a uniquely tailored supercomputer, emphasizing efficiency, scalability, and the ability to handle massive amounts of driving data for FSD training. This not only enables faster training of Tesla’s FSD neural networks but also accelerates progress toward autonomous driving.

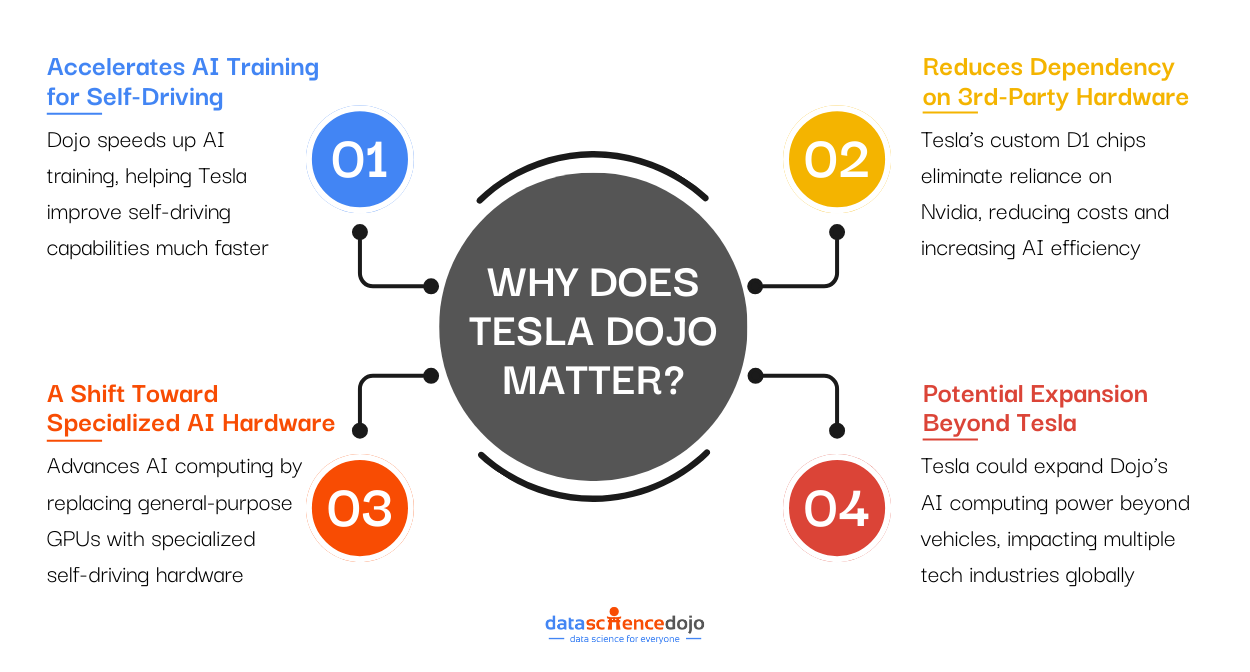

Why Does Tesla Dojo Matter?

Tesla Dojo represents a groundbreaking step in AI infrastructure, specifically designed to meet the demands of large-scale, high-performance AI training.

Its significance within the world of AI can be summed up as follows:

1. Accelerates AI Training for Self-Driving

Tesla’s Full Self-Driving (FSD) and Autopilot systems rely on massive AI models trained with real-world driving data. Training these models requires processing petabytes of video footage to help Tesla’s cars learn how to drive safely and autonomously.

This is where Dojo comes in. By speeding up the training process, it allows Tesla to refine and improve its AI models much faster than before. That means quicker software updates and smarter self-driving capabilities, which can lead to safer autonomous vehicles that react better to real-world conditions.

2. Reduces Dependency on Nvidia & Other Third-Party Hardware

Like most AI-driven companies, Tesla has relied on Nvidia GPUs to power its AI model training. While Nvidia's hardware is powerful, it comes with challenges such as high costs, supply chain delays, and dependency on an external provider, all of which can slow Tesla's AI development.

Tesla has taken a bold step by developing its own custom D1 chips. These chips let Tesla optimize the entire AI training process and build its own Dojo supercomputer, cutting costs, gaining greater control over its AI infrastructure, and removing many of the bottlenecks caused by third-party reliance.

3. A Shift Toward Specialized AI Hardware

Most AI training today relies on general-purpose GPUs, like Nvidia’s H100, which are designed for a wide range of AI applications. However, Tesla’s Dojo is different as it is built specifically for training self-driving AI models using video data.

By designing its own hardware, Tesla has created a system optimized for its unique AI challenges, with the goal of making training faster and more efficient. This move follows a growing trend in the tech world: companies like Google (with TPUs) and Apple (with the Neural Engine built into its M-series chips) have also built their own specialized AI hardware to improve performance.

Tesla’s Dojo is a sign that the future of AI computing is moving away from one-size-fits-all solutions and toward custom-built hardware designed for specific AI applications.

4. Potential Expansion Beyond Tesla

If Dojo proves successful, Tesla could offer its AI computing power to other companies, much as Amazon sells AWS cloud services and Google provides TPU computing for AI research. That would make Tesla more than just an electric vehicle company.

Expanding Dojo beyond Tesla’s own needs could open up new revenue streams and position the company as a tech powerhouse. Instead of just making smarter cars, Tesla could help train AI for industries like robotics, automation, and machine learning, making its impact on the AI world even bigger.

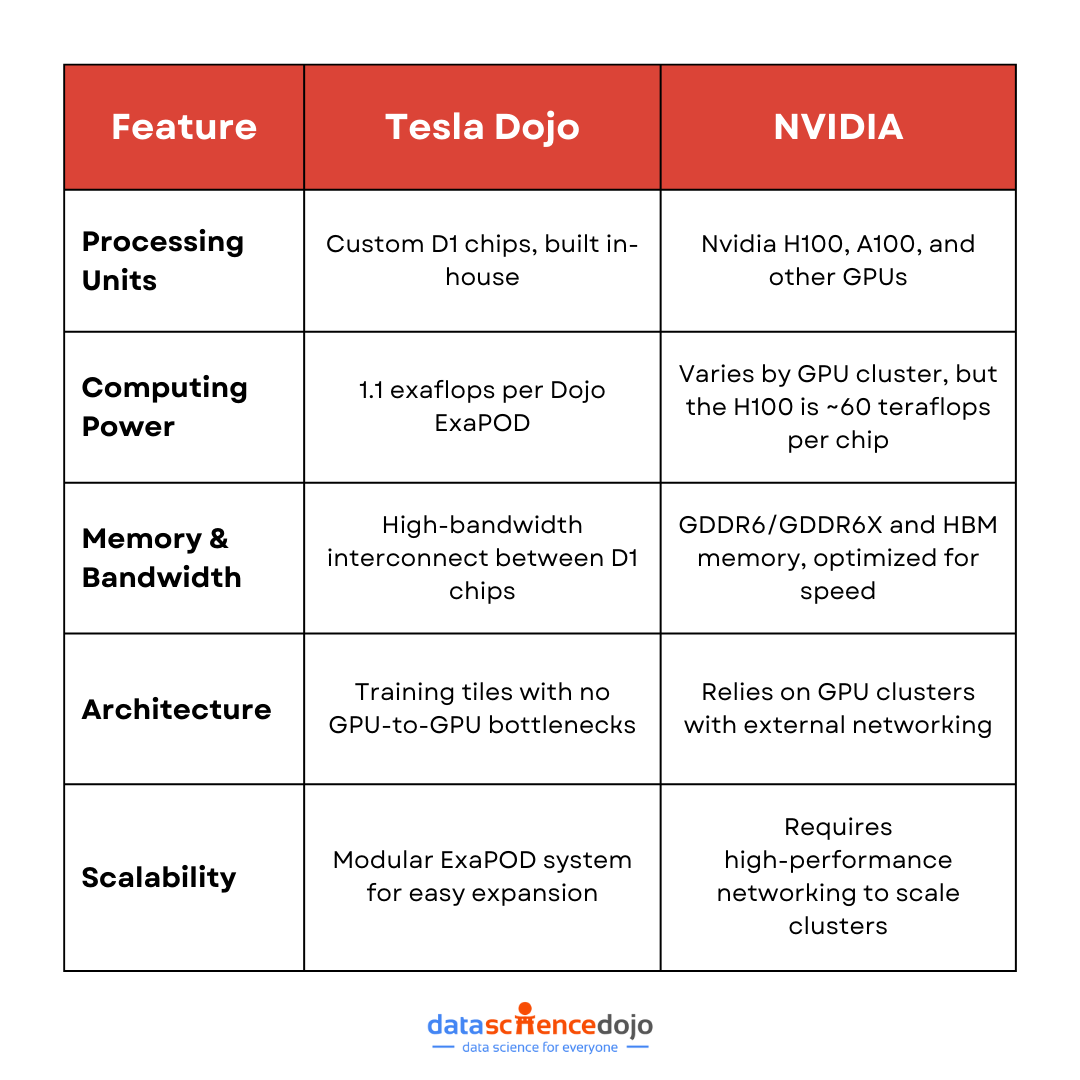

Tesla Dojo vs. Nvidia: A Battle of AI Computing Power

Tesla and Nvidia are two giants in AI computing, but they have taken very different approaches to AI hardware. While Nvidia has long been the leader in AI processing with its powerful GPUs, Tesla is challenging the status quo with Dojo, a purpose-built AI supercomputer designed specifically for training self-driving AI models.

So, how do these two compare in terms of architecture, performance, scalability, and real-world applications? Let’s break it down.

1. Purpose and Specialization

One of the biggest differences between Tesla Dojo and Nvidia GPUs is their intended purpose.

- Tesla Dojo is built exclusively for Tesla’s Full Self-Driving (FSD) AI training. It is optimized to process vast amounts of real-world video data collected from Tesla vehicles to improve neural network training for autonomous driving.

- Nvidia GPUs are general-purpose processors used across many industries, including gaming, cloud computing, scientific research, and machine learning. Its data center models, like the H100 and A100, power AI models for companies like OpenAI, Google, and Meta.

Key takeaway: Tesla Dojo is highly specialized for self-driving AI, while Nvidia’s GPUs serve a broader range of AI applications.

2. Hardware and Architecture

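Tesla has moved away from traditional GPU-based AI training and designed Dojo with custom hardware to maximize efficiency.

- Tesla Dojo is built from custom D1 chips, 25 of which are combined into a single training tile with 36 terabytes per second of bandwidth, so data moves between chips without traditional GPU-to-GPU bottlenecks.

- Nvidia GPUs, like the H100, are individual processors; scaling them to large AI workloads requires linking many GPUs through external networking.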

Key takeaway: Tesla’s D1 chips remove GPU bottlenecks, while Nvidia’s GPUs are powerful but require networking to scale AI workloads.

3. Performance and Efficiency

AI training requires enormous computational resources, and both Tesla Dojo and Nvidia GPUs are designed to handle this workload. But which one is more efficient?

- Tesla Dojo delivers 1.1 exaflops of compute power per ExaPOD, optimized for video-based AI processing crucial to self-driving. It eliminates GPU-to-GPU bottlenecks and external supplier reliance, enhancing efficiency and control.

- Nvidia’s H100 GPUs offer immense power but rely on external networking for large-scale AI workloads. Used by cloud providers like AWS and Google Cloud, they support various AI applications beyond self-driving.

Key takeaway: Tesla optimizes Dojo for AI training efficiency, while Nvidia prioritizes versatility and wide adoption.

4. Cost and Scalability

One of the main reasons Tesla developed Dojo was to reduce dependency on Nvidia’s expensive GPUs.

- Tesla Dojo aims to reduce costs by cutting third-party reliance. Instead of buying thousands of Nvidia GPUs, Tesla gains greater control over its own AI infrastructure.

- Nvidia GPUs are expensive but widely used. Many AI companies, including OpenAI and Google, rely on Nvidia’s data center GPUs, making them the industry standard.

While Nvidia dominates the AI chip market, Tesla’s custom-built approach could lower AI training costs in the long run by reducing hardware expenses and improving energy efficiency.

Key takeaway: Tesla Dojo offers long-term cost benefits, while Nvidia remains the go-to AI hardware provider for most companies.

Hence, the battle between Tesla Dojo and Nvidia is not just about raw power but the future of AI computing. Tesla is betting on a custom-built, high-efficiency approach to push self-driving technology forward, while Nvidia continues to dominate the broader AI landscape with its versatile GPUs.

As AI demands grow, the question is not which is better, but which approach will define the next era of innovation. One thing is for sure – this race is just getting started.

What Does this Mean for AI?

Tesla Dojo marks the beginning of a new chapter in the world of AI. It highlights how specialized hardware can play a crucial role in enhancing performance for specific AI tasks. This shift could enable faster and more efficient training of AI models, reducing both costs and energy consumption.

Moreover, with Tesla entering the AI hardware space, the dominance of companies like Nvidia and Google in high-performance AI computing is being challenged. If Dojo proves successful, it could inspire other industries to develop their own specialized AI chips, fostering faster innovation in fields like robotics, automation, and deep learning.

The development of Dojo also underscores the growing need for custom-built hardware and software to handle the increasing complexity and scale of AI workloads. It sets a precedent for application-specific AI solutions, paving the way for advancements across various industries.