Finding the top Python packages for data science and libraries that aren’t only popular, but get the job done isn’t easy. Here’s a list to help you out.

Out of all the Python scientific libraries and packages available, which ones are not only popular but the most useful in getting the job done?

Python packages for data science and libraries

To help you filter down a list of libraries and packages worth adding to your data science toolbox, we have compiled our top picks for aspiring and practicing data scientists. But you’ll also want to know how to best use these tools for tricky, real-world data problems. So instead of leaving you with yet another top-choice list among a quintillion list, we explain how to make the most of these libraries using real-world examples.

You can learn more about how these packages fit into data science with Data Science Dojo’s introduction to Python course.

Data manipulation

Pandas

There’s a reason why pandas consistently tops published ranks on data science-related libraries in Python. The library can help you with a variety of tasks, but it is particularly useful for data manipulation or data wrangling. It can save you a lot of leg work in not only your typical rudimentary data manipulation tasks but also in handling some pretty tricky problems you might encounter when slicing and filtering.

Multi-indexed data can be one of these tricky tasks. The library pandas takes care of advanced indexing, including multi-indexing, where you might need to work with higher-dimensional data or multiple index levels. For example, the number of user interactions might be indexed by 1) product category, 2) the time of day the user interacted with the product, and 3) the location of the user.

Instead of your typical table of rows and columns to represent the data, you might find it better to organize the number of user interactions into all cases that fall under the x product category, with y time of day, and z location. This way you can easily see user interactions across each condition of product category, time of day, and user location. This saves you from having to apply a filter or group for all combinations of conditions in your traditional row-and-table structure.

Here is one way to multi-index data in pandas. With less than a few lines of code, pandas makes this easy to implement in Python:

import pandas as pd

data_multi_indx = table_data.set_index(['Product', 'Day of Week'])

print(data_multi_indx)

'''

Output:

Location Num User Interactions

Product Day of Week

Product 1 Morning A 3

Morning B 90

Morning C 7

Afternoon A 17

Afternoon B 1

Afternoon C 82

Product 2 Morning A 27

Morning B 70

Morning C 3

Afternoon A 1

Afternoon B 1

Afternoon C 98

Product 3 Morning A 94

Morning B 5

Morning C 1

Afternoon A 0

Afternoon B 7

Afternoon C 93

'''

For the more rudimentary data manipulation tasks, pandas doesn’t require much effort on your part. You can simply use the functions available for imputing missing values, one-hot encoding, dropping columns and rows, and so on.

Here are a few example classes and functions pandas that make rudimentary data manipulation easy in a few lines of code, at most.

For more lessons with Pandas, visit Data Independent.

| Feature | Description |

|---|---|

fillna(value) |

Fill in missing values on a column or the whole data frame with a value such as the mean, median, or mode. |

isna(data)/isnull(data) |

Check for missing values. |

get_dummies(data_frame['Column']) |

Apply one-hot encoding on a column. |

to_numeric(data_frame['Column']) |

Convert a column of values from strings to numeric values. |

to_string(data_frame['Column']) |

Convert a column of values from numeric values to strings. |

to_datetime(data_frame['Column']) |

Convert a column of datetimes in string format to standard datetime format. |

drop(columns=['Column0','Column1']) |

Drop specific columns or useless columns in your data frame. |

drop(data.frame.index[[rownum0,rownum1]]) |

Drop specific rows or useless rows in your data frame. |

NumPy

Another library that keeps topping the ranks is numpy. This library can handle many tasks, but it is particularly useful when working with multi-dimensional arrays and performing calculations on these arrays. This can be tricky to do in more conventional ways, where you need to find the index of a value or certain values inside another index, with multiple indices.

Read about Top Python projects to choose from in 2023

This is where numpy shows its strength. Its array() function means standard arrays can be simply added and nicely bundled into a multi-dimensional array. Calculations on these arrays can also be easily implemented using numpy’s vast array (pun intended) of mathematical functions.

Let’s picture an example where numpy’s multi-dimensional arrays are useful. A company tracks or records if a user was/was not shown a mobile product in the morning, afternoon, and night, delivered through a mobile notification. Based on the level of user interaction with the shown product, the company also records a user engagement score.

Data points on each user’s shown product and engagement score are stored inside an array; each array stores these values for each user. The company would like to quickly and simply bundle all user arrays.

In addition to this, using engagement score and purchase history, the company would like to calculate and identify the minimum distance (or difference) across all users’ data points so that users who follow a similar pattern can be categorized and targeted accordingly.

numpy’s array() makes it easy to bundle user arrays into a multi-dimensional array and argmin() and linalg.norm() find the min Euclidean distance between users, as an example of the kinds of calculations that can be done on a multi-dimensional array:

import numpy as np

# Records tracking whether user was/was not shown product during

# morning, afternoon, and night, and user engagement score

user_0 = [0,0,1,0.7]

user_1 = [0,1,0,0.4]

user_2 = [1,0,0,0.0]

user_3 = [0,0,1,0.9]

user_4 = [0,1,0,0.3]

user_5 = [1,0,0,0.0]

# Create a multi-dimensional array to bundle all users

# Can use arrays with mixed data types by specifying

# the object data type in numpy multi-dimensional arrays

users_multi_dim = np.array([user_0,user_1,user_2,user_3,user_4,user_5],dtype=object)

print(users_multi_dim)

'''

Output:

[[0 0 1 0.7]

[0 1 0 0.4]

[1 0 0 0.0]

[0 0 1 0.9]

[0 1 0 0.3]

[1 0 0 0.0]]

'''

# To view which user was/was not shown the product

# either morning, afternoon or night, pandas easily

# allows you to index and label the data

row_names = [_ for _ in ['User 0','User 1','User 2','User 3','User 4','User 5']]

col_names = [_ for _ in ['Product Shown Morning','Product Shown Afternoon',

'Product Shown Night','User Engagement Score']]

users_df_indexed = pd.DataFrame(users_multi_dim,index=row_names,columns=col_names)

print(users_df_indexed)

'''

Output:

Product Shown Morning Product Shown Afternoon Product Shown Night User Engagement Score

User 0 0 0 1 0.7

User 1 0 1 0 0.4

User 2 1 0 0 0

User 3 0 0 1 0.9

User 4 0 1 0 0.3

User 5 1 0 0 0

'''

# Find which existing user is closest to the engagement

# and purchase behavior of a new user by calculating the

# min Euclidean distance on a numpy multi-dimensional array

user_0 = [0.7,51.90,2]

user_1 = [0.4,25.95,1]

user_2 = [0.0,0.00,0]

user_3 = [0.9,77.85,3]

user_4 = [0.3,25.95,1]

user_5 = [0.0,0.00,0]

users_multi_dim = np.array([user_0,user_1,user_2,user_3,user_4,user_5])

new_user = np.array([0.8,77.85,3])

closest_to_new = np.argmin(np.linalg.norm(users_multi_dim-new_user,axis=1))

print('User', closest_to_new, 'is closest to the new user')

'''

Output:

User 3 is closest to the new user

'''

Data modeling

Statsmodels

The main strength of statsmodels is its focus on statistics, going beyond the ‘machine learning out-of-the-box’ approach. This makes it a popular choice for data scientists. Conducting statistical tests to find significantly different variables, checking for normality in your data, checking the standard errors, and so on, cannot be underestimated when trying to build the most effective model you can build. Your model is only as good as your inputs, and statsmodels is designed to help you better understand and customize your inputs.

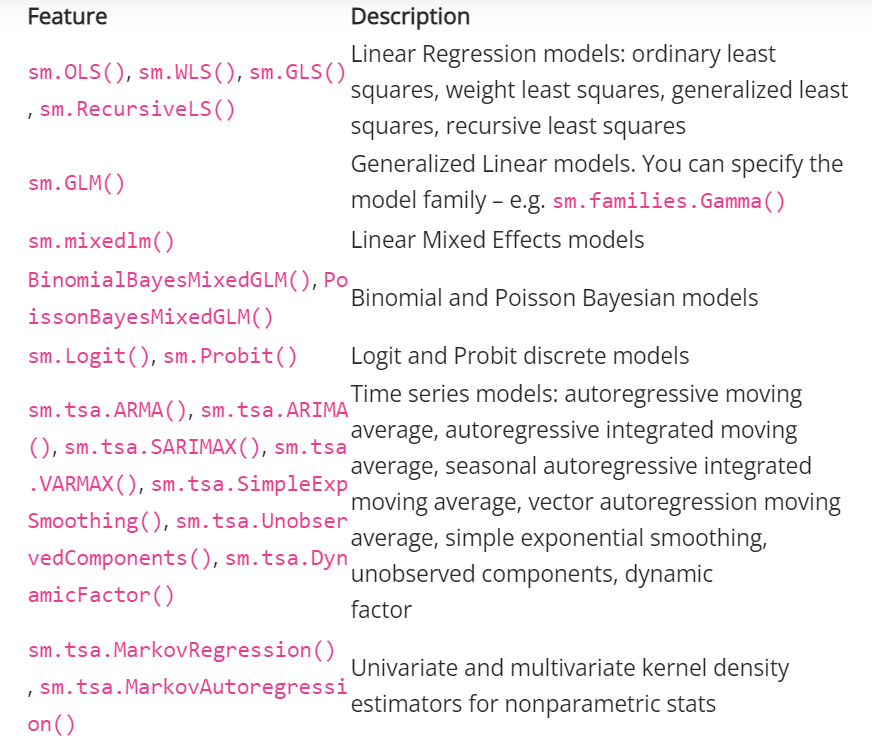

The library also covers an exhaustive list of predictive models to choose from, depending on your predictors and outcome variable(s). It covers your classic Linear Regression models (including ordinary least squares, weighted least squares, recursive least squares, and more), Generalized Linear models, Linear Mixed Effects models, Binomial and Poisson Bayesian models, Logit and Probit models, Time Series models (including autoregressive integrated moving average, dynamic factor, unobserved component, and more), Hidden Markov models, Principal Components and other techniques for Multivariate models, Kernel Density estimators, and lots more.

Here are the classes and functions in statsmodels that cover the main modeling techniques useful for many prediction tasks.

Scikit-learn

Any library that makes machine learning more accessible and easier to implement is bound to make the top choice list among aspiring and practicing data scientists. The Library scikit-learn not only allows models to be easily implemented out-of-the-box but also offers some auto fine-tuning.

Finding the best possible combination of model parameters is a key example of fine-tuning. The library offers a few good ways to search for the optimal set of parameters, given the algorithm and problem to solve. The grid search and random search algorithms in scikit-learn evaluate different combinations of parameters until they find the best combo that results in the best outcome, or a better-performing model.

The grid search goes through every possible combination, whereas the random search randomly samples the parameters over a fixed number of times/iterations. Cross-validating your model on many subsets of data is also easy to implement using scikit-learn. With this kind of automation, the library offers data scientists a massive time saver when building models.

The library also covers all the essential machine learning models from classification (including Support Vector Machine, Random Forest, etc), to regression (including Ridge Regression, Lasso Regression, etc), and clustering (including k-Means, Mean Shift, etc).

Here are the classes and functions in scikit-learn that cover the main modeling techniques useful for many prediction tasks.

| Feature | Description |

|---|---|

SVC(), GaussianNB(), LogisticRegression(), DecisionTreeClassifier(),

|

Classification models: Support Vector Machine, Gaussian Naïve Bayes, Logistic Regression, Decision Tree, Random Forest, Stochastic Gradient Descent, Multi-Layer Perceptron |

linear_model.Ridge(), linear_model.Lasso(), SVR(), DecisionTreeRegressor(),

|

Regression models: Ridge Regression, Lasso Regression, Support Vector Machine, Decision Tree, Random Forest, Stochastic Gradient Descent, Multi-Layer Perceptron |

KMeans(), AffinityPropagation(), MeanShift(), AgglomerativeClustering |

Clustering models: k-Means, Affinity Propagation, Mean Shift, Agglomerative Hierarchical Clustering |

Data visualization

Plotly

The libraries matplotlib and seaborn will easily take care of your basic static plot functions, which are important for your own internal exploration or understanding of the data. But when presenting visual insights to business folks or users, interactivity is where we are headed these days.

Using JavaScript functionality, plotly renders interactive graphs in the form of zooming in and panning out of the graph panel, hovering over objects for more information, and dragging objects into position to further explore relationships in the data. Graphs can be customized to your heart’s content.

Here are just a few of the many tricks that Plotly offers:

| Feature | Description |

|---|---|

hovermode, hoverinfo |

Controls the mode and text when a user hovers over an object. |

on_selection(), on_click() |

Allows a user to select or click on an object and have that selected object change color, for example. |

update |

Modifies a graph’s layout and data such as titles and annotations. |

animate |

Creates an animated graph. |

Bokeh

Much like plotly, bokeh also offers interactive graphs. But one feature that stands out in bokeh is linked interactions. This is useful when keeping separate graphs in unison, where the user interacts with one graph and needs to compare with the other while they are in sync. For example, a user zooms into a graph, effectively changing the range of the graph, and then would like to compare it with the second graph. The second graph would need to automatically update its range so that both graphs can be easily compared like-for-like.

Here are some key tricks that bokeh offers:

| Feature | Description |

|---|---|

figure() |

Creates a new plot and allows linking to the range of another plot. |

HoverTool(), hover_glyph |

Allows users to hover over an object for more information. |

selection_glyph |

Selects a particular glyph object for styling. |

Slider() |

Creates a slider to dynamically update the plot based on the slide range. |