Moreover, LLMs have numerous quirks: they hallucinate (confidently spouting falsehoods), format responses poorly, slip into the wrong tone, go “off the rails,” or get overly cautious. They even repeat themselves, making long interactions tiresome.

Evaluation helps catch these flaws, ensuring models stay accurate, reliable, and ready for real-world use.

In this blog, you’ll get a clear view of how to evaluate LLMs. We’ll dive into what evaluation means for these models, explore key industry benchmarks that test their abilities, and highlight the best metrics for scoring performance. You’ll also discover top leaderboards where the latest models stack up.

Excited? Let’s dig in.

What is LLM Evaluation?

LLM evaluation is all about testing how well a large language model performs. Think of it like grading a student’s test—each question measures different skills, like comprehension, accuracy, and relevance.

With LLMs, evaluation means putting models through carefully designed tests, or benchmarks, to see if they can handle tasks they were built for, like answering questions, generating text, or holding conversations.

This process involves measuring their responses against a set of standards, using metrics to score performance. In simple terms, LLM evaluation shows us where models excel and where they still need work.

Why is LLM Evaluation Significant?

LLM evaluation provides a common language for developers and researchers to make quick, clear decisions on whether a model is fit for use. Plus, evaluation acts like a roadmap for improvement—pinpointing areas where a model needs refining helps prioritize upgrades and makes each new version smarter, safer, and more reliable.

To sum up, evaluation helps ensure that models are accurate, reliable, unbiased, and ethical.

Key Components of LLM Evaluation

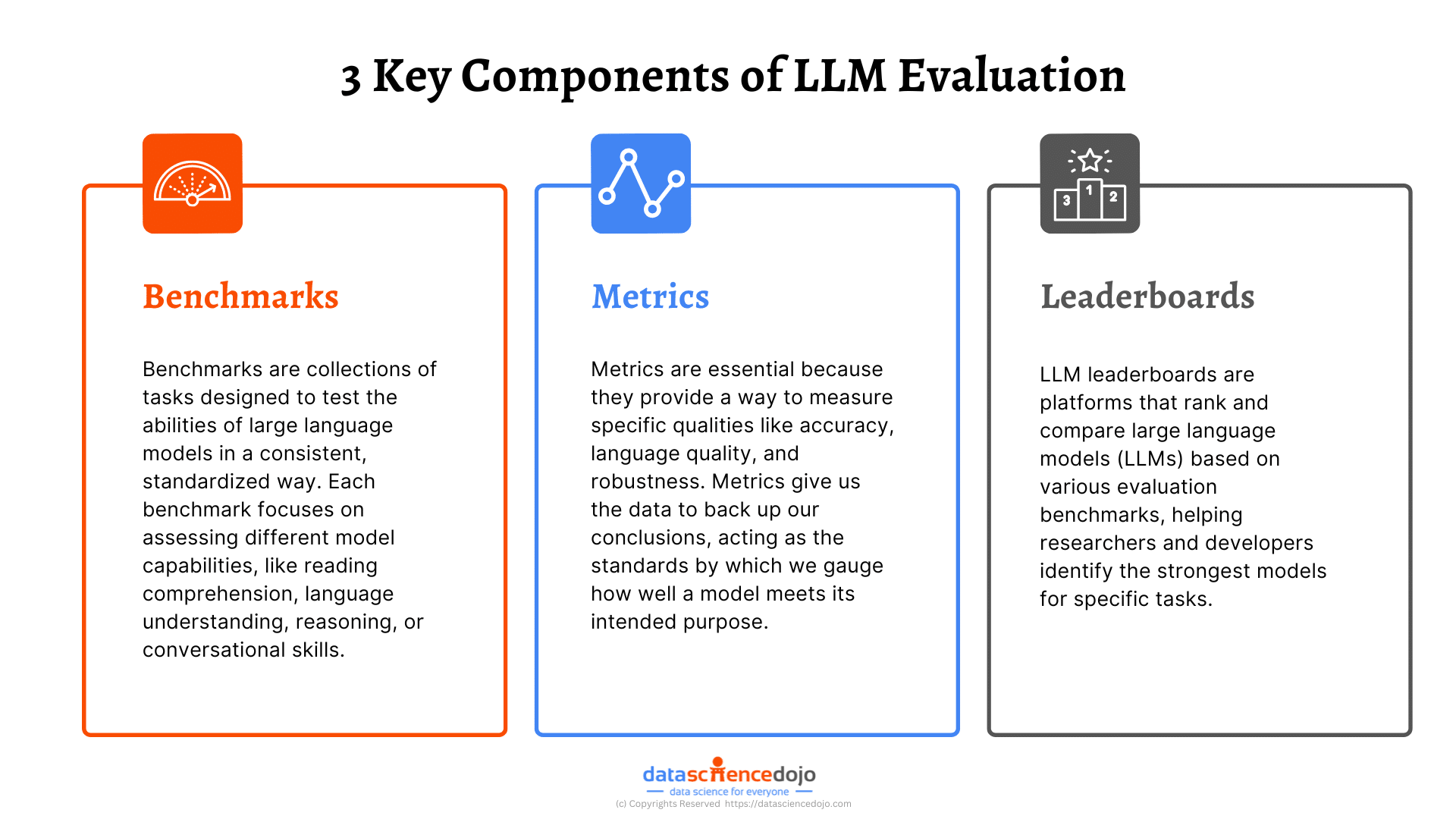

LLM Evaluation Datasets/Benchmarks:

Evaluation datasets or benchmarks are collections of tasks designed to test the abilities of large language models in a consistent, standardized way. Think of them as structured tests that models have to “pass” to prove they’re capable of performing specific language tasks.

These benchmarks contain sets of questions, prompts, or tasks with pre-determined correct answers or expected outputs. When LLMs are evaluated against these benchmarks, their responses are scored based on how closely they align with the expected answers.


Each benchmark focuses on assessing different model capabilities, like reading comprehension, language understanding, reasoning, or conversational skills.
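To make the idea of scoring responses against expected answers concrete, here is a minimal sketch of exact-match accuracy. The normalization rules and the sample data are illustrative assumptions, not part of any specific benchmark; real benchmarks each define their own scoring rules.

```python
import re
import string


def normalize(text: str) -> str:
    """Lowercase, drop punctuation, and collapse whitespace."""
    text = text.lower()
    text = text.translate(str.maketrans("", "", string.punctuation))
    return re.sub(r"\s+", " ", text).strip()


def exact_match_accuracy(predictions: list[str], references: list[str]) -> float:
    """Fraction of predictions that equal their reference after normalization."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    if not references:
        return 0.0
    hits = sum(normalize(p) == normalize(r) for p, r in zip(predictions, references))
    return hits / len(references)


# Hypothetical model outputs and expected answers.
preds = ["Paris.", "4", "the Pacific ocean"]
refs = ["paris", "5", "The Pacific Ocean"]
print(exact_match_accuracy(preds, refs))  # two of the three answers match
```

Exact match is strict by design, so it suits short, closed-form answers better than open-ended text.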

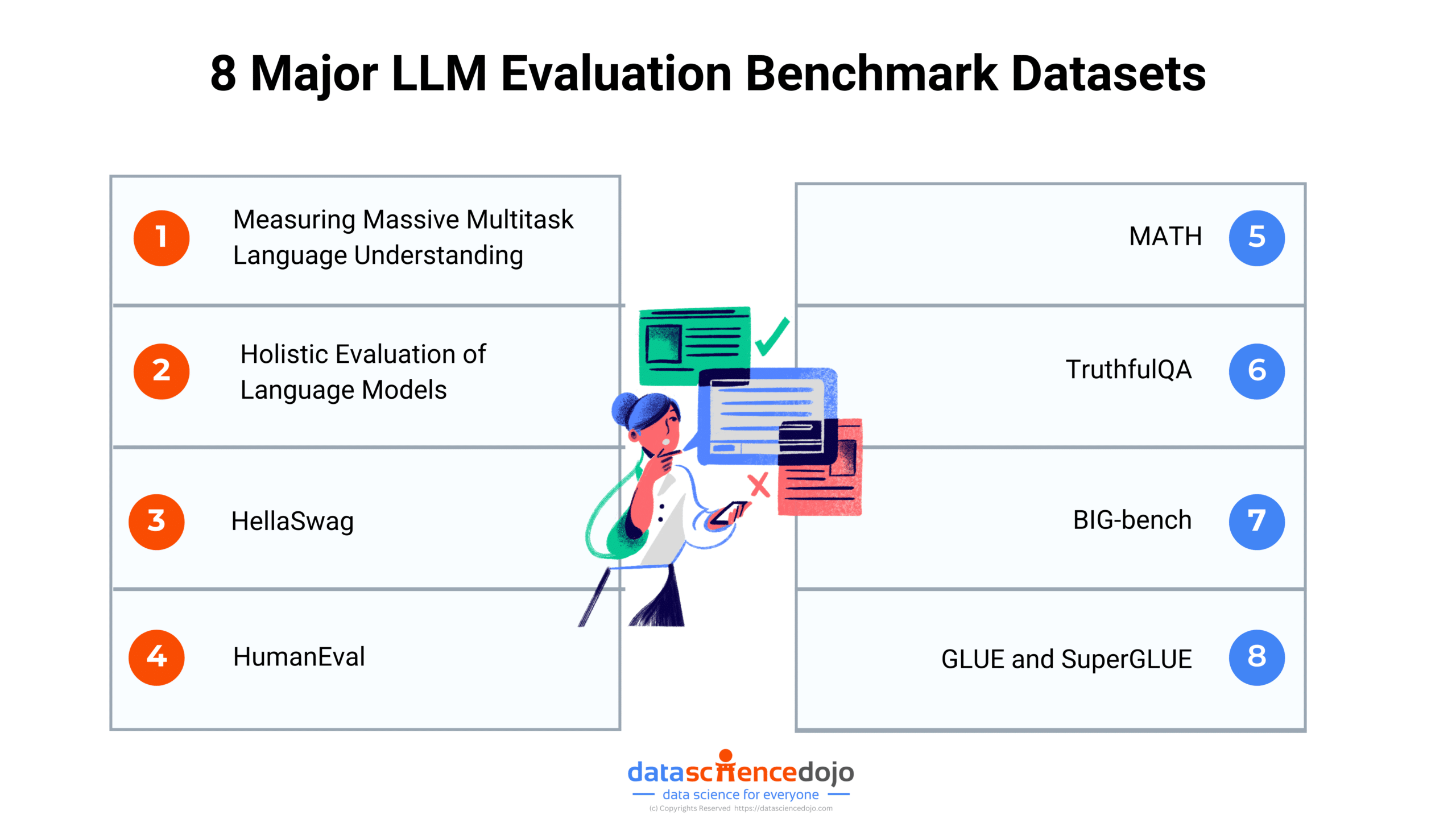

1. Massive Multitask Language Understanding (MMLU):

MMLU is a comprehensive LLM evaluation benchmark created to evaluate the knowledge and reasoning abilities of large language models across a wide range of topics. Introduced in 2020 by Dan Hendrycks and collaborators, it’s one of the most extensive benchmarks available, covering 57 subjects.

These subjects range from general knowledge areas like history and geography to specialized fields like law, medicine, and computer science. Each subject includes multiple-choice questions designed to assess the model’s understanding of various disciplines at different difficulty levels.

What is its Purpose?

The purpose of MMLU is to test how well a model can generalize across diverse topics and handle a broad array of real-world knowledge, similar to an academic or professional exam.

With questions spanning high school, undergraduate, and professional levels, MMLU evaluates whether a model can accurately respond to complex, subject-specific queries, making it ideal for measuring the depth and breadth of a model’s knowledge.

What Skills Does It Assess?

MMLU assesses several core skills in language models:

- Subject knowledge

- Reasoning and logic

- Adaptability and multitasking

In short, MMLU is designed to comprehensively assess an LLM’s versatility, depth of understanding, and adaptability across subjects, making it an essential benchmark for evaluating models intended for complex, multi-domain applications.
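Because MMLU is multiple-choice and organized by subject, results are usually broken down per subject and then averaged. The sketch below assumes you already have the model’s chosen letter for each question; the record layout and the sample rows are hypothetical, and whether to report a macro or micro average is a choice you should state alongside your numbers.

```python
from collections import defaultdict


def per_subject_accuracy(records):
    """records: iterable of (subject, predicted_letter, correct_letter)."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for subject, predicted, answer in records:
        total[subject] += 1
        correct[subject] += int(predicted.strip().upper() == answer.strip().upper())
    return {subject: correct[subject] / total[subject] for subject in total}


def macro_average(scores):
    """Unweighted mean over subjects, so small subjects count as much as large ones."""
    return sum(scores.values()) / len(scores) if scores else 0.0


# Illustrative rows only; not taken from the real dataset.
records = [
    ("high_school_geography", "B", "B"),
    ("high_school_geography", "C", "A"),
    ("professional_law", "D", "D"),
    ("professional_law", "a", "A"),
    ("college_computer_science", "B", "C"),
]
scores = per_subject_accuracy(records)
print(scores)
print(macro_average(scores))
```

A per-subject view makes it easy to spot a model that looks strong on average but is weak in one specialized field.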

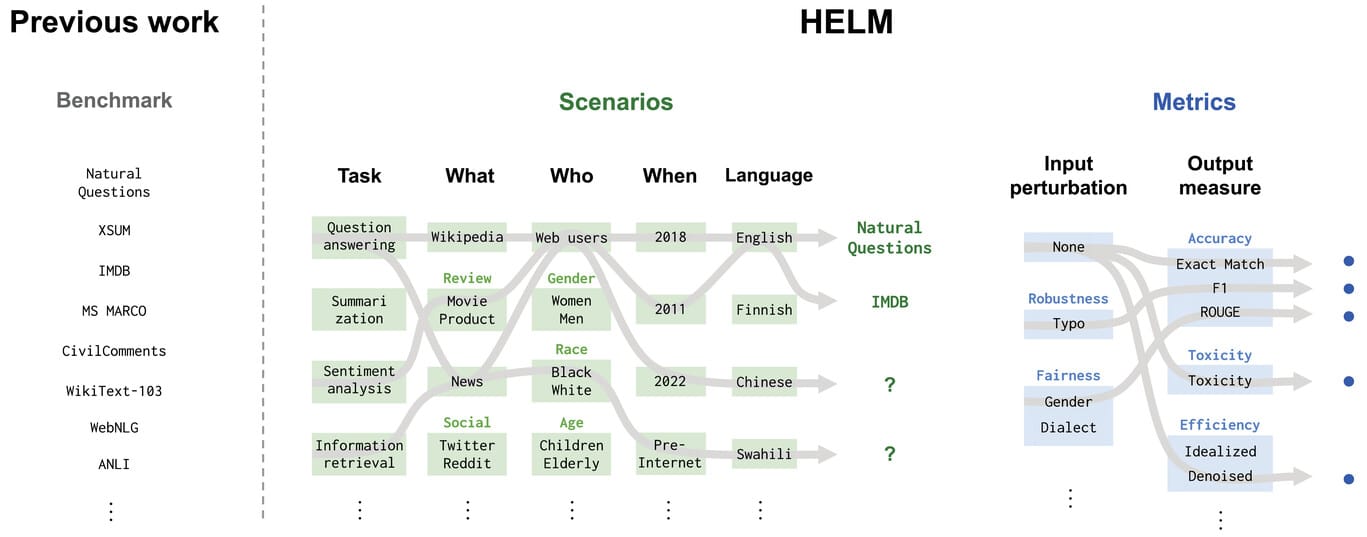

2. Holistic Evaluation of Language Models (HELM):

Developed by Stanford’s Center for Research on Foundation Models, HELM is intended to evaluate models holistically.

While other benchmarks test specific skills like reading comprehension or reasoning, HELM takes a multi-dimensional approach, assessing not only technical performance but also ethical and operational readiness.

What is its Purpose?

The purpose of HELM is to move beyond typical language understanding assessments and consider how well models perform across real-world, complex scenarios. By including LLM evaluation metrics for accuracy, fairness, efficiency, and more, HELM aims to create a standard for measuring the overall trustworthiness of language models.

What Skills Does It Assess?

HELM evaluates a diverse set of skills and qualities in language models, including:

- Language understanding and generation

- Fairness and bias mitigation

- Robustness and adaptability

- Calibration, toxicity, and efficiency

In essence, HELM is a versatile framework that provides a multi-dimensional evaluation of language models, prioritizing not only technical performance but also the ethical and practical readiness of models for deployment in diverse applications.

3. HellaSwag

HellaSwag is a benchmark designed to test commonsense reasoning in large language models. It consists of multiple-choice questions where each question describes a scenario, and the model must select the most plausible continuation among several options. The questions are specifically crafted to be challenging, often requiring the model to understand and predict everyday events with subtle contextual cues.

What is its Purpose?

The purpose of HellaSwag is to push LLMs beyond simple language comprehension, testing whether they can reason about everyday scenarios in a way that aligns with human intuition. It’s intended to expose weaknesses in models’ ability to generate or choose answers that seem natural and contextually appropriate, highlighting gaps in their commonsense knowledge.

What Skills Does It Assess?

HellaSwag primarily assesses commonsense reasoning and contextual understanding. The benchmark challenges models to recognize patterns in common situations and select responses that are not only correct but also realistic. It gauges whether a model can avoid nonsensical answers, an essential skill for generating plausible and relevant text in real-world applications.
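One common way to run a multiple-choice continuation task like this is to have the model score every candidate ending and pick the most likely one. The sketch below assumes you can obtain per-token log-probabilities for each ending from your model; the numbers are made up, and averaging per token (rather than summing) is one possible length normalization, not the only one.

```python
def pick_continuation(token_logprobs_per_option):
    """Return the index of the option with the highest mean token log-probability.

    token_logprobs_per_option: one non-empty list of per-token log-probabilities
    for each candidate ending, as scored by the model given the shared context.
    """
    def mean_logprob(logprobs):
        return sum(logprobs) / len(logprobs)

    scores = [mean_logprob(lp) for lp in token_logprobs_per_option]
    return max(range(len(scores)), key=scores.__getitem__)


# Hypothetical log-probabilities for four endings of one scenario.
options = [
    [-2.1, -1.9, -2.4],
    [-0.7, -0.9, -0.5, -0.8],
    [-1.2, -3.0],
    [-0.6, -4.0, -3.5, -2.0, -1.0],
]
predicted = pick_continuation(options)
print(predicted)  # 1
```

Accuracy is then the share of scenarios where the picked index matches the labeled ending.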

4. HumanEval

HumanEval is a benchmark specifically created to evaluate the code-generation capabilities of language models. It comprises 164 hand-written Python programming problems that models are tasked with solving by writing functional code. Each problem provides a function signature and docstring, and the generated code is run against unit tests to check whether the solution is correct.


What is its Purpose?

The purpose of HumanEval is to measure an LLM’s ability to produce syntactically correct and functionally accurate code. This benchmark focuses on assessing models trained in code generation and is particularly useful for testing models in development environments, where automation of coding tasks can be valuable.

What Skills Does It Assess?

HumanEval assesses programming knowledge, problem-solving ability, and precision in code generation. It checks whether the model can interpret a programming task, apply appropriate syntax and logic, and produce executable code that meets specified requirements. It’s especially useful for evaluating models intended for software development assistance.
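HumanEval results are commonly reported as pass@k: the chance that at least one of k generated samples solves a problem. A minimal sketch of the usual unbiased estimator follows, assuming you have already generated n samples per problem and counted how many passed the tests; the function and argument names are my own.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Estimate the probability that at least one of k samples passes.

    n: samples generated for the problem
    c: samples that passed the tests
    k: sampling budget being evaluated
    """
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        return 1.0  # every size-k subset contains at least one passing sample
    return 1.0 - comb(n - c, k) / comb(n, k)


# Hypothetical problem: 10 samples generated, 3 passed.
print(pass_at_k(n=10, c=3, k=1))
print(pass_at_k(n=10, c=3, k=5))
```

The benchmark-level score is the mean of this value over all problems.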


5. MATH

MATH is a benchmark specifically designed to test mathematical reasoning and problem-solving skills in LLMs. It consists of competition-style problems across topics including algebra, number theory, geometry, counting and probability, and precalculus. Each problem requires multi-step reasoning to reach the correct solution.

What is its Purpose?

The purpose of MATH is to assess a model’s capacity for advanced mathematical thinking and logical reasoning. It is particularly aimed at understanding if models can solve problems that require more than straightforward memorization or basic arithmetic. MATH provides insight into a model’s ability to handle complex, multi-step operations, which are vital in STEM fields.


What Skills Does It Assess?

MATH evaluates numerical reasoning, logical deduction, and problem-solving skills. Unlike simple calculation tasks, MATH challenges models to break down problems into smaller steps, apply the correct formulas, and logically derive answers. This makes it a strong benchmark for testing models used in scientific, engineering, or educational settings.
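Scoring a multi-step math solution usually comes down to checking the final answer. The sketch below assumes the model is prompted to wrap its final answer in `\boxed{...}`, the convention used in MATH reference solutions; the whitespace-only comparison is a deliberate simplification, since real graders also normalize equivalent forms.

```python
def extract_boxed(solution: str):
    """Return the contents of the last \\boxed{...} in a solution, or None."""
    marker = "\\boxed{"
    start = solution.rfind(marker)
    if start == -1:
        return None
    i = start + len(marker)
    depth = 1  # we are inside the opening brace of \boxed{
    chars = []
    while i < len(solution):
        ch = solution[i]
        if ch == "{":
            depth += 1
        elif ch == "}":
            depth -= 1
            if depth == 0:
                return "".join(chars)
        chars.append(ch)
        i += 1
    return None  # unbalanced braces


def is_correct(model_solution: str, reference_answer: str) -> bool:
    """Compare the boxed answer with the reference, ignoring spaces."""
    answer = extract_boxed(model_solution)
    if answer is None:
        return False
    return answer.replace(" ", "") == reference_answer.replace(" ", "")


# Hypothetical model output.
solution = r"Adding the fractions gives \boxed{\frac{3}{4}}."
print(extract_boxed(solution))
print(is_correct(solution, r"\frac{3}{4}"))
```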

6. TruthfulQA

TruthfulQA is a benchmark designed to evaluate how truthful a model’s responses are to questions. It consists of questions that are often intentionally tricky, covering topics where models might be prone to generating confident but inaccurate information (also known as hallucination).

What is its Purpose?

The purpose of TruthfulQA is to test whether models can avoid spreading misinformation or confidently delivering incorrect responses. It aims to highlight models’ tendencies to “hallucinate” and emphasizes the importance of factual accuracy, especially in areas where misinformation can be harmful, like health, law, and finance.

What Skills Does It Assess?

TruthfulQA assesses factual accuracy, resistance to hallucination, and understanding of truthfulness. The benchmark gauges whether a model can distinguish between factual information and plausible-sounding but incorrect content, a critical skill for models used in domains where reliable information is essential.


7. BIG-bench (Beyond the Imitation Game Benchmark)

BIG-bench is an extensive and diverse benchmark designed to test a wide range of language model abilities, from basic language comprehension to complex reasoning and creativity. It includes more than 200 tasks, some of which are unconventional or open-ended, making it one of the most challenging and comprehensive benchmarks available.

What is its Purpose?

The purpose of BIG-bench is to push the boundaries of LLMs by including tasks that go beyond conventional benchmarks. It is designed to test models on generalization, creativity, and adaptability, encouraging the development of models capable of handling novel situations and complex instructions.


What Skills Does It Assess?

BIG-bench assesses a broad spectrum of skills, including commonsense reasoning, problem-solving, linguistic creativity, and adaptability. By covering both standard and unique tasks, it gauges whether a model can perform well across many domains, especially in areas where lateral thinking and flexibility are required.

8. GLUE and SuperGLUE

GLUE (General Language Understanding Evaluation) and SuperGLUE are benchmarks created to evaluate basic language understanding skills in LLMs. GLUE includes a series of tasks such as sentence similarity, sentiment analysis, and textual entailment. SuperGLUE is an expanded, more challenging version of GLUE, designed for models that perform well on the original GLUE tasks.

What is its Purpose?

The purpose of GLUE and SuperGLUE is to provide a standardized measure of general language understanding across foundational NLP tasks. These benchmarks aim to ensure that models can handle common language tasks that are essential for general-purpose applications, establishing a baseline for linguistic competence.

What Skills Does It Assess?

GLUE and SuperGLUE assess language comprehension, sentiment recognition, and inference skills. They measure whether models can interpret sentence relationships, analyze tone, and understand linguistic nuances. These benchmarks are fundamental for evaluating models intended for conversational AI, text analysis, and other general NLP tasks.

Metrics Used in LLM Evaluation

After defining what LLM evaluation is and exploring key benchmarks, it’s time to dive into metrics—the tools that score and quantify model performance.

In LLM evaluation, metrics are essential because they provide a way to measure specific qualities like accuracy, language quality, and robustness. Without metrics, we’d only have subjective opinions on model performance, making it difficult to objectively compare models or track improvements.

Metrics give us the data to back up our conclusions, acting as the standards by which we gauge how well a model meets its intended purpose.

These metrics can be organized into three primary categories based on the type of performance they assess:

- Language Quality and Coherence

- Semantic Understanding and Contextual Relevance

- Robustness, Safety, and Ethical Alignment


1. Language Quality and Coherence Metrics

Purpose

Language quality and coherence metrics evaluate the fluency, clarity, and readability of generated text. In tasks like translation, summarization, and open-ended text generation, these metrics assess whether a model’s output is well-structured, natural, and easy to understand, helping us determine if a model’s language production feels genuinely human-like.

Key Metrics

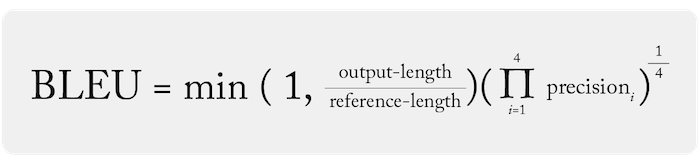

- BLEU (Bilingual Evaluation Understudy): BLEU measures the n-gram overlap between generated text and one or more reference texts, focusing on how well the model’s phrasing matches the expected answer. It’s widely used in machine translation and rewards precision in word choice, offering insights into how well a model generates accurate language.

Source: Arize AI

- ROUGE (Recall-Oriented Understudy for Gisting Evaluation): ROUGE measures how much of the content of a reference text (typically a human-written summary) is preserved in the generated summary. Commonly used in summarization, ROUGE emphasizes recall over precision, meaning it’s focused on ensuring the model includes the essential ideas of the reference, rather than mirroring it word-for-word.

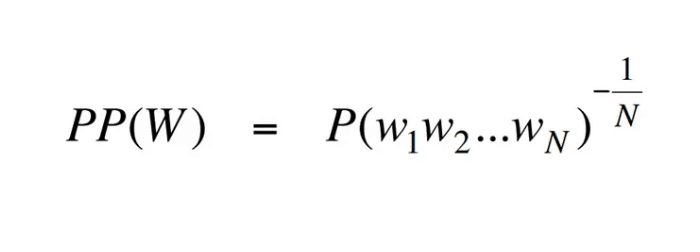

- Perplexity: Perplexity measures how well the model predicts a sequence of words; formally, it is the exponential of the average negative log-likelihood per token. A lower perplexity score means the model finds the text less surprising, which tends to go along with more fluent, natural-sounding language. It’s particularly helpful in assessing language models intended for storytelling, dialogue, and other open-ended tasks where coherence is key.
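The three metrics above can be sketched in a few lines. This is a deliberately simplified illustration: real BLEU combines several n-gram orders with a brevity penalty, real ROUGE has multiple variants, and production code would use an established library. The whitespace tokenization and the sample sentences are assumptions for the example.

```python
import math
from collections import Counter


def clipped_unigram_precision(candidate: str, reference: str) -> float:
    """BLEU-style: share of candidate words that also appear in the reference."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    overlap = sum(min(count, ref[word]) for word, count in cand.items())
    total = sum(cand.values())
    return overlap / total if total else 0.0


def unigram_recall(candidate: str, reference: str) -> float:
    """ROUGE-1-style: share of reference words recovered by the candidate."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    overlap = sum(min(count, cand[word]) for word, count in ref.items())
    total = sum(ref.values())
    return overlap / total if total else 0.0


def perplexity(token_logprobs: list[float]) -> float:
    """Exponential of the average negative natural-log probability per token."""
    return math.exp(-sum(token_logprobs) / len(token_logprobs))


reference = "the cat sat on the mat"
candidate = "the cat sat"
print(clipped_unigram_precision(candidate, reference))  # every candidate word is in the reference
print(unigram_recall(candidate, reference))             # but only half the reference is covered

# Hypothetical log-probabilities: each token was given probability 0.25.
print(perplexity([math.log(0.25)] * 4))
```

The short candidate scores perfectly on precision yet poorly on recall, which is why translation and summarization tend to favor different metrics.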

2. Semantic Understanding and Contextual Relevance Metrics

Purpose

Semantic understanding and contextual relevance metrics assess how well a model captures the intended meaning and stays contextually relevant. These metrics are particularly valuable in tasks where the specific words used are less important than conveying the correct overall message, such as paraphrasing and sentence similarity.

Key Metrics

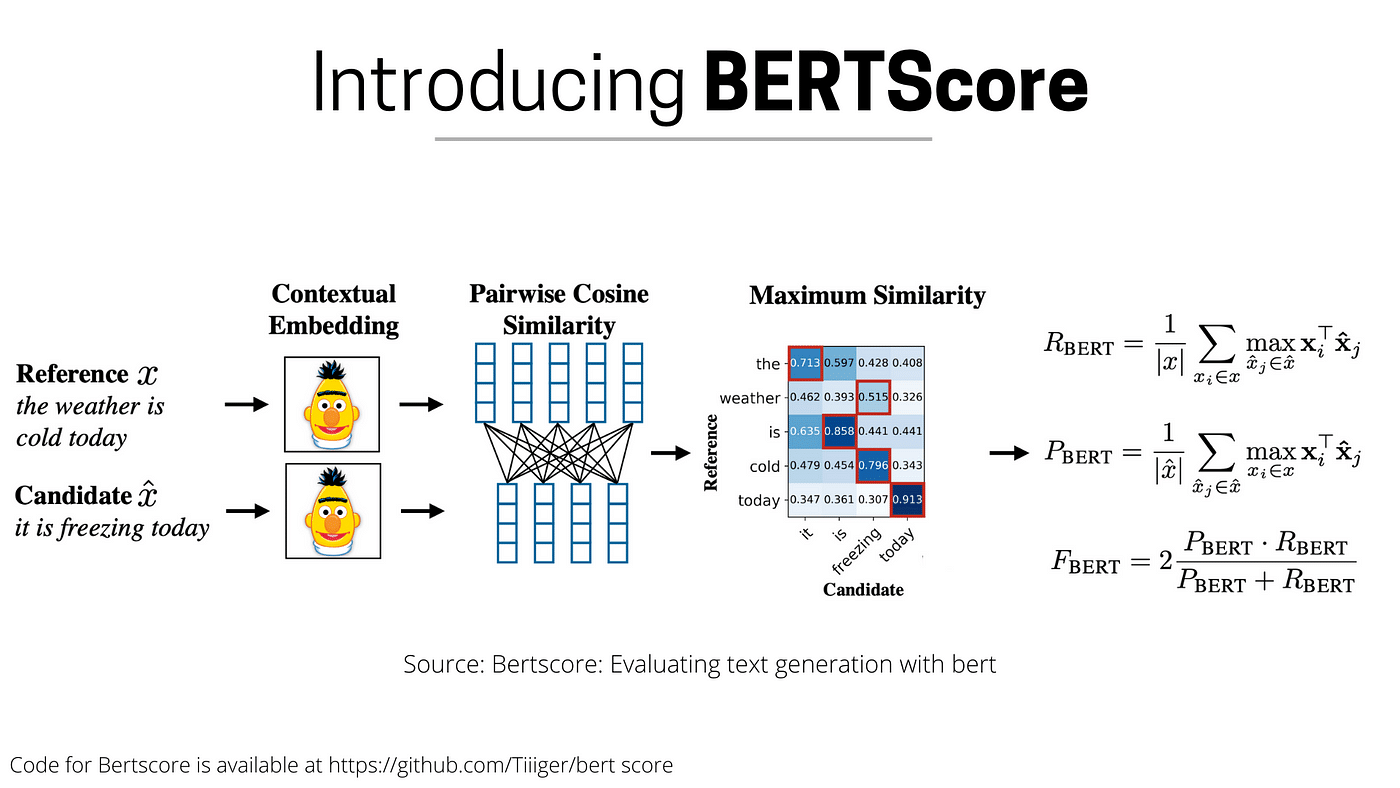

- BERTScore: BERTScore uses embeddings from pre-trained language models (like BERT) to measure the semantic similarity between the generated text and reference text. By focusing on meaning rather than exact wording, BERTScore is ideal for tasks where preserving meaning is more important than matching words exactly.

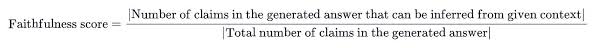

Source: Towards Data Science

- Faithfulness: Faithfulness measures the factual consistency of the generated answer relative to the given context. It evaluates whether the model’s response remains accurate to the provided information, making it essential for applications that prioritize factual accuracy, like summarization and factual reporting.

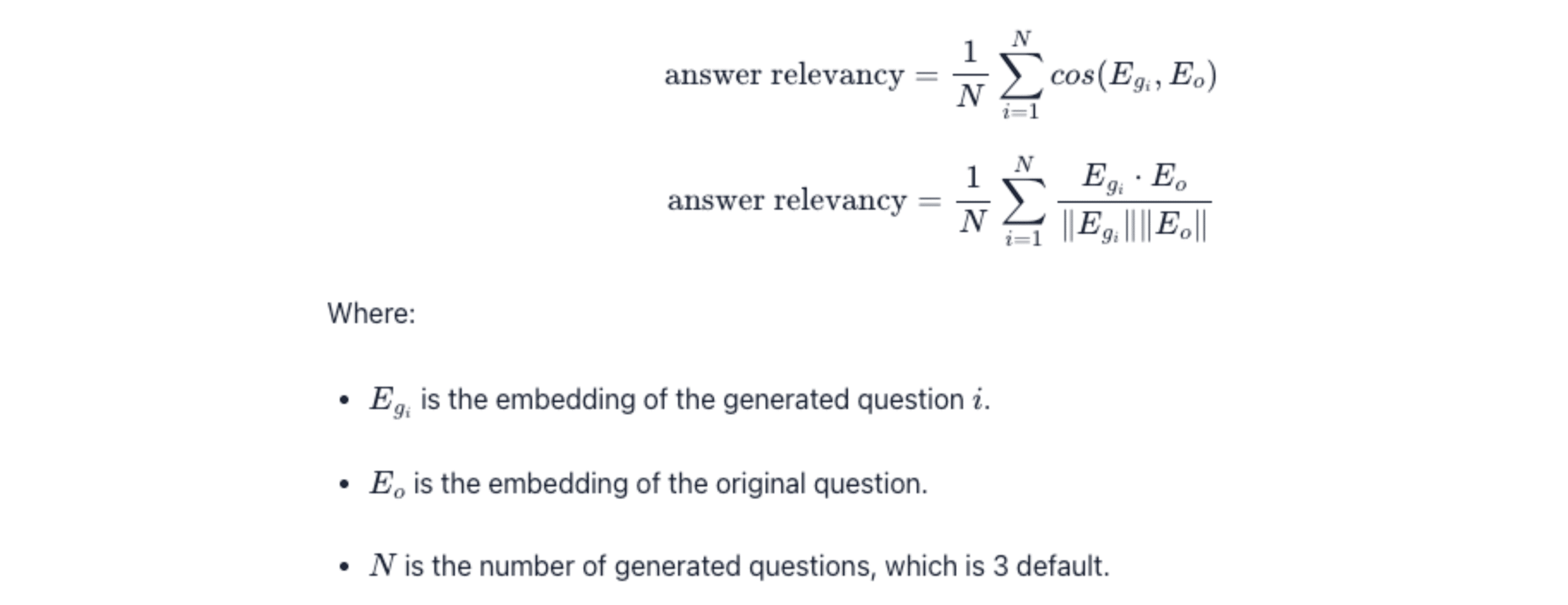

Source: Towards Data Science

- Answer Relevance: Answer Relevance assesses how well an answer aligns with the original question. In frameworks such as Ragas, this metric is calculated by generating several questions back from the answer and averaging the cosine similarities between their embeddings and the embedding of the original question. Answer Relevance is crucial in question-answering tasks where the response should directly address the user’s query.
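Both BERTScore and Answer Relevance rest on cosine similarity between embeddings. The sketch below uses tiny hand-made vectors in place of real model embeddings, which is an assumption for illustration only; the greedy matching shown is the core idea behind BERTScore without its optional weighting and rescaling steps.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def greedy_match_scores(candidate_vecs, reference_vecs):
    """BERTScore-style precision, recall, and F1 via greedy token matching."""
    precision = sum(
        max(cosine(c, r) for r in reference_vecs) for c in candidate_vecs
    ) / len(candidate_vecs)
    recall = sum(
        max(cosine(r, c) for c in candidate_vecs) for r in reference_vecs
    ) / len(reference_vecs)
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


def answer_relevance(question_vec, comparison_question_vecs):
    """Mean cosine similarity between a question and a set of comparison questions."""
    sims = [cosine(question_vec, q) for q in comparison_question_vecs]
    return sum(sims) / len(sims)


# Toy 2-D "embeddings"; real ones come from an encoder model.
candidate_tokens = [[1.0, 0.0], [0.0, 1.0]]
reference_tokens = [[1.0, 0.0], [1.0, 1.0]]
print(greedy_match_scores(candidate_tokens, reference_tokens))
print(answer_relevance([1.0, 0.0], [[1.0, 0.0], [0.0, 1.0]]))
```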

3. Robustness, Safety, and Ethical Alignment Metrics

Purpose

Robustness, safety, and ethical alignment metrics measure a model’s resilience to challenging inputs and ensure it produces responsible, unbiased outputs. These metrics are critical for models deployed in real-world applications, as they help ensure that the model won’t generate harmful, offensive, or biased content and that it will respond appropriately to various user inputs.

Key Metrics

- Demographic Parity: Ensures that positive outcomes are distributed equally across demographic groups. This means the probability of a positive outcome should be the same across all groups. It’s essential for fair treatment in applications where equal access to benefits is desired.

- Equal Opportunity: Ensures fairness in true positive rates by making sure that qualified individuals across all demographic groups have equal chances for positive outcomes. This metric is particularly valuable in scenarios like hiring, where equally qualified candidates from different backgrounds should have the same likelihood of being selected.

- Counterfactual Fairness: Measures whether the outcome remains the same for an individual if only their demographic attribute changes (e.g., gender or race). This ensures the model’s decisions aren’t influenced by demographic features irrelevant to the outcome.
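These three fairness checks can be expressed as simple gap measurements, where 0 means perfectly equal treatment. The sketch below assumes binary predictions (1 for a positive outcome) and binary ground-truth labels (1 for qualified); the group names and data are hypothetical.

```python
def positive_rate(predictions):
    """Share of predictions that are positive (1)."""
    return sum(predictions) / len(predictions) if predictions else 0.0


def demographic_parity_gap(preds_by_group):
    """Largest difference in positive-prediction rate between any two groups."""
    rates = [positive_rate(p) for p in preds_by_group.values()]
    return max(rates) - min(rates)


def equal_opportunity_gap(preds_by_group, labels_by_group):
    """Largest difference in true positive rate between any two groups."""
    tprs = []
    for group, preds in preds_by_group.items():
        labels = labels_by_group[group]
        # Keep only predictions made for truly qualified individuals.
        qualified = [p for p, y in zip(preds, labels) if y == 1]
        tprs.append(positive_rate(qualified))
    return max(tprs) - min(tprs)


def counterfactual_flip_rate(original_preds, counterfactual_preds):
    """Share of individuals whose prediction changes when only the attribute is swapped."""
    flips = sum(o != c for o, c in zip(original_preds, counterfactual_preds))
    return flips / len(original_preds)


preds = {"group_a": [1, 1, 0, 1], "group_b": [1, 0, 0, 1]}
labels = {"group_a": [1, 1, 0, 0], "group_b": [1, 1, 0, 0]}
print(demographic_parity_gap(preds))
print(equal_opportunity_gap(preds, labels))
print(counterfactual_flip_rate([1, 0, 1, 1], [1, 0, 0, 1]))
```

What counts as an acceptable gap depends on the application, so treat any threshold as a policy decision rather than a property of the metric.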

LLM Leaderboards: Tracking and Comparing Model Performance

LLM leaderboards are platforms that rank and compare large language models based on various evaluation benchmarks, helping researchers and developers identify the strongest models for specific tasks. These leaderboards provide a structured way to measure a model’s capabilities, from basic text generation to more complex tasks like code generation, multilingual understanding, or commonsense reasoning.


By showcasing the relative strengths and weaknesses of models, leaderboards serve as a roadmap for improvement and guide decision-making for developers and users alike. Below are five major LLM leaderboards you can use for evaluation.

HuggingFace Open LLM Leaderboard

The Hugging Face Open LLM Leaderboard is one of the most popular open leaderboards, and it performs LLM evaluation using the EleutherAI LM Evaluation Harness.

It ranks models across benchmarks like MMLU (multitask language understanding), TruthfulQA for factual accuracy, and HellaSwag for commonsense reasoning. The Open LLM Leaderboard provides up-to-date, detailed scores for diverse LLMs, making it a go-to resource for comparing open-source models.

LMSYS Chatbot Arena Leaderboard

The LMSYS Chatbot Arena uses an Elo ranking system to evaluate LLMs based on user preferences in pairwise comparisons. It incorporates MT-Bench and MMLU as benchmarks, allowing users to see how well models perform in real-time conversational settings.

This leaderboard is widely recognized for its interactivity and broad community involvement, though human bias can influence rankings due to subjective preferences.
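To see how pairwise votes turn into a ranking, here is a minimal sketch of a classic Elo update. The K-factor of 32 and the 400-point scale are conventional defaults borrowed from chess, used here as assumptions; they are not claimed to be the Arena’s actual settings.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that A beats B under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


def update(rating_a: float, rating_b: float, score_a: float, k: float = 32):
    """Return new ratings after one comparison.

    score_a is 1.0 if A is preferred, 0.0 if B is preferred, 0.5 for a tie.
    """
    exp_a = expected_score(rating_a, rating_b)
    new_a = rating_a + k * (score_a - exp_a)
    new_b = rating_b + k * ((1.0 - score_a) - (1.0 - exp_a))
    return new_a, new_b


# Two hypothetical models start level; a user prefers model A's answer.
print(update(1000, 1000, score_a=1.0))  # (1016.0, 984.0)

# An upset moves ratings more than an expected result does.
print(update(1200, 1000, score_a=0.0))
```

Because each update depends on who happened to vote and on which prompts, ratings only stabilize after many comparisons.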

Massive Text Embedding Benchmark (MTEB) Leaderboard

This leaderboard specifically evaluates text embedding models across 56 datasets and eight tasks, supporting over 100 languages. The MTEB leaderboard is essential for comparing models on tasks like classification, retrieval, and clustering, making it valuable for projects that rely on high-quality embeddings for downstream tasks.

Berkeley Function-Calling Leaderboard

Focused on evaluating LLMs’ ability to handle function calls accurately, the Berkeley Function-Calling Leaderboard is vital for models integrated into automation frameworks like LangChain. It assesses models based on their accuracy in executing specific function calls, which is critical for applications requiring precise task execution, like API integrations.

Artificial Analysis LLM Performance Leaderboard

This leaderboard takes a customer-focused approach by evaluating LLMs based on real-world deployment metrics, such as Time to First Token (TTFT) and tokens per second (throughput).

It also combines standardized benchmarks like MMLU and Chatbot Arena Elo scores, offering a unique blend of performance and quality metrics that help users find LLMs suited for high-traffic, serverless environments.
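Deployment metrics like TTFT and throughput are easy to compute yourself if you record when each streamed token arrives. The sketch below assumes timestamps in seconds from a single request; the function name and the choice to measure throughput only after the first token are my own, and other definitions (for example, dividing by total time) are also in use.

```python
def latency_metrics(request_sent: float, token_times: list[float]) -> dict:
    """Compute TTFT and output throughput from timestamps in seconds."""
    if not token_times:
        raise ValueError("no tokens were received")
    ttft = token_times[0] - request_sent
    total = token_times[-1] - request_sent
    generation_window = token_times[-1] - token_times[0]
    # Throughput after the first token; undefined for a single-token reply.
    if generation_window > 0:
        throughput = (len(token_times) - 1) / generation_window
    else:
        throughput = float("nan")
    return {"ttft_s": ttft, "total_s": total, "tokens_per_s": throughput}


# Hypothetical stream: first token after 0.4 s, then one token every 0.1 s.
metrics = latency_metrics(0.0, [0.4, 0.5, 0.6, 0.7, 0.8])
print(metrics)
```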

These leaderboards provide a detailed snapshot of the latest advancements and performance levels across models, making them invaluable tools for anyone working with or developing large language models.

Wrapping Up: The Art and Science of LLM Evaluation

Evaluating large language models (LLMs) is both essential and complex, balancing precision, quality, and cost. Through benchmarks, metrics, and leaderboards, we get a structured view of a model’s capabilities, from accuracy to ethical reliability.

However, as powerful as these tools are, evaluation remains an evolving field with room for improvement in quality, consistency, and speed. With ongoing advancements, these methods will continue to refine how we measure, trust, and improve LLMs, ensuring they’re well-equipped for real-world applications.