Ever asked an AI a simple question and got an answer that sounded perfect—but was completely made up? That’s what we call an AI hallucination. It’s when large language models (LLMs) confidently generate false or misleading information, presenting it as fact. Sometimes these hallucinations are harmless, even funny. Other times, they can spread misinformation or lead to serious mistakes.

So, why does this happen? And more importantly, how can we prevent it?

In this blog, we’ll explore the fascinating (and sometimes bizarre) world of AI hallucinations—what causes them, the risks they pose, and what researchers are doing to make AI more reliable.

AI Hallucination Phenomenon

This inclination to produce unsubstantiated “facts” is commonly referred to as hallucination, and it arises due to the development and training methods employed in contemporary LLMs, as well as generative AI models in general.

What Are AI Hallucinations? AI hallucinations occur when a large language model (LLM) generates inaccurate information. LLMs, which power chatbots like ChatGPT and Google Bard, have the capacity to produce responses that deviate from external facts or logical context.

These hallucinations may appear convincing due to LLMs’ ability to generate coherent text, relying on statistical patterns to ensure grammatical and semantic accuracy within the given prompt.

- However, hallucinations aren’t always plausible and can sometimes be nonsensical, making it challenging to pinpoint their exact causes on a case-by-case basis.

- An alternative term for AI hallucinations is “confabulation.” While most commonly associated with LLMs, these inaccuracies can also manifest in AI-generated video, images, and audio.

Examples of AI Hallucinations

One well-known instance of AI hallucination occurred when Google’s chatbot, Bard, provided false information about the James Webb Space Telescope. In response to the query, “What new discoveries from the James Webb Space Telescope can I tell my 9-year-old about?”

Case study: Bard

Bard falsely claimed that the telescope had captured the first images of an exoplanet beyond our solar system. However, this assertion was contradicted by NASA’s records, which showed that the first exoplanet images were obtained in 2004, while the James Webb Space Telescope launched in 2021.

This response by Bard, though seemingly plausible and contextually relevant, was debunked through fact-checking.

Another instance involved Meta’s demonstration of Galactica, an LLM designed for scientific researchers and students. When asked to draft a paper on creating avatars, the model cited a fabricated paper authored by a real expert in a related field.

Read more about -> 10 innovative ways to monetize business using ChatGPT

Types of AI Hallucinations

AI hallucinations can range from minor inconsistencies to wholly fabricated or contradictory information, including:

- Sentence contradiction: This occurs when an LLM generates a sentence that contradicts a previous sentence within the same output.

Example: Prompt: “Write a description of a landscape in four-word sentences.” Output: “The grass was green. The mountains were blue. The river was purple. The grass was brown.”

- Prompt contradiction: In this case, a sentence generated by the LLM contradicts the initial prompt used to generate it.

Example: Prompt: “Write a birthday card for my niece.” Output: “Happy anniversary, mom and dad!”

- Factual contradiction: LLMs may present fictitious information as fact.

Example: Prompt: “Name three cities in the United States.” Output: “New York, Los Angeles, Toronto.”

- Irrelevant or random hallucinations: These occur when the generated information lacks relevance to the input or output context.

Example: Prompt: “Describe London to me.” Output: “London is a city in England. Cats need to be fed at least once a day.”

Learn how to become a prompt engineer in 10 steps

Causes of AI Hallucinations

Several technical reasons may underlie the occurrence of hallucinations in LLMs, although the exact mechanisms are often opaque. Some potential causes include:

- Data quality: Hallucinations can result from flawed information in the training data, which may contain noise, errors, biases, or inconsistencies.

- Generation method: Training and generation methods, even with consistent and reliable data, can contribute to hallucinations. Prior model generations’ biases or false decoding from the transformer may be factors. Models may also exhibit a bias toward specific or generic words, influencing the information they generate.

- Input context: Unclear, inconsistent, or contradictory input prompts can lead to hallucinations. Users can enhance results by refining their input prompts.

You might also like: US AI vs China AI

Challenges Posed by AI Hallucinations

AI hallucinations present several challenges, including:

- Eroding user trust: Hallucinations can significantly undermine user trust in AI systems. As users perceive AI as more reliable, instances of betrayal can be more impactful.

- Anthropomorphism risk: Describing erroneous AI outputs as hallucinations can anthropomorphize AI technology to some extent. It’s crucial to remember that AI lacks consciousness and its own perception of the world. Referring to such outputs as “mirages” rather than “hallucinations” might be more accurate.

- Misinformation and deception: Hallucinations have the potential to spread misinformation, fabricate citations, and be exploited in cyberattacks, posing a danger to information integrity.

- Black box nature: Many LLMs operate as black box AI, making it challenging to determine why a specific hallucination occurred. Fixing these issues often falls on users, requiring vigilance and monitoring to identify and address hallucinations.

- Ethical and Legal Implications: AI hallucinations can lead to the generation of harmful or biased content, raising ethical concerns and potential legal liabilities. Misleading outputs in sensitive fields like healthcare, law, or finance could result in serious consequences, making it crucial to ensure responsible AI deployment.

Training Models

Generative AI models have captivated the world with their ability to create text, images, music, and more. But it’s important to remember—they don’t possess true intelligence. Instead, they operate as advanced statistical systems that predict data based on patterns learned from massive training datasets, often sourced from the internet. To truly understand how these models work, let’s break down their nature and how they’re trained.

The Nature of Generative AI Models

Before diving into the training process, it’s crucial to understand what generative AI models are and how they function. Despite their impressive outputs, these models aren’t thinking or reasoning—they’re making highly sophisticated guesses based on data.

- Statistical Systems: At their core, generative AI models are complex statistical engines. They don’t “create” in the human sense but predict the next word, image element, or note based on learned patterns.

- Pattern Learning: Through exposure to vast datasets, these models identify recurring structures and contextual relationships, enabling them to produce coherent and relevant outputs.

- Example-Based Learning: Though trained on countless examples, these models don’t understand the data—they simply calculate the most probable next element. This is why outputs can sometimes be inaccurate or nonsensical.

How Language Models (LMs) Are Trained

Understanding the nature of generative AI sets the stage for exploring how these models are actually trained. The process behind language models, in particular, is both simple and powerful, focusing on prediction rather than comprehension.

- Masking and Prediction: Language models are trained using a technique where certain words in a sentence are masked, and the model predicts the missing words based on context. It’s similar to how your phone’s predictive text suggests the next word while typing.

- Efficacy vs. Coherence: This approach is highly effective at producing fluent text, but because the model is predicting based on probabilities, it doesn’t always result in coherent or factually accurate outputs. This is where AI hallucinations often arise.

Shortcomings of Large Language Models (LLMs)

- Grammatical but Incoherent Text: LLMs can produce grammatically correct but incoherent text, highlighting their limitations in generating meaningful content.

- Falsehoods and Contradictions: They can propagate falsehoods and combine conflicting information from various sources without discerning accuracy.

- Lack of Intent and Understanding: LLMs lack intent and don’t comprehend truth or falsehood; they form associations between words and concepts without assessing their accuracy.

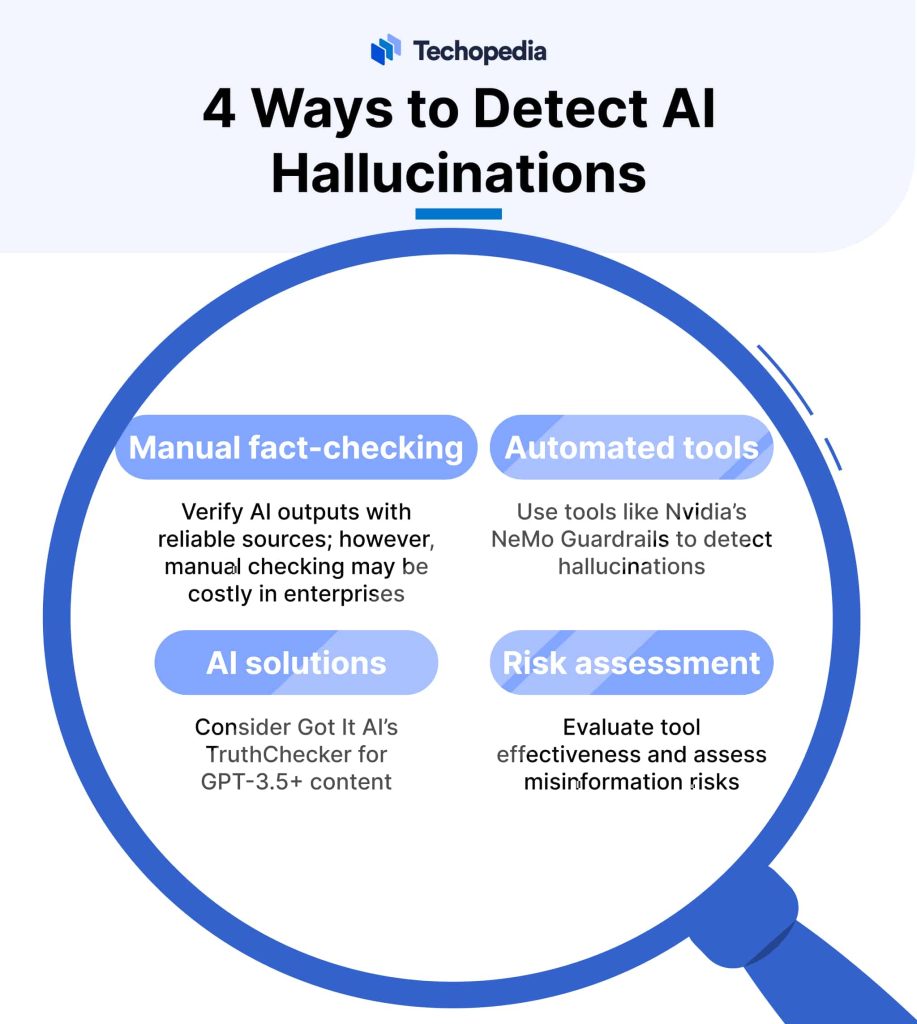

Addressing Hallucination in LLMs

- Challenges of Hallucination: Hallucination in LLMs arises from their inability to gauge the uncertainty of their predictions and their consistency in generating outputs.

- Mitigation Approaches: While complete elimination of hallucinations may be challenging, practical approaches can help reduce them.

Practical Approaches to Mitigate Hallucination

- Knowledge Integration: Integrating high-quality knowledge bases with LLMs can enhance accuracy in question-answering systems.

- Reinforcement Learning from Human Feedback (RLHF): This approach involves training LLMs, collecting human feedback, and fine-tuning models based on human judgments.

- Limitations of RLHF: Despite its promise, RLHF also has limitations and may not entirely eliminate hallucination in LLMs.

In summary, generative AI models like LLMs lack true understanding and can produce incoherent or inaccurate content. Mitigating hallucinations in these models requires careful training, knowledge integration, and feedback-driven fine-tuning, but complete elimination remains a challenge. Understanding the nature of these models is crucial in using them responsibly and effectively.

Exploring Different Perspectives: The Role of Hallucination in Creativity

Considering the potential unsolvability of hallucination, at least with current Large Language Models (LLMs), is it necessarily a drawback? According to Berns, not necessarily. He suggests that hallucinating models could serve as catalysts for creativity by acting as “co-creative partners.” While their outputs may not always align entirely with facts, they could contain valuable threads worth exploring. Employing hallucination creatively can yield outcomes or combinations of ideas that might not readily occur to most individuals.

You might also like: Human-Computer Interaction with LLMs

“Hallucinations” as an Issue in Context

However, Berns acknowledges that “hallucinations” become problematic when the generated statements are factually incorrect or violate established human, social, or cultural values. This is especially true in situations where individuals rely on the LLMs as experts.

He states, “In scenarios where a person relies on the LLM to be an expert, generated statements must align with facts and values. However, in creative or artistic tasks, the ability to generate unexpected outputs can be valuable. A human recipient might be surprised by a response to a query and, as a result, be pushed into a certain direction of thought that could lead to novel connections of ideas.”

Are LLMs Held to Unreasonable Standards?

On another note, Ha argues that today’s expectations of LLMs may be unreasonably high. He draws a parallel to human behavior, suggesting that humans also “hallucinate” at times when we misremember or misrepresent the truth. However, he posits that cognitive dissonance arises when LLMs produce outputs that appear accurate on the surface but may contain errors upon closer examination.

A Skeptical Approach to LLM Predictions

Ultimately, the solution may not necessarily reside in altering the technical workings of generative AI models. Instead, the most prudent approach for now seems to be treating the predictions of these models with a healthy dose of skepticism.

In a Nutshell

AI hallucinations in Large Language Models pose a complex challenge, but they also offer opportunities for creativity. While current mitigation strategies may not entirely eliminate hallucinations, they can reduce their impact. However, it’s essential to strike a balance between leveraging AI’s creative potential and ensuring factual accuracy, all while approaching LLM predictions with skepticism in our pursuit of responsible and effective AI utilization.