Welcome to Data Science Dojo’s weekly newsletter, “The Data-Driven Dispatch”. In this dispatch, we’ll decode the buzz around Retrieval-Augmented Generation (RAG) for LLMs.

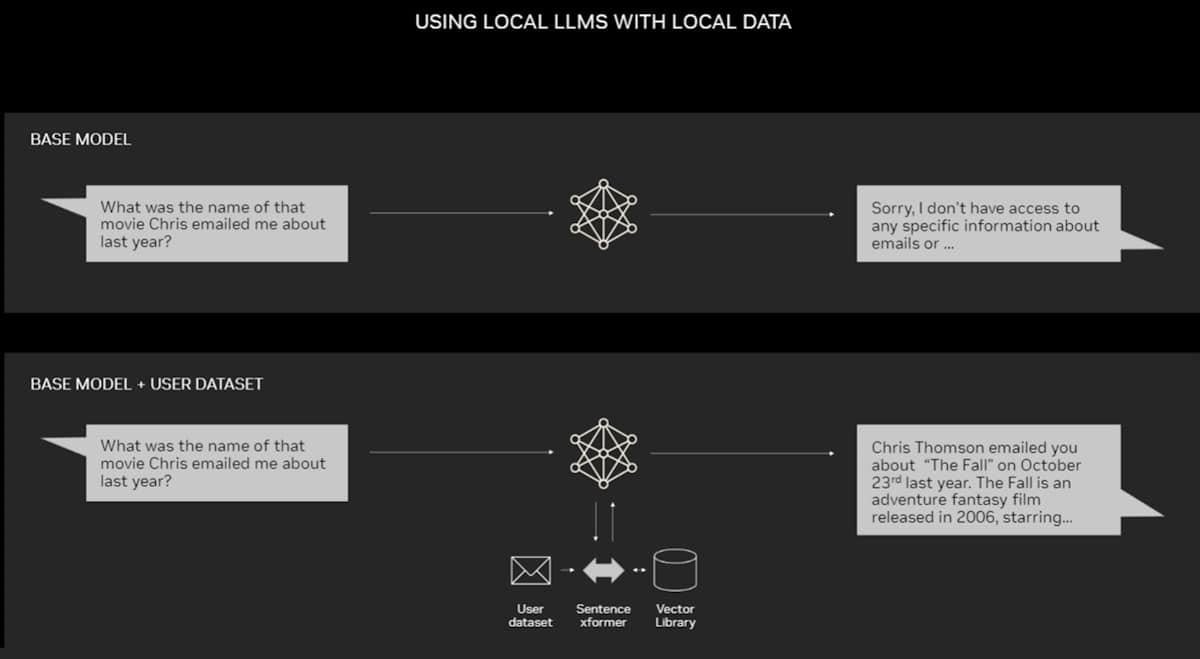

Are LLMs useful for enterprises? One can fairly ask: what is the use of a large language model that is trained on trillions of tokens but knows little to nothing about my business?

Out of the box, an LLM is unaware of information that is crucial to an enterprise. It doesn’t know what brand image to uphold in its conversations, and it will not understand the company’s financial complexities. In practice, it would be like an archer shooting arrows blindfolded.

However, the good news is that we can enhance an LLM’s knowledge by including the enterprise’s proprietary data. There are several methods for doing this, including Retrieval-Augmented Generation (RAG), fine-tuning, and in-context learning.

Among these methods, RAG is one of the most popular because it supplies up-to-date company knowledge at query time, without retraining the model.

Get ready to unpack RAG! This dispatch will explore everything you need to know: what it is, why it matters, and how to use it.

What is Retrieval-Augmented Generation?

Consider a fresh graduate (LLM) starting their first job. Their manager (RAG) recognizes they lack industry and company knowledge, making them prone to errors. To bridge this gap, the manager provides crucial information to ensure the new hire delivers the correct output.

Quite similarly, RAG is a framework that connects external sources of information with the LLM so that its responses are context-aware and accurate.

In the case of an enterprise, a RAG setup connects an LLM with the company’s important data, allowing the model to provide useful and relevant assistance.

Understanding How LLM RAG Works, Step-by-Step!

Dive deeper into how Retrieval-Augmented Generation (RAG) works

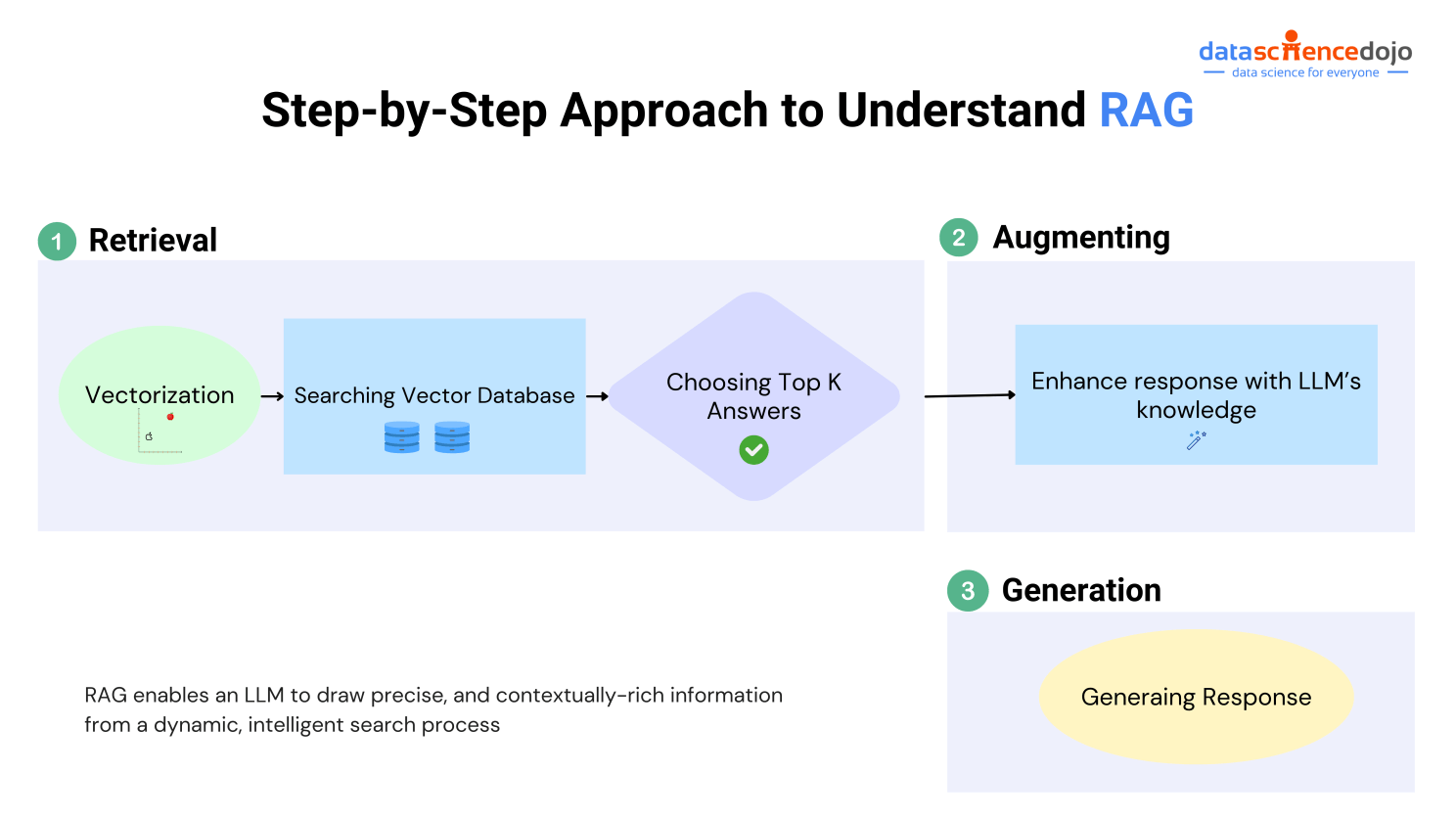

Step 1 – Retrieval:

When you ask your LLM a question, your query is first converted into a numerical representation that the computer can compare, typically an embedding vector. Then, based on the context and meaning of your question, the system finds relevant information in the additional database that you have provided.

For a firm, it will search the data you have provided about the company. Then it will select the top k matches, let’s say 5. These will be the 5 pieces of information that most closely match the essence of your query.
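To make the retrieval step concrete, here is a minimal sketch in Python. It is an illustration under loud assumptions, not how any particular product does it: `embed` is a toy bag-of-words stand-in for a real embedding model, and `company_docs`, `retrieve`, and `cosine` are hypothetical names invented for this example.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, k=5):
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


# Hypothetical company knowledge base (made-up sentences).
company_docs = [
    "A refund is issued within 14 days of purchase.",
    "Our brand voice is friendly, concise, and jargon-free.",
    "Fiscal year revenue is reported every January.",
    "Support is available on weekdays from 9am to 5pm.",
]

top = retrieve("How many days until a refund is issued?", company_docs, k=2)
print(top[0])
```

In a real deployment, the word counts would likely be replaced by vectors from an embedding model, and the sorted list by a vector database lookup. The top-k idea stays the same.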

Step 2 – Augmenting:

No, the LLM doesn’t just present the passages that it took from the database you provided. Instead, the retrieved passages are added to your query as context, so the model can combine them with its own knowledge and enrich the response with relevant insights.
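One common way to augment a query is to place the retrieved passages in the prompt ahead of the question. The sketch below assumes that approach. The function name `build_prompt`, the prompt wording, and the sample passages are all hypothetical.

```python
def build_prompt(query, passages):
    """Combine retrieved passages and the user query into one prompt string."""
    # Number each passage so the answer can refer back to its source.
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer the question using the context below. "
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\n"
        "Answer:"
    )


# Made-up passages standing in for the top matches from the retrieval step.
passages = [
    "A refund is issued within 14 days of purchase.",
    "Support is available on weekdays from 9am to 5pm.",
]
prompt = build_prompt("How long does a refund take?", passages)
print(prompt)
```

The instruction to admit when the context is insufficient is a design choice for this sketch, meant to discourage the model from guessing when retrieval comes back empty-handed.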

Step 3 – Generation:

Finally, the LLM uses the query and all the retrieved information to compose a rich and relevant answer.

Did you see how this method not only tapped into the extensive knowledge of an LLM but also grounded it in recent facts that are relevant to your company?
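Putting the three steps together, the whole loop can be sketched in a few lines of Python. Everything here is an assumption for illustration: retrieval uses simple word overlap instead of embeddings, and `fake_llm` is a placeholder where a real system would call a language model.

```python
import re


def tokenize(text):
    """Lowercase a text and split it into a set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, documents, k=2):
    """Step 1: rank documents by word overlap with the query (toy scoring)."""
    q = tokenize(query)
    return sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)[:k]


def build_prompt(query, passages):
    """Step 2: add the retrieved passages to the query as context."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


def answer(query, documents, generate, k=2):
    """Step 3: hand the augmented prompt to a text generator."""
    return generate(build_prompt(query, retrieve(query, documents, k)))


seen_prompts = []


def fake_llm(prompt):
    """Placeholder generator: records the prompt instead of calling a model."""
    seen_prompts.append(prompt)
    return "(placeholder answer)"


# Hypothetical company documents (made-up sentences).
docs = [
    "A refund is issued within 14 days of purchase.",
    "Support is available on weekdays from 9am to 5pm.",
    "Fiscal year revenue is reported every January.",
]
reply = answer("When is support available?", docs, fake_llm, k=1)
print(seen_prompts[0])
```

Passing the generator in as a function keeps the sketch independent of any specific model provider. Swapping `fake_llm` for a real model call would not change the retrieval or augmentation code.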

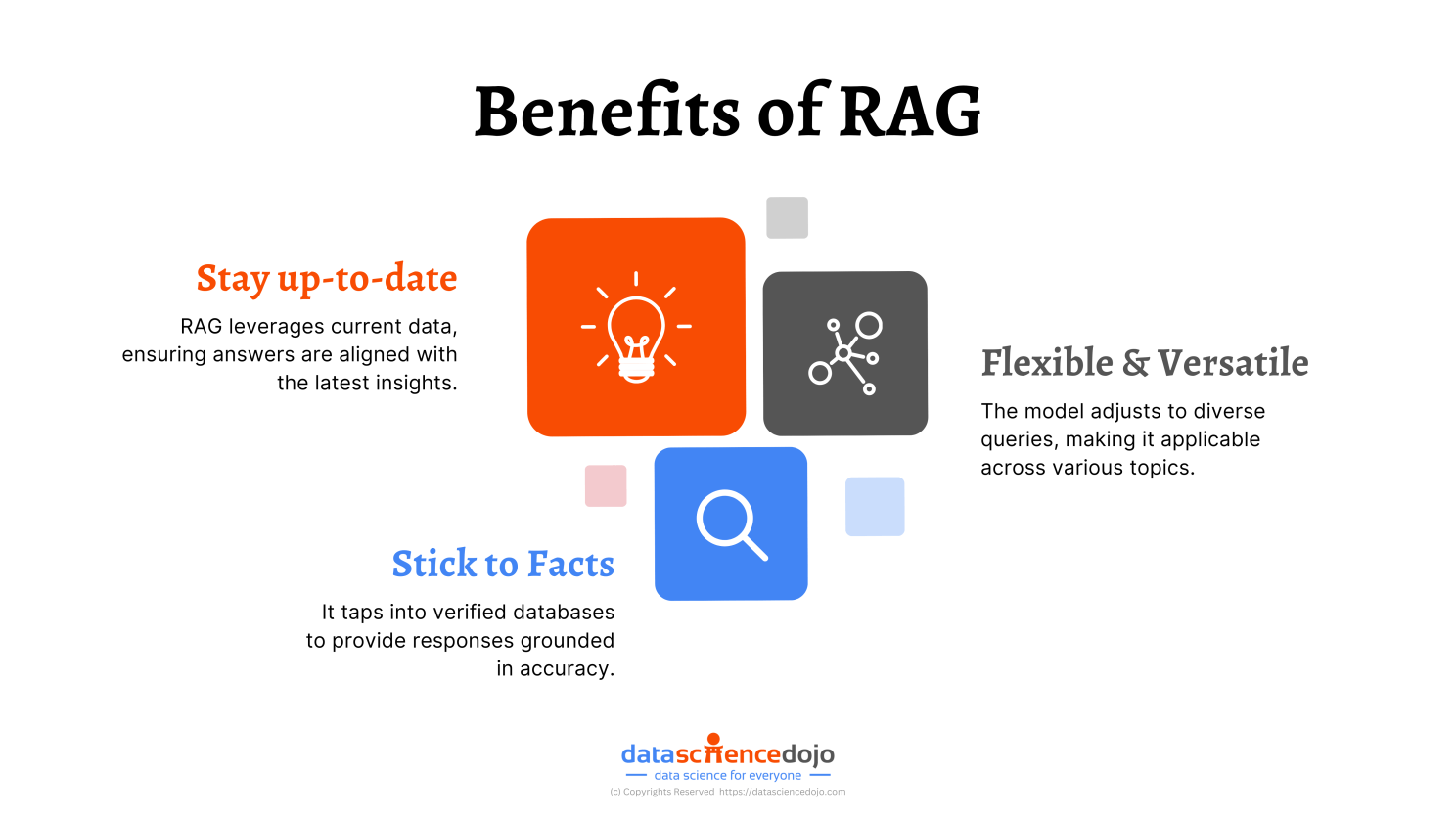

How Does RAG Make LLMs Better for Enterprises?

Read more: Demystifying RAG, Its Benefits, Use Cases and Future Implications

Use Cases for LLM RAG

The RAG framework elevates LLMs and, consequently, broadens the horizons of how we can leverage large language models in enterprises. For example:

- Real-Time Fact-Checking

- Dynamic Content Creation

- Enhanced Educational Tools

- Improved Medical and Legal Assistance

Read more: RAG Vs finetuning: A comprehensive guide to understanding the applications of the two approaches

Want to learn more about AI? Our blog is the go-to source for the latest tech news.

Enhancing the Power of Retrieval-Augmented Generation

While RAG is a powerful framework, it still has numerous nuances. One is: what if the retrieval of information from the data is not done right? That defeats the whole purpose of RAG, doesn’t it?

Here’s an important session that dives deeper into advanced considerations for deploying a RAG system that makes a difference.
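One simple way to check whether retrieval is doing its job is to measure how often a known relevant document appears in the top k results. The sketch below computes that hit rate. The document IDs, the labeled queries, and the function name `hit_rate_at_k` are all made up for illustration.

```python
def hit_rate_at_k(results, relevant, k):
    """Fraction of queries whose top-k results include a relevant document."""
    hits = sum(
        1 for query, ranked in results.items() if set(ranked[:k]) & relevant[query]
    )
    return hits / len(results)


# Hypothetical ranked results per query, as returned by some retriever.
results = {
    "refund policy": ["doc_refunds", "doc_hours", "doc_brand"],
    "office hours": ["doc_brand", "doc_refunds", "doc_hours"],
}
# Hypothetical hand-labeled ground truth: which documents answer each query.
relevant = {
    "refund policy": {"doc_refunds"},
    "office hours": {"doc_hours"},
}

hit_at_1 = hit_rate_at_k(results, relevant, k=1)
hit_at_3 = hit_rate_at_k(results, relevant, k=3)
print(hit_at_1, hit_at_3)
```

A low hit rate at small k suggests that the LLM is often answering without the right passage in front of it, however good the generation step may be.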

Wait until it starts to get real for you!

LLMs for Everyone: Online Course

Want to benefit from the power of LLMs but find them too technical? We believe LLMs are for everyone. Here’s a comprehensive online course that dives into all the important concepts and areas that will get you started with LLMs.

Learn more about the curriculum of the course.

Finally, let’s end this week’s dispatch with interesting news that made it to the headlines.

- Musk’s Grok AI goes open-source: Becomes the largest open-source LLM available.

- Nvidia CEO Jensen Huang announces new AI chips: ‘We need bigger GPUs’.

- Apple buys Canadian AI startup as it races to add features.

- Google DeepMind unveils AI football tactics coach honed with Liverpool.

- Apple is in talks to let Google’s Gemini power iPhone AI features, Bloomberg News says.